We first record a video of a box marked with a regular pattern of equally spaced points.

We then select at least 20 of these points on the first frame with the plt.ginput function, which gives the 2D image coordinates of each point.

We also need the 3D world coordinates of these points. We recover them by setting the bottom corner of the box as the origin (0,0,0) and measuring every other point relative to it.

First Frame points

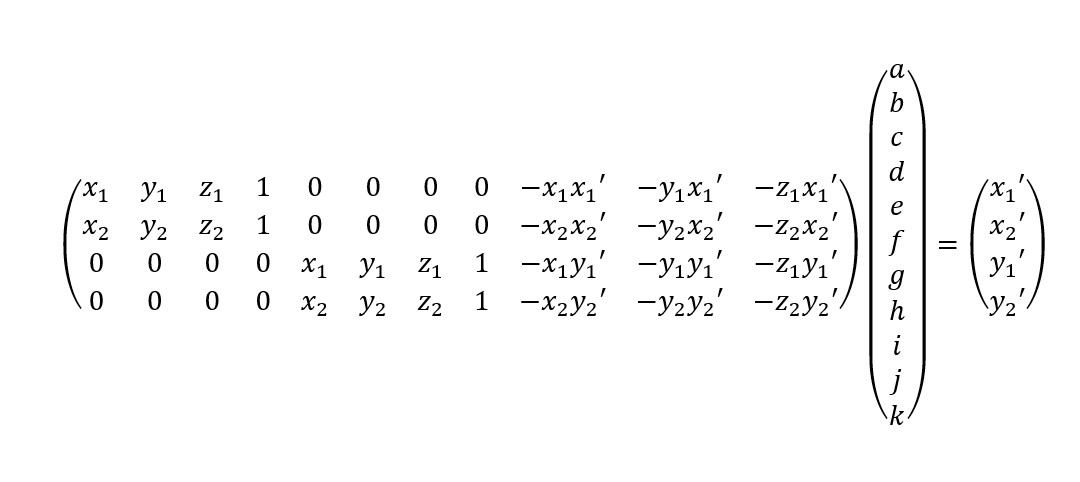

Having found the 2D image coordinates of the key points in the first frame, we want to find the 2D coordinates in the other frames automatically. We use an off-the-shelf tracker from the OpenCV library, cv2.TrackerMIL_create(). For each point, a tracker is initialized with an 8x8 pixel bounding box around the coordinate and then follows that box through the subsequent frames. With this tracker, we get the 2D image coordinates of the points for all the frames of the video.
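The tracking step above can be sketched roughly as follows. This is a minimal sketch, not the original implementation: the helper names (`point_to_bbox`, `bbox_center`, `track_points`), the choice of one tracker per point, centering the box on the point, and the fallback to the previous position when tracking fails are all assumptions made for illustration.

```python
import numpy as np

BOX = 8  # side length in pixels of the box around each point (from the text)


def point_to_bbox(x, y, size=BOX):
    """Return an integer (x, y, w, h) box of the given size centered on (x, y)."""
    return (int(round(x - size / 2)), int(round(y - size / 2)), size, size)


def bbox_center(bbox):
    """Return the center (x, y) of an (x, y, w, h) box."""
    x, y, w, h = bbox
    return (x + w / 2.0, y + h / 2.0)


def track_points(video_path, first_frame_pts):
    """Track each selected point through the video with one MIL tracker per point.

    Returns an array of shape (num_frames, num_points, 2).
    """
    import cv2  # imported here so the helpers above work without OpenCV

    cap = cv2.VideoCapture(video_path)
    ok, frame = cap.read()
    if not ok:
        raise IOError(f"could not read {video_path}")

    # One tracker per point, initialized on the first frame.
    trackers = []
    for (x, y) in first_frame_pts:
        tracker = cv2.TrackerMIL_create()
        tracker.init(frame, point_to_bbox(x, y))
        trackers.append(tracker)

    all_pts = [np.asarray(first_frame_pts, dtype=float)]
    while True:
        ok, frame = cap.read()
        if not ok:
            break
        pts = []
        for i, tracker in enumerate(trackers):
            found, bbox = tracker.update(frame)
            # Assumption: if tracking fails, reuse the previous position.
            pts.append(bbox_center(bbox) if found else tuple(all_pts[-1][i]))
        all_pts.append(np.array(pts, dtype=float))
    cap.release()
    return np.stack(all_pts)
```

Here `first_frame_pts` would be the list of (x, y) pairs returned by plt.ginput on the first frame.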

frame_20_pts.jpg

While we need to track the 2D image coordinates of each point in every frame, the 3D world coordinates of the points stay the same across all frames.

With both the 2D and 3D coordinates of each point, we can now calculate the projection matrix using least squares.

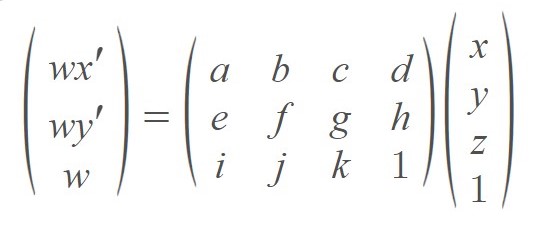

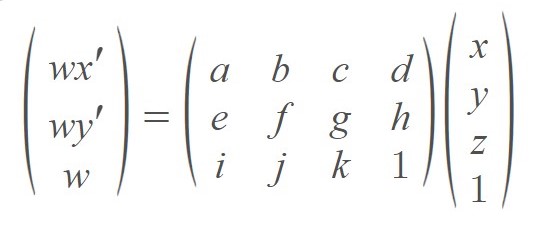

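This is similar to solving for the homography matrix, though this time the projection matrix is 3x4 and has 11 unknowns: it is only defined up to scale, so one of its 12 entries can be fixed (for example, the bottom-right entry set to 1).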

Solving algebraically, each point correspondence gives two linear equations in the 11 unknowns. We can then use least squares to solve for these unknowns and construct the projection matrix for each frame, as we have the 2D and 3D coordinates for each frame.
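A minimal sketch of this least-squares step is shown below, assuming the bottom-right entry of the projection matrix is fixed to 1 so that 11 unknowns remain. The function name `fit_projection_matrix` is hypothetical, and the row layout follows from writing u = (p11 X + p12 Y + p13 Z + p14) / (p31 X + p32 Y + p33 Z + 1) and the analogous expression for v, then multiplying through by the denominator.

```python
import numpy as np


def fit_projection_matrix(pts3d, pts2d):
    """Estimate a 3x4 projection matrix (bottom-right entry fixed to 1).

    pts3d: (N, 3) world coordinates, pts2d: (N, 2) image coordinates, N >= 6.
    """
    pts3d = np.asarray(pts3d, dtype=float)
    pts2d = np.asarray(pts2d, dtype=float)
    n = len(pts3d)
    A = np.zeros((2 * n, 11))
    b = np.zeros(2 * n)
    for i, ((X, Y, Z), (u, v)) in enumerate(zip(pts3d, pts2d)):
        # Two linear equations per correspondence, one for u and one for v.
        A[2 * i] = [X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z]
        A[2 * i + 1] = [0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z]
        b[2 * i] = u
        b[2 * i + 1] = v
    p, *_ = np.linalg.lstsq(A, b, rcond=None)
    # Append the fixed entry and reshape the 12 values into a 3x4 matrix.
    return np.append(p, 1.0).reshape(3, 4)
```

Calling this once per frame, with that frame's tracked 2D points and the fixed 3D points, would give one projection matrix per frame.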

Now that we have the projection matrix for each frame, we can project a cube onto the video. To get the 2D image coordinates of the cube's vertices, we first define their 3D world coordinates, multiply these (in homogeneous form) by the projection matrix, and divide by the last component of the result. We then use OpenCV's cv2.line to draw the edges of the cube on each frame.
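The projection and drawing steps might look like the sketch below. The unit cube at the origin, the vertex ordering, and the names `project_points` and `draw_cube` are illustrative assumptions; the actual cube position and size in the project are not stated.

```python
import numpy as np

# Assumed cube: unit cube at the world origin; vertex index = x + 2*y + 4*z.
CUBE_VERTICES = np.array(
    [[x, y, z] for z in (0, 1) for y in (0, 1) for x in (0, 1)], dtype=float
)
# An edge joins two vertices that differ in exactly one coordinate.
CUBE_EDGES = [(i, i | bit) for i in range(8) for bit in (1, 2, 4) if not i & bit]


def project_points(P, pts3d):
    """Project (N, 3) world points to (N, 2) image points with a 3x4 matrix P."""
    pts3d = np.asarray(pts3d, dtype=float)
    homog = np.hstack([pts3d, np.ones((len(pts3d), 1))])
    proj = homog @ np.asarray(P, dtype=float).T
    # Divide by the homogeneous coordinate to get pixel coordinates.
    return proj[:, :2] / proj[:, 2:3]


def draw_cube(frame, P, vertices=CUBE_VERTICES, color=(0, 255, 0), thickness=2):
    """Draw the cube's 12 edges on a frame (modified in place and returned)."""
    import cv2  # imported here so project_points works without OpenCV

    pts = np.rint(project_points(P, vertices)).astype(int)
    for i, j in CUBE_EDGES:
        start = (int(pts[i][0]), int(pts[i][1]))
        end = (int(pts[j][0]), int(pts[j][1]))
        cv2.line(frame, start, end, color, thickness)
    return frame
```

Scaling or translating `CUBE_VERTICES` before projecting would place the cube elsewhere on the box.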

cubed_output.gif

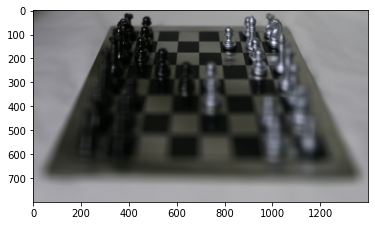

In this project, we observe that even the simplest operations on images can produce complex effects. Given a large set of images taken at different grid locations, we can move the "focus" of the averaged image from far to near objects just by scaling the shifts applied to the images.

Using a large dataset of images taken at different grid positions from the Stanford Light Field Archive, we apply simple operations such as shifting and averaging to these images to produce refocusing effects.

When we average all the images in the dataset, by summing them into a single zero-initialized array and dividing by the number of images, the result is in focus only on the far objects. Distant objects barely move between views, while nearby objects shift noticeably and blur.
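The plain average can be written as a short sketch like the one below. The name `average_images` is hypothetical, and the images are assumed to be same-sized arrays already loaded into memory.

```python
import numpy as np


def average_images(images):
    """Average same-sized images by accumulating into a float array."""
    total = None
    count = 0
    for im in images:
        im = np.asarray(im, dtype=np.float64)
        if total is None:
            total = np.zeros_like(im)  # the "empty" accumulator
        total += im
        count += 1
    if count == 0:
        raise ValueError("no images to average")
    return total / count
```

Accumulating in float64 avoids the overflow that would occur when summing many 8-bit images directly.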

From this, we could refocus the image by applying appropriate shifts on the images.

The u and v coordinates of each image are encoded in its filename, so we first extract them and compute the average u and v over all the images.

We then shift each image by the difference between the average (u, v) and that image's own (u, v), multiplied by a constant alpha that scales the shift for all the images.
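A rough sketch of this shift-and-average step follows. Several details are assumptions rather than facts from the project: the name `refocus`, mapping u to columns and v to rows, the sign of the shift (which depends on the dataset's coordinate convention), rounding to whole pixels, and using np.roll, which wraps pixels around the border instead of padding.

```python
import numpy as np


def refocus(images, uv, alpha):
    """Shift each image toward the mean (u, v) by a factor alpha, then average.

    images: sequence of same-sized arrays (H, W) or (H, W, C)
    uv:     (N, 2) array of per-image (u, v) coordinates
    """
    uv = np.asarray(uv, dtype=float)
    center = uv.mean(axis=0)
    total = np.zeros_like(np.asarray(images[0], dtype=np.float64))
    for im, (u, v) in zip(images, uv):
        # Shift = alpha * (average coordinate - this image's coordinate).
        du = int(round(alpha * (center[0] - u)))  # assumed: columns
        dv = int(round(alpha * (center[1] - v)))  # assumed: rows
        total += np.roll(np.asarray(im, dtype=np.float64), (dv, du), axis=(0, 1))
    return total / len(images)
```

With alpha = 0 no image moves, so the result is the plain average; if the focus moves the wrong way on a given dataset, flipping the sign of one of the shifts is the first thing to try.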

alpha = 0 (Average Image)

alpha = 0.25

alpha = 0.5

As alpha increases, the focus moves from the far objects to the near objects.

This is the result of depth refocusing with alpha going from 0 to 0.5 in steps of 0.1.

refocus_output.gif

We can also show the effect of aperture adjustment by limiting the number of images in the average: more images mimic a bigger aperture, while fewer images mimic a smaller aperture.

We achieve this effect by first applying an appropriate shift to focus on a specific point. We then create a list of increasingly large batches of images (e.g. [[im0-im4], [im0-im8], ...]) and take the average of each batch. After compiling the averaged images into a single GIF, we can observe the changing aperture effect.
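The growing batches can be sketched as below. The name `aperture_sequence`, the batch step of 4, and taking the images in list order are assumptions; the input is assumed to be the images after the focusing shift has already been applied, and writing the GIF is left out.

```python
import numpy as np


def aperture_sequence(shifted_images, step=4):
    """Average growing prefixes of already-shifted images.

    Returns one averaged frame per batch size (step, 2*step, ..., all images).
    Small batches mimic a small aperture, large batches a large one.
    """
    stack = np.asarray(shifted_images, dtype=np.float64)
    n = len(stack)
    # Batch sizes grow by `step`; the last batch always uses every image.
    sizes = list(range(step, n, step)) + [n]
    return [stack[:k].mean(axis=0) for k in sizes]
```

Each returned frame would become one frame of the GIF, ordered from the smallest simulated aperture to the largest.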

From this project, I learned that even simple operations such as shifting and averaging can produce complex effects on an image.