Part 1: Loading Images

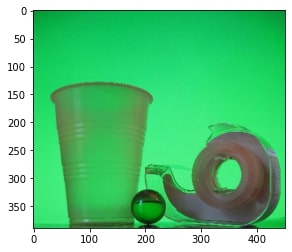

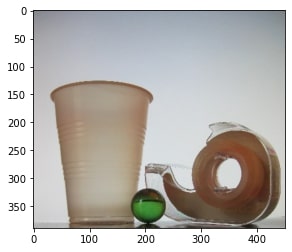

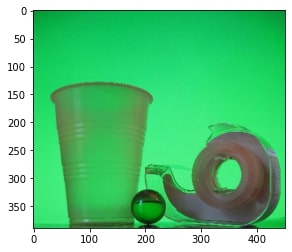

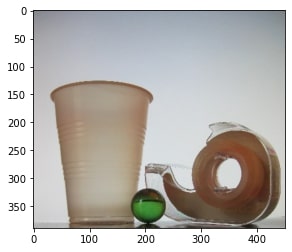

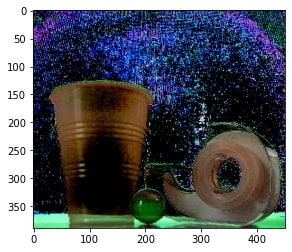

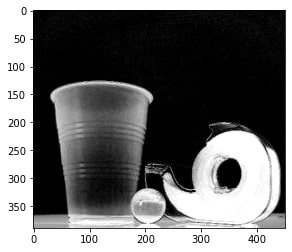

We load four images first: two images of the backgrounds and two images of the object against the backgrounds.

We load four images first: two images of the backgrounds and two images of the object against the backgrounds.

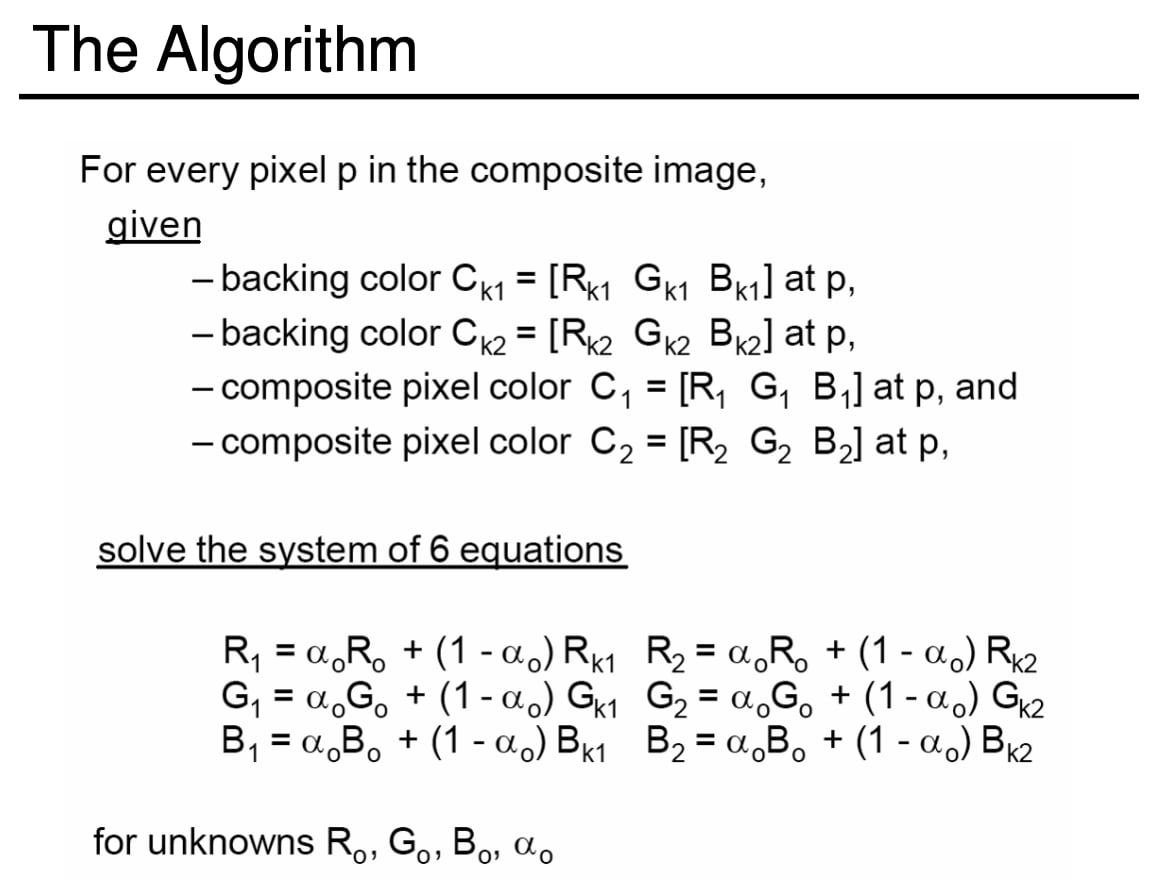

For each pixel in the image, we solved the following system of linear equations using least squares as covered in the lecture slides.

Here are the images of the object and the alpha matte.

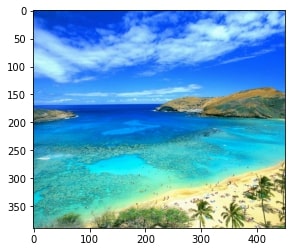

We use the alpha matte to composite the object and a new background together. Since the new background is just a generic background, no homography is needed.

By Joshua Levine and Yin Deng

We start by constructing matrices for each of the three objectives, using the x-gradients for the first objective, y-gradients for the second, and matching the top-left corners for the third. Below is the original image followed by the generated image, and you can see that they are equal, as desired.

We first ask users to input 10 landmarks in the source image, which will be used as the vertices to construct a polygon around the source. We also give users the option to scale up or down the source before blending.

Next we ask users to input 1 landmark, which will be used as the location where the source will be blended into the target.

We then naively blend the source into the target to see what it looks like.

Unlike the toy problem, since we are blending two relatively large images this time, we have to take advantage of the sparse nature of the system of linear equations we are trying to solve; otherwise, our program will run for a long time and terminate when it runs out of memory. Luckily, SciPy gives us the tools we need. We then set up the system of linear equations for make the gradients match and solve it using least squares. Before setting up the system of linear equations, we first need to keep track of all the points inside the polygon. Then we iterate through every point inside the source polygon, and look at its four neighbors: if the neighbor is also inside the polygon, we add a new equation corresponding to the first part of the given formula; if the neighbor is outside the polygon, we add a new equation corresponding to the second part of the given formula.

We worked with RGB images, so we processed each image separately. Here are the results

This worked pretty well overall, except that in the first example, the text is surrounded by a blurred, transparent, box.

We used the same process as Poisson Blending, but used the gradient in the source or target with the largest magnitude as the guide, rather than just the source gradient. To do so, we solved the new equation for v, given in this portion of the project spec. Here is the result:

This worked much better than Poisson Blending, because you can’t see the blurred rectangle around the text.

By Joshua Levine and Yin Deng

The following summarizes the algorithm proposed in the paper. The goal is to choose an input image to VGG19, which minimizes a linear combination of the content loss and the style loss. The style loss measures the similarity between the network’s output and the style image, while the content loss measures the similarity between the network’s output and the original image. The alpha-beta ratio is used to tune that linear combination, where a high ratio will weight the content loss higher, so the image won’t change as much.

The paper is fascinating because it demonstrates that neural networks allow us to differentiate between style and content in images.

The paper calls for us to minimize a linear combination of the style loss and the content loss using gradient descent. Hence, we used the Adam optimizer to minimize the loss. We start by creating a new neural network architecture which returns the feature representations of select layers during the forward pass.

To minimize the loss, we first choose an alpha-beta ratio. In the paper, they started with a white noise image, but after experimentation, we found it was easier to start with the original image. Hence, we pass the original image as a parameter to Adam.

Then, we run 300 training epochs on the original image, with a learning rate scheduler that decreases the learning rate on a plateau. Once the generated image is trained for a given alpha-beta ratio, and subset of layers, we only have to train for 150 epochs after changing those parameters.

In all images below, I used the same picture as the paper as the original content image. Here is a recreation of the plot from the paper, with the rows as Conv1_1, Conv2_1, Conv3_1, Conv4_1, and Conv5_1. The columns are 1e-5, 1e-7, 1e-10, and 1e-15, which are the alpha-beta ratios.

Below are results for the same paintings used in the paper, and you can see the results on Composition VII above. They show Starry Night, The Shipwreck of the Minotaur, Der Schrei, and Femme nue assise.

Here is an example using Der Schrei, and an image I chose. I show the original image first, followed by the result.

Overall, I was pleased with the results. They aren’t exactly the same as the ones in the paper, but the differences can be attributed to the use of different pictures, at different scales, different training methods, or different weights in the pre-trained model. As I ran the code, I realized that it was pretty unstable: the output changed a lot between runs. This is partially due to a high learning rate during training, but this made it challenging to produce results that were similar to those in the paper.