CS194 - Computer Vision

Project Final 1: Eulerian Video Magnification

Nick Lai

Overview

In this project, we aim to magnify the temporal variations in position and colour in a video in order to reveal otherwise imperceptible movement or changes in tone and hue. This has many applications in real life and is a pretty cool project overall, even if it was a pain to make.

Laplacian pyramid

This was probably the most straightforward part of the project: we were asked to construct a Laplacian pyramid from the input image. Having learnt how to do this many weeks ago, I found this was simply a matter of implementing a low-pass filter and using it to generate each layer of the Laplacian pyramid.

First we apply the low-pass filter, then subtract the low-passed image from the original to get a bandpass of the image frequencies, and save that as the first layer of the Laplacian pyramid. Next we downsample to a smaller image with half the dimensions of the original. We then repeat this process until we have generated a list of Laplacian pyramid layers.
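The steps above can be sketched in a few lines of numpy. This is a minimal illustration, not the code used for the results on this page: the 5-tap binomial kernel, the edge padding, the `levels` parameter, the choice to downsample the low-passed image, and the extra low-pass residual stored at the end of the list are all assumptions made for the example.

```python
import numpy as np

def blur(im):
    """Low-pass a 2-D image with a separable 5-tap binomial kernel (edge-padded)."""
    k = np.array([1, 4, 6, 4, 1], dtype=np.float64) / 16.0
    padded = np.pad(im, 2, mode="edge")
    # Filter along the horizontal direction, then along the vertical direction.
    rows = sum(k[i] * padded[:, i:i + im.shape[1]] for i in range(5))
    return sum(k[i] * rows[i:i + im.shape[0], :] for i in range(5))

def build_laplacian_pyramid(im, levels=4):
    """Return `levels` bandpass layers followed by the final low-pass residual."""
    current = im.astype(np.float64)
    pyramid = []
    for _ in range(levels):
        low = blur(current)
        pyramid.append(current - low)  # bandpass layer at this scale
        current = low[::2, ::2]        # downsample to half the width and height
    pyramid.append(current)            # low-pass residual (assumed, for reconstruction)
    return pyramid
```

For a greyscale image `im`, `build_laplacian_pyramid(im, levels=3)` returns four arrays, each half the size of the previous one. A colour image would need the same treatment per channel.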

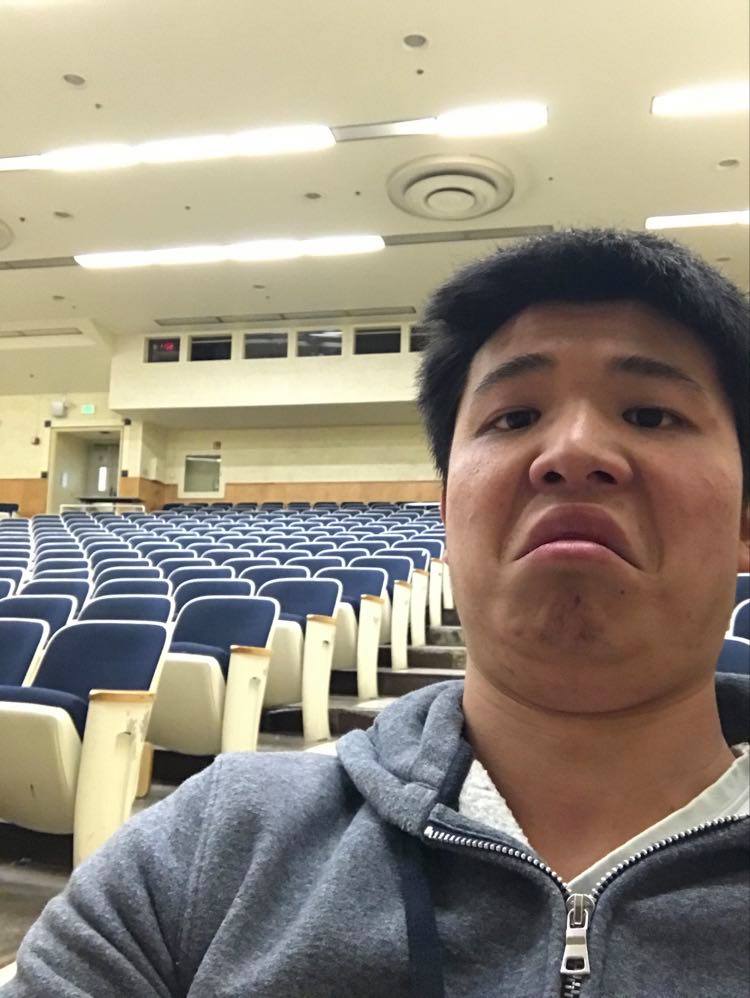

Me in dwinelle original.JPG

Me in dwinelle laplacian pyramid.JPG

To confirm that this is a respectable Laplacian pyramid, I reconstructed the image from it by upsampling and then summing the layers, and the result comes out somewhat respectable.

Laplacian pyramid reconstructed.jpg

Laplacian pyramid reconstructed2.jpg

But just in case I could do better, I implemented another function, "laplacian_pyramid(im)", that uses cv2.pyrUp and cv2.pyrDown to create a Laplacian pyramid. The image reconstructed from this function's pyramid (right) looks marginally better than the one from my original implementation (left), which doesn't use cv2.pyrUp and cv2.pyrDown.
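One plausible reason a pyrUp/pyrDown-style pyramid reconstructs more cleanly: if each layer is defined as the image minus the upsampled copy of its own downsampled version, then collapsing the pyramid undoes the construction step by step. The sketch below shows that structure. To avoid an OpenCV dependency it uses `pyr_down` and `pyr_up`, which are hypothetical numpy stand-ins written for this example and not the cv2 functions themselves, and it is not the `laplacian_pyramid(im)` function mentioned above.

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=np.float64) / 16.0

def blur(im):
    """Separable 5-tap binomial low-pass with edge padding."""
    padded = np.pad(im, 2, mode="edge")
    rows = sum(KERNEL[i] * padded[:, i:i + im.shape[1]] for i in range(5))
    return sum(KERNEL[i] * rows[i:i + im.shape[0], :] for i in range(5))

def pyr_down(im):
    """Blur, then keep every other pixel (numpy stand-in for a pyrDown step)."""
    return blur(im)[::2, ::2]

def pyr_up(im, shape):
    """Zero-stuff to `shape`, then blur with 4x gain (numpy stand-in for a pyrUp step)."""
    up = np.zeros(shape, dtype=np.float64)
    up[::2, ::2] = im
    return 4.0 * blur(up)

def laplacian_pyramid_updown(im, levels=4):
    """Each layer is the image minus the upsampled copy of its downsampled self."""
    current = im.astype(np.float64)
    pyramid = []
    for _ in range(levels):
        smaller = pyr_down(current)
        pyramid.append(current - pyr_up(smaller, current.shape))
        current = smaller
    pyramid.append(current)  # low-pass residual
    return pyramid

def collapse(pyramid):
    """Rebuild the image by upsampling from the coarsest level and adding each layer."""
    current = pyramid[-1]
    for layer in reversed(pyramid[:-1]):
        current = pyr_up(current, layer.shape) + layer
    return current
```

Because every layer stores exactly what the upsampling step loses, `collapse(laplacian_pyramid_updown(im))` matches `im` up to floating-point error, whatever kernel is used.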

Temporal filtering and image reconstruction

Having misread the assignment, I spent many hours coding up a motion magnification algorithm instead of a colour magnification one. But anywho, it was a matter of first generating a Laplacian pyramid for each and every frame of a given input video, stacking every frame horizontally into the same numpy array, and then applying the Butterworth bandpass filter across the temporal axis of each layer of the pyramid, before selecting which layers to retain for the final reconstruction of the image.

The video on which I did most of my testing and whatnot was of me sitting in a small library being really tired. It was taken using my iPhone's front camera, with me exhaustedly squinting at the camera itself (fear not, I took a nap afterwards).
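The temporal filtering step can be sketched with scipy. This is a hedged illustration rather than the code behind the results here: it assumes one pyramid level has already been stacked into an array with time on axis 0, and the filter order and the use of the zero-phase `sosfiltfilt` are choices made for the example.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def temporal_bandpass(stack, low_hz, high_hz, fps, order=2):
    """Bandpass a (frames, height, width[, channels]) array along the time axis.

    `low_hz` and `high_hz` are the band edges in Hz and `fps` is the video
    frame rate. The order and the zero-phase filtering are assumptions.
    """
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=fps, output="sos")
    # Filter every pixel's time series independently, forwards and backwards.
    return sosfiltfilt(sos, stack, axis=0)
```

A list of same-sized layer images, one per frame, can be turned into a suitable array with `np.stack(layers_for_one_level, axis=0)`, and the function is then called once per pyramid level. Note that `sosfiltfilt` needs more frames than its internal padding length, so very short clips will raise an error.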

tired me.mp4

The original video was too large both in dimension and in duration, so I reduced the dimensions to 640x360 and the duration to 5 seconds. This also helped with testing the functions and rendering the outputs, as it took far less time. Additionally, I encountered further memory errors, which were resolved by uninstalling 32-bit Python and installing 64-bit Python.

The reconstruction is a simple matter of first indicating which range of layers I would like to use. Originally, I had simply slapped together every layer of the band-passed Laplacian pyramid; however, the result flashed too intensely.

tired me too many layers.mp4

I only discovered the solution after talking to Kamyar in office hours, where he suggested that certain layers may be discarded for "better results", so I implemented a section to do that. After indicating which layers to keep, the kept layers are amplified by multiplying them by a chosen factor, then added back to the original image in order to display the difference. Then all the frames of the video are output and assembled into a gif via third-party software.

This issue somewhat persisted throughout the other versions of this video, but I suspect that is partly due to the temporal bandpass filter accounting for the period of time before there was even video footage, and partly because my hand probably wasn't that stable at the start, causing movements that change the overall brightness of the video.
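The select, amplify, and add-back step might look roughly like the sketch below. The names `magnify`, `keep`, and `alpha` are hypothetical, the 0 to 255 pixel range is an assumption, and the nearest-neighbour upsampling is a deliberate simplification of a proper pyramid collapse, used only to keep the example short.

```python
import numpy as np

def upsample_to(layer, height, width):
    """Nearest-neighbour upsample of a (frames, h, w) stack to (frames, height, width)."""
    fy = -(-height // layer.shape[1])  # ceiling division
    fx = -(-width // layer.shape[2])
    big = np.repeat(np.repeat(layer, fy, axis=1), fx, axis=2)
    return big[:, :height, :width]

def magnify(frames, filtered_layers, keep, alpha):
    """Add `alpha` times the sum of the kept band-passed layers back onto the frames.

    `frames` is (frames, height, width); `filtered_layers` holds one temporally
    filtered stack per pyramid level; `keep` is the set of level indices to use.
    """
    frames = frames.astype(np.float64)
    _, height, width = frames.shape
    delta = np.zeros_like(frames)
    for level, layer in enumerate(filtered_layers):
        if level in keep:  # discarded levels contribute nothing
            delta += upsample_to(layer, height, width)
    # Clip so that heavily amplified values stay in the assumed 0-255 range.
    return np.clip(frames + alpha * delta, 0.0, 255.0)
```

Dropping a level from `keep` removes its contribution entirely, which is one way to test which levels are responsible for an effect such as the flashing described above.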
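The first suspicion can be illustrated on a toy signal. A causal filter started from a zero state behaves as though the pixel was black before the first frame, so even a perfectly static pixel produces a burst at the start. The sketch below is only an illustration under assumed settings (30 fps, a 0.2 to 3 Hz band, a second-order design) and does not claim to reproduce the filter used for these videos.

```python
import numpy as np
from scipy.signal import butter, sosfilt, sosfilt_zi

fps = 30.0
sos = butter(2, [0.2, 3.0], btype="bandpass", fs=fps, output="sos")
brightness = np.full(150, 100.0)  # 5 s of a perfectly static pixel

# Zero initial state: the filter assumes the signal was 0 before frame 0,
# so it sees a jump from 0 to 100 and rings at the start of the clip.
cold = sosfilt(sos, brightness)

# Steady-state initial conditions scaled by the first sample: the filter
# assumes the signal had always been at its first value.
zi = sosfilt_zi(sos) * brightness[0]
warm, _ = sosfilt(sos, brightness, zi=zi)

print("largest response, zero initial state:", np.abs(cold).max())
print("largest response, steady-state start:", np.abs(warm).max())
```

Once amplified, a start-up burst like the one in `cold` would show up as a brightness flash in the first frames. Initialising the filter state, or using a forward-backward filter with edge padding such as `scipy.signal.sosfiltfilt`, are two common ways to reduce it.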

Fixing the flashing gave me a much better result:

tired me magnified motion.mp4

Notice how the bobbing of my head and the movement of my shirt as I breathe become much more evident in this version? Yeah, that's it :D I also ran a couple more iterations on the same video with different parameters and settings.

tired me magnified motion heavily amplified.mp4

tired me magnified colour.mp4

Following suit, I applied the filter to some other videos, which I either obtained straight off the internet or recorded myself.

boiling water original.mp4

blender original.mp4

boiling water magnified motion.mp4

blender magnified motion.mp4

I find it quite interesting that the motion magnification quite clearly picked up on the speed or frequencies of the steam and of the particles within the blender, and kind of neglected to show the faster or slower particles. I confirmed my suspicion by inputting parameters for a higher-frequency bandpass on the boiling water video, which gave a rather turbulent motion magnification. I haven't posted that video because I am lazy.

Below are my results for the "face" input required by the spec.

face1 low=0.2 high=3 amp=20.mp4

face2 low=0.2 high=1 amp=20.mp4

face2 low=0.2 high=2 amp=40.mp4

And here are the "baby" inputs

baby1 low=1 high=3 amp=40.mp4

baby low=0.2 high=1 amp=20.mp4

As expected, the closer your bandpass is to encapsulating the frequency of the specific changes, the more prominent the changes are. Oddly, though, the baby results came out inferior to the face results. Also, if you're curious, here is what happened when I applied the motion temporal filter instead of the colour one.