The goal of the project was to refocus images at different depths and generate images corresponding to different apertures.

I used the rectified images from the two examples below to generate images refocused at different depths. The focus is adjusted by shifting each photo relative to the center camera, by an amount proportional to its offset in the camera grid times a chosen stride, and then averaging the shifted photos. Changing the stride moves the plane that ends up in focus.
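
The shift-and-average step can be sketched as follows. This is a minimal illustration and not the exact code used for the results: the function name `refocus`, the parameter `alpha` (the stride, in pixels of shift per grid step), the whole-pixel rounding, and the wrap-around shifting with `np.roll` are all assumptions, and the sign of the shift depends on how the camera grid is indexed.

```python
import numpy as np

def refocus(images, positions, alpha):
    """Shift each view relative to the grid center, then average.

    images:    sequence of N arrays, each (H, W) or (H, W, C)
    positions: sequence of N (row, col) grid coordinates, one per camera
    alpha:     hypothetical stride: pixels of shift per grid step
    """
    images = np.asarray(images, dtype=np.float64)
    positions = np.asarray(positions, dtype=np.float64)
    center = positions.mean(axis=0)  # assumed location of the center camera
    out = np.zeros_like(images[0])
    for img, pos in zip(images, positions):
        # Whole-pixel shift proportional to the offset from the center.
        dy, dx = np.rint(alpha * (pos - center)).astype(int)
        # np.roll wraps around at the borders; a real pipeline would
        # probably crop or pad instead.
        out += np.roll(img, shift=(dy, dx), axis=(0, 1))
    return out / len(images)
```

Sweeping `alpha` over a range of values (positive and negative) would give a focal stack, with one refocused image per value.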

To change the aperture, we average only the n nearest neighbors of the middle camera. A small n mimics a small aperture and keeps more of the scene in focus. At the other extreme, averaging all the pictures mimics a large aperture: the plane in focus stays sharp while everything in front of and behind it is blurred.
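
A minimal sketch of the neighbor selection, assuming the cameras are described by (row, col) grid coordinates. The name `simulate_aperture` and the use of Euclidean grid distance are assumptions for illustration. Cameras at equal distance are picked in input order, which is an arbitrary tie-break.

```python
import numpy as np

def simulate_aperture(images, positions, n):
    """Average the n views closest to the middle of the camera grid.

    images:    sequence of N arrays with identical shape
    positions: sequence of N (row, col) grid coordinates, one per camera
    n:         number of views to keep (1 = center view only)
    """
    images = np.asarray(images, dtype=np.float64)
    positions = np.asarray(positions, dtype=np.float64)
    center = positions.mean(axis=0)  # assumed location of the middle camera
    dist = np.linalg.norm(positions - center, axis=1)
    # A stable sort keeps the input order among equally distant cameras.
    nearest = np.argsort(dist, kind="stable")[:n]
    return images[nearest].mean(axis=0)
```

With a 3x3 grid, `n=1` returns the center view, `n=5` adds its four direct neighbors, and `n=9` averages the full grid.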

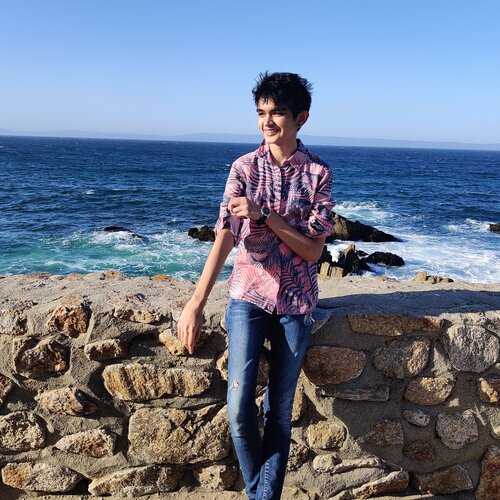

For my own data I used a 3x3 grid and these were the results:

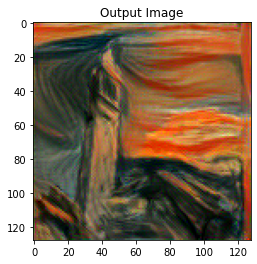

As we can see, the results are blurry. This is due to the limited number of pictures and to my failure to reproduce a regular camera grid by hand: the spacings between shots are too inconsistent and too large for a true light field camera.

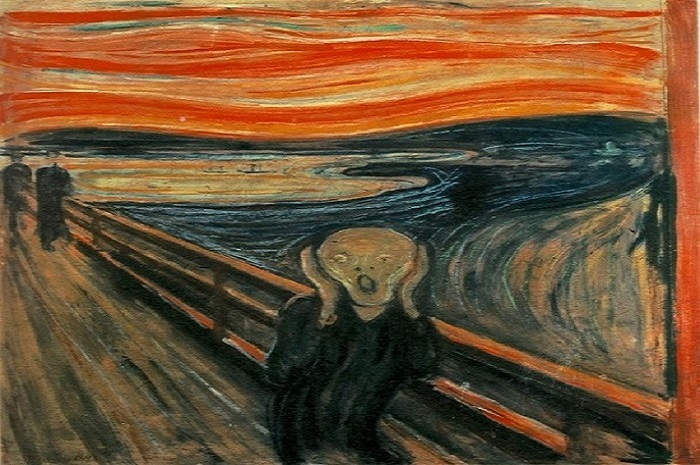

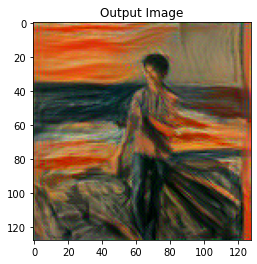

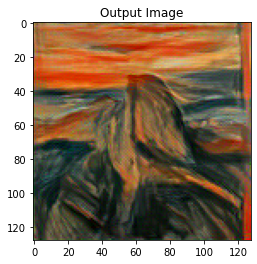

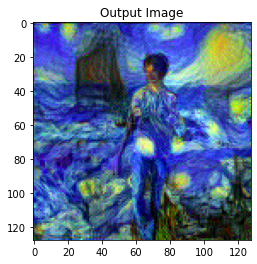

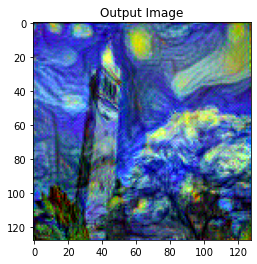

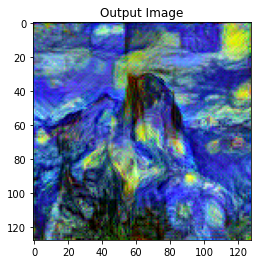

The essence of the algorithm is to minimize a two-component loss, made up of a content loss and a style loss, by running backprop with respect to the output image itself. The model uses a fixed set of pre-determined layers of the VGG-19 CNN. The layers that were essential for the style loss were CNN1_1, CNN2_1, CNN3_1, CNN4_1 and CNN5_1. At each of these layers, we calculate the loss by taking the MSE loss between the Gram matrix of our target features and the Gram matrix of our current features. For the content loss, we take the MSE loss between the target and input image.
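
The Gram-matrix style loss can be sketched in NumPy as follows. This is an illustration and not the training code: the function names, the `(C, H, W)` feature layout, the normalization by `C * H * W`, and the equal weighting of the layers are assumptions. In practice the features would come from the VGG-19 layers listed above, and the loss would be computed in an autodiff framework so that it can be backpropagated to the image.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel correlations of one layer's activations.

    features: array of shape (C, H, W)
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    # The normalization constant is an assumption; conventions vary.
    return flat @ flat.T / (c * h * w)

def style_loss(current_feats, target_feats):
    """Sum over layers of the MSE between Gram matrices.

    Both arguments are lists with one (C, H, W) array per style layer.
    """
    return sum(
        np.mean((gram_matrix(cur) - gram_matrix(tgt)) ** 2)
        for cur, tgt in zip(current_feats, target_feats)
    )
```

Because the Gram matrix sums over all spatial positions, it discards where a feature occurs and keeps only which features occur together, which is why it captures style rather than layout.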