Light Field Camera

Light field cameras capture the light field of a scene: not just how much light reaches each pixel, but also the direction it arrives from. There are two common ways to build one: a grid of cameras all facing the same direction, or a single camera with a microlens array layered on top of its sensor. Both designs collect the same kind of information about a scene; we use the camera-grid representation for this project. Because a light field records the light rays of a scene at one point in time, we can use post-processing to achieve effects such as refocusing an image after it has been taken, or varying the effective aperture size to increase or reduce bokeh.

Depth Refocusing

Because we capture the scene from hundreds of viewpoints spread across a plane orthogonal to our viewing direction, we see a parallax effect when switching between images from different cameras. Objects close to the cameras (the foreground) land at noticeably different pixel positions from one image to the next, whereas objects far from the cameras (the background) stay at nearly the same pixel positions.

Averaging all images without any shift therefore produces a natural focus on the background, where the views from across the array line up well. Foreground regions differ significantly from view to view, so averaging them produces a blur. This blur closely resembles what a single camera with a wide-aperture lens would produce.

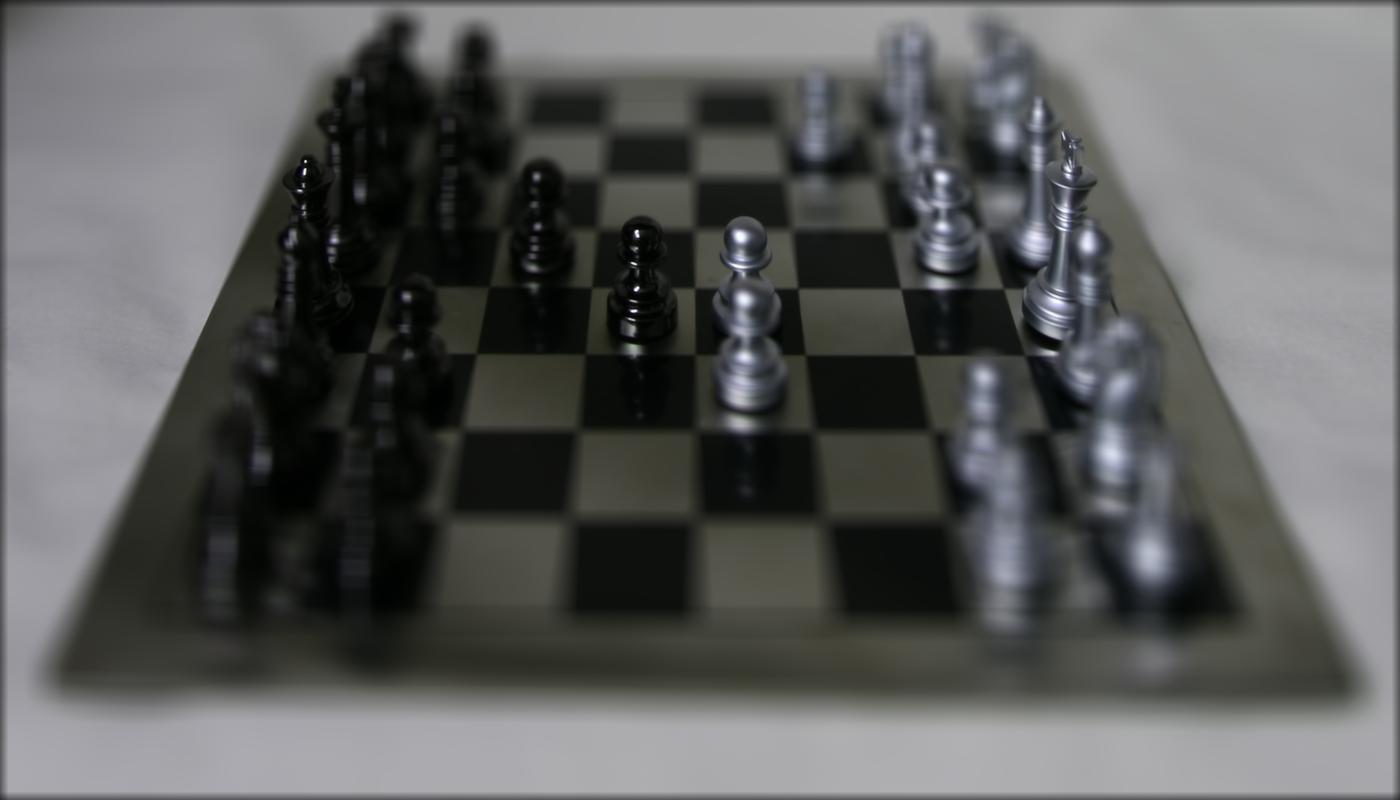

The chess scene, averaged across all images, is shown below - note the significant focus on the rear plane.

We can, of course, change this. The differences between our grid images come from the apparent 'movement' of the scene between cameras, so we can focus on a different part of the scene by counteracting that movement: we shift each image before averaging. We assumed the cameras were equally spaced, and we used the rectified versions of the images, which makes processing easier. Taking the center camera as the reference, an image from a camera to its right shows the same scene content shifted slightly to the left, so we counteract this by shifting that image rightward. Shifting by 1 pixel per grid step from the center camera (so, in a 17x17 array, the outermost images are shifted 8 pixels and the images adjacent to the center only 1 pixel) and then averaging gives the result shown below.
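As a rough illustration of the shift-and-average step, here is a minimal NumPy sketch. The function name `refocus_integer`, the `(row, col)` layout of `positions`, and the sign convention (row index grows downward, column index grows rightward) are assumptions made for this example, not details taken from the project code. It also uses `np.roll`, which wraps pixels around the border; a real implementation would probably crop or pad instead.

```python
import numpy as np

def refocus_integer(images, positions, shift_per_camera):
    """Average grid images after shifting each one toward the center view.

    images: sequence of N arrays, each (H, W) or (H, W, C)
    positions: sequence of N (row, col) grid indices (assumed layout)
    shift_per_camera: integer pixel shift per grid step from the center
    """
    images = np.asarray(images, dtype=np.float64)
    positions = np.asarray(positions)
    # Center of the grid, e.g. (8, 8) for a 17x17 array indexed from 0.
    center = (positions.max(axis=0) + positions.min(axis=0)) // 2
    total = np.zeros_like(images[0])
    for img, (r, c) in zip(images, positions):
        # A camera to the right of center sees the scene shifted left,
        # so we shift its image right (positive dx), and likewise for rows.
        dy = int(shift_per_camera * (r - center[0]))
        dx = int(shift_per_camera * (c - center[1]))
        # np.roll wraps around at the borders (a simplification).
        total += np.roll(img, shift=(dy, dx), axis=(0, 1))
    return total / len(images)
```

Calling this with `shift_per_camera=0` reduces to the plain average described earlier; larger values move the plane of focus toward the foreground.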

Repeating this process for a range of pixel shifts (including fractional ones, which we handle with simple bilinear interpolation), we can gradually increase the shift amount and get the gif shown below, which transitions smoothly from focus on the rear plane to focus on the front plane.
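One way to handle fractional shifts is to blend the four nearest integer shifts with bilinear weights. The sketch below does that in plain NumPy; `shift_bilinear`, `refocus`, and `focus_sweep` are hypothetical names for this example, the grid layout is assumed to be `(row, col)` indices, and border pixels wrap around because of `np.roll`.

```python
import numpy as np

def shift_bilinear(img, dy, dx):
    """Shift img by a possibly fractional (dy, dx) with bilinear weights."""
    img = np.asarray(img, dtype=np.float64)
    y0, x0 = int(np.floor(dy)), int(np.floor(dx))
    fy, fx = dy - y0, dx - x0  # fractional parts in [0, 1)
    out = np.zeros_like(img)
    # Blend the four neighbouring integer shifts.
    for oy, wy in ((y0, 1.0 - fy), (y0 + 1, fy)):
        for ox, wx in ((x0, 1.0 - fx), (x0 + 1, fx)):
            if wy * wx > 0:
                out += wy * wx * np.roll(img, shift=(oy, ox), axis=(0, 1))
    return out

def refocus(images, positions, shift_per_camera):
    """Shift each image in proportion to its grid offset, then average."""
    images = np.asarray(images, dtype=np.float64)
    positions = np.asarray(positions)
    center = (positions.max(axis=0) + positions.min(axis=0)) / 2.0
    total = np.zeros_like(images[0])
    for img, (r, c) in zip(images, positions):
        total += shift_bilinear(img,
                                shift_per_camera * (r - center[0]),
                                shift_per_camera * (c - center[1]))
    return total / len(images)

def focus_sweep(images, positions, shifts):
    """Return one refocused frame per shift value, e.g. for a gif."""
    return [refocus(images, positions, s) for s in shifts]
```

For example, `focus_sweep(images, positions, np.linspace(0.0, 3.0, 31))` would produce 31 frames in steps of 0.1 pixels per camera; the range of shifts that spans the scene depends on the dataset.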

Aperture Adjustment

As mentioned before, the blur/bokeh in these images resembles that of a wide-aperture lens, and it is caused by the differences between the images captured by each grid camera. If the averaged images differ less, the result should be less blurry. Those differences come from the spatial displacement between cameras, so if we simply sample from fewer cameras closer to the center, we should be able to keep the same focus plane while shrinking the effective aperture of the image.

To achieve this effect, we take the center grid camera as the reference and grow or shrink the ring of cameras around it that we average over, which adjusts the aperture setting. The results, as a gif, are shown below. (The focus plane is in the middle of the scene.)
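The camera selection can be sketched as a mask over grid positions. In this example the "ring" is taken to be a square neighbourhood (Chebyshev distance from the center), which is an assumption; a circular neighbourhood would work similarly. The names `aperture_mask` and `aperture_average` are hypothetical, and the images are assumed to have already been shifted for the desired focus plane.

```python
import numpy as np

def aperture_mask(positions, radius):
    """Boolean mask of cameras within `radius` grid steps of the center."""
    positions = np.asarray(positions)
    center = (positions.max(axis=0) + positions.min(axis=0)) // 2
    # Chebyshev distance gives a square block of cameras (an assumption).
    dist = np.abs(positions - center).max(axis=1)
    return dist <= radius

def aperture_average(aligned_images, positions, radius):
    """Average only the selected cameras.

    aligned_images: N images already shifted for the chosen focus plane.
    radius=0 keeps only the center view (smallest aperture); the full
    grid radius (8 for a 17x17 array) uses every camera.
    """
    aligned_images = np.asarray(aligned_images, dtype=np.float64)
    mask = aperture_mask(positions, radius)
    return aligned_images[mask].mean(axis=0)
```

Sweeping `radius` from 0 up to the grid radius yields frames that go from everything in focus to the widest synthetic aperture.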

Bell and Whistle - Interactive Refocusing

To focus on a specific point, we need the translation from each camera image to the reference image. Because the cameras are arranged in a regular grid, we can determine the translations for all camera images given only the translation for one camera and its grid position. To reduce noise, we measured the pixel offset between the center camera image and the bottom-left corner camera image; the corner camera is farthest from the center, so its offset is the largest and rounding error matters least once it is divided back down. For a 17x17 array (i.e. a radius of 8 cameras from the central reference camera), a 16-pixel offset at the corner, for example, corresponds to a 2-pixel offset for the cameras adjacent to the center.

To determine the offset, we slice a small square patch around the chosen point from the corner camera image and compare it to the corresponding patch from the reference image. We then run a simple image alignment on these two patches, similar to what was done in Project 1. Brute force works perfectly well here, since we keep the patches small so that we optimize for a small region of focus. We return the coordinate shift that minimizes the sum of squared differences between the two regions. Finally, we feed the resulting pixel offset into the same shift-and-average algorithm from the beginning of the project. The results are shown in this video.
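The patch alignment described above might look roughly like the following. This is a sketch under assumptions: the function names, the patch half-width `half`, and the search range `search` are illustrative choices, and the clicked point is assumed to lie far enough from the image border for the reference patch to fit.

```python
import numpy as np

def best_offset_ssd(ref, other, center_yx, half=8, search=20):
    """Brute-force search for the integer (dy, dx) that, when applied as a
    shift to `other`, best matches the patch of `ref` around center_yx."""
    ref = np.asarray(ref, dtype=np.float64)
    other = np.asarray(other, dtype=np.float64)
    cy, cx = center_yx
    size = 2 * half + 1
    ref_patch = ref[cy - half:cy + half + 1, cx - half:cx + half + 1]
    h, w = other.shape[:2]
    best_ssd, best_off = np.inf, (0, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            # Shifting `other` by (dy, dx) puts other[y - dy, x - dx] at (y, x).
            y0, x0 = cy - half - dy, cx - half - dx
            if y0 < 0 or x0 < 0 or y0 + size > h or x0 + size > w:
                continue  # candidate window falls outside the image
            cand = other[y0:y0 + size, x0:x0 + size]
            ssd = np.sum((ref_patch - cand) ** 2)
            if ssd < best_ssd:
                best_ssd, best_off = ssd, (dy, dx)
    return best_off

def per_camera_shift(corner_offset, camera_radius=8):
    """Scale the corner-camera offset down to one grid step."""
    dy, dx = corner_offset
    return (dy / camera_radius, dx / camera_radius)
```

With a 17x17 grid, `per_camera_shift((16, 16))` gives `(2.0, 2.0)`, matching the 16-pixel example in the text; the result can then drive the shift-and-average step.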

Summary

Light field cameras are awesome! They give us far more information about a scene than a conventional camera can capture. A lot of post-processing becomes possible, such as refocusing and aperture adjustment, because spatial information is implicitly encoded in the data (due to the span of the camera grid).