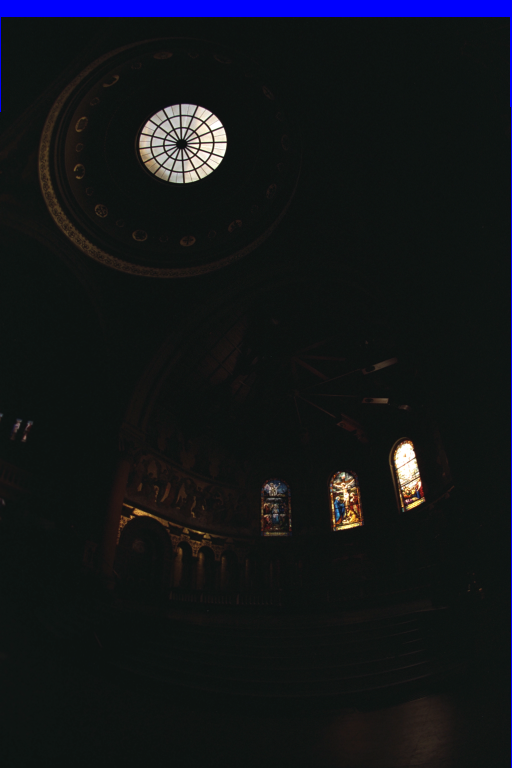

We want to build a high dynamic range (HDR) radiance map from several low dynamic range (LDR) exposures. Given several differently exposed photographs of the same scene, we wish to recover the scene's original radiance.

Variables:

- $E_i$ is the scene radiance at pixel $i$.

- $Z_{ij}$ is the observed value of pixel $i$ in image $j$. It is some function of that pixel's exposure: $Z_{ij} = f(E_i \Delta t_j)$.

- $E_i \Delta t_j$ is the exposure of pixel $i$ in image $j$: the scene radiance multiplied by that image's exposure time $\Delta t_j$.

- $f$ is the camera's unknown pixel response curve, assumed monotonic and therefore invertible. Rather than solving for $f$ directly, we solve for $g = \ln f^{-1}$, which maps pixel values (0 to 255) to log exposure values.

- $g(Z_{ij}) = \ln E_i + \ln \Delta t_j$, obtained by applying $f^{-1}$ to $Z_{ij}$ and taking the log of both sides.

The key here is that $E_i$ is the same in every image; only the exposure time $\Delta t_j$ changes.
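A small numerical check of this idea, using a made-up response curve (the function `f` below is a hypothetical camera chosen only because it is easy to invert, not a real response): once $g$ is known, $g(Z_{ij}) - \ln \Delta t_j$ returns the same $\ln E_i$ in every image $j$. Pixel values are kept continuous here for simplicity rather than quantized to integers.

```python
import numpy as np

# Hypothetical response curve (illustration only): f(X) = 255 * X / (X + 1),
# mapping exposure X = E * dt to a pixel value in [0, 255).
def f(exposure):
    return 255.0 * exposure / (exposure + 1.0)

# Inverting f gives X = Z / (255 - Z), so g(Z) = ln(f^-1(Z)) = ln(Z / (255 - Z)).
def g(z):
    return np.log(z / (255.0 - z))

E = np.array([0.05, 0.4, 3.0])       # scene radiance E_i for three pixels
dt = np.array([1 / 8, 1 / 2, 2.0])   # exposure times Delta t_j for three images
Z = f(E[:, None] * dt[None, :])      # Z[i, j] = f(E_i * Delta t_j)

# g(Z_ij) - ln(Delta t_j) should equal ln(E_i) in every column j.
log_E_est = g(Z) - np.log(dt)[None, :]
print(np.allclose(log_E_est, np.log(E)[:, None]))  # True
```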

Finding $g$ sounds extremely difficult, but it becomes much more tractable once you notice that its domain is just the set of possible pixel values, 0 to 255, so $g$ is fully described by 256 numbers.

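Thus, $g$ is simply a lookup table from 256 input values to 256 outputs. Each observation gives one linear equation, $g(Z_{ij}) - \ln E_i = \ln \Delta t_j$, so the table entries and the unknown $\ln E_i$ can be found together as a linear least-squares problem.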
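Written out, the unweighted version of this problem is the objective below (the weighting and smoothness terms listed next are left out). With $N$ pixels and $P$ images there are $NP$ equations and $256 + N$ unknowns, one of which is fixed by the $g(Z_{mid}) = 0$ constraint, so counting equations gives a necessary (not sufficient) condition on how many pixels to use, matching Debevec and Malik's $N(P-1) > Z_{max} - Z_{min}$:

$$
\min_{g,\ \ln E_i}\ \sum_{i=1}^{N} \sum_{j=1}^{P} \left[ g(Z_{ij}) - \ln E_i - \ln \Delta t_j \right]^2
$$

$$
NP \ \ge\ N + 255 \iff N(P-1) \ \ge\ 255, \qquad \text{e.g. } P = 5 \implies N \ \ge\ \lceil 255/4 \rceil = 64.
$$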

We also add a few extra constraints and terms to the optimization:

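- We constrain $g(Z_{mid}) = 0$, where $Z_{mid} = \frac{1}{2}(Z_{min} + Z_{max})$. The equations only determine $g$ up to an additive constant (adding $c$ to $g$ and to every $\ln E_i$ leaves every equation unchanged), so we arbitrarily assume that the pixel value of middle brightness corresponds to unit exposure.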

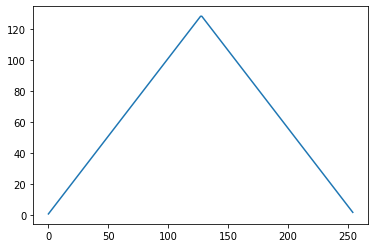

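- We introduce a triangular weighting function, $w(z) = z - Z_{min}$ for $z \le Z_{mid}$ and $w(z) = Z_{max} - z$ otherwise, which peaks at $Z_{mid}$ and falls to zero at the extremes. It penalizes jaggedness in the curve less near the extremes and pushes the optimization to favor smoothness near the middle of the curve.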

Our images are naturally unreliable at the extremes: noise affects the darker parts of the images and clipping (saturation) affects the brighter parts.
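The whole solve can be sketched in NumPy as follows, assuming 8-bit values ($Z_{min} = 0$, $Z_{max} = 255$) and an array `Z` of already-sampled pixel values. It follows the standard Debevec and Malik construction: one weighted row per observation, one row for $g(Z_{mid}) = 0$ (rounding $Z_{mid} = 127.5$ up to 128, an assumption), and one row per interior value penalizing the second difference of $g$, scaled by $w(z)$ and a smoothness weight. The names `weight`, `gsolve`, and `lam` are hypothetical, and weighting the data rows as well as the smoothness rows is an assumption about how $w$ is applied in this write-up.

```python
import numpy as np

Z_MIN, Z_MAX = 0, 255
N_LEVELS = Z_MAX - Z_MIN + 1  # 256 possible 8-bit pixel values


def weight(z):
    """Triangular "hat" weight: 0 at Z_MIN and Z_MAX, largest in the middle."""
    z = np.asarray(z, dtype=np.float64)
    return np.where(z <= 0.5 * (Z_MIN + Z_MAX), z - Z_MIN, Z_MAX - z)


def gsolve(Z, log_dt, lam):
    """Solve for g (one value per pixel level) and ln E_i by linear least squares.

    Z      : (N, P) integer array; Z[i, j] is sampled pixel i in image j
    log_dt : (P,) array of ln(Delta t_j)
    lam    : smoothness weight (hypothetical parameter name)
    """
    N, P = Z.shape
    n_rows = N * P + 1 + (N_LEVELS - 2)
    A = np.zeros((n_rows, N_LEVELS + N))
    b = np.zeros(n_rows)

    k = 0
    # Data rows: w(Z_ij) * (g(Z_ij) - ln E_i) = w(Z_ij) * ln(Delta t_j)
    for i in range(N):
        for j in range(P):
            z = int(Z[i, j])
            w = weight(z)
            A[k, z - Z_MIN] = w
            A[k, N_LEVELS + i] = -w
            b[k] = w * log_dt[j]
            k += 1

    # Offset constraint g(Z_mid) = 0, with Z_mid = 127.5 rounded up to 128
    A[k, (Z_MIN + Z_MAX + 1) // 2 - Z_MIN] = 1.0
    k += 1

    # Smoothness rows: lam * w(z) * (g(z-1) - 2 g(z) + g(z+1)) = 0
    for z in range(Z_MIN + 1, Z_MAX):
        w = weight(z)
        A[k, z - 1 - Z_MIN] = lam * w
        A[k, z - Z_MIN] = -2.0 * lam * w
        A[k, z + 1 - Z_MIN] = lam * w
        k += 1

    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:N_LEVELS], x[N_LEVELS:]  # g(0..255) and ln E_i per sampled pixel
```

For color images this would be run once per channel, which yields one response curve each for red, green, and blue; `lam` trades fidelity to the data against smoothness of the curve.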

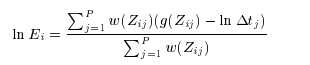

Having solved the optimization problem, we now have $g$. We can then recover each pixel's radiance via this formula:

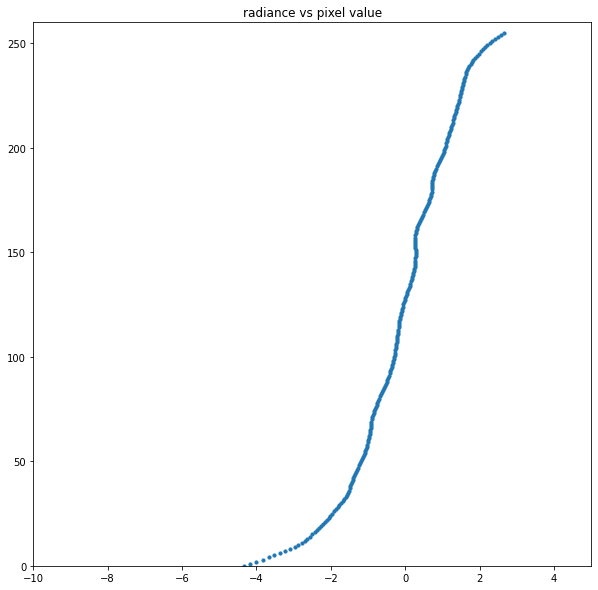
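A minimal NumPy sketch of this step for one channel, assuming the formula is the usual Debevec and Malik weighted average, $\ln E_i = \sum_j w(Z_{ij})\,(g(Z_{ij}) - \ln \Delta t_j) \,/\, \sum_j w(Z_{ij})$. The function name `radiance_map` is hypothetical, and so is the fallback for pixels whose weights are all zero (values of exactly 0 or 255 in every image): those fall back to an unweighted average.

```python
import numpy as np


def weight(z):
    """Same triangular hat weight as in the solve, for 8-bit values."""
    z = np.asarray(z, dtype=np.float64)
    return np.where(z <= 127.5, z, 255.0 - z)


def radiance_map(images, log_dt, g):
    """images: (P, H, W) stack of one channel; log_dt: (P,); g: (256,) curve."""
    Z = np.asarray(images).astype(np.intp)
    w = weight(Z)                                # (P, H, W) per-observation weights
    log_exposure = g[Z] - log_dt[:, None, None]  # g(Z_ij) - ln(Delta t_j)
    num = np.sum(w * log_exposure, axis=0)
    den = np.sum(w, axis=0)
    # Assumed fallback: unweighted mean where every observation has zero weight.
    plain = np.mean(log_exposure, axis=0)
    log_E = np.where(den > 0, num / np.where(den > 0, den, 1.0), plain)
    return np.exp(log_E)
```

For an RGB result, each channel's stack would be passed with its own response curve $g$.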

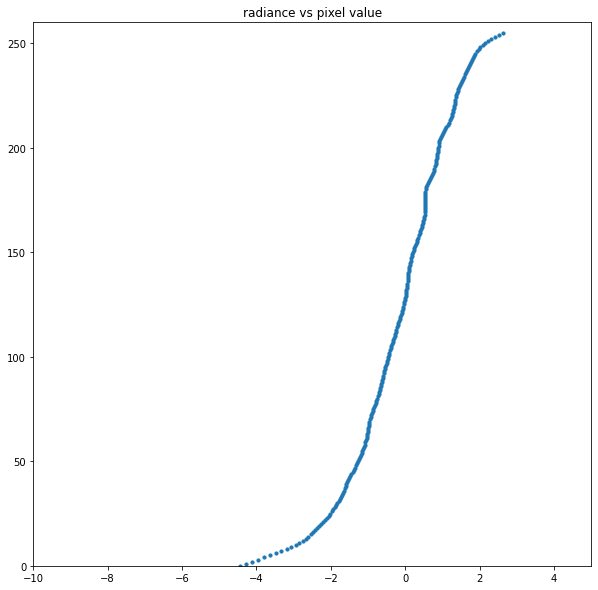

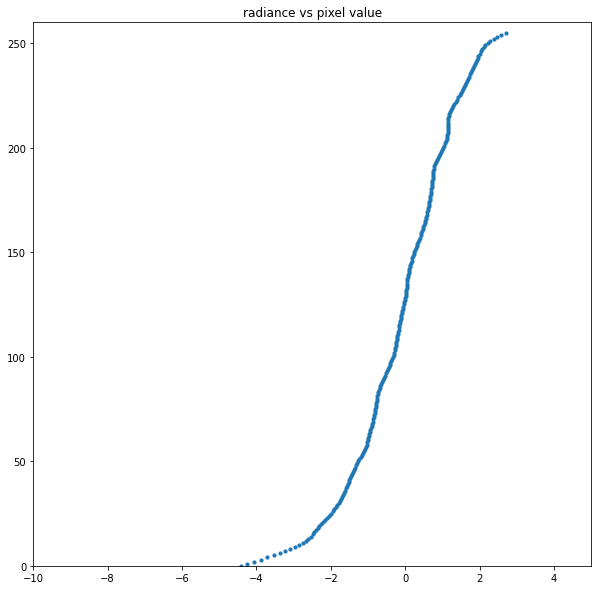

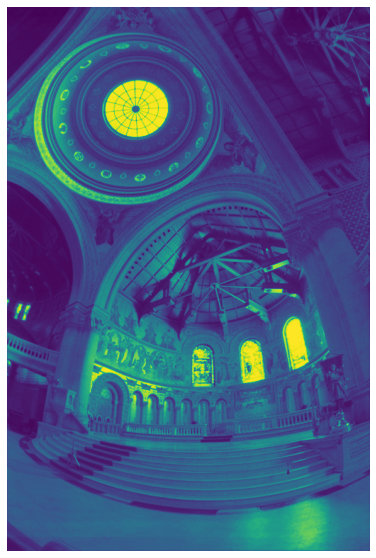

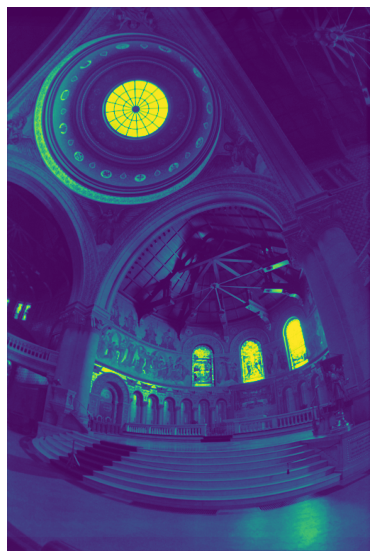

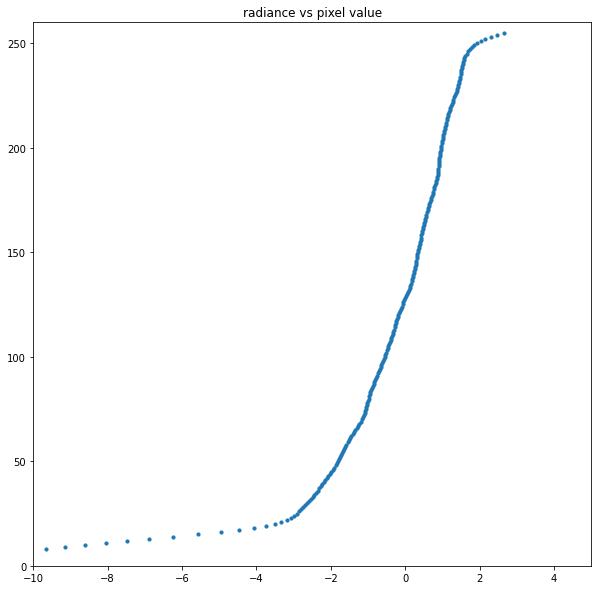

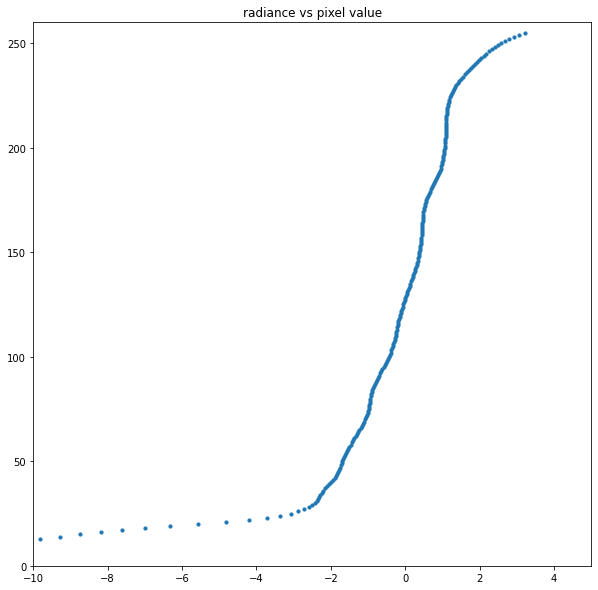

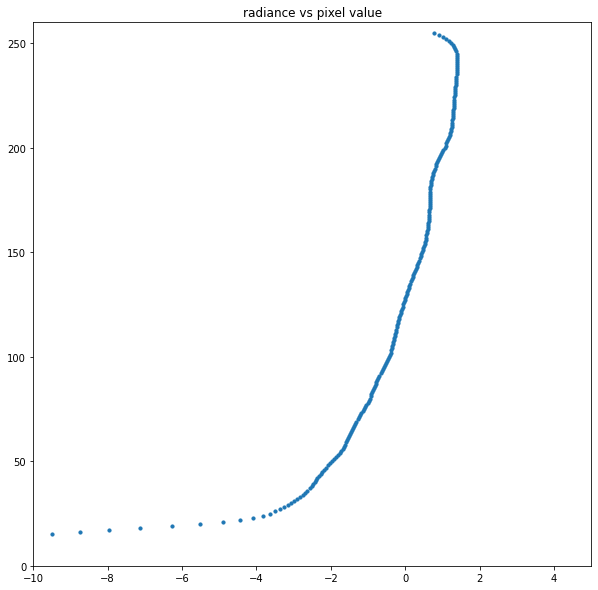

Here are the recovered radiance maps and response curves for the red, green, and blue channels, respectively.

The blue response curve becomes irregular at the highest blue values. I would put that down to the relative scarcity of data points with such high blue values. Thankfully, that same scarcity, together with the fact that the irregularity only begins at around B = 249, means it shouldn't have a large visible effect on the final image.
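One way to check the data-scarcity explanation is to count how many sampled observations land on each pixel value. Note also that under the triangular weight, $w(249) = 6$ versus $w(127) = 127$, so near the top of the range the smoothness term, not the data, mostly determines the curve. The helper `level_counts` and its `min_count` threshold are hypothetical names for this sketch:

```python
import numpy as np


def level_counts(Z, min_count=5):
    """Count sampled observations per pixel value 0..255.

    Returns the counts and the pixel values seen fewer than min_count times.
    """
    counts = np.bincount(np.asarray(Z, dtype=np.intp).ravel(), minlength=256)
    sparse = np.flatnonzero(counts < min_count)
    return counts, sparse
```

Applied to the blue-channel samples, `counts[249:]` would show how little data supports the top of the curve.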

Now, in order to display the image, we need a tone-mapping function that maps these radiances back to displayable pixel values (0 to 255).
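As a baseline, the simplest global tone map rescales log radiance linearly into 0 to 255. This is only a sketch for comparison, not necessarily the tone map used in this write-up; the name `tonemap_log` is hypothetical, and it assumes strictly positive radiance (which holds for values produced as $\exp(\ln E_i)$).

```python
import numpy as np


def tonemap_log(radiance):
    """Map a positive radiance map to 8-bit values by rescaling log radiance."""
    log_r = np.log(radiance)
    lo, hi = log_r.min(), log_r.max()
    # Linearly stretch [lo, hi] to [0, 1]; a constant image maps to all zeros.
    scaled = (log_r - lo) / (hi - lo) if hi > lo else np.zeros_like(log_r)
    return np.round(255 * scaled).astype(np.uint8)
```

Working in the log domain compresses the large range of radiances so that both shadows and highlights remain visible, at the cost of flattening overall contrast.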