Reimplementing: A Neural Algorithm of Artistic Style

I followed the paper's methods section closely to reimplement the algorithm: https://arxiv.org/pdf/1508.06576.pdf

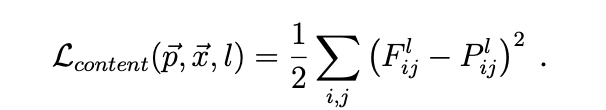

I started by defining the content loss:

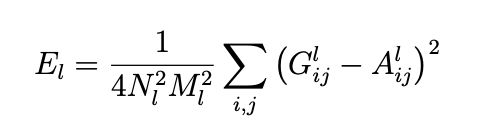
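As a text version of the idea, here is a minimal NumPy sketch of the content loss as defined in the paper (half the summed squared difference between two feature maps). The function name and the use of NumPy are assumptions for illustration; the code in the screenshot may use a different framework or scaling.

```python
import numpy as np

def content_loss(generated_features, content_features):
    """Half the summed squared difference between two feature maps.

    Both inputs are activations from the same layer, with identical shapes.
    """
    generated_features = np.asarray(generated_features, dtype=np.float64)
    content_features = np.asarray(content_features, dtype=np.float64)
    return 0.5 * np.sum((generated_features - content_features) ** 2)
```

The loss is zero when the generated image reproduces the content activations exactly and grows quadratically as they diverge.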

I then defined the style loss:

I created a helper function to compute the gram matrix:

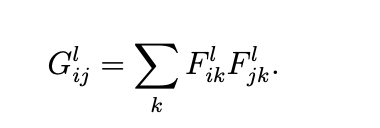
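The Gram matrix and the per-layer style loss can be sketched in NumPy as follows. This assumes activations shaped `(channels, height, width)` and uses the paper's normalization of 1 / (4 N² M²), where N is the number of channels and M is the number of spatial positions; the function names are illustrative and may not match the code in the screenshot.

```python
import numpy as np

def gram_matrix(features):
    """Channel-by-channel inner products of a (channels, height, width) activation."""
    channels = features.shape[0]
    flat = features.reshape(channels, -1)  # one row per channel
    return flat @ flat.T

def layer_style_loss(generated_features, style_features):
    """Squared Gram-matrix difference for one layer, normalized as in the paper."""
    n = generated_features.shape[0]                                  # channels
    m = generated_features.shape[1] * generated_features.shape[2]    # positions
    diff = gram_matrix(generated_features) - gram_matrix(style_features)
    return np.sum(diff ** 2) / (4.0 * n ** 2 * m ** 2)
```

The full style loss is then a weighted sum of `layer_style_loss` over the layers whose activations were stored.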

I then loaded the pretrained VGG network. Since only the first 5 convolutional layers are needed, the truncated model that was actually run is shown below:

I then fed in the style image and stored all 5 activations from the conv layers. I also fed in the content image and stored the 4th conv activation.

I then began the training process by creating a random white-noise image. The activations of this white-noise image are used to compute the style and content losses, and the image itself is what gets updated. I ran 700 iterations with a style weight of 1e-6 and a content weight of 1.

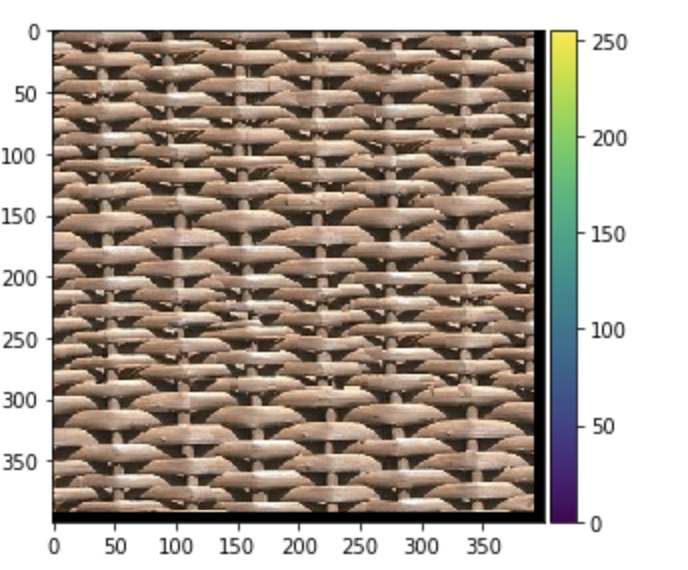
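The loop below is a toy sketch of that optimization, not the code that produced the results. To stay self-contained it makes two loud assumptions: the "features" of an image are the image itself (a stand-in for the VGG activations), and the update rule is plain gradient descent with a hypothetical learning rate `lr`, since the optimizer is not stated here. The weights and iteration count match the values above.

```python
import numpy as np

def gram(features):
    flat = features.reshape(features.shape[0], -1)
    return flat @ flat.T

def loss_and_grad(x, content_feat, style_gram, content_weight=1.0, style_weight=1e-6):
    """Weighted content + style loss and its gradient with respect to x.

    ASSUMPTION: x is treated as its own feature map (no network involved).
    """
    n = x.shape[0]
    flat = x.reshape(n, -1)
    m = flat.shape[1]
    gram_diff = flat @ flat.T - style_gram
    content = 0.5 * np.sum((x - content_feat) ** 2)
    style = np.sum(gram_diff ** 2) / (4.0 * n ** 2 * m ** 2)
    grad_content = x - content_feat
    grad_style = (gram_diff @ flat).reshape(x.shape) / (n ** 2 * m ** 2)
    loss = content_weight * content + style_weight * style
    grad = content_weight * grad_content + style_weight * grad_style
    return loss, grad

def stylize(content_feat, style_feat, iterations=700, lr=0.1, seed=0):
    """Start from uniform noise and descend the combined loss."""
    rng = np.random.default_rng(seed)
    x = rng.random(content_feat.shape)  # random noise starting image
    style_gram = gram(style_feat)
    losses = []
    for _ in range(iterations):
        loss, grad = loss_and_grad(x, content_feat, style_gram)
        losses.append(loss)
        x = x - lr * grad  # plain gradient descent (assumed optimizer)
    return x, losses
```

In a real implementation the gradient would come from backpropagating through the network rather than from the closed-form expressions used here.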

Below is the original image.

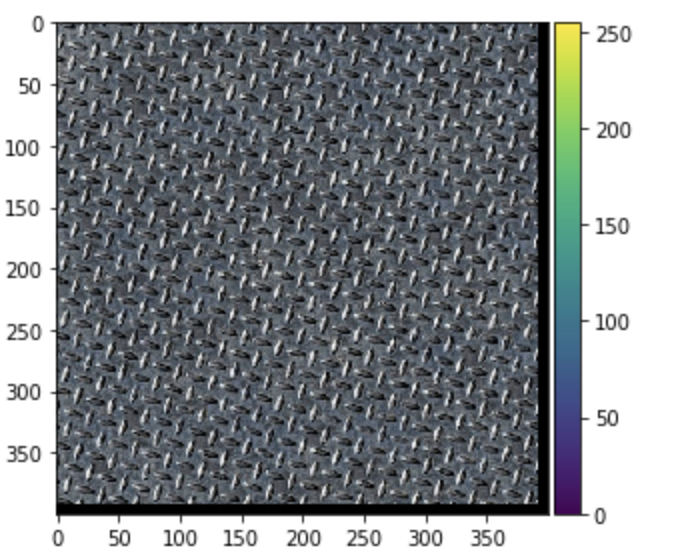

On the left is the style and the right is the resulting generated image:

Image Quilting

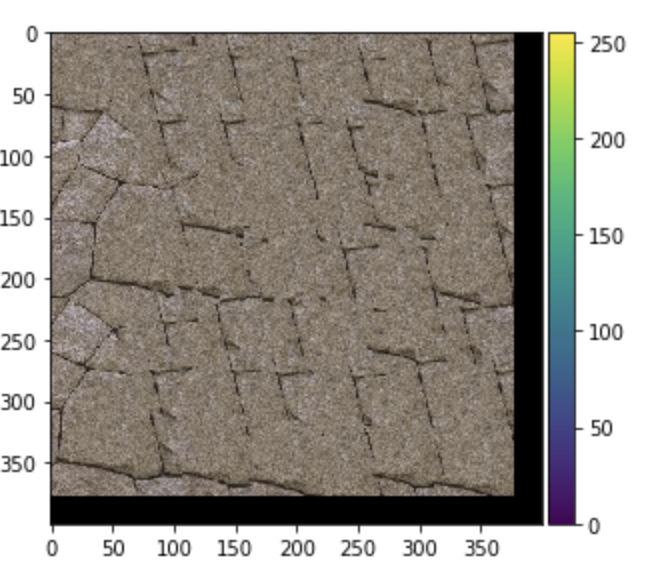

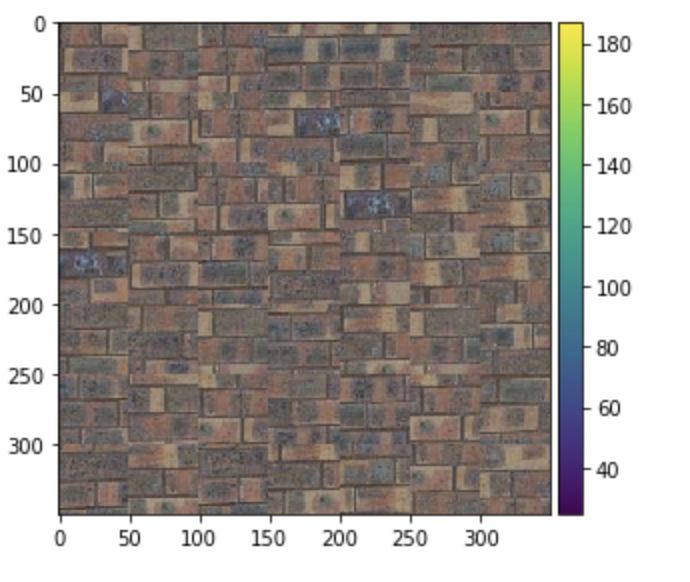

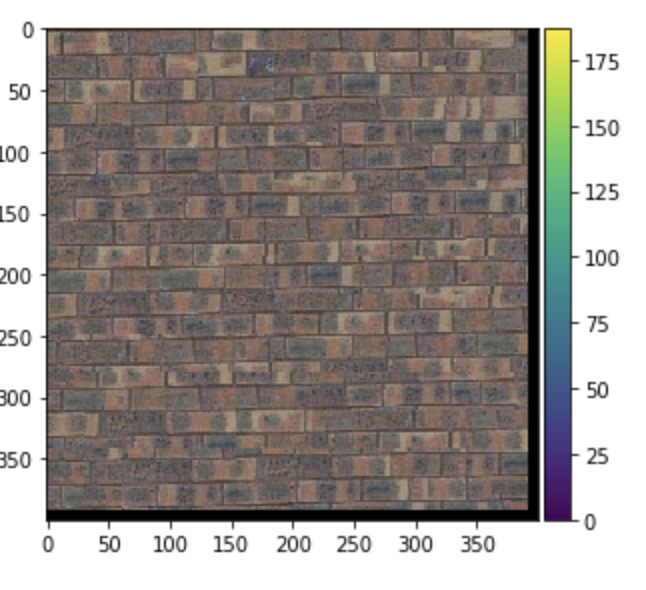

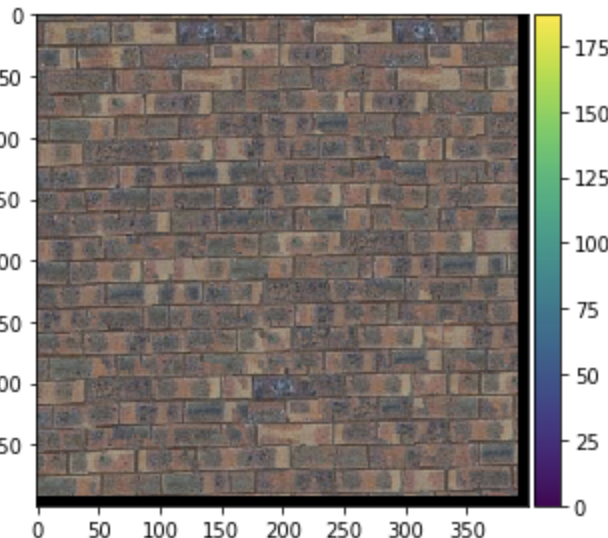

Comparing the random, overlapping, and seam-finding methods on one texture sample.

Here are some more examples

Image synthesis works by first randomly picking an initial patch. It then fills in new patches that slightly overlap the existing patches to the left and/or top. To find the best patch for each position, it scans the sample and picks the one with the lowest sum of squared differences (SSD) over the overlap.
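The patch search described above can be sketched as a brute-force scan. This is a simplified illustration that assumes a grayscale sample stored as a 2D NumPy array and a binary `mask` marking the overlap region; `best_patch` and its parameters are hypothetical names.

```python
import numpy as np

def best_patch(sample, target, mask, patch_size):
    """Return the top-left corner and cost of the sample patch with the lowest SSD.

    target: the patch-sized region of the quilt already filled in.
    mask:   1 where target holds overlap pixels to match, 0 elsewhere.
    """
    height, width = sample.shape
    best_cost, best_pos = np.inf, None
    for i in range(height - patch_size + 1):
        for j in range(width - patch_size + 1):
            candidate = sample[i:i + patch_size, j:j + patch_size]
            cost = np.sum(((candidate - target) * mask) ** 2)  # SSD on overlap only
            if cost < best_cost:
                best_cost, best_pos = cost, (i, j)
    return best_pos, best_cost
```

For a patch with neighbors on both sides, `mask` would be 1 along the top rows and left columns and 0 in the interior.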

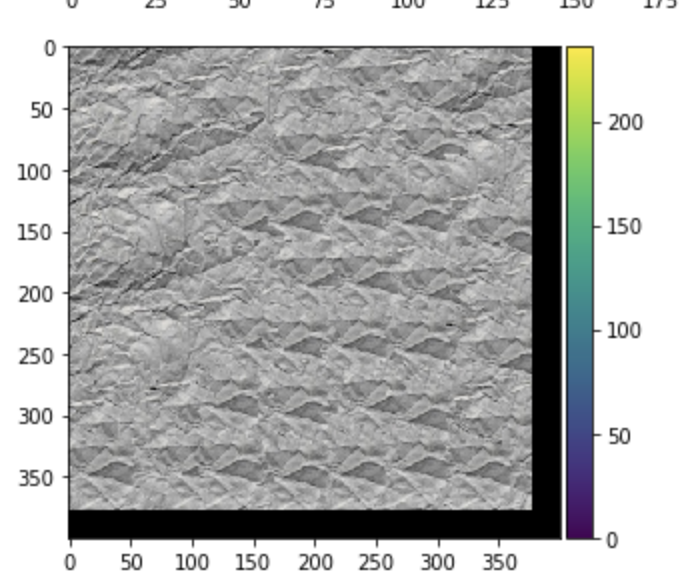

Texture Transfer Examples

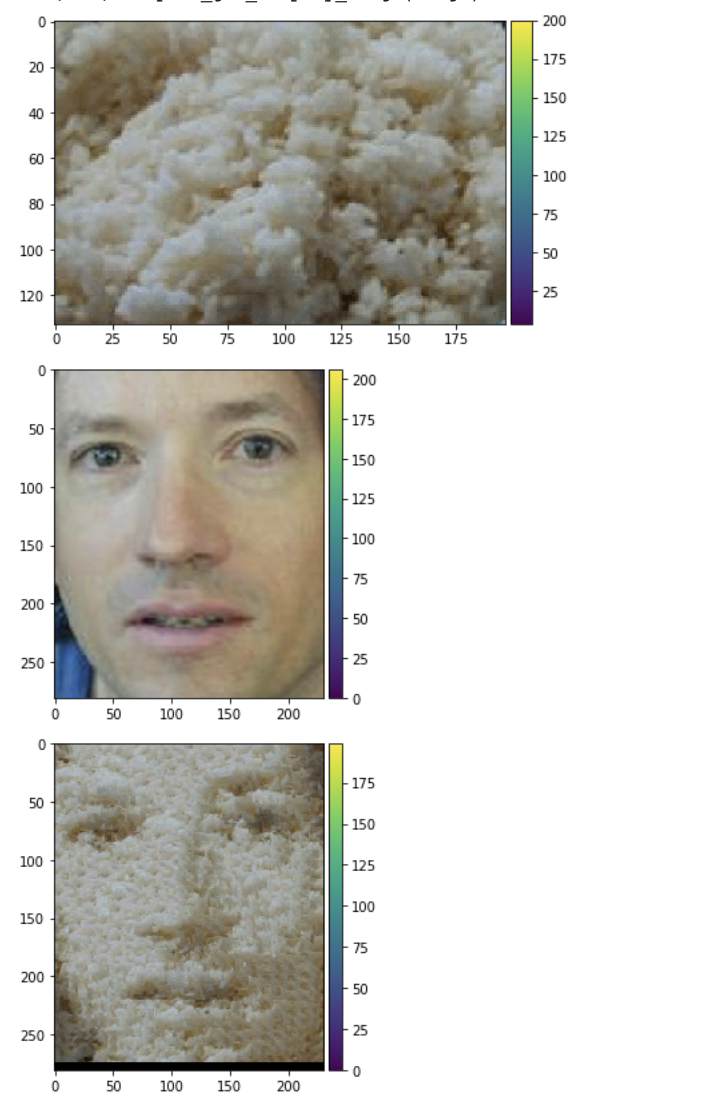

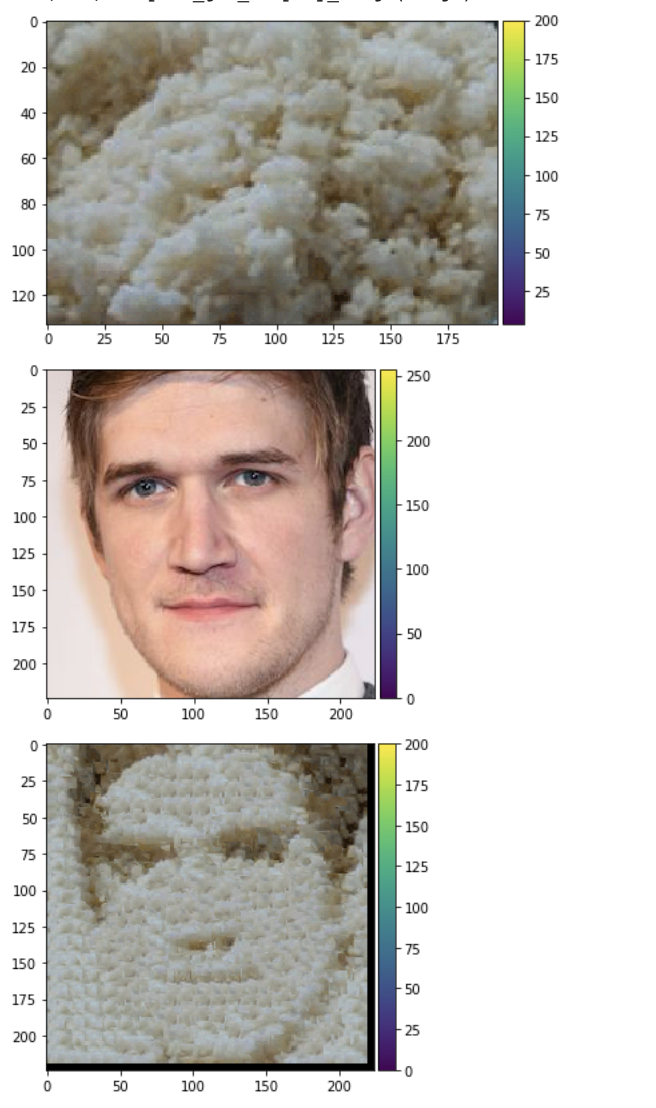

Here is an example with Bo Burnham

Texture transfer works by running texture synthesis with an additional SSD cost that compares each candidate patch with the corresponding region of the target image. Because each patch is compared with both the quilt and the target image, the result looks like the person but is rendered in the texture.
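A minimal sketch of that combined cost is shown below. The blending weight `alpha` is an assumption borrowed from the usual formulation of texture transfer; how the two terms are actually balanced in this implementation is not stated here, and the function name is illustrative.

```python
import numpy as np

def transfer_cost(candidate, quilt_patch, overlap_mask, target_patch, alpha=0.5):
    """Blend the overlap SSD (match the quilt) with the target SSD (match the image).

    alpha close to 1 favors seamless texture; close to 0 favors resemblance
    to the target image. ASSUMPTION: a simple linear blend of the two terms.
    """
    overlap_ssd = np.sum(((candidate - quilt_patch) * overlap_mask) ** 2)
    target_ssd = np.sum((candidate - target_patch) ** 2)
    return alpha * overlap_ssd + (1.0 - alpha) * target_ssd
```

Each candidate patch from the texture sample is scored with this cost, and the lowest-cost patch is placed in the quilt.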
