I used 24 points to track the video, as shown in the output video marked by the small blue rectangle.

For each frame in the picture, I used the tracked points to compute a matrix to transfer their coordinates from 2d to 3d in based on the lower left corner(0, 0, 0), then use this matrix to compute the image pixel locations for the cube locations

The model I used is a pretrained SqueezeNet model trained on ImageNet, since it's smaller and much faster to train

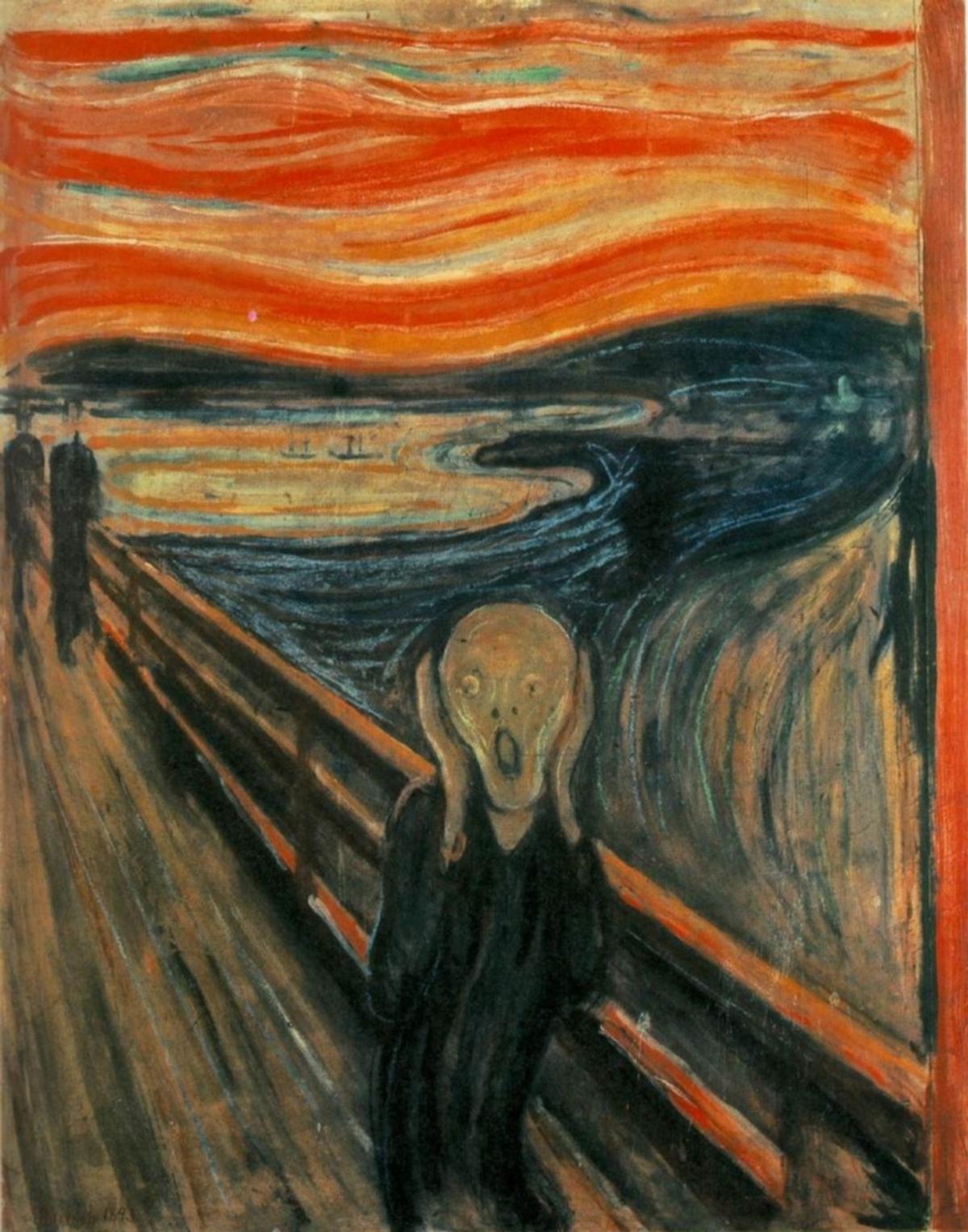

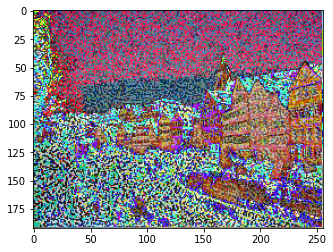

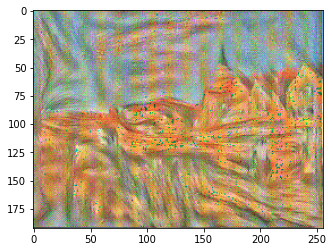

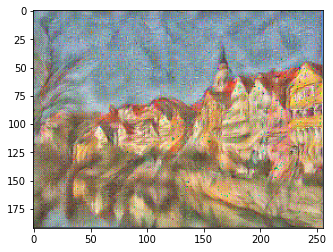

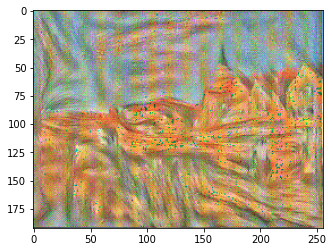

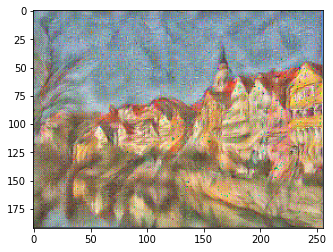

I used the 3th layer in the model for the content layer, and 1, 4, 6, 7th layers for the style layers.

For the style layers, since the first few layers catch much more detailed information, I decided to give them a larger weights depends on specific pictures

I used the formula mentioned in the paper to calculate the content loss and style loss and update the loss each time

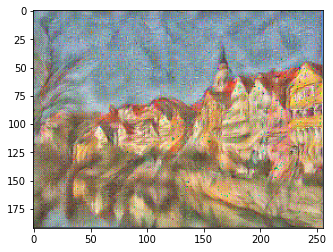

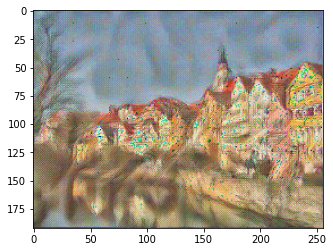

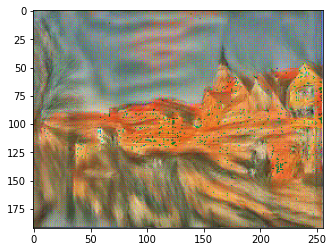

I tuned for the number of steps for the optimizer to update the image, it seems 100-150 steps with learning rate 3 would be a good fit, here are a few contrasts on the final results

I tuned for the learning rate with 0.1, 3, 10. Based on the comparison, 3 seems to be the best results