GameboyAir: A Camera-based User Interface for Cursor Control and Gaming

Authors:

- Zhibo Fan

- Tzu-Chuan Lin

For more details, please check our paper.

For the implementation details, please check our code.

Abstract

In this project, we propose GameboyAir, a mouse-free and keyboard-free user interface library for laptops that supports cursor control and gaming. GameboyAir can run on any laptop with a camera and controls the mouse cursor and keyboard input based on detected hand gestures and movements. We use MediaPipe for hand keypoint detection and tracking, then create higher-level features and build classifiers on top of the detected keypoint coordinates. With the gesture sequence and the keypoint coordinate sequence, we can move the cursor, raise mouse events, and simulate keyboard input for various applications. It is worth mentioning that GameboyAir is a framework rather than an application, so developers can easily create new applications based on it.

Method

MediaPipe Hand

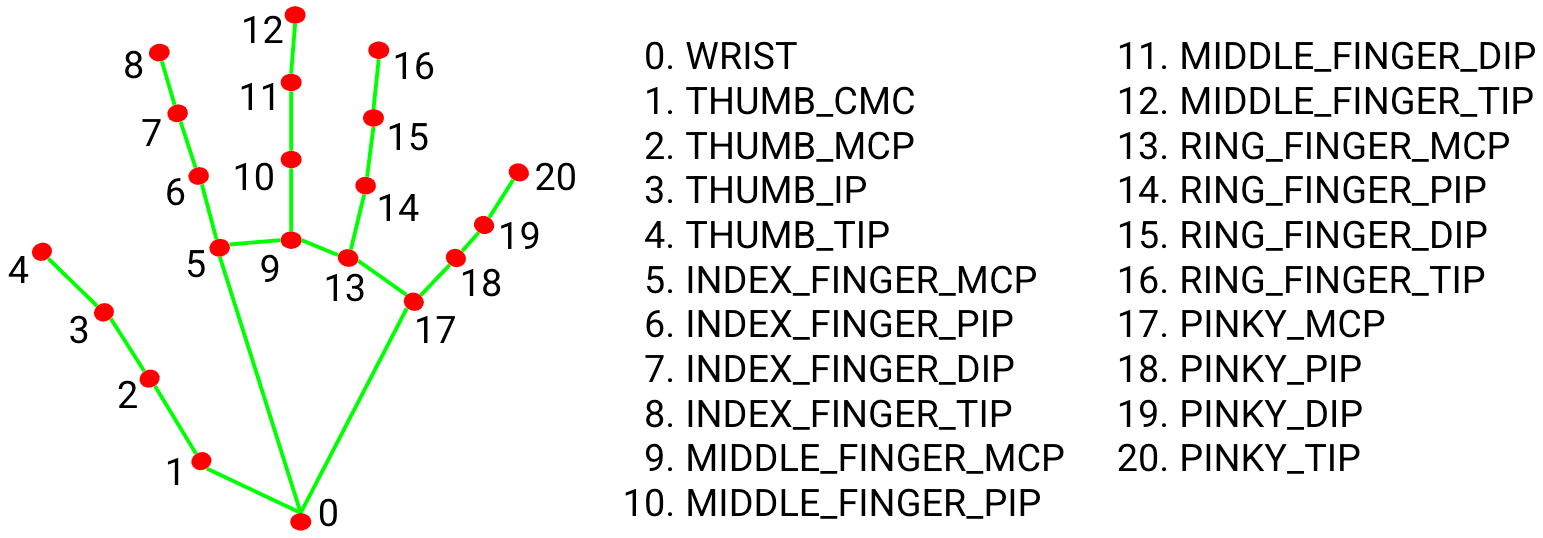

In this project, we use Google's MediaPipe Hand solution to detect the landmarks of the hand.

The 21 detected keypoints are shown in the accompanying figure.
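As a minimal sketch of how the 21 keypoints might be read out, assuming the legacy `mediapipe.solutions.hands` Python API: the helper names `landmarks_to_points` and `detect_keypoints` are hypothetical and are not taken from the project code.

```python
def landmarks_to_points(hand_landmarks):
    """Convert one MediaPipe hand result into a list of (x, y, z) tuples."""
    return [(lm.x, lm.y, lm.z) for lm in hand_landmarks.landmark]


def detect_keypoints(rgb_frame, hands):
    """Run a MediaPipe `Hands` object on an RGB frame.

    Returns the keypoints of the first detected hand, or None if no hand is found.
    """
    results = hands.process(rgb_frame)
    if not results.multi_hand_landmarks:
        return None
    return landmarks_to_points(results.multi_hand_landmarks[0])


# Typical setup (requires the `mediapipe` package and an RGB camera frame):
#   import mediapipe as mp
#   hands = mp.solutions.hands.Hands(max_num_hands=1)
#   points = detect_keypoints(rgb_frame, hands)
```

Passing the `Hands` object in as an argument keeps the helper easy to exercise without a camera, since any object with a compatible `process` method will do.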

Heuristic Finger Descriptor

After the keypoints of the hand are detected, we use this information to construct the finger descriptors. We construct two types of finger descriptor, based on 1) distances and 2) 3D angles.

For the distance-based method, we use the distances between keypoints (knuckles) in the (x, y) plane to determine whether a finger is straight or bent.
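A minimal sketch of a distance-based descriptor follows. The specific rule (a finger counts as straight when its tip is farther from the wrist than its second joint) and the `ratio` parameter are assumptions made for illustration, not necessarily the rule used in the project. The landmark indices follow MediaPipe's hand model, where 0 is the wrist and each finger has four consecutive keypoints.

```python
import math

# MediaPipe hand landmark indices: 0 is the wrist; each finger has four keypoints.
WRIST = 0
FINGER_JOINTS = {
    "thumb": (1, 2, 3, 4),
    "index": (5, 6, 7, 8),
    "middle": (9, 10, 11, 12),
    "ring": (13, 14, 15, 16),
    "pinky": (17, 18, 19, 20),
}


def is_straight_by_distance(points, finger, ratio=1.0):
    """Assumed rule: the finger is straight if its tip is farther from the
    wrist (in the x-y plane) than `ratio` times the distance of its second joint."""
    _, second, _, tip = FINGER_JOINTS[finger]
    wrist_xy = points[WRIST][:2]
    d_tip = math.dist(wrist_xy, points[tip][:2])
    d_second = math.dist(wrist_xy, points[second][:2])
    return d_tip > ratio * d_second
```

A rule like this is cheap but depends on the hand roughly facing the camera, and the thumb usually needs its own threshold.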

For the angle-based method, we divide each finger into 4 segments and compute the 3D angles (MediaPipe also provides (x, y, z) coordinates for each keypoint) to decide whether the finger is straight or bent.
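The angle-based idea can be sketched as follows. This sketch assumes the four segments are formed by the wrist plus the finger's four keypoints, and that a finger is straight when every angle between consecutive segments stays below a threshold; both the segment definition and the 30-degree default are illustrative assumptions.

```python
import math


def angle_between(u, v):
    """Angle in degrees between two 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    if norm == 0.0:
        return 0.0  # degenerate segment; treat it as no bend
    cos = max(-1.0, min(1.0, dot / norm))  # clamp against rounding error
    return math.degrees(math.acos(cos))


def bend_angles(chain):
    """chain: five (x, y, z) points (wrist plus the finger's four keypoints),
    giving four segments. Returns the three angles between consecutive segments."""
    segments = [tuple(b - a for a, b in zip(p, q)) for p, q in zip(chain, chain[1:])]
    return [angle_between(s, t) for s, t in zip(segments, segments[1:])]


def is_straight_by_angle(chain, max_bend_deg=30.0):
    """Assumed rule: straight if no joint bends by more than `max_bend_deg`."""
    return all(angle <= max_bend_deg for angle in bend_angles(chain))
```

Because the angles are computed in 3D, this descriptor is less sensitive to the hand being rotated away from the camera than a purely planar distance test.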

Once the finger descriptors are constructed, we feed them to the next stage, gesture recognition.

Gesture Recognition

In this project, for simplicity, we assume that only one hand appears in the camera view.

Gestures can be classified into two types: 1) static gestures and 2) dynamic gestures.

Static gestures are gestures that can be classified from a single frame. For example, victory, open palm, and fist belong to this category.

Dynamic gestures, in contrast, can only be classified by looking at a sequence of frames.

For the static gestures, we classify the gesture using if-else conditions on the finger descriptors.
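A minimal sketch of such an if-else classifier is shown here for the three example gestures named above. The exact finger combinations and the label strings are assumptions for illustration; the project's actual rules may differ.

```python
FINGERS = ("thumb", "index", "middle", "ring", "pinky")


def classify_static(straight):
    """straight: dict mapping finger name -> True if straight, False if bent."""
    up = {f for f in FINGERS if straight[f]}
    if up == set(FINGERS):
        return "open_palm"   # every finger extended
    if not up:
        return "fist"        # every finger bent
    if up == {"index", "middle"}:
        return "victory"     # only index and middle extended
    return "unknown"
```

For example, `classify_static({"thumb": False, "index": True, "middle": True, "ring": False, "pinky": False})` falls through to the `victory` branch.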

For the dynamic gestures, we treat them as a sequence of static gestures.
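One way to match a dynamic gesture against the per-frame history is sketched here. The approach (collapsing runs of identical static gestures, then comparing the tail against a pattern) and the example pattern are assumptions for illustration only.

```python
def collapse_runs(gestures):
    """Drop consecutive repeats: [a, a, b, b, a] -> [a, b, a]."""
    collapsed = []
    for g in gestures:
        if not collapsed or collapsed[-1] != g:
            collapsed.append(g)
    return collapsed


def ends_with_pattern(history, pattern):
    """True if the run-collapsed history ends with the given
    sequence of static gestures."""
    collapsed = collapse_runs(history)
    n = len(pattern)
    return n > 0 and collapsed[-n:] == list(pattern)


# Hypothetical dynamic gesture: open the hand, close it, open it again.
GRAB_RELEASE = ("open_palm", "fist", "open_palm")
```

Collapsing runs first makes the match independent of how many frames each static gesture lasts.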

Without using any complex machine learning models, our carefully justified heuristic-based classifiers work well throughout the pipeline.

Cursor Control and Kalman Filtering

We noticed that if we directly use the keypoint detection results from MediaPipe, the cursor is not stable enough to provide a good user experience.

Therefore, we apply Kalman filtering, a technique that is common in the object tracking field. With Kalman filtering, we can remove the measurement noise that the keypoint prediction introduces into the cursor movement trajectory.
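To illustrate the smoothing step, here is a minimal sketch of a Kalman filter applied independently to the x and y coordinates. It assumes a simple random-walk motion model and hand-picked noise variances; the class names are hypothetical, and the filter used in the project may use a different state model (for example, one that includes velocity).

```python
class ScalarKalman:
    """1D Kalman filter with a random-walk motion model (assumed for this sketch)."""

    def __init__(self, process_var=1e-3, measurement_var=1e-1):
        self.q = process_var      # how much the true position may drift per frame
        self.r = measurement_var  # how noisy the detected keypoint is
        self.x = None             # current state estimate
        self.p = 1.0              # variance of the estimate

    def update(self, z):
        if self.x is None:        # initialize from the first measurement
            self.x = z
            return self.x
        self.p += self.q                  # predict: uncertainty grows
        k = self.p / (self.p + self.r)    # Kalman gain
        self.x += k * (z - self.x)        # correct toward the measurement
        self.p *= (1.0 - k)               # uncertainty shrinks after the update
        return self.x


class CursorFilter:
    """Filters the x and y coordinates of a keypoint independently."""

    def __init__(self, **kwargs):
        self.fx = ScalarKalman(**kwargs)
        self.fy = ScalarKalman(**kwargs)

    def update(self, x, y):
        return self.fx.update(x), self.fy.update(y)
```

A larger `measurement_var` relative to `process_var` gives a steadier but slower cursor; a smaller one makes the cursor more responsive but lets more jitter through.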

Results

Framework Design

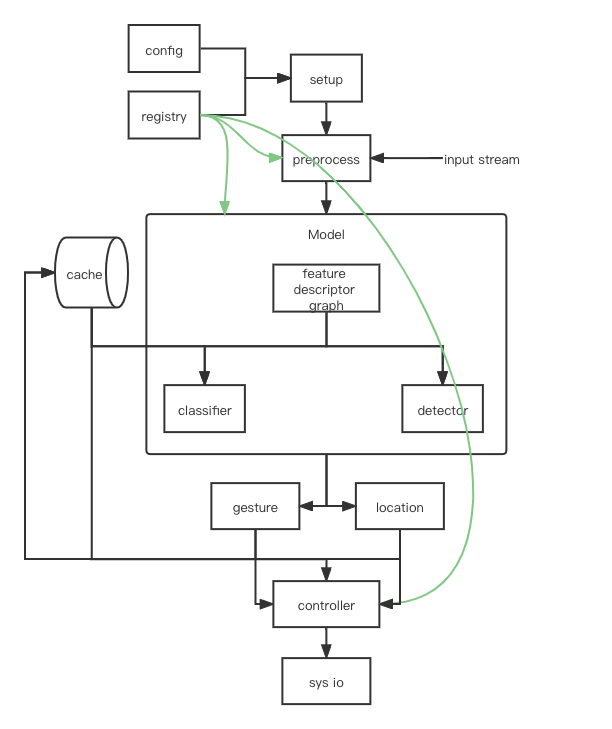

An overview of our framework is shown in the figure above.

First, the pipeline is initialized based on the configuration file and registered modules, then the input video frames are preprocessed and fed into the model part.

The model consists of a feature descriptor graph, in which a feature descriptor may rely on the outputs of other feature descriptors. For example, the default feature graph consists of the MediaPipe API and the finger descriptor, where the latter depends on the outputs from MediaPipe.
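The dependency structure described above can be sketched with a small graph runner. The function name `run_descriptor_graph` and the `(dependencies, function)` layout are hypothetical choices for this example and do not reflect the framework's actual interface; the ordering relies on Python's standard `graphlib` module (Python 3.9 or later).

```python
from graphlib import TopologicalSorter


def run_descriptor_graph(descriptors, frame):
    """descriptors: name -> (list of dependency names, function).

    Each function receives the frame and a dict holding the outputs of its
    dependencies. Returns a dict with the output of every node.
    """
    graph = {name: deps for name, (deps, _) in descriptors.items()}
    outputs = {}
    # static_order() yields each node only after all of its dependencies.
    for name in TopologicalSorter(graph).static_order():
        deps, fn = descriptors[name]
        outputs[name] = fn(frame, {d: outputs[d] for d in deps})
    return outputs
```

With a layout like this, a new descriptor is added by registering one more entry that names the nodes it depends on.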

Every output from the nodes in the feature descriptor graph is fed into the classifier and the detector, which yield the gestures and locations used to control the cursor and raise mouse events.

A Kalman filter is applied in the detector module; it treats the keypoints as measurements and predicts the final location.

In addition, an external memory cache stores a fixed-length queue of the output from the modules, which facilitates sequence-based modules like the dynamic gesture classifier and relative control of the cursor.
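A fixed-length history of this kind can be sketched with `collections.deque`. The class name `MemoryCache`, its methods, and the default length of 30 are hypothetical and chosen only for this example.

```python
from collections import deque


class MemoryCache:
    """Keeps the most recent outputs of each module in a fixed-length queue."""

    def __init__(self, maxlen=30):
        self.maxlen = maxlen
        self.queues = {}

    def push(self, module, output):
        # A deque with maxlen silently drops the oldest item when full.
        self.queues.setdefault(module, deque(maxlen=self.maxlen)).append(output)

    def recent(self, module, n=None):
        """Return the stored outputs of a module, oldest first (last n if given)."""
        items = list(self.queues.get(module, ()))
        if n is None:
            return items
        return items[max(0, len(items) - n):]
```

For relative cursor control, a module could read the last two stored positions with `recent("detector", 2)` and move the cursor by their difference.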

Cursor Control

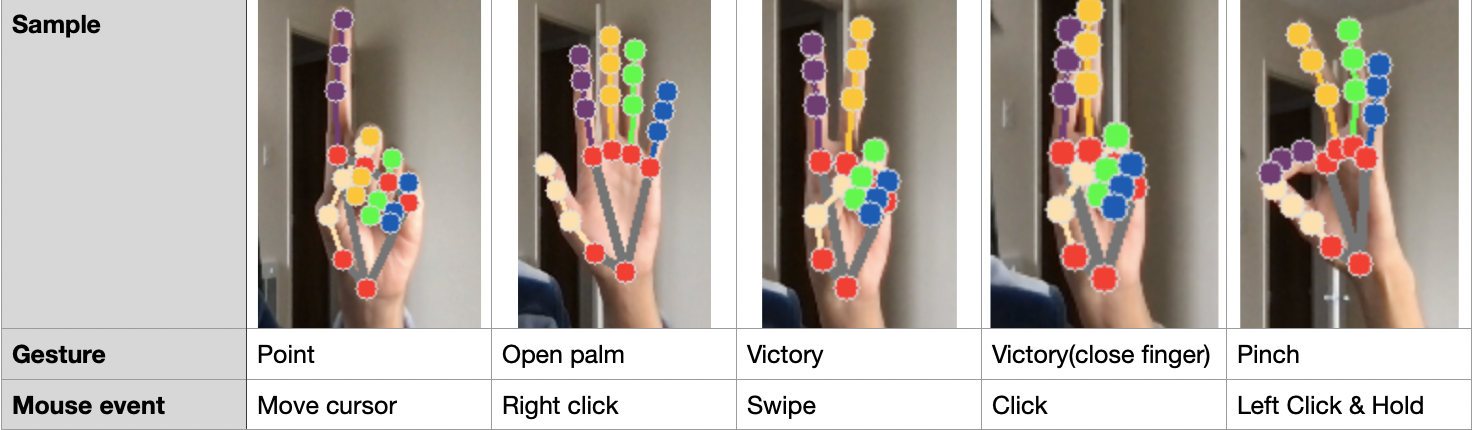

For cursor control, we support the gestures shown in the table above.

To avoid activating the same event multiple times (most gestures last for more than one frame), we borrow an idea from the literature called single-time activation. Specifically, we only raise an event if the current frame's gesture does not appear in the most recent frames of the gesture history, say the last 5 consecutive frames.
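The single-time activation check can be sketched as below. The function name `should_raise` is hypothetical; the window of 5 frames follows the example given above.

```python
def should_raise(current, history, window=5):
    """Return True if `current` does not occur in the last `window` gestures.

    history: gestures of the previous frames, oldest first, not including
    the current frame.
    """
    recent = list(history)[-window:] if window > 0 else []
    return current not in recent


# Usage: a gesture held over several frames triggers its event only once.
frames = ["none"] * 6 + ["click"] * 4 + ["none"] * 6 + ["click"] * 2
history, events = [], []
for gesture in frames:
    if gesture != "none" and should_raise(gesture, history):
        events.append(gesture)
    history.append(gesture)
```

In this usage example the two separate bursts of `click` frames each raise a single event, because the second burst starts more than 5 frames after the first one ended.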

Gaming

We currently support 4 games (Greedy Snake, Flappy Bird, Super Mario and NS Shaft) in our project.

For Greedy Snake, we use the orientation to determine the movement of the snake.
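As a rough sketch of how an orientation could be turned into a snake direction, the example below quantizes a 2D pointing vector into one of four directions. It assumes the orientation is measured from the base of a finger to its tip in image coordinates, with y growing downward; the function name, the dead zone, and the choice of keypoints are all assumptions, not the project's actual mapping.

```python
def direction_to_key(base, tip, dead_zone=0.05):
    """base, tip: (x, y) image coordinates with y growing downward.

    Returns "left", "right", "up", "down", or None if the vector is too
    short to give a reliable direction.
    """
    dx = tip[0] - base[0]
    dy = tip[1] - base[1]
    if max(abs(dx), abs(dy)) < dead_zone:
        return None
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"
```

If the camera image is mirrored before processing, the left and right cases would need to be swapped.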

For Flappy Bird, we use the open palm gesture to flap the bird.

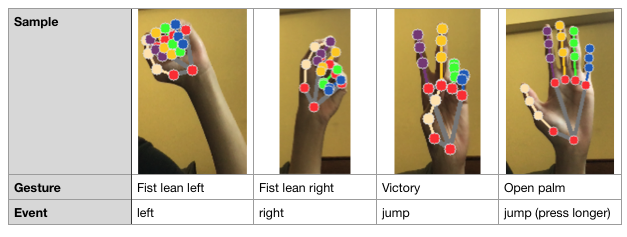

For Super Mario, we define the 4 kinds of gestures shown in the accompanying table.

Finally, for NS Shaft, we adapt the idea from Super Mario for moving left and right.

Demo videos

- Cursor control

- Flappy Bird

- NS Shaft

- Super Mario