|

|

|

|

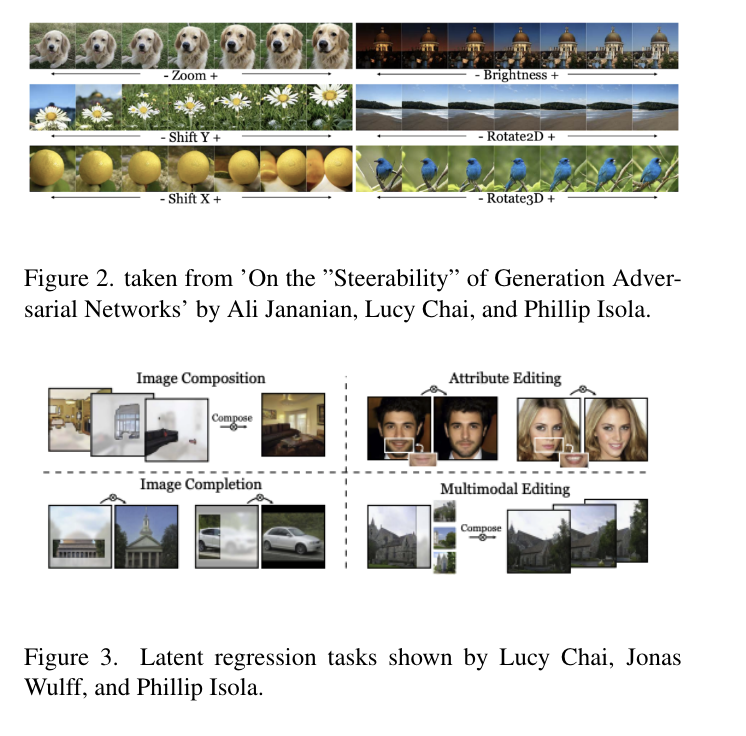

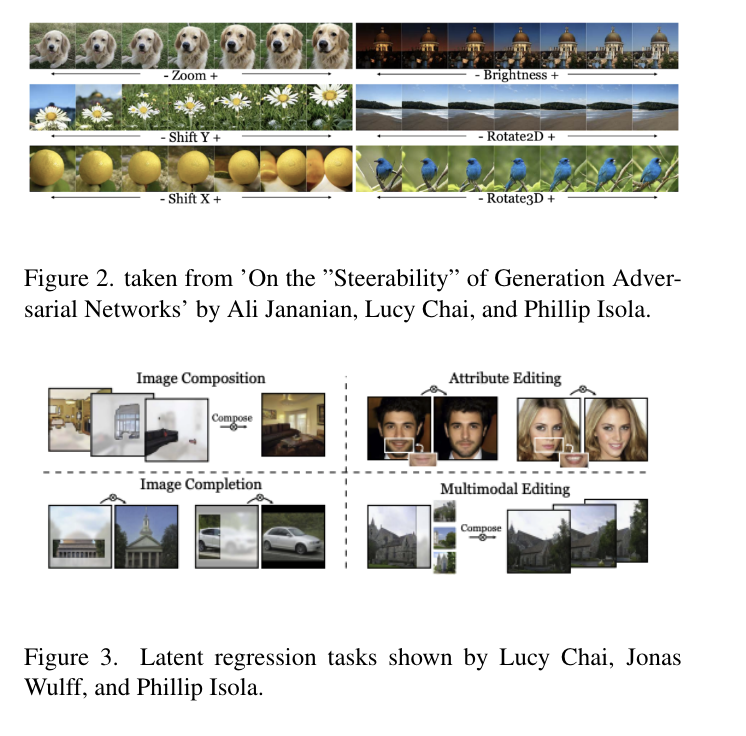

Recent work has shown that Generative Adversarial Network (GAN) models are able to learn semantically rich representations in the latent space. As shown in by figure 2, manipulating the latent code of generative adversarial models allows editing attributes such as zoom, shift, brightness, and rotation. Furthermore, Lucy et al. (Figure 3) show that latent regression allows you to tackle tasks such as composition and inpainting.

Conditional GANs rely on labelling from human or pre-trained models. This creates a bottleneck for scaling conditional GANs. If we wanted to train GANs to produce realistic images of cars of different classes, we need class-level supervision which is expensive and time-consuming to obtain. Recent advancements in deep learning have built off of large, unlabeled datasets by leveraging self-supervised tasks. In this work, we aim to use unconditional pre-trained GANs, which can be trained on any data distribution without any supervision, to generate task-conditional images. Effective methods for conditional generation using pre-trained GANs will allow us to leverage large unlabeled datasets for better performance on conditional tasks.

In our experiments, we introduce 3 tasks for benchmarking the performance of our algorithms. Additionally, we present trade-offs between sample diversity and performance on the task. Sample diversity is qualitatively assessed by the variety of backgrounds, car models, poses, and colors in the sampled images while the performance on the task is quantified by the task loss.

|

|

We are able to leverage CLIP's multi-modal encodings to condition images on text queries, such as "racecar". We use the cosine distance between the encoding of the generated image and the encoding for the target word as a loss function and optimize the latent vector to minimize the loss. We show that optimizing the loss function directly leads to color artifacts and oversatured, unrealistic samples which is corrected by using a prior in the latent space.

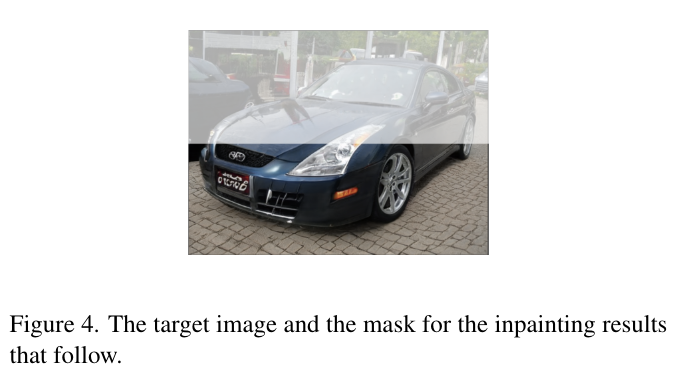

In the inpainting task, we are given a target image and a mask (shown in Figure 4), and are tasked with creating a realistic images that matches the unmasked region of the target. This task allows us to strongly condition on target images, requiring the samples to match the unmasked target while keeping diversity in the masked-out region. For the loss function, we mask the generated and target images and use LPIPS loss (taking L2 distance between downstream VGG encodings for both images).

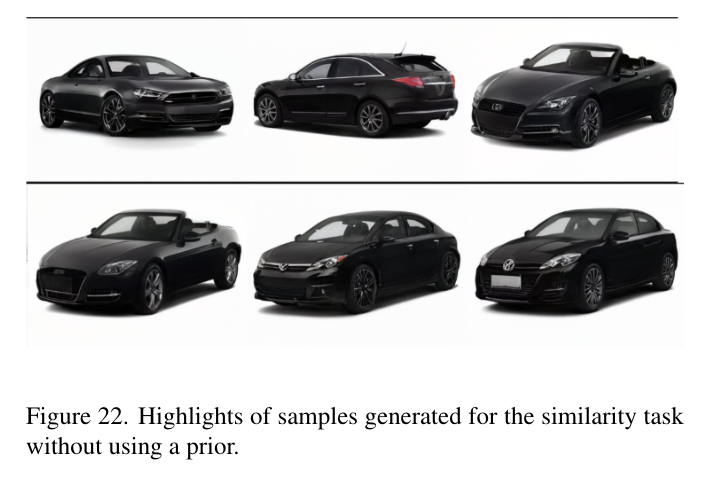

Lastly, we present a similarity task, which is a special case of inpainting with no masked-out region. Although latent inversion achieves optimal loss on this task, we aim to show a trade-off between similarity and diversity in samples using different prior models. We use Figure 5 as the target for this task.

Denoising diffusion models are trained to directly estimate the gradient of log-likelihood of a data distribution. In other words, diffusion models output the direction of higher likelihood. Diffusion models have state of the art sample quality when trained directly on images. Rather than applying it on 512x512 data, we train a latent space diffusion model to use as a prior for task-conditioned sampling (algorithm detailed in methods).

|

|

Conditional GANs perform similar tasks at much better fidelity level; however, they are limited by the amount of supervised data. Hence, they are not able to scale to complex datasets such as scenes or regimes where labelling is too expensive or noisy.

Latent optimization serves as the backbone for this work. We show that latent optimization by itself is not sufficient for tasks such as inpainting or CLIP text supervision. Furthermore, As shown in Figure 6, optimizing a task does not enforce realism. In any supervised signal from a task, there may be artifacts such as higher contrast images preferred, which, when taken to the extreme, produces blown out and unrealistic samples. Naturally, this brings out the need for priors that constrain the optimization results to the data manifold, which is the exact task of diffusion models.

Latent regression trains a regressor to predict the latent code of a given image. Furthermore, Lucy et al. have shown it to generalize to images not sampled from the GAN, allowing to perform image composition. The pitfall of this method is that the regression objective fails in multimodal settings, where the loss minimal target is in a low density area. By sacrificing the forward pass runtime of regression for looped optimization, we are able to overcome multimodality of the latent space.

|

To train the diffusion model, we used a continuous time denoising diffusion approach. We trained a diffusion model in W and Style latent spaces of Style-GAN2 (car images) by repeatedly sampling a W vector from the generator's mapping network, adding noise into the vector, and applying denoising (Refer to Dhariwal et. al for full training procedure).

Specifically, we use a network with 5000 units and 7 layers, using group normalization, exponential moving averaging for the saved model, and normalizing the latent vector according to different modes (more in results).

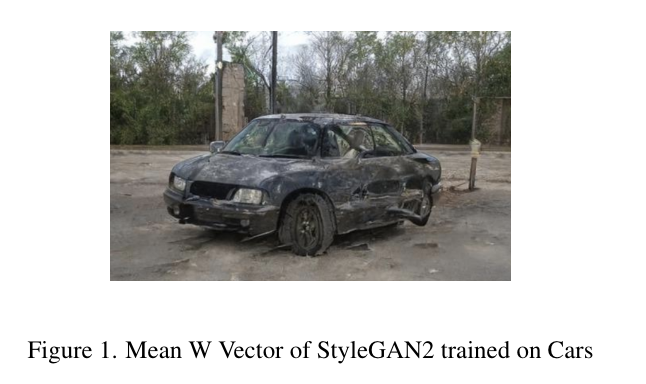

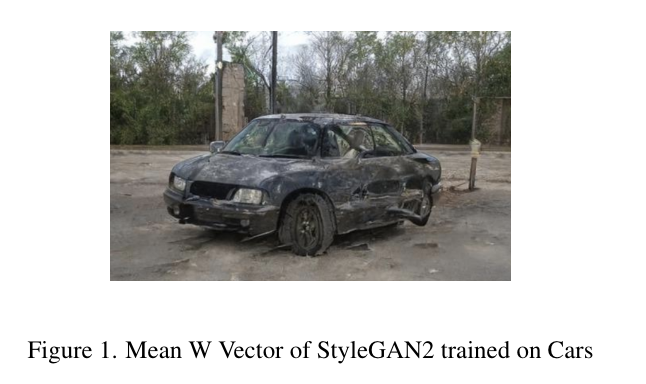

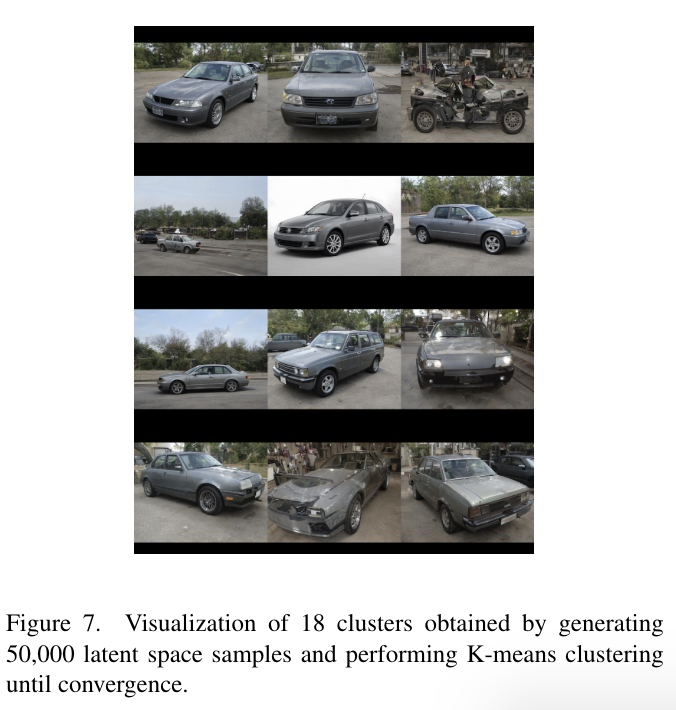

Other than proposing a diffusion prior, we showcase mean and cluster baseline priors. The mean prior consists of adding a loss into the optimization objective for the L2 distance of the latent from the mean latent. This approximates the latent distribution as a gaussian, which has been shown incorrect in Figure 1. Additionally, we generalize the mean prior into a cluster prior, which adds an L2 distance loss to the nearest cluster, which are pre-computed using k-means on 50,000 latent samples (Figure 7).

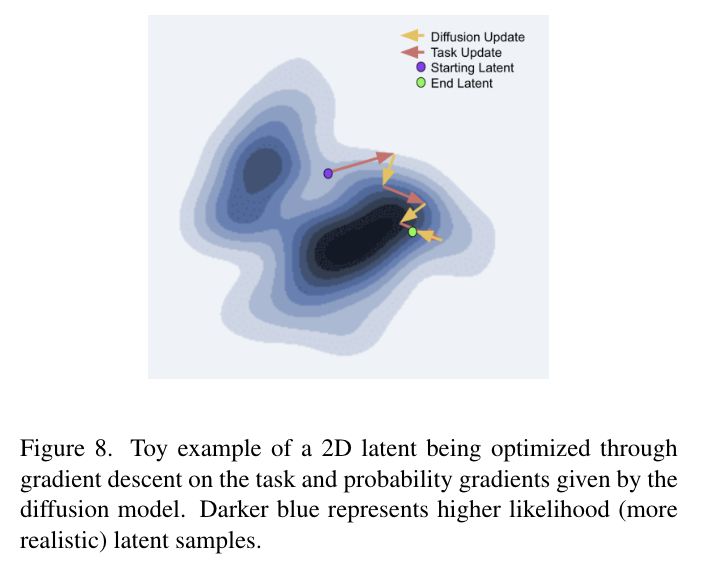

We propose an algorithm for conditional sampling using denoising diffusion. As illustrated in figure 8, the algorithm consists of first sampling a latent vector from the generator's mapping network. Then, we alternate between performing a task update and adjusting the latent vector according to the diffusion model's forward pass. More specifically, we perform 10 diffusion update steps for each task update. Diffusion models also take in a time variable which corresponds to the percent noise in the data. Empirically, we find that decreasing noise from 5\% to 0\% over each 10 diffusion steps enforces the prior well. For specific diffusion algorithm implementation, refer to the code base.

|

|

|

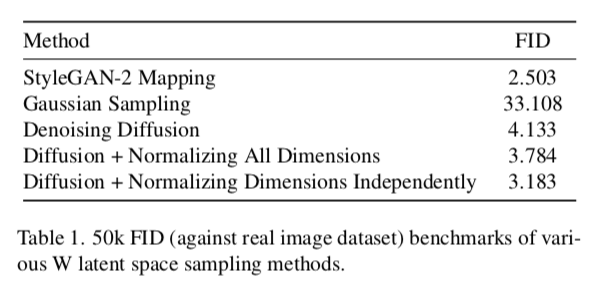

We first inspect the ability of the diffusion model to produce latent vector samples without any task conditioning. We use a predictive-corrective sampler proposed for diffusion models by Dhariwal and Nichol. We record the FID values of the diffusion latents against real dataset images in Table 1.

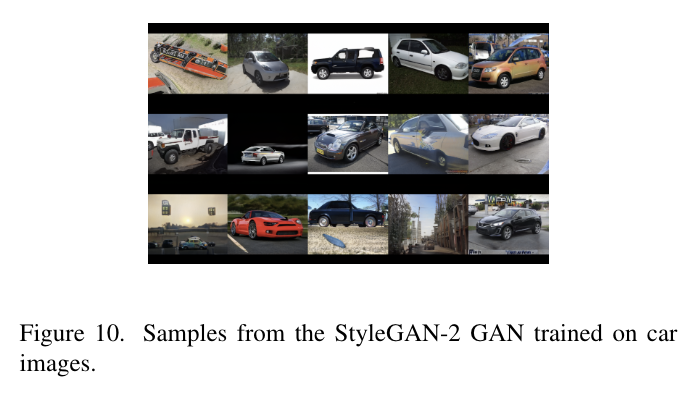

For visual inspection, we provide samples from the best diffusion model in Figure 9 and samples from StyleGAN in Figure 10. The diffusion samples perform well, especially given that the generator samples themselves sometimes have poor quality.

|

|

|

|

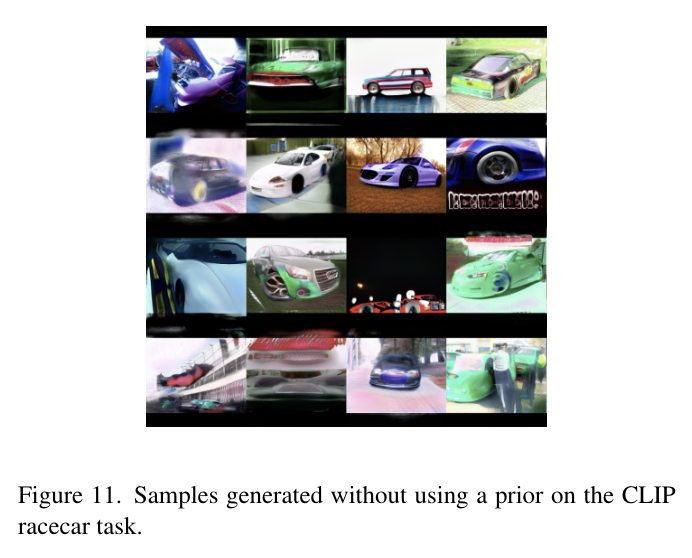

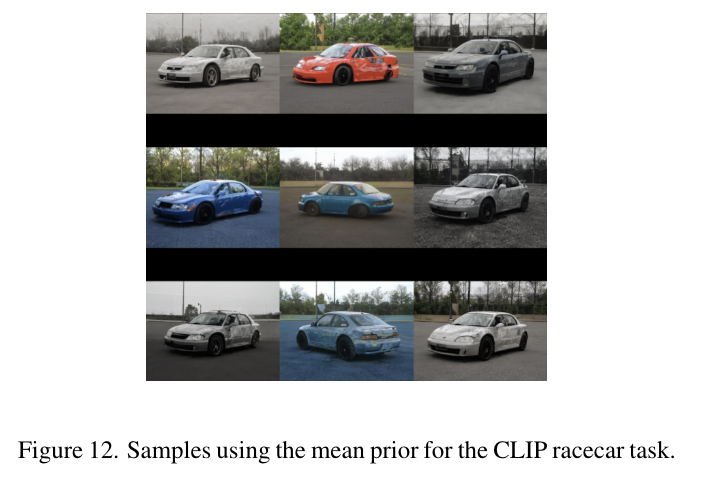

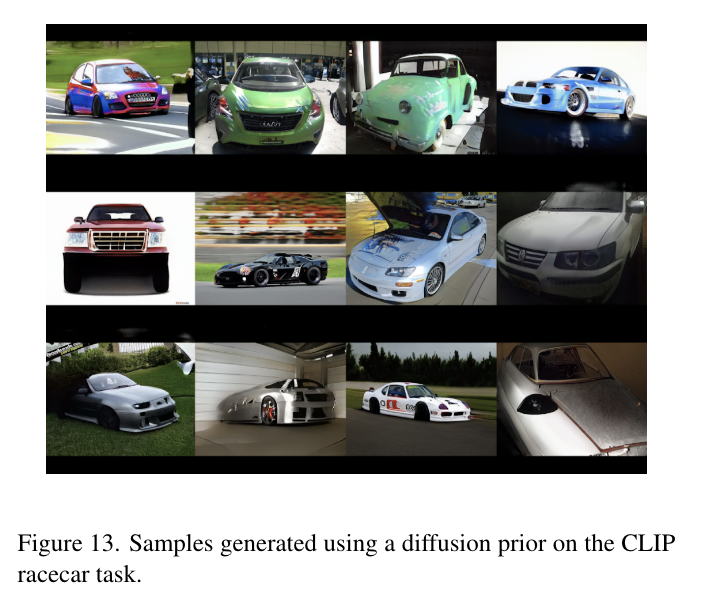

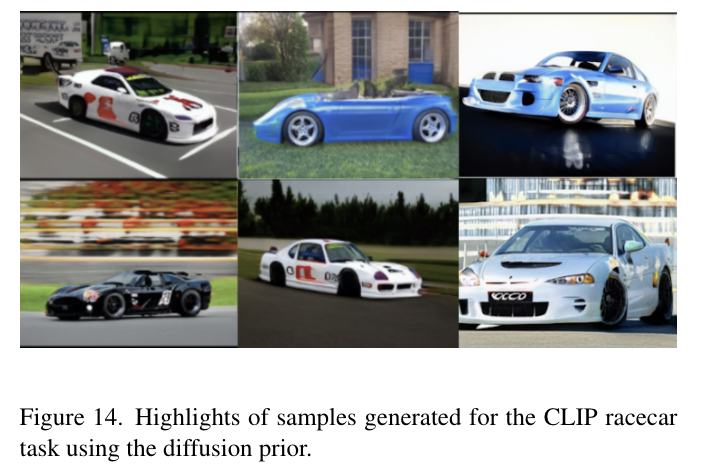

For the CLIP embedding task, we present the results of optimization without any prior in Figure 11. All images are high in contrast and saturation which is an artifact of CLIP’s learned embedding. Since this is not a realistic (as given by the original dataset) attribute, a successful prior would remove the artifacts. In figure 12, we see the samples using a mean prior, which more or less has the same car colored differently. Although this creates a realistic image, it doesn’t resemble a race car in a natural image or have sufficient diversity. In Figure 13, we see the results of sampling with the diffusion prior. The diffusion prior doesn’t always produce realistic images; however, has much better car and background diversity. Furthermore, as seen in the highlights in Figure 14, the best samples perform the task and capture a lot more diversity than any other result.

|

|

|

|

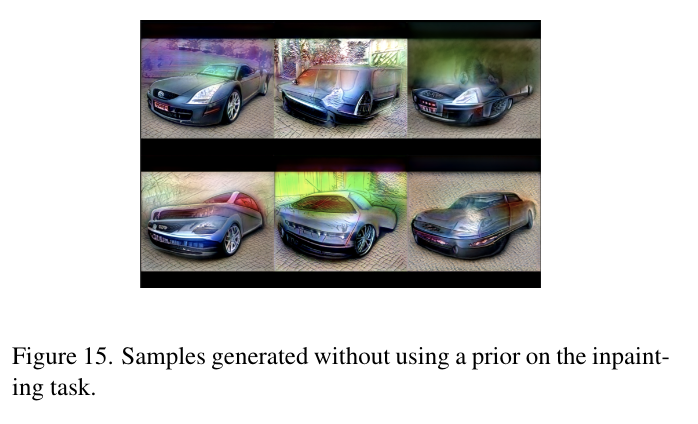

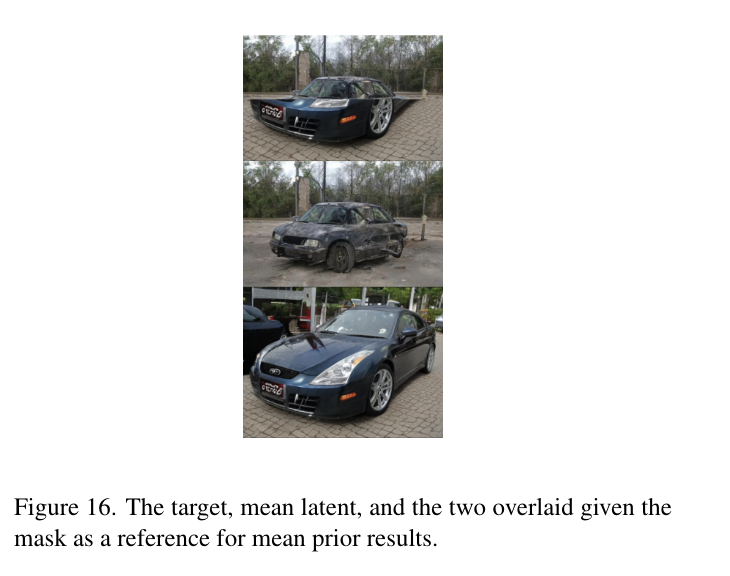

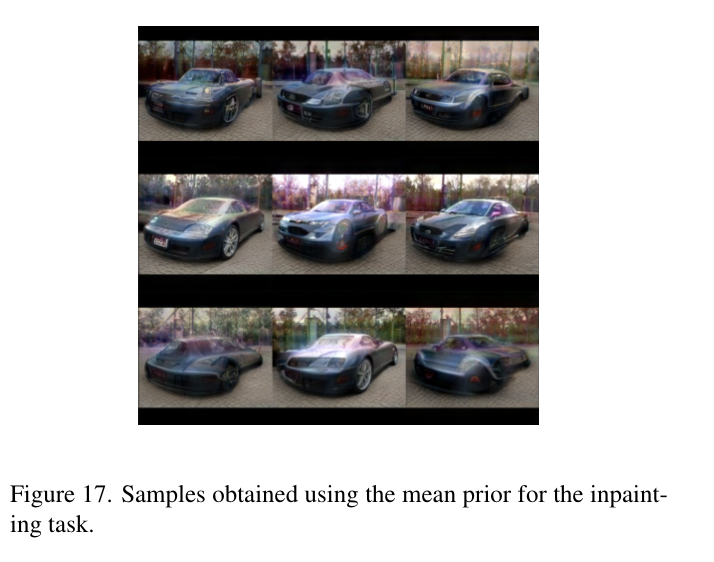

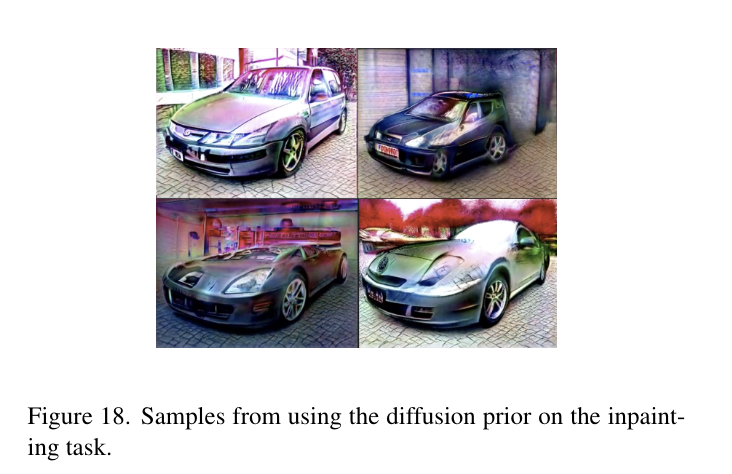

The inpainting task presents stronger and more challenging conditioning than the other two tasks, because the task loss has to force the unmasked region to match while generating fully plausible data in the masked region. To reflect this, I chose to perform the experiments in Style space, which is more expressive, higher dimensional, yet harder to control. Optimization without prior successfully matches the unmasked region however generates a mess in the masked region as shown in Figure 15. Optimization using the mean prior produces images similar to the overlay of the mean latent and target image (Figure 16); however, with more color distortion as shown in Figure 17. The diffusion results in Figure 18 have the color saturation problem, however, the content in the masked out region is more realistic than no prior. To improve results, we recommend fine-tuning the optimization procedure and training a better style-space diffusion model.

|

|

|

|

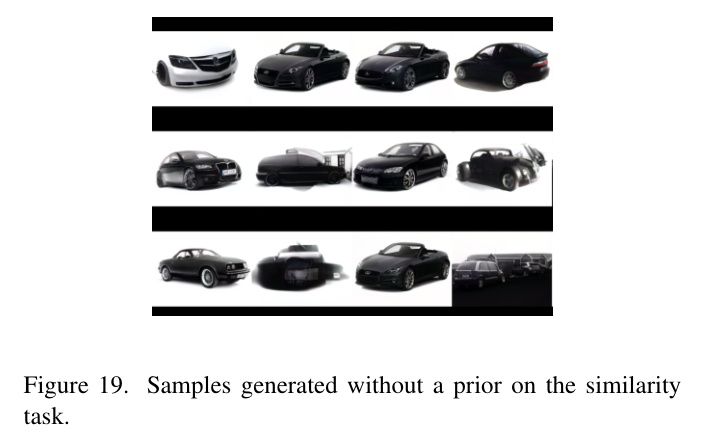

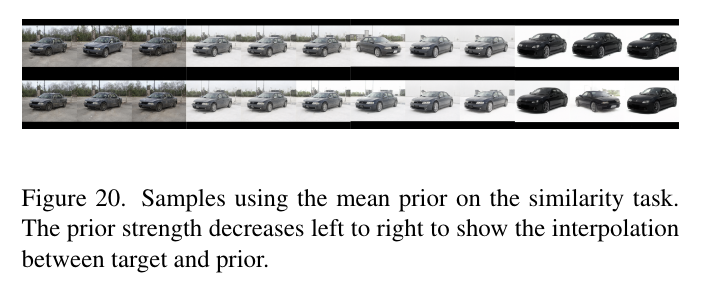

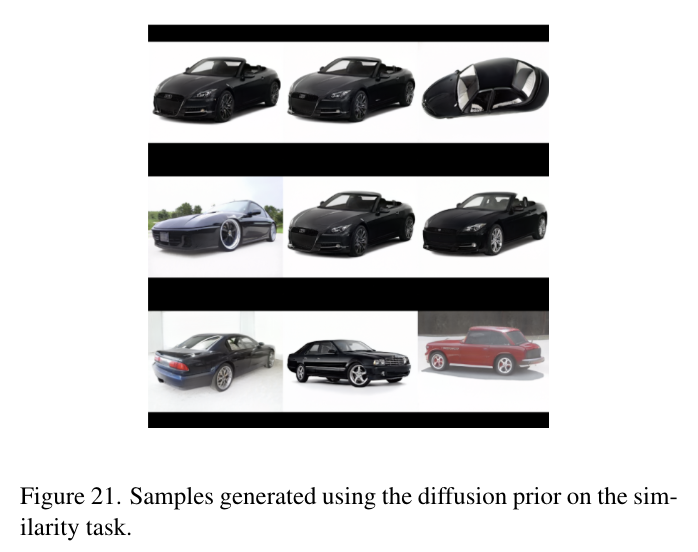

Optimization without a prior leads to poor sampling quality as seen in Figure 19. We show that the mean and cluster priors interpolate from the target to the prior modes as shown in Figure 20. Using the diffusion prior, we improve the sample quality while retaining diversity and similarity, with a batch of samples in Figure 20. The diffusion prior highlights shown in Figure 21 show different samples with very similar visual features with pose and shape diversity.

We show improvements to prior-less latent optimization in CLIP embedding and similarity tasks. We quantitatively show that diffusion networks are able to estimate W latent space density by showing that the FID of W samples from diffusion is comparable to that of the StyleGAN mapping network.

Then, we show that using both baseline and diffusion priors in the CLIP embedding task is needed to get rid of color artifacts present when optimizing latent only from CLIP guidance. Additionally, using a diffusion prior allows for better sample diversity than mean or cluster priors in the pose, color, background, and shape of the cars sampled. We show similar results in the similarity task, showing that cluster and mean priors are limited to interpolating between target and neighbor, while diffusion achieves good sample quality, diversity, and similarity.

We are not able to show convincing results of the diffusion algorithm working in style space on the inpainting task; however, we believe that bigger, ablated diffusion models and carefully tuned optimizer parameters are needed to achieve good results in style space. We also note that the inpainting task provides the most difficult conditioning.

We note that sampling the initial optimization latent randomly from the mapping network of the generator as the initial point is problematic. Since the initial starting point is a sample and the task loss only gives you a local signal, the optimization often converges to a poor local minimum. We observe that either the gradient from the task approaches 0 or the task gradients and diffusion steps cancel each other out. In practice, when starting from an old car and optimizing for “racecar”, there is no high-likelihood path in W latent space to avoid a poor local minima. This is a major issue in the projected samples and would need to be fixed by starting with noise and guiding diffusion updates using the task loss.

Training a diffusion in style space is challenging. This can be due to style having a much higher dimensionality, larger than the size of the network we can use without inductive biases in architecture due to memory limits. Other problems may be poor numerical conditioning in the style space, which we do not explore. Additionally, it may be useful to explore the random noise buffers’ (in StyleGAN-2) effect on the optimization results. Style space allows for much better expressivity, which magnifies the problems with latent optimization highlighted above, so building a prior for style space would be the most useful result to show.