Human Meshes in Olympic Videos

Nithin Chalapathi

CS294 Final Project

SID: 3032738412

Abstract

Methods for generating human meshes from static images and video data have made tremendous progress. However, many state-of-the-art methods struggle on complicated videos with multiple occlusions, multiple people, and fast motion. In this work, we generate 200 short video clips from the 2018 and 2020 Olympics and evaluate the VIBE and HMMR models on each clip. Our results are publicly available in a Box repository. Finally, we attempt to use the motion discriminator from VIBE to match pose tracks and produce a smooth track. Our method provides sub-par results, and we discuss future directions.

Olympics Data

To evaluate HMMR and VIBE, we also generate a dataset of 200 clips from the 2018 and 2020 Olympics. We specifically focus on rock climbing, snowboarding, skateboarding, and figure skating. For each clip, we run both OpenPose and AlphaPose to ensure that at least one method is able to detect a valid pose track. We run both because HMMR uses AlphaPose and VIBE uses OpenPose; by making sure at least one method can recover a track, we try to avoid biasing the results toward either model.
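The selection criterion can be sketched as follows, assuming that clips where neither detector yields a valid track are discarded and that a track counts as "valid" when a person is detected in most frames. The 80% coverage threshold and the names `has_valid_track` and `keep_clip` are hypothetical; the per-frame detections stand in for OpenPose or AlphaPose output, whose real formats are not reproduced here.

```python
from typing import List, Optional, Sequence

# Hypothetical per-frame detector output: keypoints for the tracked person,
# or None when the detector finds nobody in that frame.
Detections = Sequence[Optional[List[float]]]


def has_valid_track(detections: Detections, min_coverage: float = 0.8) -> bool:
    """True if a person is detected in at least `min_coverage` of the frames.

    The 0.8 default is an assumed threshold, not a value from our pipeline.
    """
    if not detections:
        return False
    hits = sum(1 for d in detections if d is not None)
    return hits / len(detections) >= min_coverage


def keep_clip(openpose: Detections, alphapose: Detections) -> bool:
    # Keep the clip if either detector recovers a track, so the filter does not
    # favor HMMR (which uses AlphaPose) or VIBE (which uses OpenPose).
    return has_valid_track(openpose) or has_valid_track(alphapose)
```

For example, a clip where OpenPose finds nobody but AlphaPose detects the athlete in 9 of 10 frames would be kept.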

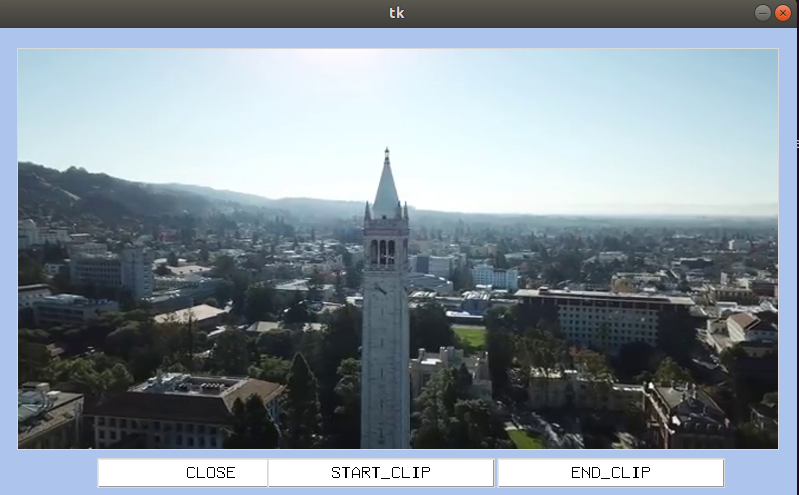

To build the clip extractor, we use PyTube to download YouTube videos. Each video is then played in a Tkinter window using OpenCV. The person clipping the video selects a start and an end time stamp, and multiple clips can be extracted from the same video. Our interface is shown in the figure.
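The time-stamp bookkeeping behind the extractor can be sketched as follows. This leaves out downloading (PyTube) and playback (Tkinter/OpenCV) and only shows how a selected start and end time map to frame indices. `Clip`, `clip_to_frames`, and `extract_all` are hypothetical names; in practice the frame rate and frame count would be read from the video, for example through OpenCV's `CAP_PROP_FPS` and `CAP_PROP_FRAME_COUNT` properties.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Clip:
    start_s: float  # start time stamp selected in the interface, in seconds
    end_s: float    # end time stamp selected in the interface, in seconds


def clip_to_frames(clip: Clip, fps: float, num_frames: int) -> range:
    """Convert a clip's time stamps into a half-open range of frame indices."""
    if clip.end_s <= clip.start_s:
        raise ValueError("end time stamp must come after start time stamp")
    start = max(0, round(clip.start_s * fps))
    # Clamp to the video length in case the end stamp overshoots the last frame.
    end = min(num_frames, round(clip.end_s * fps))
    return range(start, end)


def extract_all(clips: List[Clip], fps: float, num_frames: int) -> List[range]:
    # Several clips can be cut from the same downloaded video.
    return [clip_to_frames(c, fps, num_frames) for c in clips]
```

For instance, a clip marked from 12.0 s to 20.5 s in a 30 fps video maps to `range(360, 615)`, i.e. 255 frames (8.5 s).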

For each of the sports, we extract 50 clips; each clip is roughly 7-10 seconds. We provide a public Box link to view the videos.

Discriminator-Based Track Matching

One common problem we noticed is that OpenPose has trouble maintaining continuity: tracks that should belong to the same person are often split apart. While VIBE visually performs better on a per-frame basis, this discontinuity in tracks negatively affects the final output. To tackle this, we introduce discriminator-based track matching.

For tracks separated by at most 30 frames (1 second), we concatenate the predicted motion sequences from both and pass the result into the motion discriminator from VIBE. We then check whether the probability that the combined sequence is “real” is higher than the probability for each of the individual tracks. Finally, to ensure that the tracks are spatially similar, we compute an intersection over union (IoU) score between the last bounding box of track 1 and the first bounding box of track 2 and require sufficient overlap. If all of the conditions are met, the tracks are combined and VIBE is rerun on the merged track. For the frames between the two tracks, the bounding box is linearly interpolated.
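The matching rule can be sketched as follows. This is a minimal, hypothetical reconstruction rather than our exact code: `p_real` is a placeholder callable standing in for VIBE's motion discriminator (its actual input format and interface are not reproduced here), the 0.5 IoU threshold is an assumed value since no threshold is fixed above, and `Track`, `should_merge`, and `merge` are illustrative names.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Box = Tuple[float, float, float, float]    # (x1, y1, x2, y2) in pixels
Motion = List[List[float]]                 # one pose-parameter vector per frame
Discriminator = Callable[[Motion], float]  # hypothetical: motion -> P(real)


@dataclass
class Track:
    start_frame: int
    boxes: List[Box]                       # one bounding box per frame
    motion: Motion = field(default_factory=list)

    @property
    def end_frame(self) -> int:
        return self.start_frame + len(self.boxes) - 1


def box_area(r: Box) -> float:
    # Clamped at zero so an empty intersection has zero area.
    return max(0.0, r[2] - r[0]) * max(0.0, r[3] - r[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter = box_area((max(a[0], b[0]), max(a[1], b[1]),
                      min(a[2], b[2]), min(a[3], b[3])))
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def interpolate_boxes(a: Box, b: Box, n: int) -> List[Box]:
    """Return n boxes linearly spaced strictly between a and b."""
    return [tuple(p + (q - p) * k / (n + 1) for p, q in zip(a, b))
            for k in range(1, n + 1)]


def should_merge(t1: Track, t2: Track, p_real: Discriminator,
                 max_gap: int = 30, min_iou: float = 0.5) -> bool:
    """Apply the three matching conditions to track t1 followed by track t2."""
    # 1. Temporal: t2 must start within max_gap frames after t1 ends.
    gap = t2.start_frame - t1.end_frame
    if not 1 <= gap <= max_gap:
        return False
    # 2. Discriminator: the concatenated motion must look more "real"
    #    than each track on its own.
    p_combined = p_real(t1.motion + t2.motion)
    if p_combined <= p_real(t1.motion) or p_combined <= p_real(t2.motion):
        return False
    # 3. Spatial: last box of t1 and first box of t2 must overlap enough.
    return iou(t1.boxes[-1], t2.boxes[0]) >= min_iou


def merge(t1: Track, t2: Track) -> Track:
    """Join two tracks, filling the gap with linearly interpolated boxes.

    Motion is left empty because VIBE is rerun on the merged boxes.
    """
    n_missing = t2.start_frame - t1.end_frame - 1
    filler = interpolate_boxes(t1.boxes[-1], t2.boxes[0], n_missing)
    return Track(t1.start_frame, t1.boxes + filler + t2.boxes)


# Usage with a toy discriminator (longer sequences score higher); not VIBE's.
def toy_p_real(seq: Motion) -> float:
    return len(seq) / 100


t1 = Track(0, [(0, 0, 10, 10)] * 5, [[0.0]] * 5)  # frames 0-4
t2 = Track(8, [(1, 0, 11, 10)] * 5, [[0.0]] * 5)  # frames 8-12
if should_merge(t1, t2, toy_p_real):
    merged = merge(t1, t2)  # frames 0-12, boxes for frames 5-7 interpolated
```

The three checks are ordered from cheapest to most expensive in spirit, but the discriminator call is the only step that needs a learned model; the temporal and IoU checks could be applied first to skip discriminator calls on obviously unrelated tracks.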

For each of the following results:

LEFT: HMMR Result

MIDDLE: VIBE Result

RIGHT: VIBE + Discrim Result