Programming Project #5 (proj5)

CS194-26: Intro to Computer Vision and Computational Photography

In this project, you will learn how to use neural networks to automatically detect facial keypoints -- no more clicking! For this project, we suggest using PyTorch as the deep learning framework, and any provided starter or reference code will reflect this. Here are some tutorial videos that might be helpful: Neural Networks Demystified and PyTorch in 5 minutes. We include more tutorial links in the resources section. For parts 1 and 2, you should be able to train your models locally. For part 3, we recommend using Google Colab, but for all parts you may use any hardware that works for you. If you choose to use Colab, once you create a new notebook, go to Runtime --> Change runtime type and set the hardware accelerator to GPU. Note that a Colab session has an idle timeout of 90 minutes and an absolute timeout of 12 hours, so please download your results and trained models frequently. Make sure to START EARLY, especially if you are not familiar with PyTorch or Colab; we will not provide additional slip days or deadline extensions. Once you complete the project, please submit the code to bCourses.

For the first part, we will use the IMM Face Database available on this website to train an initial toy model for nose tip detection. The dataset has 240 facial images of 40 persons, and each person has 6 facial images taken from different viewpoints. All images are annotated with 58 facial keypoints. Please use all 6 images of the first 32 persons (index 1-32) as the training set (32 x 6 = 192 images) and the images of the remaining 8 persons (index 33-40) as the validation set (8 x 6 = 48 images). As a reference, the staff solution takes less than 1 minute to train 10 epochs locally.
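The split must be done by person, not by image, so that no face in the validation set was seen during training. The sketch below assumes, hypothetically, that each file name starts with a two-digit person index followed by "-" (for example 01-1m.jpg); check the names in your download and adjust the regular expression if they differ.

```python
import re

def split_by_person(filenames, num_train_persons=32):
    """Split file names into (train, val) lists by person index.

    Assumption: each name begins with the person index followed by '-',
    e.g. '01-1m.jpg'. Persons 1..num_train_persons go to training.
    """
    train, val = [], []
    for name in sorted(filenames):
        match = re.match(r"(\d+)-", name)
        if match is None:
            raise ValueError(f"cannot parse a person index from {name!r}")
        person = int(match.group(1))
        (train if person <= num_train_persons else val).append(name)
    return train, val

# Hypothetical names: 40 persons x 6 viewpoints.
names = [f"{p:02d}-{v}m.jpg" for p in range(1, 41) for v in range(1, 7)]
train_files, val_files = split_by_person(names)
print(len(train_files), len(val_files))  # 192 48
```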

We will cast nose detection as a pixel coordinate regression problem, where the input is a single grayscale image and the output is the nose tip position (x, y). In practice, x and y are represented as fractions of the image width and height, respectively, ranging from 0 to 1.
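A small sketch of this representation: dividing pixel coordinates by (width, height) gives ratios in [0, 1], and multiplying by the size of any image maps them back. Because the ratios do not depend on resolution, resizing an image leaves its ratio-encoded keypoints unchanged. The helper names are illustrative.

```python
import numpy as np

def to_ratio(points_px, width, height):
    """Convert (x, y) pixel coordinates to fractions of width and height."""
    points_px = np.asarray(points_px, dtype=np.float32)
    return points_px / np.array([width, height], dtype=np.float32)

def to_pixels(points_ratio, width, height):
    """Inverse of to_ratio: map (x, y) fractions back to pixel coordinates."""
    points_ratio = np.asarray(points_ratio, dtype=np.float32)
    return points_ratio * np.array([width, height], dtype=np.float32)

nose = to_ratio([[320.0, 240.0]], width=640, height=480)
print(nose)                           # [[0.5 0.5]]
print(to_pixels(nose, width=80, height=60))  # same point in an 80x60 image
```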

Dataloader: Use the dataloader from torch.utils.data.DataLoader. This tutorial shows how to write a custom dataloader. You need to load both the images and the keypoints; you may be able to reuse code from Project 3. Then, convert each image to grayscale and convert its pixel values from uint8 in the range 0 to 255 to normalized float values in the range -0.5 to 0.5 (image.astype(np.float32) / 255 - 0.5). After that, resize the image to a smaller size, e.g., 80x60. For loading the facial keypoints, including the nose keypoint, we have provided example code. Once you have the dataloader, sample a few images and display them along with their nose keypoints.
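A minimal sketch of such a dataset, assuming the images have already been loaded into memory as uint8 arrays and the keypoints are already (x, y) ratios; reading files and parsing annotations is left to the provided example code. The grayscale weights (ITU-R BT.601) and bilinear resizing with torch.nn.functional.interpolate are one reasonable choice, not a requirement. The class name NoseDataset is hypothetical.

```python
import numpy as np
import torch
import torch.nn.functional as F
from torch.utils.data import Dataset, DataLoader

GRAY_WEIGHTS = np.array([0.299, 0.587, 0.114], dtype=np.float32)

class NoseDataset(Dataset):
    """images: list of uint8 arrays, (H, W, 3) RGB or (H, W) grayscale.
    keypoints: list of (x, y) ratios in [0, 1], one per image."""

    def __init__(self, images, keypoints, out_hw=(60, 80)):
        self.images = images
        self.keypoints = keypoints
        self.out_hw = out_hw  # (height, width): an 80x60 image

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        img = self.images[idx].astype(np.float32)
        if img.ndim == 3:
            img = img @ GRAY_WEIGHTS  # RGB -> grayscale, shape (H, W)
        img = img / 255 - 0.5  # [0, 255] -> [-0.5, 0.5]
        x = torch.from_numpy(np.ascontiguousarray(img))[None, None]  # (1, 1, H, W)
        x = F.interpolate(x, size=self.out_hw, mode="bilinear",
                          align_corners=False)[0]  # (1, h, w)
        # Ratio keypoints are resolution independent, so resizing needs no update.
        kp = torch.as_tensor(self.keypoints[idx], dtype=torch.float32)
        return x, kp

# Usage with random stand-in data.
images = [np.random.randint(0, 256, (480, 640, 3), dtype=np.uint8) for _ in range(4)]
noses = [[0.5, 0.55]] * 4
loader = DataLoader(NoseDataset(images, noses), batch_size=2, shuffle=True)
x, y = next(iter(loader))
print(x.shape, y.shape)  # torch.Size([2, 1, 60, 80]) torch.Size([2, 2])
```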

CNN: Once you have the dataloader, write a convolutional neural network using torch.nn.Module. This tutorial gives an example of how to write a neural network in PyTorch. If you are not familiar with PyTorch or CNN architectures, please refer to this tutorial. Our CNNs will use convolutional layers (torch.nn.Conv2d), max pooling layers (torch.nn.MaxPool2d), and the Rectified Linear Unit as the non-linearity (torch.nn.ReLU). Your network should have 3-4 convolutional layers with 12-32 channels each. The kernel (filter) size of each convolutional layer should be 7x7, 5x5, or 3x3. Each convolutional layer should be followed by a ReLU and then a max pooling layer. Finally, add 2 fully connected layers, applying a ReLU after the first fully connected layer but not after the last one. Play around with different design choices to improve your result.
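One configuration that satisfies these constraints is sketched below for 80x60 grayscale input: three conv/ReLU/maxpool stages, then two fully connected layers. The channel counts, kernel sizes, and the hidden width of 128 are arbitrary starting points for your own tuning, not the staff architecture. The comments track the feature map size so the input size of the first linear layer can be checked.

```python
import torch
import torch.nn as nn

class NoseNet(nn.Module):
    """3 conv layers (12-32 channels), each followed by ReLU and 2x2 max pooling,
    then 2 fully connected layers. Expects input of shape (N, 1, 60, 80)."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 12, kernel_size=5, padding=2), nn.ReLU(), nn.MaxPool2d(2),   # -> 12 x 30 x 40
            nn.Conv2d(12, 24, kernel_size=5, padding=2), nn.ReLU(), nn.MaxPool2d(2),  # -> 24 x 15 x 20
            nn.Conv2d(24, 32, kernel_size=3, padding=1), nn.ReLU(), nn.MaxPool2d(2),  # -> 32 x 7 x 10
        )
        self.head = nn.Sequential(
            nn.Flatten(),
            nn.Linear(32 * 7 * 10, 128),
            nn.ReLU(),           # ReLU after the first FC layer only
            nn.Linear(128, 2),   # (x, y) ratio of the nose tip, no activation
        )

    def forward(self, x):
        return self.head(self.features(x))

model = NoseNet()
print(model(torch.zeros(4, 1, 60, 80)).shape)  # torch.Size([4, 2])
```

Changing the input size or the number of pooling stages changes the 32 * 7 * 10 term, so recompute it (or print the feature shape once) whenever you alter the architecture.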

Loss Function and Optimizer: Now that you have the predictor (CNN) and the dataloader, you need to define the loss function and the optimizer before you can start training your CNN. Use mean squared error loss (torch.nn.MSELoss) as the prediction loss, and train your network using Adam (torch.optim.Adam) with a learning rate of 1e-3. Run the training loop for 10 to 25 epochs (one epoch means one pass through all training images). Also try different learning rates.
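A minimal training loop along these lines is sketched below. It records the mean training and validation MSE per epoch, which is what you need to plot training and validation loss curves. The function name train and its arguments are illustrative; the smoke test uses a tiny stand-in model and random data rather than the real network and dataset.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

def train(model, train_loader, val_loader, epochs=10, lr=1e-3, device="cpu"):
    """MSE loss + Adam; returns per-epoch mean train/val losses."""
    model.to(device)
    criterion = nn.MSELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)
    history = {"train": [], "val": []}
    for _ in range(epochs):
        model.train()
        total = 0.0
        for images, targets in train_loader:
            images, targets = images.to(device), targets.to(device)
            optimizer.zero_grad()
            loss = criterion(model(images), targets)
            loss.backward()
            optimizer.step()
            total += loss.item() * images.size(0)  # undo the per-batch mean
        history["train"].append(total / len(train_loader.dataset))

        model.eval()
        total = 0.0
        with torch.no_grad():
            for images, targets in val_loader:
                images, targets = images.to(device), targets.to(device)
                total += criterion(model(images), targets).item() * images.size(0)
        history["val"].append(total / len(val_loader.dataset))
    return history

# Smoke test: random data and a tiny stand-in model.
torch.manual_seed(0)
data = TensorDataset(torch.randn(16, 1, 60, 80), torch.rand(16, 2))
loader = DataLoader(data, batch_size=4, shuffle=True)
tiny = nn.Sequential(nn.Flatten(), nn.Linear(60 * 80, 2))
hist = train(tiny, loader, loader, epochs=2)
print(len(hist["train"]), len(hist["val"]))  # 2 2
```

Calling train several times with different lr values (on freshly constructed models) is a straightforward way to compare learning rates.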

Hyperparameter Tuning: Try varying two of the hyperparameters (number of layers, channel size, filter size, or learning rate) and show how each affects (or doesn't affect) the performance of your network.

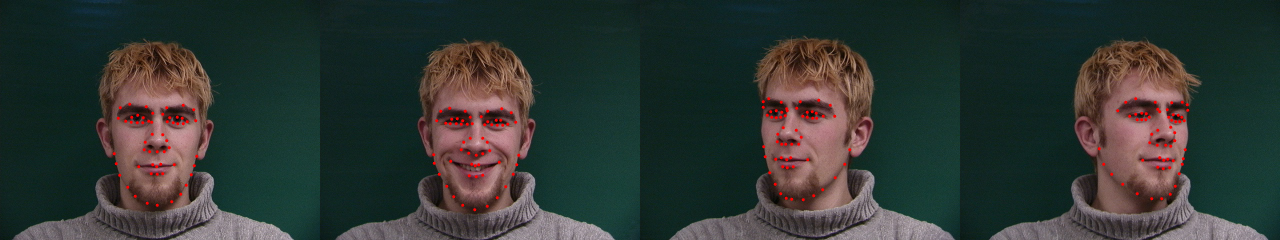

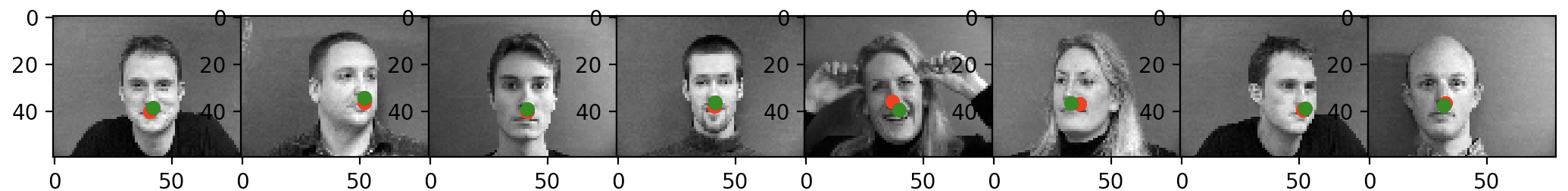

Results: As a reference, the above image shows the result from the staff solution, where the green points are ground-truth annotations and the red points are predictions. You need to show the following results:

We are not satisfied with just detecting the nose tip position; in this section we move forward and detect all 58 facial keypoints/landmarks. Use the same dataset as in Part 1, but now load all 58 keypoints and predict them.

Dataloader: The code in this section should be similar to Part 1, but this time try a larger input image size, such as 160x120 or 240x180. Since the dataset is small, we also need data augmentation to prevent the trained model from overfitting. Check this tutorial to learn more about data augmentation in PyTorch. There are many ways to perform data augmentation, including randomly changing the brightness and saturation of the resized face (torchvision.transforms.ColorJitter), randomly rotating the face by roughly -15 to 15 degrees, and randomly shifting the face by roughly -10 to 10 pixels. Note that if you rotate or shift the image, you also need to update the keypoints so that they reflect the change. Once you have the dataloader, sample a few images and display them along with their ground-truth keypoints.
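The keypoint update for a rotation and a shift can be sketched as follows, working in pixel coordinates (convert ratio keypoints to pixels first and back afterwards). It assumes the image is rotated counter-clockwise, as seen on screen, by the same angle about the same center; torchvision.transforms.functional.rotate documents positive angles as counter-clockwise. Whether the image center is taken as (W/2, H/2) or ((W-1)/2, (H-1)/2) depends on the library, so plot a few augmented samples to confirm the keypoints stay on the face.

```python
import numpy as np

def rotate_keypoints(kps, angle_deg, center):
    """Rotate (x, y) pixel keypoints counter-clockwise on screen by angle_deg
    about center. Image y points down, hence the sign pattern below."""
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    center = np.asarray(center, dtype=np.float64)
    d = np.asarray(kps, dtype=np.float64) - center
    x = center[0] + c * d[:, 0] + s * d[:, 1]
    y = center[1] - s * d[:, 0] + c * d[:, 1]
    return np.stack([x, y], axis=1)

def shift_keypoints(kps, dx, dy):
    """Translate keypoints by the same (dx, dy) pixel offset as the image."""
    return np.asarray(kps, dtype=np.float64) + np.array([dx, dy], dtype=np.float64)

# A point right of center moves above center after a 90-degree CCW rotation.
print(np.round(rotate_keypoints([[90.0, 50.0]], 90, center=(50, 50)), 6))  # [[50. 10.]]

# Sampling augmentation parameters in the ranges suggested above.
rng = np.random.default_rng(0)
angle = rng.uniform(-15, 15)
dx, dy = rng.integers(-10, 11, size=2)
kps = np.array([[80.0, 30.0], [100.0, 60.0]])  # hypothetical keypoints, 160x120 image
kps_aug = shift_keypoints(rotate_keypoints(kps, angle, center=(80, 60)), dx, dy)
```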

CNN: With larger input images, you need more convolutional layers in the network. Write a CNN with 5-6 convolutional layers for this task. Each convolutional layer should be followed by a ReLU layer and, optionally, a max pooling layer. Play around with different design choices to improve your result.
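A sketch of one such network for 160x120 grayscale input: six conv/ReLU layers, the first five followed by 2x2 max pooling and the last one without, then two fully connected layers producing 58 (x, y) pairs. The widths and kernel sizes are placeholders to tune; the class and helper names are hypothetical.

```python
import torch
import torch.nn as nn

def conv_block(c_in, c_out, k, pool):
    """Conv (size-preserving padding for odd k) + ReLU, optionally + 2x2 max pool."""
    layers = [nn.Conv2d(c_in, c_out, kernel_size=k, padding=k // 2), nn.ReLU()]
    if pool:
        layers.append(nn.MaxPool2d(2))
    return layers

class FaceKeypointNet(nn.Module):
    def __init__(self, num_keypoints=58):
        super().__init__()
        self.num_keypoints = num_keypoints
        self.features = nn.Sequential(
            *conv_block(1, 16, 5, pool=True),      # -> 16 x 60 x 80
            *conv_block(16, 32, 5, pool=True),     # -> 32 x 30 x 40
            *conv_block(32, 64, 3, pool=True),     # -> 64 x 15 x 20
            *conv_block(64, 64, 3, pool=True),     # -> 64 x 7 x 10
            *conv_block(64, 128, 3, pool=True),    # -> 128 x 3 x 5
            *conv_block(128, 128, 3, pool=False),  # -> 128 x 3 x 5
        )
        self.head = nn.Sequential(
            nn.Flatten(),
            nn.Linear(128 * 3 * 5, 256),
            nn.ReLU(),
            nn.Linear(256, num_keypoints * 2),
        )

    def forward(self, x):  # x: (N, 1, 120, 160)
        out = self.head(self.features(x))
        return out.view(-1, self.num_keypoints, 2)  # (N, 58, 2) as (x, y) ratios

model = FaceKeypointNet()
print(model(torch.zeros(2, 1, 120, 160)).shape)  # torch.Size([2, 58, 2])
```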

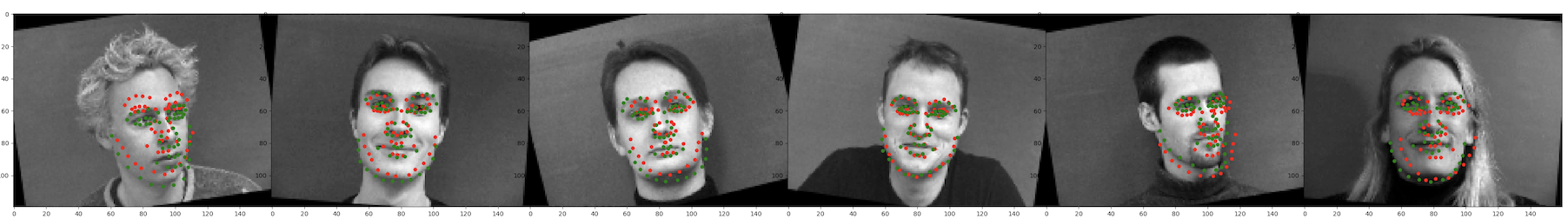

Results: As a reference, the above image shows the result from the staff solution, where the green points are ground-truth annotations and the red points are predictions. Once you have trained your model, show the following results for the network:

For this part, we will use a larger dataset, specifically the ibug face in the wild dataset, to train a facial keypoint detector. This dataset contains 6666 images of varying sizes, and each image has 68 annotated facial keypoints. You will need to use Colab with a GPU to train the model. As a reference, the staff solution takes 1.5 hours to train 10 epochs on Colab. Here is the dataset to download.

For our class Kaggle competition, use this link to download the test set xml file. It contains the image paths and face bounding boxes, but it does not include the keypoint annotations. You will need to predict the keypoint locations and submit the results to Kaggle. Please note: (1) do not use data augmentation in your test set dataloader; (2) you need to convert your keypoint predictions (ratios of the crop image's width and height) to absolute pixel coordinates in the entire image; (3) save all results into one csv file with two columns, 'Id' and 'Predicted', and 137088 rows (image_0001_keypoints_01_x, image_0001_keypoints_01_y, image_0001_keypoints_02_x, image_0001_keypoints_02_y, ..., image_0001_keypoints_68_x, image_0001_keypoints_68_y, image_0002_keypoints_01_x, image_0002_keypoints_01_y, ...).
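Notes (2) and (3) can be sketched as follows. The conversion assumes the crop fed to the network is exactly a box given as (left, top, width, height) in full-image pixels; if you enlarge the bounding box before cropping, use the enlarged box. Resizing the crop afterwards does not matter, since the predictions are ratios. The csv writer emits rows in the order listed above (image, then keypoint, then x before y); using a running integer starting at 0 as the Id is an assumption, so compare against the competition's sample submission before uploading. Both function names are hypothetical.

```python
import csv
import io
import numpy as np

def crop_ratio_to_image(pred_ratio, box):
    """pred_ratio: (68, 2) (x, y) ratios inside the crop.
    box: (left, top, width, height) of the crop in full-image pixels (assumed)."""
    left, top, width, height = box
    pred = np.asarray(pred_ratio, dtype=np.float64)
    return pred * np.array([width, height]) + np.array([left, top])

def write_submission(all_preds, fileobj):
    """all_preds: (num_images, 68, 2) absolute coordinates, in test-set order.
    C-order flattening yields image -> keypoint -> (x, y), one value per row."""
    writer = csv.writer(fileobj)
    writer.writerow(["Id", "Predicted"])
    flat = np.asarray(all_preds, dtype=np.float64).reshape(-1)
    for row_id, value in enumerate(flat):  # Id scheme is an assumption
        writer.writerow([row_id, float(value)])

box = (100.0, 50.0, 200.0, 240.0)
abs_pts = crop_ratio_to_image(np.full((68, 2), 0.5), box)
print(abs_pts[0])  # [200. 170.]
buf = io.StringIO()
write_submission(np.stack([abs_pts, abs_pts]), buf)
print(len(buf.getvalue().splitlines()))  # 273 (header + 2 * 68 * 2 rows)
```

With the full test set this produces 137088 data rows, i.e. 68 x 2 values for each of 1008 faces.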

Other keypoint detection networks, such as Toshev et al. (2014) or Jain et al. (2014), turn the regression problem of predicting keypoint coordinates into a pixelwise classification problem: for every pixel, they predict how likely it is that the pixel is the keypoint. You can do this by using an architecture that outputs pixel-aligned heatmaps, such as a fully convolutional network or a UNet. Here are some useful pre-trained models (FCN_ResNet, FCN32s, U-Net, etc.) you can use.

You can turn the ground-truth keypoint coordinates into pixel-aligned heatmaps to supervise your model by placing a 2D Gaussian at each ground-truth coordinate location in the map. Try training your model with this setup and see how it does! Remember to convert your heatmaps back to coordinates at the end, for example by taking a weighted average of the heatmap as the keypoint (or by other methods). Report the details of your implementation and your findings.
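Both directions are sketched below in NumPy: one Gaussian heatmap per keypoint for supervision, and a weighted average of pixel coordinates to decode a heatmap back into (x, y). The sigma of 2 pixels is an arbitrary choice to tune. Negative network outputs are clipped before averaging; low-level noise spread over the whole map still pulls the average toward the center, so restricting the average to a window around the maximum is a common refinement.

```python
import numpy as np

def make_heatmap(x, y, height, width, sigma=2.0):
    """2D Gaussian with peak 1 centered at pixel (x, y) on a height x width grid."""
    ys, xs = np.mgrid[0:height, 0:width]
    return np.exp(-((xs - x) ** 2 + (ys - y) ** 2) / (2 * sigma ** 2))

def keypoints_to_heatmaps(kps, height, width, sigma=2.0):
    """(K, 2) pixel keypoints -> (K, height, width) target heatmaps."""
    return np.stack([make_heatmap(x, y, height, width, sigma) for x, y in kps])

def heatmap_to_coord(heatmap):
    """Decode one heatmap as the heatmap-weighted average pixel coordinate."""
    h = np.clip(heatmap, 0, None)  # ignore negative predictions
    total = h.sum()
    ys, xs = np.mgrid[0:h.shape[0], 0:h.shape[1]]
    return float((xs * h).sum() / total), float((ys * h).sum() / total)

maps = keypoints_to_heatmaps([(20.3, 12.7), (40.0, 30.0)], height=48, width=64)
print(maps.shape)                # (2, 48, 64)
print(heatmap_to_coord(maps[0]))  # approximately (20.3, 12.7)
```

The stacked targets can be compared with the network's per-keypoint output channels using a pixelwise loss such as torch.nn.MSELoss.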

Results:

Report your best model (if it is different from the model in Part 3 or Part 4, please describe its architecture), along with its mean absolute error and your Kaggle username, after uploading your predictions on the test set to our class Kaggle competition. We are aware that students come into this class with varied prior exposure to deep learning. We will not release exact thresholds, but a model that does better than predicting the average facial keypoints from the dataset would receive 75% of the credit. The maximum number of submissions is 5 per day.
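Before uploading, you can estimate the same kind of error locally on a held-out split and compare it with the "average facial keypoints" baseline mentioned above. The sketch takes the mean absolute error over every coordinate of every keypoint; this is an assumption about how the leaderboard aggregates errors, so treat the Kaggle score as authoritative. The arrays are toy values for illustration.

```python
import numpy as np

def mean_absolute_error(pred, target):
    """Mean of |pred - target| over every (x, y) coordinate of every keypoint."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    return float(np.abs(pred - target).mean())

# Baseline: predict the mean training face for every validation image.
train_kps = np.array([[[10, 20], [30, 40]], [[12, 22], [32, 42]]], dtype=float)  # (N, K, 2)
val_kps = np.array([[[11, 19], [31, 43]]], dtype=float)
mean_face = train_kps.mean(axis=0)  # (K, 2)
baseline = mean_absolute_error(np.broadcast_to(mean_face, val_kps.shape), val_kps)
print(baseline)  # 1.0
```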