Recolorizing the Prokudin-Gorskii Collection

Chris Mitchell

Overview

In the early 20th century, Sergei Mihailovich Prokudin-Gorskii photographed scenes across Russia. Anticipating the possibility of color photographs, he captured each scene through red, green, and blue filters. The Library of Congress has already digitized these images, and I applied image similarity matching and channel combining techniques to convert them into color.

Approach

The first steps involved preprocessing the data. I read in the image, converted the values to floats, and divided the image into thirds, one for each channel. I implemented automatic contrast adjustment by dividing the image values by the max value (plus a small epsilon, in case a dark image ever has a max value close to zero).
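The preprocessing described above can be sketched as follows. This is a minimal illustration, not the project's actual code: it assumes NumPy, assumes the plate stacks the exposures top to bottom in blue, green, red order, and applies the max rescaling per channel. The function name `split_and_normalize` and the `eps` parameter are hypothetical.

```python
import numpy as np

def split_and_normalize(plate, eps=1e-8):
    """Split a stacked plate scan into three channels scaled to roughly [0, 1]."""
    plate = np.asarray(plate, dtype=np.float64)  # convert the values to floats
    height = plate.shape[0] // 3                 # each third holds one exposure
    # Assumption: plate order from top to bottom is blue, green, red.
    channels = [plate[i * height:(i + 1) * height] for i in range(3)]
    # Divide each channel by its max; eps guards against dividing by zero
    # when a channel is entirely (or almost entirely) black.
    return [c / (c.max() + eps) for c in channels]
```

A call such as `b, g, r = split_and_normalize(plate)` would then give three equally sized float arrays ready for alignment.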

For the actual alignment, I aligned the red and green channels to the blue channel, using an L2 metric to determine the best overlap. I computed the sum of squared differences in values (the squared L2 norm of the difference) between the shifted channel and the reference, looking at all shifts within a 30 x 30 square centered around the origin. When images align perfectly, this value should be zero, so I took the shift that gave the minimum and used it to shift the channel in the final output.
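A minimal sketch of this exhaustive search, under stated assumptions: it uses NumPy, shifts with `np.roll` (which wraps pixels around the edges, a simplification), and searches offsets from -15 to 15, which is approximately the 30 x 30 window. The names `ssd` and `best_shift` are hypothetical, and the returned tuple is in (row, column) order, which may not match the ordering of the shifts reported later.

```python
import numpy as np

def ssd(a, b):
    """Sum of squared differences between two equally sized arrays."""
    return float(np.sum((a - b) ** 2))

def best_shift(channel, reference, radius=15):
    """Return the (row, col) shift of `channel` that minimizes SSD against `reference`."""
    best, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Circular shift: pixels pushed off one edge reappear on the other.
            shifted = np.roll(channel, (dy, dx), axis=(0, 1))
            score = ssd(shifted, reference)
            if score < best_score:
                best, best_score = (dy, dx), score
    return best
```

Applying the result is then a single call, for example `np.roll(red, best_shift(red, blue), axis=(0, 1))`.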

The above strategy works, but for larger images the computation time is prohibitive and the 30 x 30 square is too restrictive. For this reason, I implemented an image pyramid. Whenever the image to align was too large, I would first align versions of both the aligning and reference images scaled down by a factor of two. This call was made recursively, and the returned shift indices were then doubled and used as the center of the 30 x 30 shift region at the next finer level. As an additional speedup, I ignored roughly 10% of the border in each L2 calculation, which should also have increased accuracy by keeping the preexisting borders out of the similarity metric.
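The recursion can be sketched as below. This is an illustration under assumptions rather than the original implementation: it uses NumPy, downsamples by keeping every second pixel (no smoothing), shifts with the wrap-around `np.roll`, and stops recursing at a hypothetical `min_size`. All function and parameter names are made up for the example.

```python
import numpy as np

def crop_border(img, frac=0.1):
    """Drop roughly `frac` of the image on every side before scoring."""
    h, w = img.shape
    dy, dx = int(h * frac), int(w * frac)
    return img[dy:h - dy, dx:w - dx]

def search(channel, reference, center, radius):
    """Exhaustive SSD search over shifts within `radius` of `center`."""
    ref = crop_border(reference)
    best, best_score = center, np.inf
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            shifted = crop_border(np.roll(channel, (dy, dx), axis=(0, 1)))
            score = np.sum((shifted - ref) ** 2)
            if score < best_score:
                best, best_score = (dy, dx), score
    return best

def pyramid_align(channel, reference, radius=15, min_size=64):
    """Coarse-to-fine alignment; returns a (row, col) shift."""
    if min(channel.shape) <= min_size:
        return search(channel, reference, (0, 0), radius)
    # Align half-resolution copies first (naive subsampling, an assumption).
    coarse = pyramid_align(channel[::2, ::2], reference[::2, ::2],
                           radius, min_size)
    # Double the coarse shift and use it as the center of the finer search.
    center = (2 * coarse[0], 2 * coarse[1])
    return search(channel, reference, center, radius)
```

Because each level only searches around the doubled coarse estimate, the total shift can end up much larger than the per-level radius, which is what the larger scans need.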

Problems

The initial implementation was not where most of the time went. The pyramid recursion, however, had some issues at first. I noticed that shifts throughout the recursion pyramid were maxing out at 15 in some direction from the center. I first attempted to rerun the shifted L2 calculations, moving the center point whenever a minimum value was found on the border of the 30 x 30 square. I later realized the main issue: while I was adding double the coarser-grain shift to my result, I wasn't using that shift to center my fine-grain search, so the coarse estimates were effectively useless.

Baseline Photos

These are all produced with an L2 metric (with a 10 pixel border trimmed off), using image pyramids and max value rescaling of each channel. Each entry includes the red and green channel shifts used to align to the blue channel.

|

Cathedral

R: (-1, 11), G: (-1, 5)

|

Emir

R: (17, 98), G: (21, 49)

|

Harvesters

R: (9, 124), G: (12, 59)

|

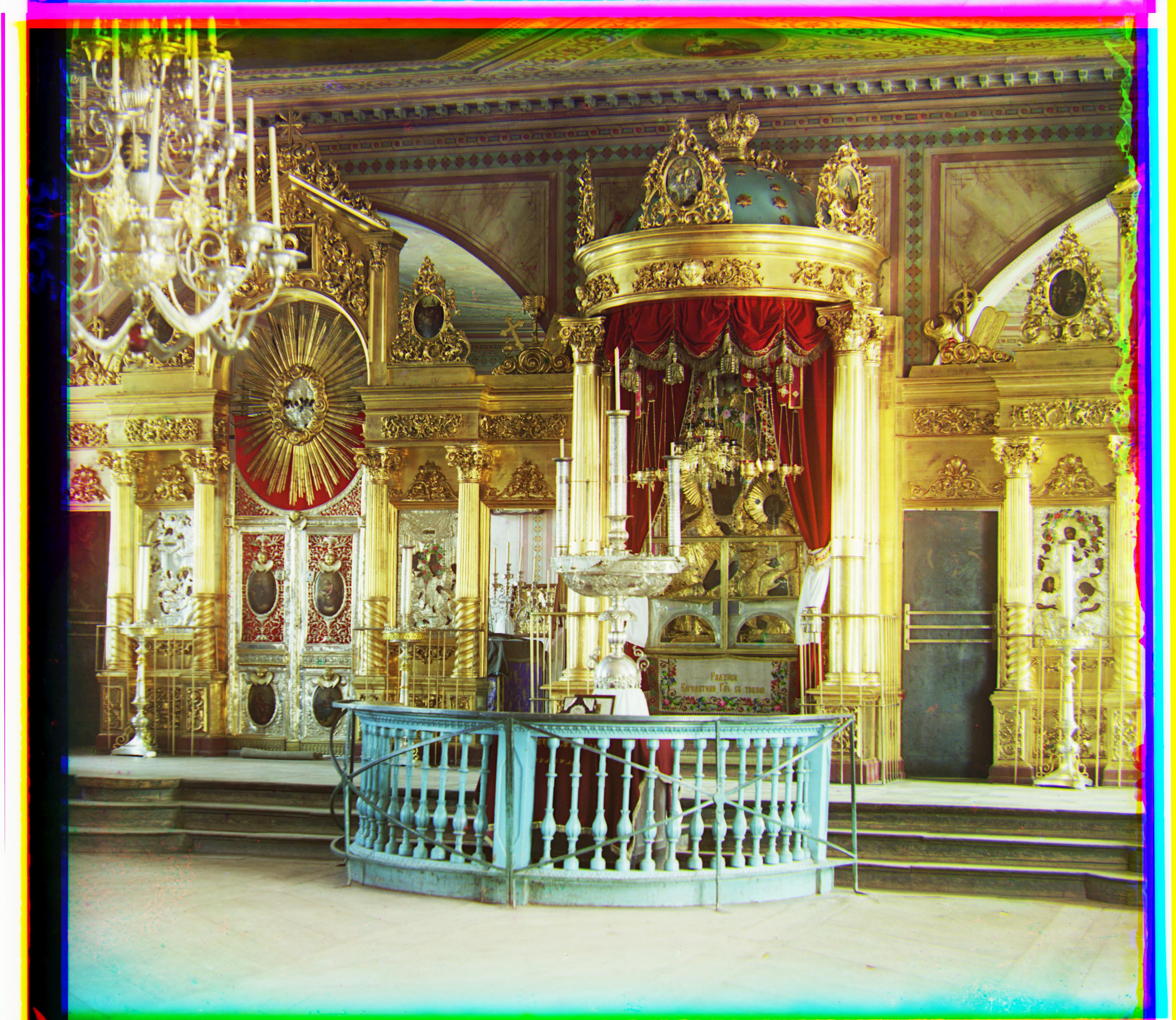

Icon

R: (22, 90), G: (16, 41)

|

|

Lady

R: (-16, 118), G: (-4, 57)

|

Melons

R: (9, 179), G: (5, 81)

|

Monastery

R: (2, 3), G: (1, -3)

|

Onion Church

R: (35, 108), G: (23, 52)

|

|

Self Portrait

R: (-2, 172), G: (-1, 77)

|

Three Generations

R: (8, 111), G: (7, 52)

|

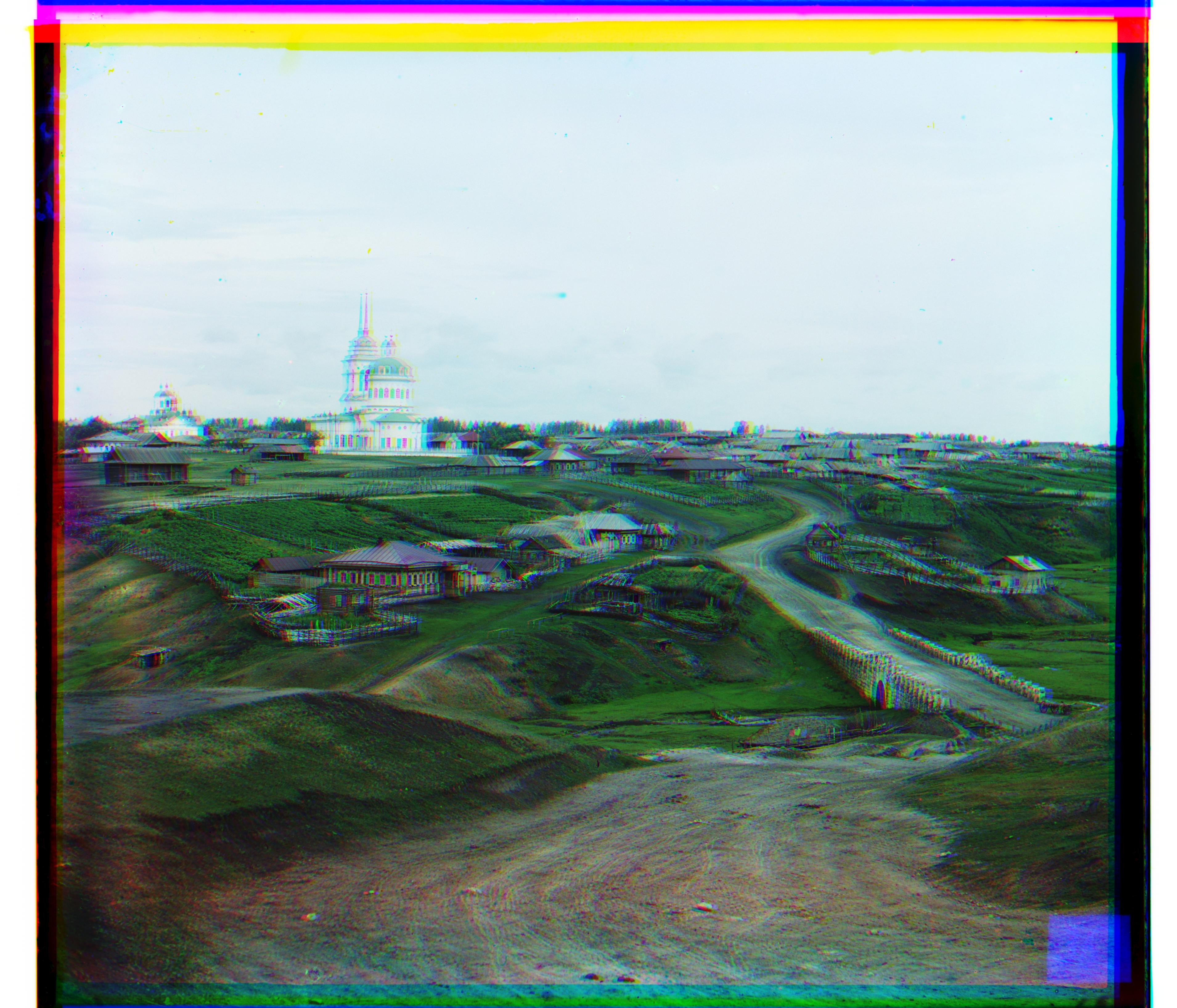

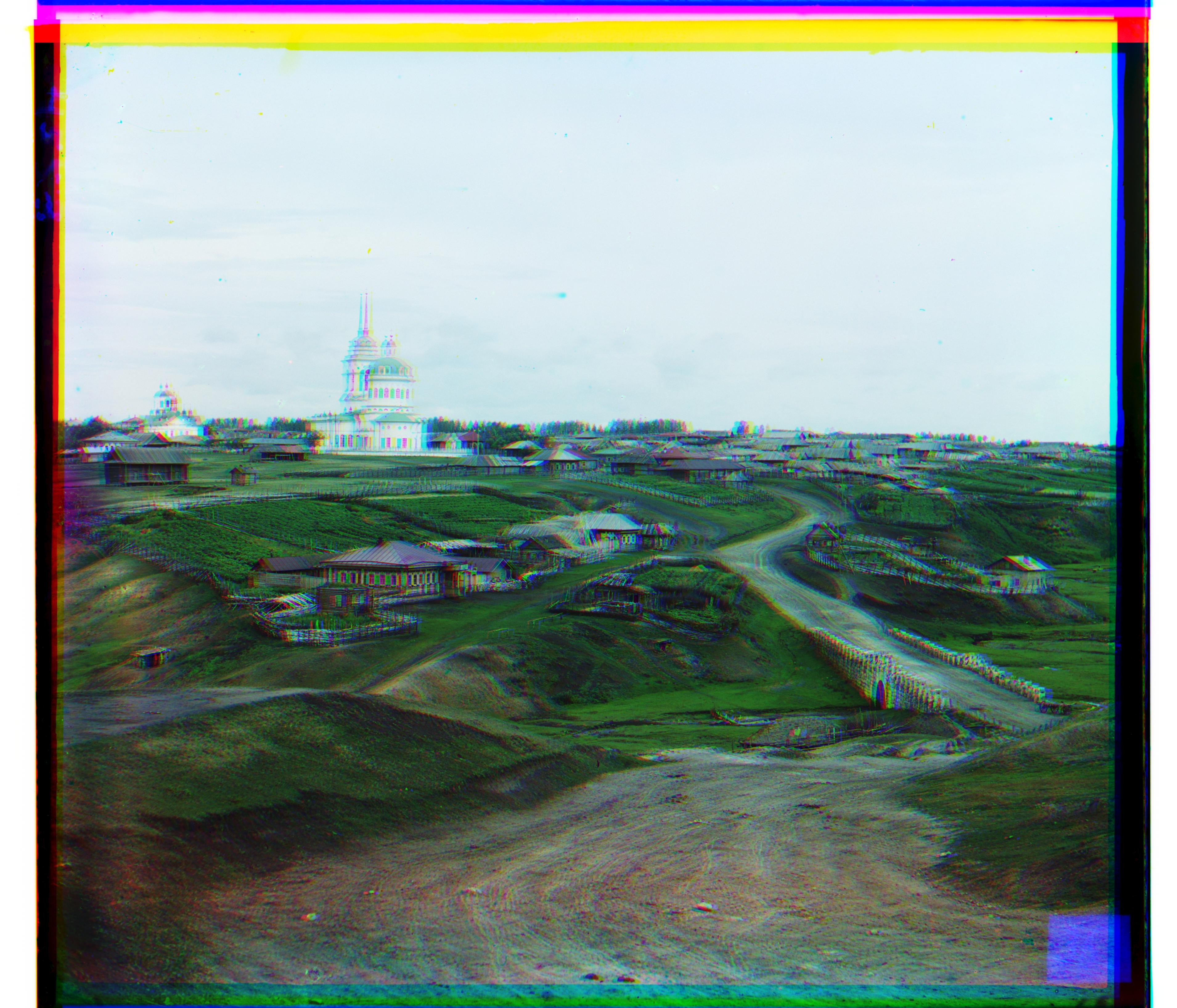

Tobolsk

R: (3, 6), G: (2, 3)

|

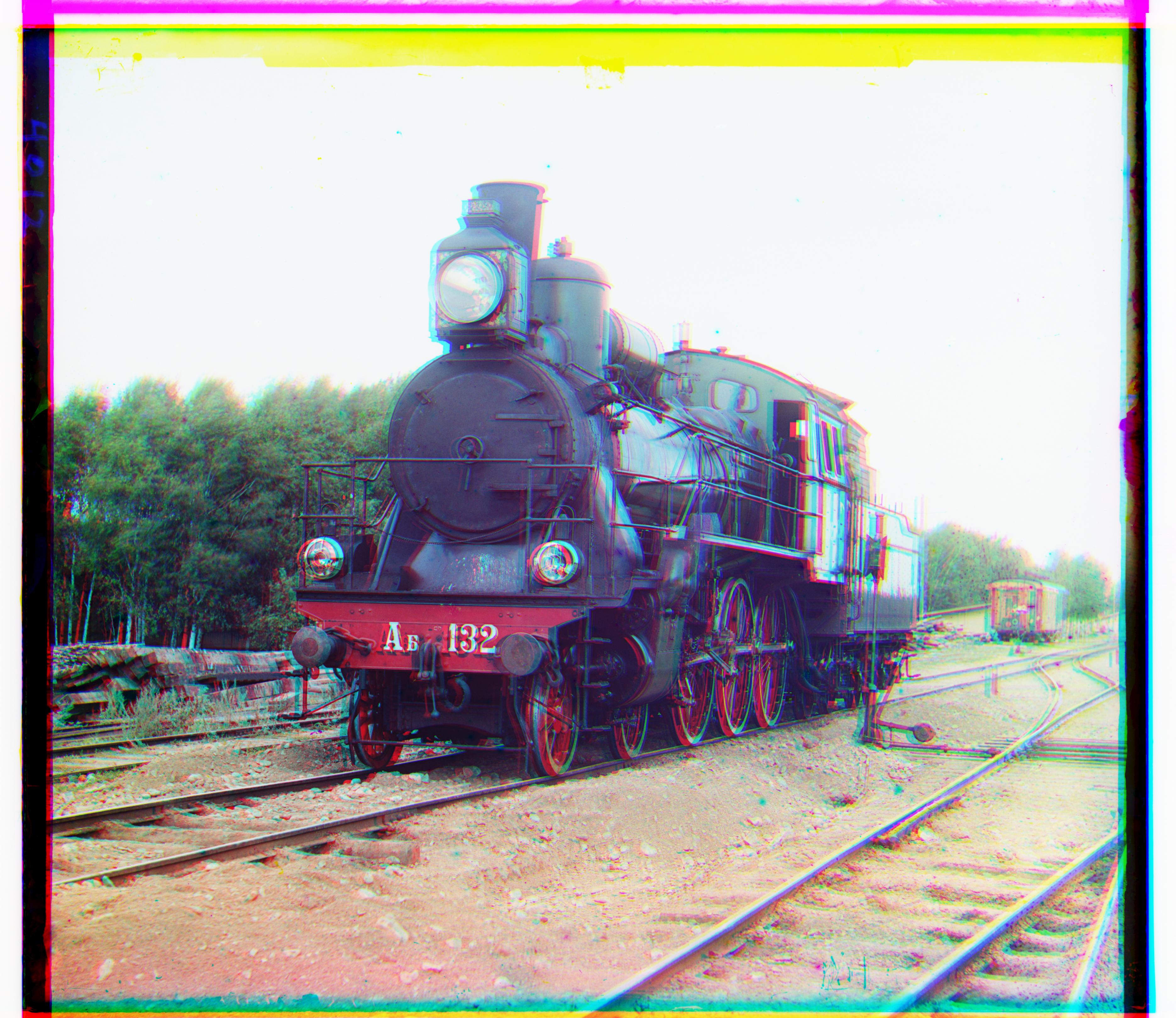

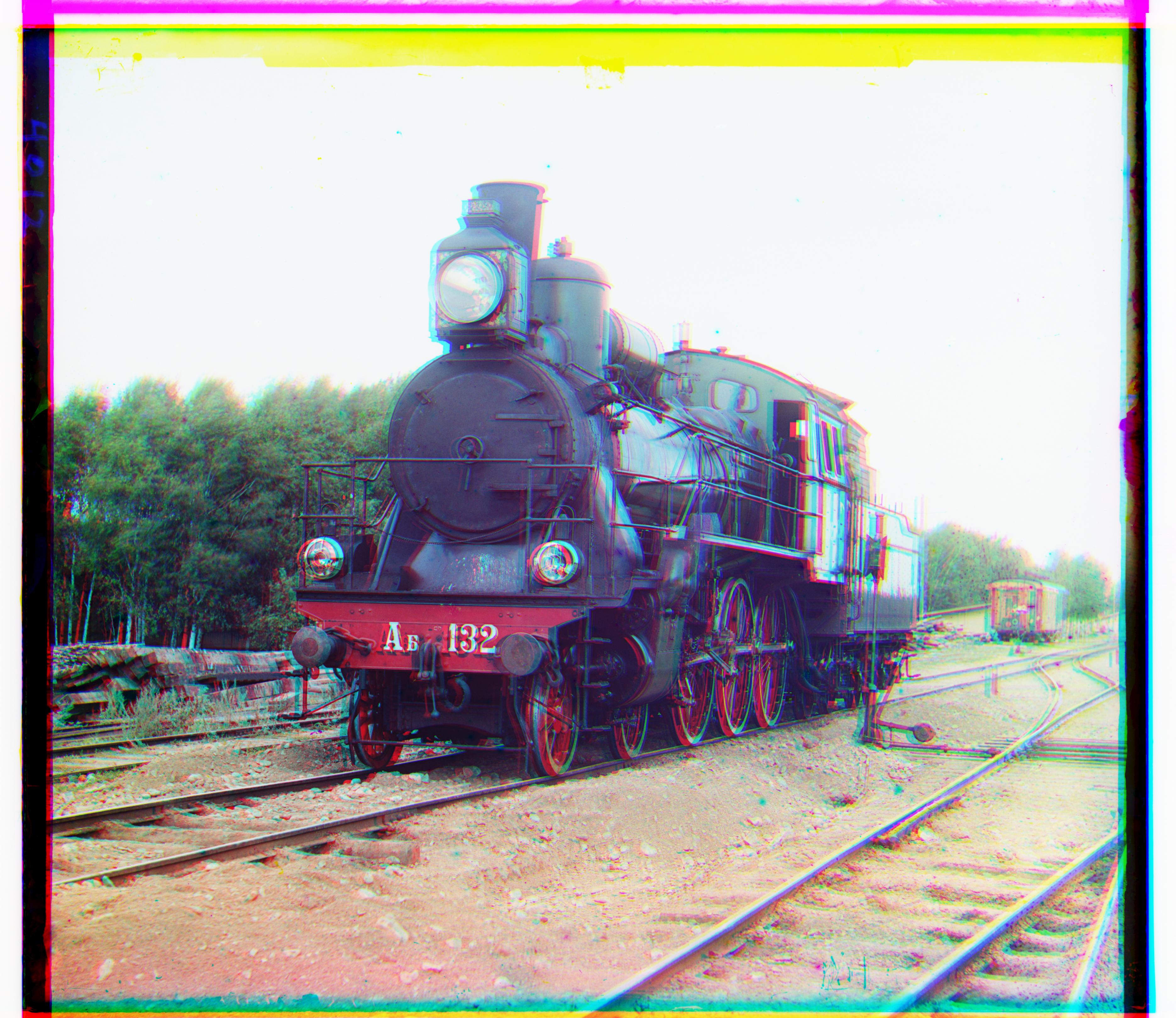

Train

R: (3, 90), G: (-1, 41)

|

|

Village

R: (-14, 138), G: (-7, 63)

|

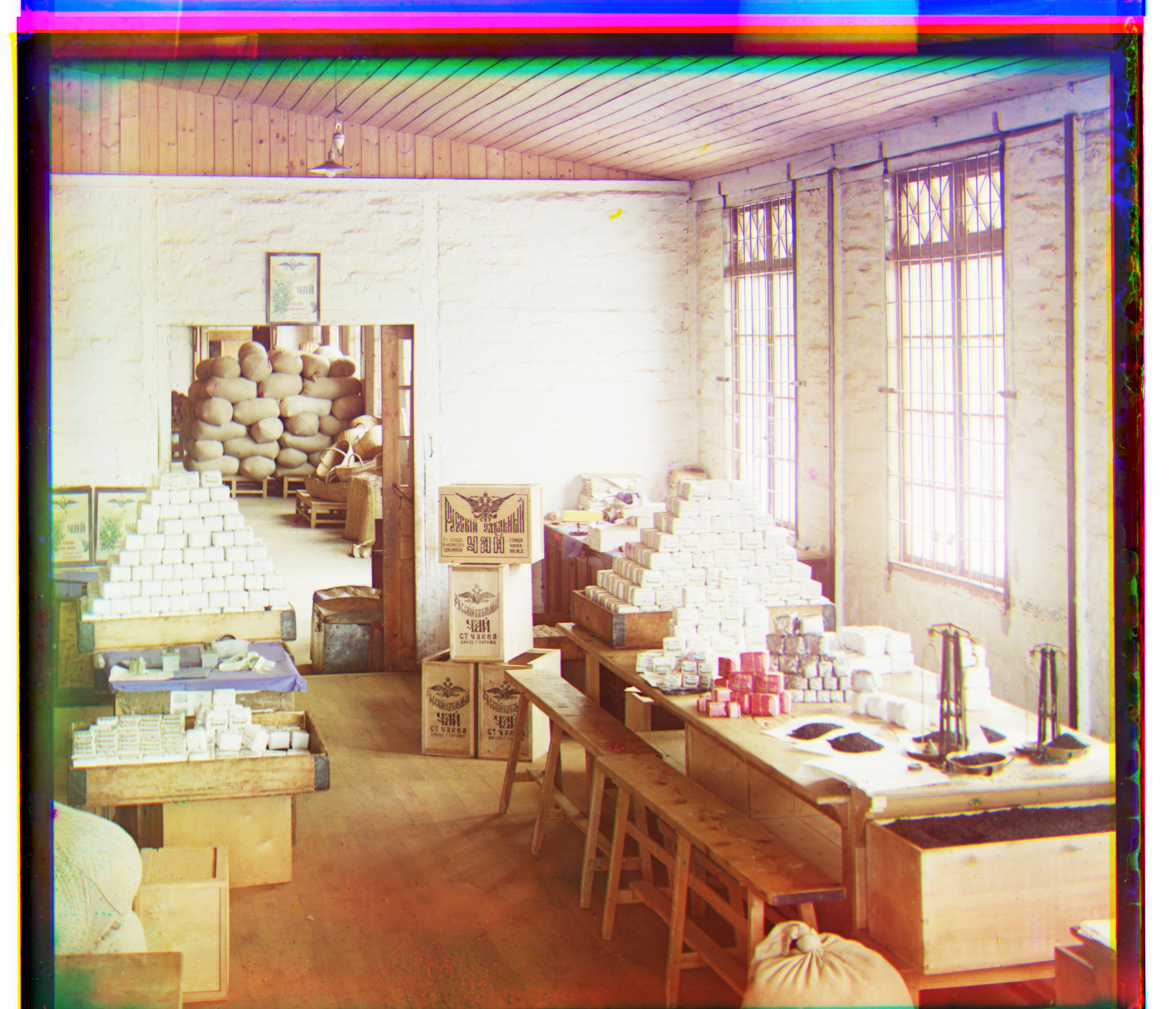

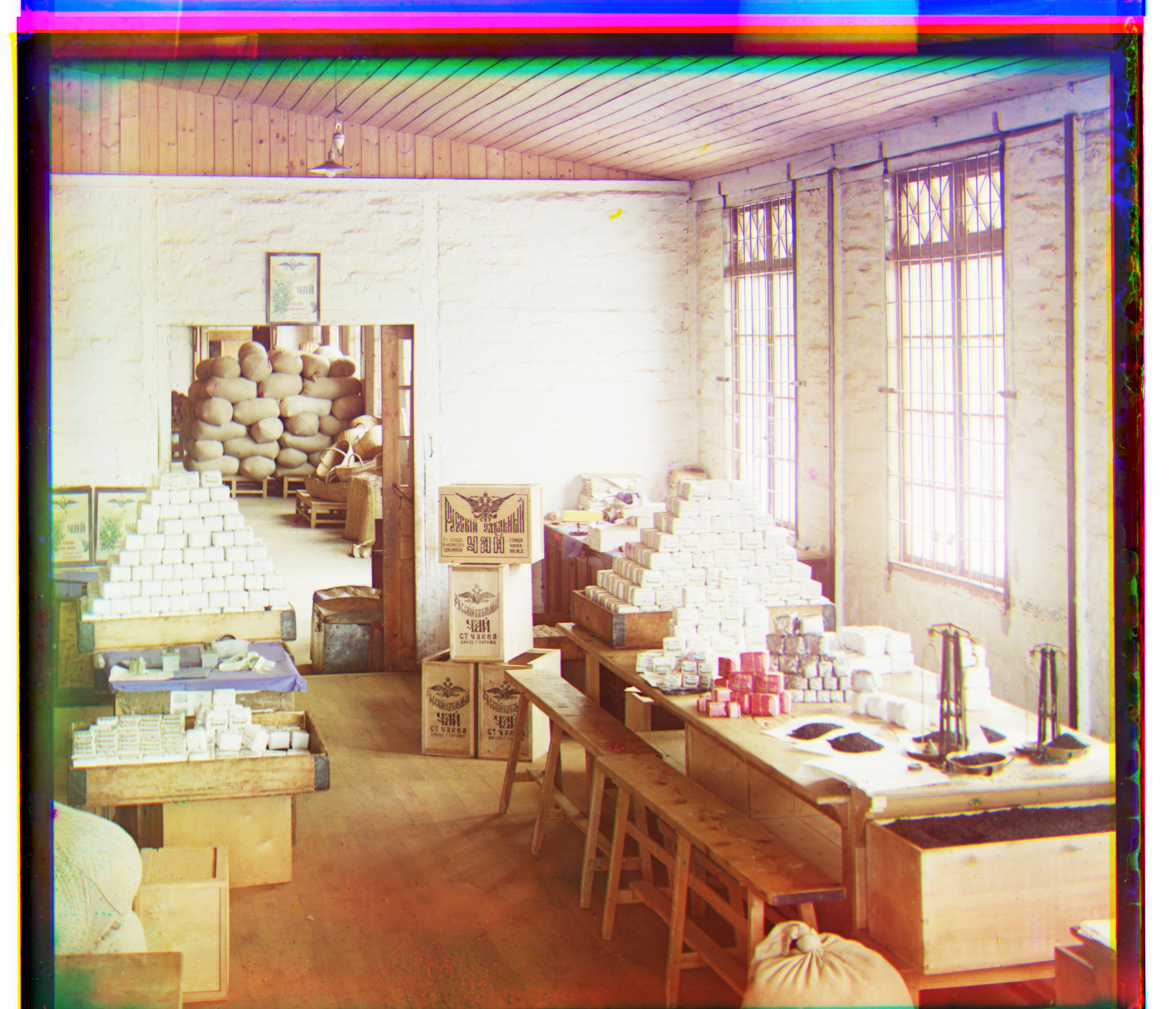

Workshop

R: (-14, 105), G: (-4, 52)

|

Additional Photos

|

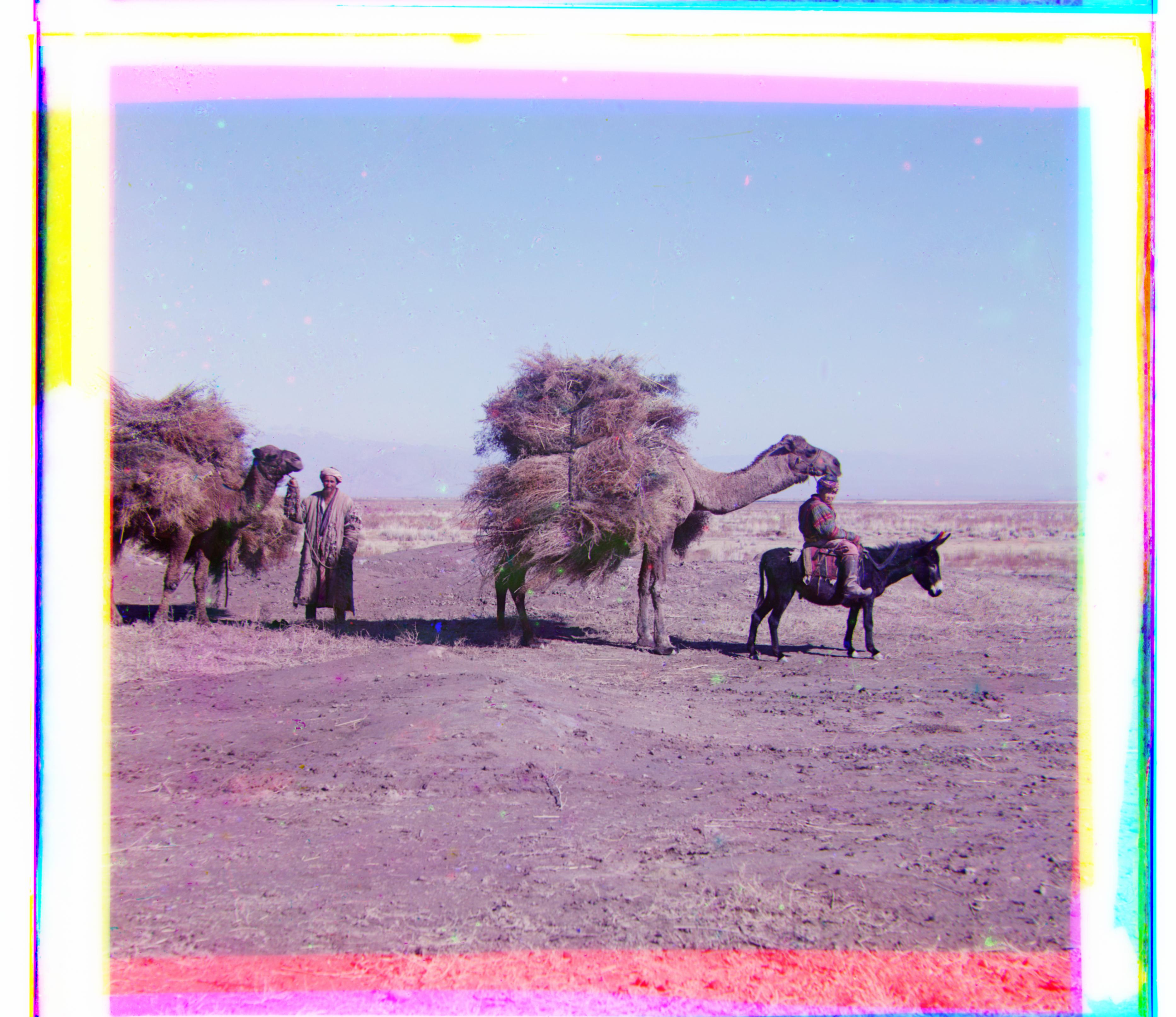

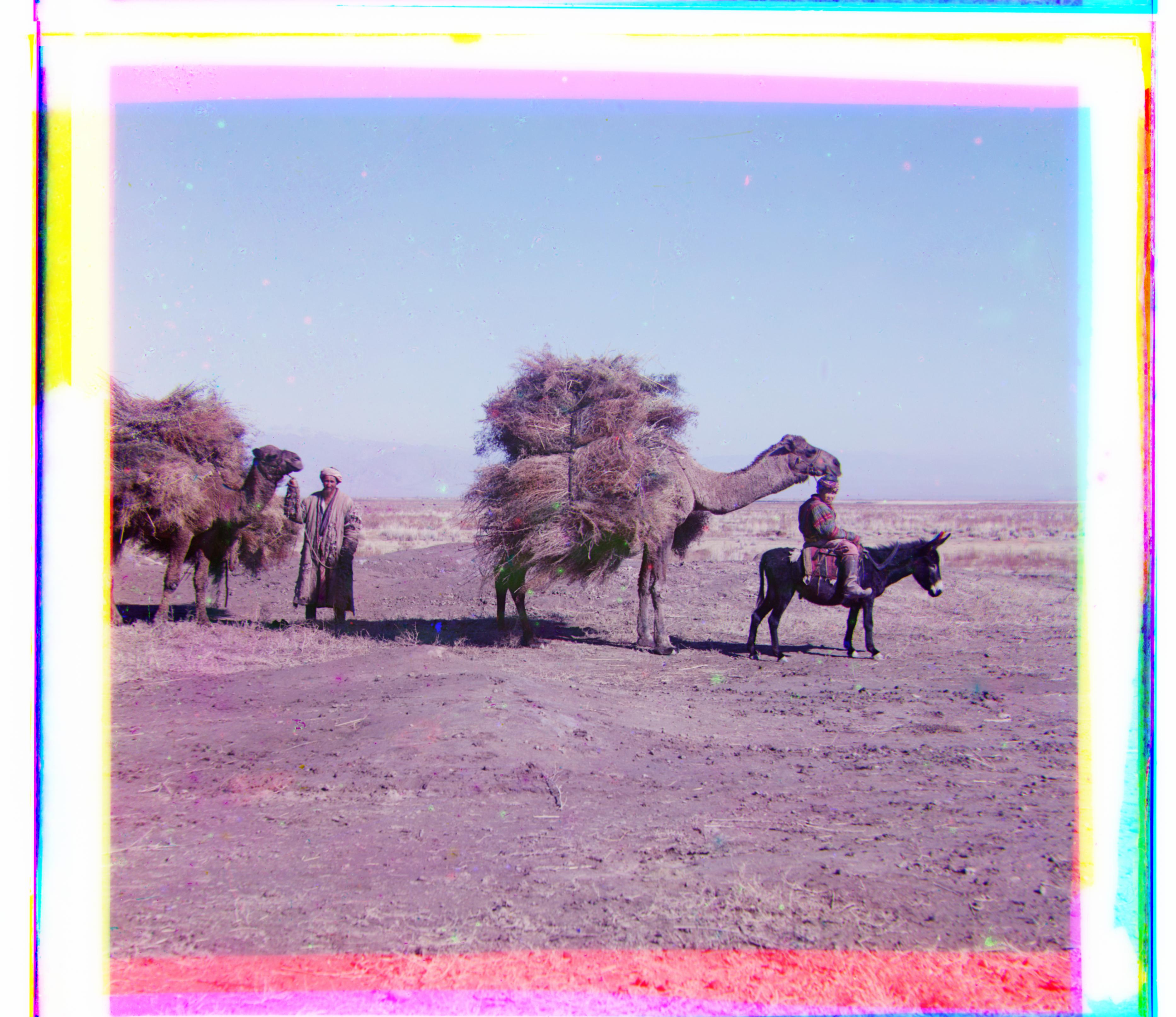

Camel Caravan

R: (40, 146), G: (23, 68)

|

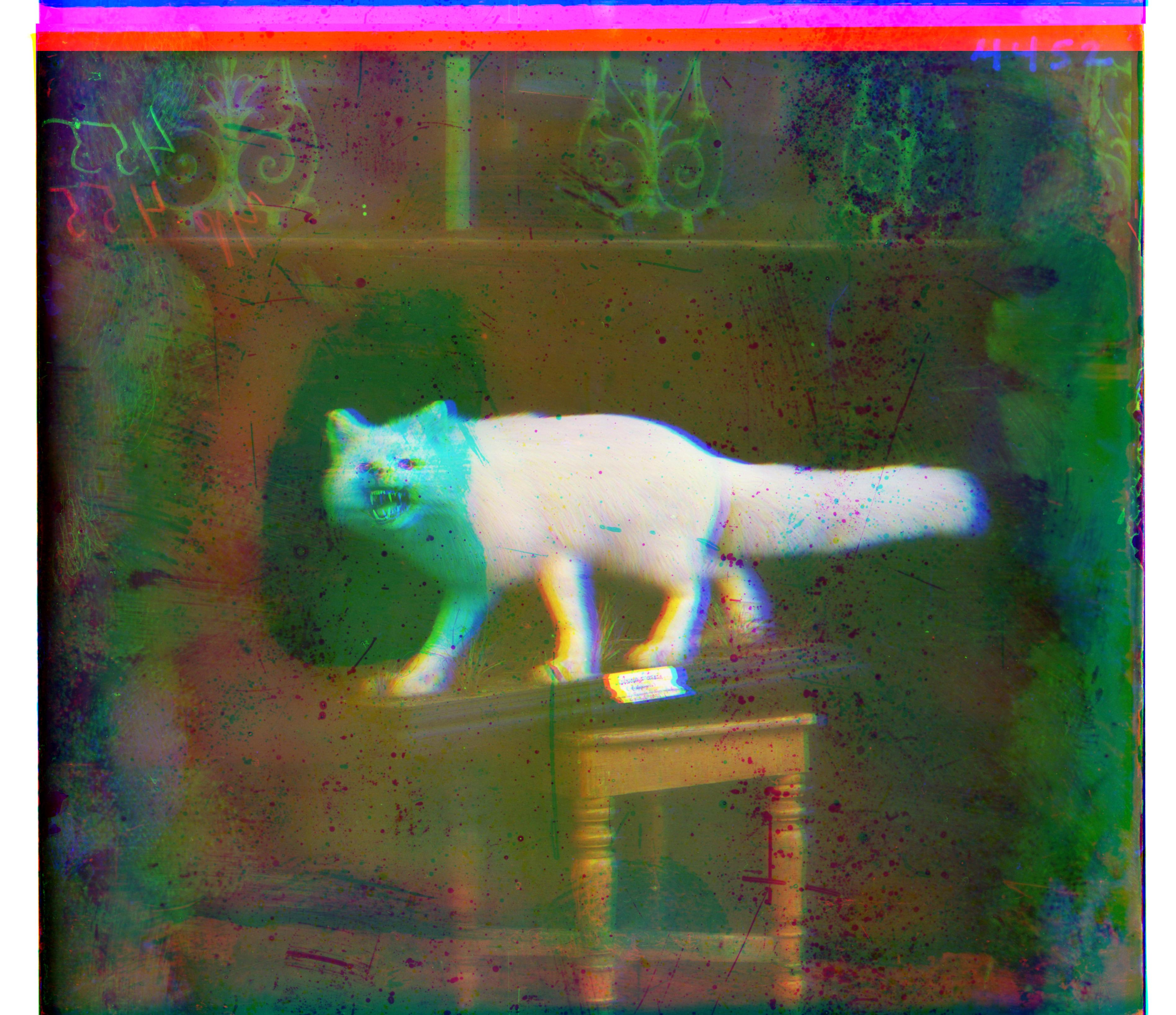

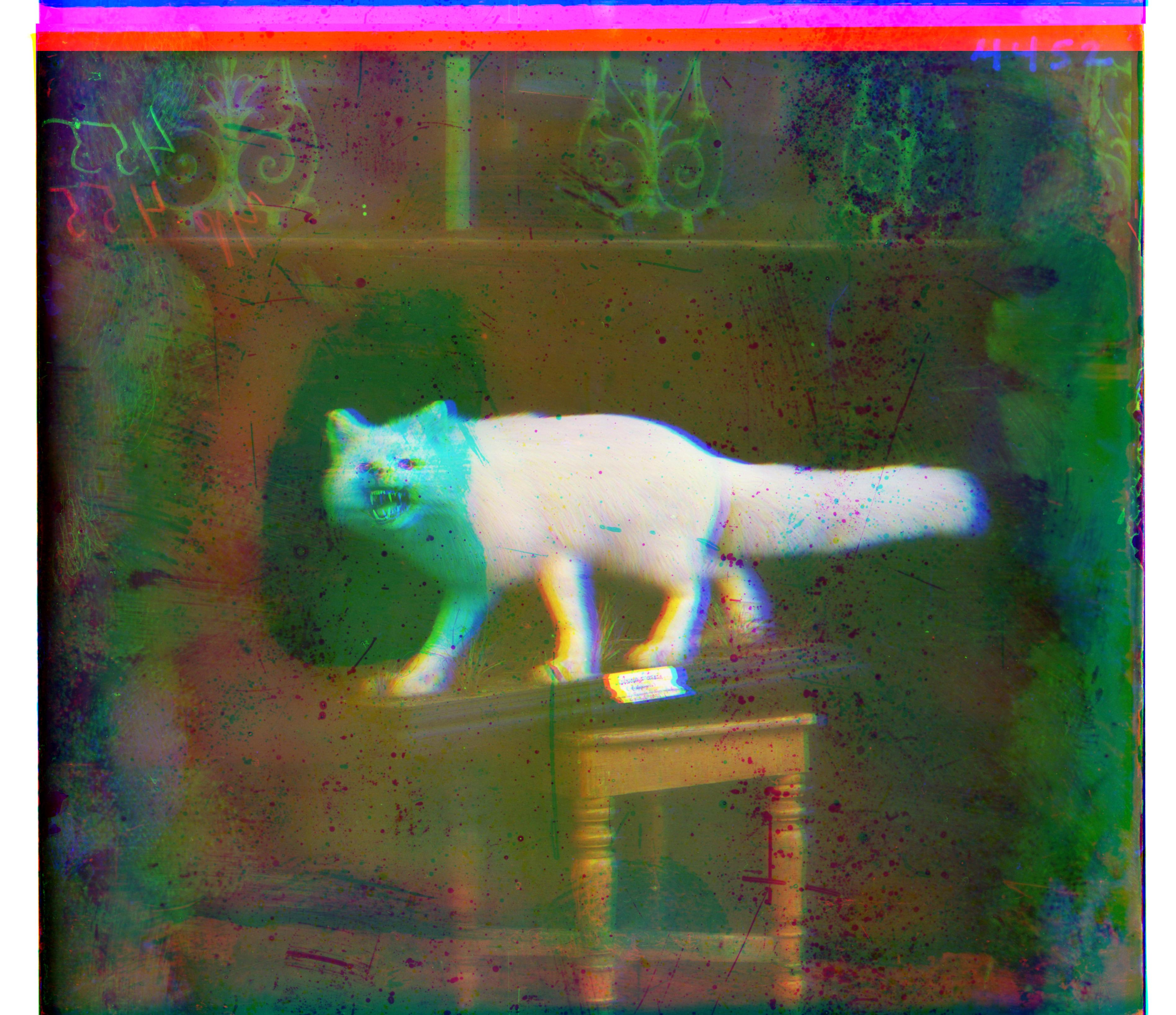

Fox

R: (-19, 164), G: (-9, 76)

|

Mulberries

R: (3, 124), G: (2, 53)

|

Rivers

R: (-6, 70), G: (-3, -10)

|

Result Analysis

For the most part, I am proud of the quality of many of the images. The Emir image does seem to have some blurriness, which might be fixable with more contrast adjustment and a different approach, such as a different metric or aligning on gradients instead of the raw channel values. The Fox image also has issues, but given how irregular the blotches are and that they aren't all on the border, I believe this is due to the quality of the original images.

Possible Future Work

This project could be improved in various ways, such as:

- White balancing
- More contrasting
- Automatic trimming of blended edges
- Using different metrics:
  - Cosine similarity
  - Gradients and other color representations
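As a rough illustration of the last two ideas, the sketch below shows one possible cosine similarity score and one possible gradient-based representation. Both are assumptions about how these could be done with NumPy, not something the project implemented, and the function names are hypothetical. Note that cosine similarity would be maximized during the search, whereas the L2 metric is minimized.

```python
import numpy as np

def cosine_similarity(a, b, eps=1e-12):
    """Cosine of the angle between two images flattened to vectors (1.0 = same direction)."""
    a, b = a.ravel(), b.ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b) + eps))

def gradient_magnitude(img):
    """Per-pixel gradient magnitude, which ignores constant brightness offsets."""
    gy, gx = np.gradient(np.asarray(img, dtype=np.float64))
    return np.hypot(gx, gy)
```

Scoring `gradient_magnitude(channel)` against `gradient_magnitude(reference)` compares edge structure instead of raw intensity, which may help when the channels differ strongly in brightness.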