Project 1 - Colorizing the Prokudin-Gorskii Photo Collection

Project overview

Each set of images in the Prokudin-Gorskii photo collection consists of three grayscale exposures of the same scene, taken through red, green, and blue filters; these correspond to the color channels used in color photography today. The goal of the project was to align these grayscale images and then stack them in RGB order to produce a colorized version of each image.
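A minimal sketch of the split-and-stack step, assuming each digitized plate is a single 2-D array with the three exposures stacked vertically in blue, green, red order (top to bottom), each one third of the plate's height. The helper names `split_plate` and `stack_rgb` are hypothetical, and the alignment that happens between the two calls is omitted.

```python
import numpy as np


def split_plate(plate):
    """Split a digitized plate into its (blue, green, red) exposures.

    Assumption: the exposures are stacked vertically, top to bottom, in
    blue, green, red order, each one third of the plate's height.
    Leftover rows (when the height is not divisible by 3) are dropped.
    """
    third = plate.shape[0] // 3
    blue = plate[:third]
    green = plate[third:2 * third]
    red = plate[2 * third:3 * third]
    return blue, green, red


def stack_rgb(red, green, blue):
    """Stack three aligned single-channel layers into an H x W x 3 RGB image."""
    return np.dstack([red, green, blue])


# Example: a fake 9 x 4 "plate" whose thirds are filled with 0, 1, 2.
plate = np.repeat(np.arange(3), 12).reshape(9, 4)
b, g, r = split_plate(plate)
rgb = stack_rgb(r, g, b)
print(rgb.shape)   # (3, 4, 3)
print(rgb[0, 0])   # [2 1 0]
```

Loading the plate (for example with `skimage.io.imread` followed by `skimage.img_as_float`) is left out; the sketch only needs a 2-D NumPy array.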

Single-scale implementation

For the single-scale implementation, I searched over every shift of up to 15 pixels left or right and up or down, aligning the green layer to the blue layer and the red layer to the blue layer. The aligned layers were then stacked in RGB order. To score each candidate shift, I tried both the sum of squared differences (SSD) and normalized cross-correlation (NCC) on the raw pixel values. The colorized images for both metrics are compared below: on these small images both metrics produce reasonably well-aligned results, and they find identical shifts.
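The single-scale search can be sketched as follows. This is an illustration rather than the exact code used here: it assumes the layers are same-sized 2-D NumPy arrays, shifts them with `np.roll`, and reports shifts as (rows, columns), which is assumed to match the order of the pairs in the captions. `ssd` and `align_exhaustive` are hypothetical names.

```python
import numpy as np


def ssd(a, b):
    """Sum of squared differences between two layers (lower is better)."""
    diff = a.astype(float) - b.astype(float)
    return float(np.sum(diff * diff))


def align_exhaustive(moving, reference, radius=15):
    """Return the (dy, dx) shift in [-radius, radius]^2 that minimizes SSD.

    `moving` is rolled (with wrap-around) by each candidate shift and
    compared against the fixed `reference` layer (blue in the write-up).
    Assumption: shifts are reported as (rows, columns).
    """
    best_shift, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):        # vertical shift (rows)
        for dx in range(-radius, radius + 1):    # horizontal shift (columns)
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = ssd(shifted, reference)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

Calling `align_exhaustive(g, b)` and `align_exhaustive(r, b)` gives the green and red shifts relative to blue; each layer is then rolled by its shift before stacking. Because `np.roll` wraps pixels around the edges, a common refinement is to score only an interior crop of each layer so that wrapped-around borders do not affect the score.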

Note that NCC is a similarity measure, so the best shift has the highest score, whereas SSD is a distance, so the best shift has the lowest score. At first I did not realize this: my align function was written for NCC and kept the highest score, so SSD performed very poorly. Once I noticed, I modified the align function to maximize the score for NCC and minimize it for SSD.
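One way to handle the opposite score directions is to record, for each metric, whether a higher score is better, and let the search compare accordingly. A minimal sketch, assuming float-convertible 2-D arrays; `ssd`, `ncc`, `METRICS`, and `align` are hypothetical names, and the NCC shown is the zero-mean variant (an assumption, since the write-up does not say which variant was used).

```python
import numpy as np


def ssd(a, b):
    """Sum of squared differences: lower means a better match."""
    diff = a.astype(float) - b.astype(float)
    return float(np.sum(diff * diff))


def ncc(a, b):
    """Zero-mean normalized cross-correlation: higher means a better match.

    Each layer is flattened and mean-centered; the result is the cosine of
    the angle between the two vectors, so it lies in [-1, 1].
    """
    a = a.astype(float).ravel()   # astype copies, so the inputs are untouched
    b = b.astype(float).ravel()
    a -= a.mean()
    b -= b.mean()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# For each metric: (scoring function, whether a higher score is better).
METRICS = {"ssd": (ssd, False), "ncc": (ncc, True)}


def align(moving, reference, metric="ssd", radius=15):
    """Exhaustive search that minimizes SSD but maximizes NCC."""
    score_fn, higher_is_better = METRICS[metric]
    best_shift, best_score = (0, 0), None
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            score = score_fn(np.roll(moving, (dy, dx), axis=(0, 1)), reference)
            if best_score is None:
                improved = True
            elif higher_is_better:
                improved = score > best_score
            else:
                improved = score < best_score
            if improved:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

An equivalent design is to always minimize and pass `-ncc` as the score; the explicit flag just makes the direction of each metric visible.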

SSD (green shift: (5, 2), red shift: (12, 3))

NCC (green shift: (5, 2), red shift: (12, 3))

SSD (green shift: (-3, 2), red shift: (3, 2))

NCC (green shift: (-3, 2), red shift: (3, 2))

SSD (green shift: (3, 3), red shift: (6, 3))

NCC (green shift: (3, 3), red shift: (6, 3))

Image pyramid

For the larger images, I implemented a five-level image pyramid. I first scored alignments with SSD and NCC on the raw pixels, but the images did not line up very well. To debug the pyramid, I displayed the intermediate images at each level to check whether the shifted layer lined up with the base (blue) layer. This is how I discovered that I was skipping a level of the pyramid: the lower-resolution levels stayed aligned, but the final full-resolution image was misaligned. The images shown here already include my attempt at automatic cropping, which is why their sizes differ.
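A coarse-to-fine search can be sketched recursively: estimate the shift on half-resolution copies, double it, and refine it at the current resolution. Doubling at every level, without skipping one, is the step that the bug described above broke. This sketch assumes 2 x 2 block averaging for downsampling, SSD as the score, a ±15-pixel search at the coarsest level, and a ±2-pixel refinement at each finer level; the function names and parameter values are illustrative, not taken from the original code.

```python
import numpy as np


def downsample(img):
    """Halve the resolution by averaging non-overlapping 2 x 2 blocks.

    An odd final row or column is dropped so the blocks tile exactly.
    """
    h, w = (img.shape[0] // 2) * 2, (img.shape[1] // 2) * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2]
                   + img[0::2, 1::2] + img[1::2, 1::2])


def search(moving, reference, center, radius):
    """Exhaustive SSD search over shifts within `radius` of `center`."""
    best_shift, best_score = center, np.inf
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            diff = np.roll(moving, (dy, dx), axis=(0, 1)) - reference
            score = np.sum(diff * diff)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def align_pyramid(moving, reference, levels=5, coarse_radius=15, fine_radius=2):
    """Coarse-to-fine alignment over `levels` pyramid levels (SSD score)."""
    moving = np.asarray(moving, dtype=float)
    reference = np.asarray(reference, dtype=float)
    if levels <= 1:
        # Coarsest level: a full search around zero shift.
        return search(moving, reference, (0, 0), coarse_radius)
    # Estimate the shift on half-resolution copies (one level down).
    dy, dx = align_pyramid(downsample(moving), downsample(reference),
                           levels - 1, coarse_radius, fine_radius)
    # One coarse pixel spans two pixels here, so double the estimate
    # and refine it at this level before handing it to the finer level.
    return search(moving, reference, (2 * dy, 2 * dx), fine_radius)
```

With five levels the coarsest image is 1/16 of full resolution, so a ±15-pixel search there covers roughly ±240 full-resolution pixels, more than the largest shift in the results listed below (177 pixels). Because `np.roll` wraps around, the coarse radius should stay below half the coarsest image's size.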

SSD (green shift: (-3, 7), red shift: (107, 17))

NCC (green shift: (-3, 7), red shift: (107, 17))

Improvement using gradient scoring

Next, I changed the scoring metric: instead of comparing raw pixels, I computed the gradient of each image and then calculated the SSD between the gradients. The idea was that the gradient encodes the relationship between neighboring pixels, which should stay consistent even when the overall brightness of the raw layers differs. SSD on the gradients performed much better, and the layers stayed aligned at every level of the pyramid.
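Scoring on gradients might look like the sketch below. The write-up does not say which gradient operator was used, so this assumes NumPy's central-difference `np.gradient` and compares gradient magnitudes; `gradient_magnitude`, `gradient_ssd`, and `align_on_gradients` are hypothetical names. Note that a gradient is unchanged when a constant brightness offset is added to a layer, but it still scales if the layer's contrast is scaled.

```python
import numpy as np


def gradient_magnitude(img):
    """Per-pixel gradient magnitude from NumPy's finite differences.

    Assumption: central differences via np.gradient; other operators
    (e.g. Sobel filters) would also fit the description.
    """
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    return np.hypot(gx, gy)


def gradient_ssd(a, b):
    """SSD between the gradient magnitudes of two layers (lower is better)."""
    diff = gradient_magnitude(a) - gradient_magnitude(b)
    return float(np.sum(diff * diff))


def align_on_gradients(moving, reference, radius=15):
    """Exhaustive search that scores each shift with gradient SSD."""
    ref_grad = gradient_magnitude(reference)   # computed once, reused below
    best_shift, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            diff = gradient_magnitude(shifted) - ref_grad
            score = np.sum(diff * diff)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift
```

The same scoring function can replace SSD inside the pyramid search; only the per-shift score changes.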

emir.tif - red shift: (107, 40), green shift: (49, 23)

harvesters.tif - red shift: (124, 14), green shift: (60, 17)

icon.tif - red shift: (90, 23), green shift: (40, 16)

lady.tif - red shift: (120, 13), green shift: (56, 9)

melons.tif - red shift: (177, 12), green shift: (80, 10)

onion_church.tif - red shift: (107, 36), green shift: (52, 24)

self_portrait.tif - red shift: (176, 37), green shift: (78, 29)

three_generations.tif - red shift: (111, 8), green shift: (54, 12)

train.tif - red shift: (85, 29), green shift: (41, 1)

village.tif - red shift: (137, 22), green shift: (65, 12)

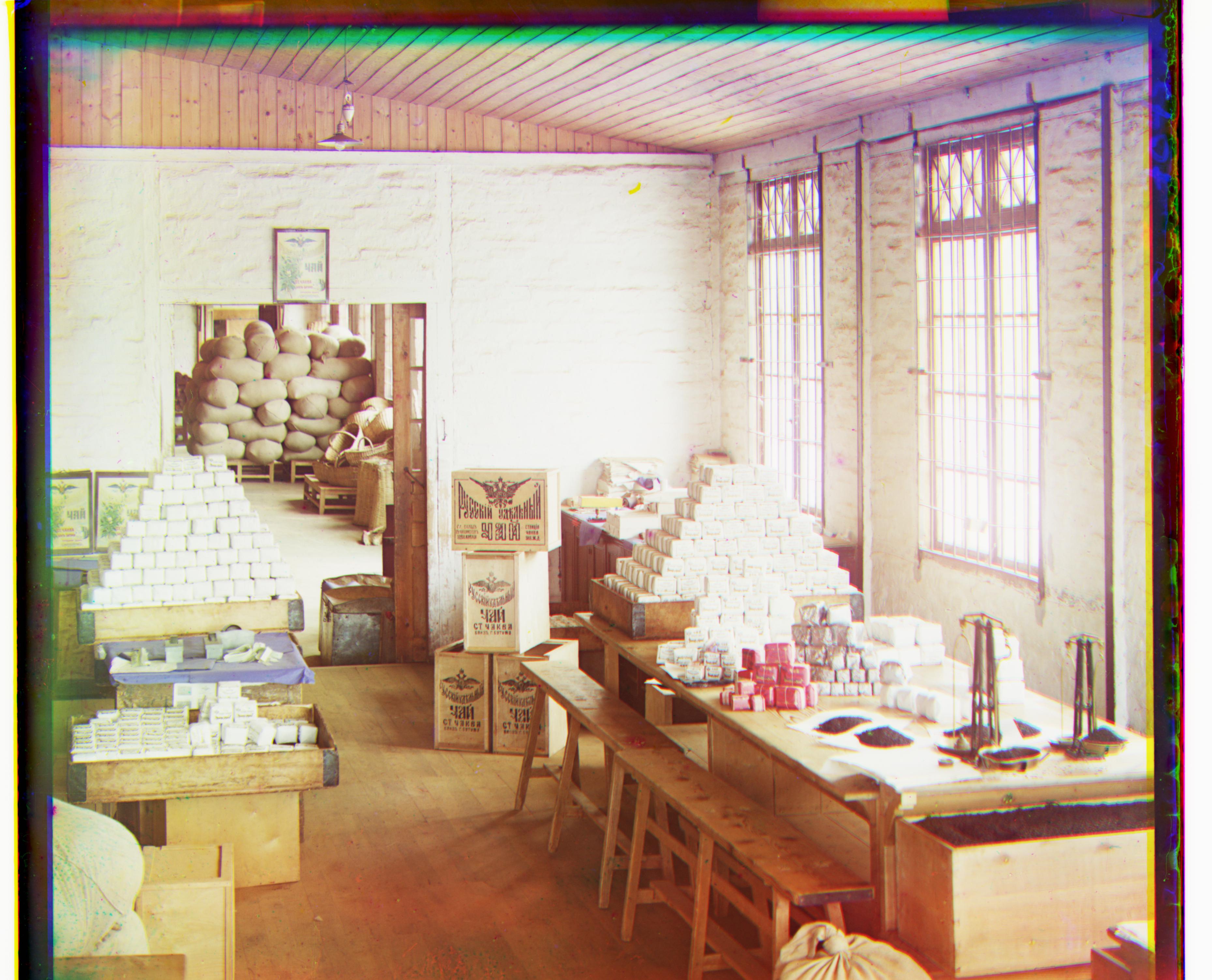

workshop.tif - red shift: (102, -12), green shift: (53, -1)

red shift: (35, 37), green shift: (11, 18)

red shift: (31, 65), green shift: (5, 39)

red shift: (132, -17), green shift: (63, 0)

red shift: (115, -28), green shift: (18, -12)

Automatic cropping

I attempted to implement automatic cropping using the number of pixels each layer was shifted (rolled): along each axis, I cropped by the largest absolute shift of the two shifted layers. For example, if the red layer was rolled 100 pixels vertically and the green layer was rolled -20 pixels, the autocrop function would crop 100 pixels off both the top and the bottom. Because I did not implement edge detection, the plate borders are often still visible, especially on the left and right: the borders of the original plate negatives tend to be horizontally aligned already, so there is little shift, and therefore little cropping, in that direction.
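The cropping rule above fits in a few lines. A minimal sketch, assuming `shifts` holds the (rows, columns) roll applied to each shifted layer; `autocrop` is a hypothetical name.

```python
import numpy as np


def autocrop(img, shifts):
    """Crop each axis by the largest absolute shift any layer received on it.

    `shifts` lists the (dy, dx) roll applied to each shifted layer
    (assumed order: rows, columns); the same margin is removed from
    both ends of an axis.
    """
    margin_y = max(abs(dy) for dy, _ in shifts)
    margin_x = max(abs(dx) for _, dx in shifts)
    h, w = img.shape[:2]
    return img[margin_y:h - margin_y, margin_x:w - margin_x]


# Red rolled 100 rows, green rolled -20 rows (the example in the text).
img = np.zeros((300, 200, 3))
cropped = autocrop(img, [(100, 5), (-20, 3)])
print(cropped.shape)   # (100, 190, 3)
```

Because the margins come only from the shifts, borders that were never shifted are never cropped, which is the limitation described above.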