Images of the Russian Empire: Colorizing the Prokudin-Gorskii photo collection

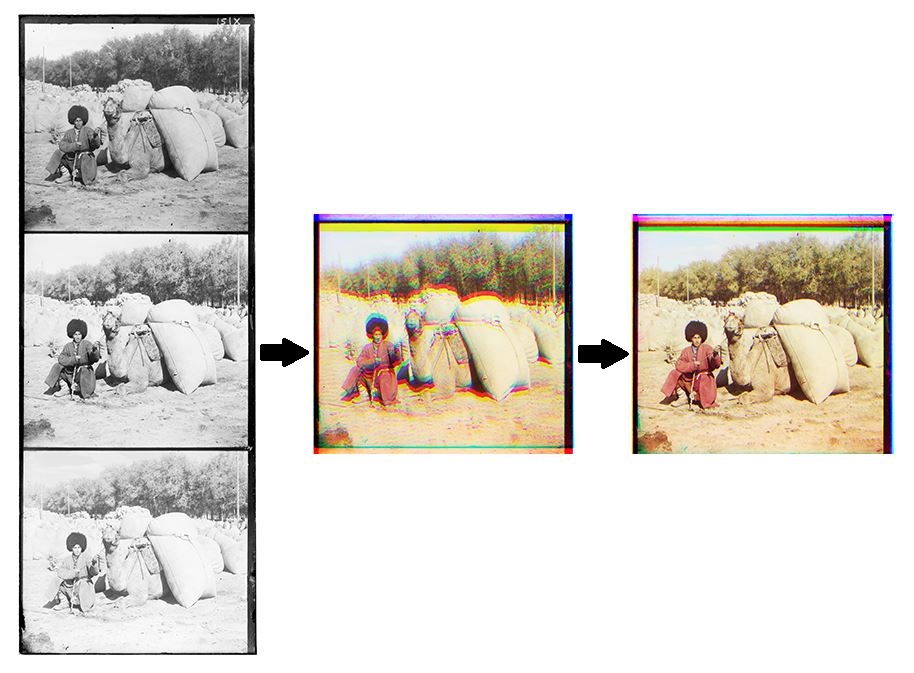

Kenny Chen

Sergei Mikhailovich Prokudin-Gorskii (1863-1944) [Сергей Михайлович Прокудин-Горский] was way ahead of his time: more than 100 years ago, he predicted that color photography would be the new wave. He got permission from the Tsar to travel across the Russian Empire and take color photographs. He did this by recording three exposures of each scene onto a glass plate, using a red, a green, and a blue filter. The goal of this project was to align these three color channels using image processing techniques so that they produce a color image with as few artifacts as possible.

Approach

The three channels are initially stacked vertically in one large image, so we first separate them by splitting the image into thirds along its height: the top third is the blue channel, the middle third is the green channel, and the bottom third is the red channel.
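A minimal sketch of the splitting step, assuming the scanned plate has already been loaded as a 2-D grayscale NumPy array (for example with `skimage.io.imread`). The function name `split_channels` is hypothetical, and any leftover rows when the height is not divisible by 3 are simply dropped:

```python
import numpy as np

def split_channels(plate: np.ndarray) -> tuple[np.ndarray, np.ndarray, np.ndarray]:
    """Split a vertically stacked plate into (b, g, r), ordered top to bottom."""
    height = plate.shape[0] // 3  # rows beyond 3 * height are discarded
    b = plate[:height]
    g = plate[height:2 * height]
    r = plate[2 * height:3 * height]
    return b, g, r

# Usage with a synthetic 6x4 "plate": rows 0-1 are blue, 2-3 green, 4-5 red.
plate = np.arange(24, dtype=np.float64).reshape(6, 4)
b, g, r = split_channels(plate)
print(b.shape, g.shape, r.shape)  # (2, 4) (2, 4) (2, 4)
```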

I started with the lower-resolution JPEG images and a naive method: an exhaustive search over every $x$ and $y$ offset in the range [-20, 20] pixels.

I compare both the $r$ and $g$ channels to the anchor channel, $b$. For each candidate offset, the shifted channel $j \in \{r, g\}$ is compared to $b$ by computing the Sum of Squared Differences (SSD):

$$SSD(j, b)=\sum_x\sum_y(j_{x,y}-b_{x,y})^2$$
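The SSD formula translates directly into NumPy. This is a minimal sketch (the name `ssd` is illustrative); it assumes both channels have the same shape and casts to float so integer pixel types cannot overflow:

```python
import numpy as np

def ssd(j: np.ndarray, b: np.ndarray) -> float:
    """Sum of squared differences between channel j and the anchor channel b."""
    diff = j.astype(np.float64) - b.astype(np.float64)
    return float(np.sum(diff ** 2))

b = np.array([[0.0, 1.0], [2.0, 3.0]])
j = np.array([[1.0, 1.0], [2.0, 5.0]])
print(ssd(j, b))  # (1-0)^2 + 0 + 0 + (5-3)^2 = 5.0
```

A lower score means a better match, so the search keeps the offset with the smallest SSD.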

Normalized Cross-Correlation (NCC) was also tried, but the $SSD$ metric ended up working better.
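For comparison, here is a sketch of NCC as it is commonly defined: flatten both channels, subtract their means, scale them to unit length, and take the dot product. The function name `ncc` and the choice to return 0.0 for a constant channel are assumptions for illustration. Unlike SSD, NCC is maximized, and it is insensitive to a uniform brightness offset or scaling of either channel:

```python
import numpy as np

def ncc(j: np.ndarray, b: np.ndarray) -> float:
    """Normalized cross-correlation of two equally sized channels, in [-1, 1]."""
    jv = j.astype(np.float64).ravel()  # astype copies, so the inputs are untouched
    bv = b.astype(np.float64).ravel()
    jv -= jv.mean()
    bv -= bv.mean()
    denom = np.linalg.norm(jv) * np.linalg.norm(bv)
    if denom == 0.0:
        return 0.0  # a constant channel has no structure to correlate
    return float(np.dot(jv, bv) / denom)

b = np.array([[0.0, 1.0], [2.0, 3.0]])
print(ncc(2 * b + 10, b))  # approximately 1.0: same pattern, different brightness
```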

We choose the offsets that give the lowest $SSD$, shift the $r$ and $g$ channels by those offsets to align them to the $b$ channel, and stack the three channels to create a color image.
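The search-and-stack step might look like the following sketch. It assumes offsets are expressed as `(dy, dx)` (row, column) and uses `np.roll`, which shifts circularly so that pixels pushed off one edge wrap around to the other; the names `ssd` and `align_naive` are hypothetical, and the test images are synthetic shifted copies rather than real plates:

```python
import numpy as np

def ssd(j: np.ndarray, b: np.ndarray) -> float:
    """Sum of squared differences between two equally sized channels."""
    return float(np.sum((j.astype(np.float64) - b.astype(np.float64)) ** 2))

def align_naive(j: np.ndarray, b: np.ndarray, radius: int = 20) -> tuple[int, int]:
    """Try every shift (dy, dx) in [-radius, radius]^2 and return the one whose
    shifted copy of j has the lowest SSD against the anchor b."""
    best_shift, best_score = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            score = ssd(np.roll(j, (dy, dx), axis=(0, 1)), b)
            if score < best_score:
                best_shift, best_score = (dy, dx), score
    return best_shift

# Usage on synthetic data: g and r are copies of b with known circular shifts.
rng = np.random.default_rng(0)
b = rng.random((60, 60))
g = np.roll(b, (-3, 5), axis=(0, 1))
r = np.roll(b, (4, -2), axis=(0, 1))
g_off = align_naive(g, b)  # (3, -5): the shift that undoes g's displacement
r_off = align_naive(r, b)  # (-4, 2)
color = np.dstack([np.roll(r, r_off, axis=(0, 1)),
                   np.roll(g, g_off, axis=(0, 1)),
                   b])  # stacked in RGB order, shape (60, 60, 3)
```

On real plates the wrapped-around pixels add noise to the score, which is one reason to crop the borders before comparing.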

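This naive algorithm works fine for small images but is too slow for large ones, because the cost of the exhaustive search grows with both the number of pixels and the size of the search window.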

For larger images, we use a recursive image pyramid to speed up the computation, using the offset found at a coarser level of the pyramid to guide the search at the finer level below it. At each step, the algorithm rescales both images to half their size and calls itself on the resized images. The offset returned from that call is multiplied by 2 (because the image was scaled down by a factor of 2), and this scaled offset becomes the center of a much smaller search range for the naive algorithm at the current resolution.

The recursion keeps going up the pyramid until the image falls below a cutoff resolution or a set number of levels is reached.
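A sketch of the recursive pyramid, under several assumptions that are not from the original: downsampling averages each 2x2 block (a library resampler such as `skimage.transform.rescale` would be an alternative), and the values of the hypothetical parameters `min_size`, `max_levels`, `coarse_radius`, and `fine_radius` are illustrative rather than tuned. Shifts are `(dy, dx)` and use circular `np.roll`:

```python
import numpy as np

def ssd(j: np.ndarray, b: np.ndarray) -> float:
    return float(np.sum((j - b) ** 2))

def search(j, b, center, radius):
    """Naive search over shifts within `radius` of `center`; returns the best (dy, dx)."""
    cy, cx = center
    best_shift, best_score = center, np.inf
    for dy in range(cy - radius, cy + radius + 1):
        for dx in range(cx - radius, cx + radius + 1):
            score = ssd(np.roll(j, (dy, dx), axis=(0, 1)), b)
            if score < best_score:
                best_shift, best_score = (dy, dx), score
    return best_shift

def downsample(img):
    """Halve the resolution by averaging each 2x2 block (drops an odd last row/column)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])

def align_pyramid(j, b, min_size=64, max_levels=6, coarse_radius=20, fine_radius=2, level=0):
    """Recursively estimate the shift that aligns j to b."""
    # Base case: small (or deep) enough for a full naive search around (0, 0).
    if min(j.shape) <= min_size or level >= max_levels:
        return search(j, b, (0, 0), coarse_radius)
    # Recurse on half-size images, then double the coarse offset...
    dy, dx = align_pyramid(downsample(j), downsample(b), min_size, max_levels,
                           coarse_radius, fine_radius, level + 1)
    # ...and refine it with a small search around the doubled offset.
    return search(j, b, (2 * dy, 2 * dx), fine_radius)

rng = np.random.default_rng(1)
b = rng.random((256, 256))
j = np.roll(b, (12, -16), axis=(0, 1))
print(align_pyramid(j, b))  # (-12, 16)
```

The full 41x41 search only happens at the coarsest level; every finer level checks just 5x5 candidates, which is where the speedup comes from.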

The naive algorithm was also modified to add some preprocessing. Because each image has a border, a fraction of each side was cropped away before any comparison was done. The pixel values were also normalized across the rows of the image. With this preprocessing, most of the images came out mostly aligned.
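One way the preprocessing could be implemented is sketched below. The crop fraction of 0.1 is a hypothetical value, and "normalized across rows" is interpreted here as giving each row zero mean and unit standard deviation; both are assumptions. The crop is applied only when scoring: the chosen offset is then applied to the full, uncropped channel. Cropping after the shift also discards most of the pixels that `np.roll` wraps around:

```python
import numpy as np

def crop_border(img: np.ndarray, frac: float = 0.1) -> np.ndarray:
    """Remove `frac` of the height from top and bottom and `frac` of the width from each side."""
    h, w = img.shape
    dy, dx = int(h * frac), int(w * frac)
    return img[dy:h - dy, dx:w - dx]

def normalize_rows(img: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Rescale each row to zero mean and unit standard deviation."""
    img = img.astype(np.float64)
    mean = img.mean(axis=1, keepdims=True)
    std = img.std(axis=1, keepdims=True)
    return (img - mean) / (std + eps)  # eps guards against constant rows

def preprocessed_ssd(j_shifted: np.ndarray, b: np.ndarray, frac: float = 0.1) -> float:
    """SSD computed only on the cropped, row-normalized interiors of both channels."""
    a = normalize_rows(crop_border(j_shifted, frac))
    c = normalize_rows(crop_border(b, frac))
    return float(np.sum((a - c) ** 2))

img = np.arange(100, dtype=np.float64).reshape(10, 10)
print(crop_border(img).shape)  # (8, 8)
```

Because each row is rescaled independently, a channel that is uniformly brighter or darker than the anchor scores close to zero, which helps when exposures differ between filters.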

Results

The following slideshow compares each image side by side, before and after alignment. I initially ran into a problem where the Emir image was badly misaligned even though all the other images looked fine. I fixed this by tuning some of the program's parameters, such as the minimum image resolution and the number of iterations of the algorithm.

Other Examples

Failures

Most of the resulting images look fine unless scrutinized closely. For example, Emir looks slightly blurry when we zoom in. This is likely because the brightness of his blue dress differs widely across the color channels, so the channels do not match well pixel by pixel.

Melons also shows some artifacts, possibly because the scene contains many similarly shaped objects (the melons themselves).

The self-portrait also looks a bit blurry. This may be because the scene is highly varied: there is no large region of solid color, such as the sky found in many of the other images.