Overview

We seek to colorize images taken from the Prokudin-Gorskii photo collection, by merging three separate channels (red, green, blue) into a single colored image. Prokudin-Gorskii used three separate cameras with color filters to simultaneously capture the three shots per image. As each image was taken from a slightly different location, we cannot naively stack the channels on top of each other and must instead devise an intelligent approach to shift and stack them.

Approach

Per the project spec I implemented the Sum of Squared Differences (SSD) image difference metric, aka the squared \(L_2\)-norm of image pixel differences: \[\text{SSD}(I_1, I_2) = \sum_i\sum_j (I_1[i,j] - I_2[i,j])^2 = \|I_1 - I_2\|_2^2\]

As the images provided had uneven borders, I did not compute the metric over the edges of each channel (defined as the outer 10% of the image dimension).

For the small .jpg images (about 400 by 300 pixels), I brute-forced the optimal shifts within a fixed range (+/- 30 pixels in either dimension).

Image Pyramids

For the large .tif images, it became prohibitively expensive to brute-force search. For a rouglhy 3000 by 3000 pixel image, checking for shifts no larger than 10% in either direction would require \(600^2 = 360000\) computations. I implemented an image pyramid to improve efficiency, where each successive layer scaled each dimension down by a factor of 2.

In theory, this allows you to search for the optimal shift in runtime logarithmic to the number of pixels considered, but only if we assume the image difference metric is convex with respect to a shift in each direction (that is, the best shift at the finest resolution is certain to be within the area captured by the best shift at a coarser resolution). While this is not true for our difference function (\(L_2\) norms are convex in their argument, but not the individual elements in the difference), we crossed our fingers that the assumption was more or less true for the images we had.

I also did not want the search to be performed at too coarse a scale (lest important details be lost), so I decided to only build up the image pyramid until the image was about the size of the small .jpgs.

I performed the same brute force approach on the top (smallest) images in the image pyramids, saving the shift at that level, stepping down to the next largest image and applying the (doubled) previous shift, and checking all 1-pixel shifts ( because a scaling factor of two means that we only need to check the four pixels within a single pixel from the higher level). I repeated this process down the rest of the pyramid and returned the best shift, upscaled to the original image dimensions.

This method yielded acceptable results but there were some failure cases where the alignment was slightly but noticeably incorrect, like emir.tif (face closeup pictured below).

As the spec said, different colors in different parts of the image mean that we cannot assume uniform pixel values per each channel. For example, the emir's blue robe would have a high blue intensity but a low red intensity (and therefore a high difference in the metric) even with a perfect alignment. I think the failures were caused by this fact.

Image Gradients

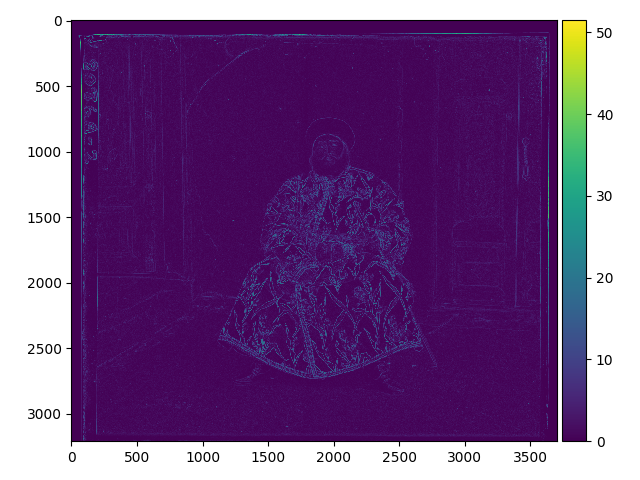

I decided to try a new approach- instead of minimizing the difference between channel pixel values across the entire image, I'd only want to ensure that the edges in each channel lined up. We can detect edges by seeing when pixel values suddenly change. To do so, I decided to process each channel such that each pixel was the normed gradient, or the intensity of color change at that pixel.

For a two-dimensional image \(I\), we can approximate the gradient as follows: \[\nabla I[x,y] = \begin{bmatrix} \frac{\partial I}{\partial x} & \frac{\partial I}{\partial y} \end{bmatrix} \approx \begin{bmatrix} \frac{I[x+1][y] - I[x-1][y]}{2} & \frac{I[x][y+1] - I[x][y-1]}{2} \end{bmatrix} \]

Plotting the gradient of a color channel for the emir yields the following:

Passing in each channel's gradient intensity map instead of the channel itself yielded far better results, such as a more aligned emir:

The results of my approach are displayed below.

Small Images

| title | shift (x, y) |

aligned image |

|---|---|---|

cathedral.jpg |

G: (2, 5) R: (3, 12) |

|

monastery.jpg |

G: (2, -3) R: (2, 3) |

|

tobolsk.jpg |

G: (2, 3) R: (3, 6) |

|

Large Images (.tifs)

| title | shift (x, y) |

aligned image |

|---|---|---|

emir.tif |

G: (24, 48) R: (40, 104) |

|

harvesters.tif |

G: (14, 56) R: (12, 124) |

|

icon.tif |

G: (16, 40) R: (22, 88) |

|

lady.tif |

G: (8, 56) R: (8, 116) |

|

melons.tif |

G: (8, 79) R: (12, 176) |

|

onion_church.tif |

G: (24, 48) R: (36, 104) |

|

self_portrait.tif |

G: (28, 77) R: (36, 175) |

|

three_generations.tif |

G: (12, 48) R: (8, 108) |

|

train.tif |

G: (2, 40) R: (29, 84) |

|

village.tif |

G: (10. 64) R: (20, 136) |

|

workshop.tif |

G: (-2, 52) R: (-16, 104) |

|

Other Collection Images

| title | shift (x, y) |

aligned image |

|---|---|---|

st_george.tif |

G: (22, 72) R: (32, 152) |

|