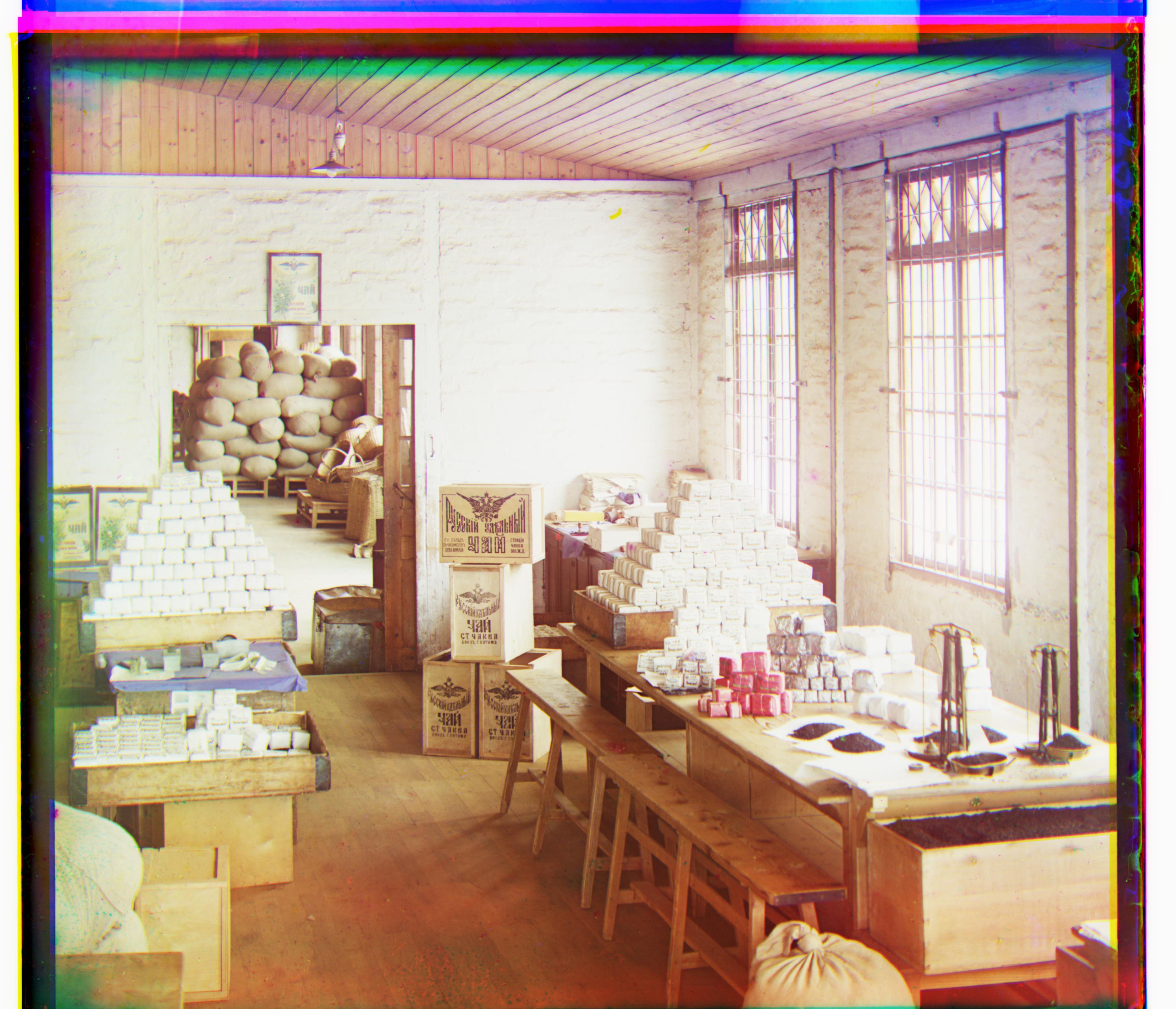

Sergei Mikhailovich Prokudin-Gorskii traveled across the Russian Empire in the early 20th century, taking photographs of everything he could find. He wanted to capture these images in color, not just black and white, but all he had was a black and white camera. His solution was to take each image three times: once with a red filter, once with a green filter, and once with a blue filter. By using the greyscale values of the red-filtered image as the red channel of an RGB image, and likewise for green and blue, we can recover the color photographs.
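Recombining the three exposures is a single stacking operation. This is a minimal sketch, assuming the three exposures are already aligned, same-sized 2D NumPy arrays with values in [0, 1]; `compose_rgb` is a hypothetical helper name.

```python
import numpy as np


def compose_rgb(red, green, blue):
    """Stack three same-sized greyscale exposures into one H x W x 3 RGB image.

    Each exposure's brightness becomes the intensity of the matching color channel.
    """
    return np.dstack([red, green, blue])


# Usage: a tiny 4 x 5 example where every pixel ends up as (0.0, 1.0, 0.5).
rgb = compose_rgb(np.zeros((4, 5)), np.ones((4, 5)), np.full((4, 5), 0.5))
print(rgb.shape)  # (4, 5, 3)
```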

The tricky part is aligning the three images. The input to my algorithm was a single scan with the three exposures stacked vertically, so I began by slicing it into three equally sized pieces. The edges of the plates are often damaged by age, warping, and scratches, and these corrupted borders would have interfered with my alignment metric, so I cropped 10% of the image off each side before running the alignment (though I left the borders in the resulting images below).
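The slicing and cropping step might look like the sketch below. It assumes the scan is a 2D NumPy array and that the plates are stacked blue, green, red from top to bottom; that order is an assumption about the scans and is worth checking against your own data. The function names are hypothetical.

```python
import numpy as np


def split_plates(stacked):
    """Split a vertically stacked scan into three equally sized plates.

    Assumes (hypothetically) a top-to-bottom order of blue, green, red.
    Leftover rows from a height not divisible by 3 are dropped at the bottom.
    """
    height = stacked.shape[0] // 3
    blue = stacked[:height]
    green = stacked[height:2 * height]
    red = stacked[2 * height:3 * height]
    return blue, green, red


def crop_interior(plate, frac=0.1):
    """Remove `frac` of the plate from each side so damaged borders don't affect the metric."""
    h, w = plate.shape
    dy, dx = int(h * frac), int(w * frac)
    return plate[dy:h - dy, dx:w - dx]


# Usage on a fake 300 x 100 scan: each plate is 100 x 100, and 80 x 80 after cropping.
scan = np.random.default_rng(0).random((300, 100))
b, g, r = split_plates(scan)
print(crop_interior(b).shape)  # (80, 80)
```

The crop is only used while searching for the offset; the offset found on the cropped plates can then be applied to the full plates.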

The goal of my algorithm is to find displacements of the red and green plates so that they align with the blue plate. For the low-resolution JPEG images, I simply used np.roll to try every displacement in a 40x40 window, choosing the one that minimized the alignment metric.

I tried out two metrics. The first is the sum of squared differences (SSD): subtract the two images, square each difference, and sum the results. It is minimized when the two images are most similar, which is hopefully when they are aligned. The second flattens the two images into vectors and returns their negative inner product. For vectors of fixed length, the inner product is largest when the two vectors point in the same direction, so the negative inner product should be smallest when the two images are aligned (i.e. they have the same unit vector but different magnitudes). Through trial and error, I found that the first metric produced better-looking alignments.

For higher-resolution images, searching through a 40x40 window would have taken too long, so I used an image pyramid instead. The image is downsampled to a lower resolution (I used 50x50 or lower), and the naive algorithm finds an offset at that level, say [2, 3]. This offset is returned to the previous recursive call, which is aligning images at twice the resolution, and converted to the new resolution by multiplying its components by the scaling factor (2), so it becomes [4, 6]. It then serves as the center of a much smaller search window (6x6 instead of 40x40). A series of 6x6 searches on progressively higher-resolution images turns out to be much faster than one big 40x40 search.
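The exhaustive search, the two metrics, and the pyramid can be sketched with NumPy as follows. This is a minimal illustration under assumptions, not the code behind the results: the function names, the 2x2 block-average downsampling, the 50-pixel stopping size, and the search radii (20, giving a 41x41 window, and 3, giving a 7x7 window, to approximate the 40x40 and 6x6 windows described above) are all choices made for this example.

```python
import numpy as np


def ssd(a, b):
    """Sum of squared differences: smaller means the images are more similar."""
    return np.sum((a - b) ** 2)


def neg_inner_product(a, b):
    """Negative inner product of the flattened images: smaller should mean better aligned."""
    return -np.dot(a.ravel(), b.ravel())


def align_exhaustive(moving, fixed, radius=20, center=(0, 0), metric=ssd):
    """Try every (dy, dx) within `radius` of `center`; return the shift minimizing `metric`."""
    best_score, best_shift = np.inf, center
    for dy in range(center[0] - radius, center[0] + radius + 1):
        for dx in range(center[1] - radius, center[1] + radius + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))
            score = metric(shifted, fixed)
            if score < best_score:
                best_score, best_shift = score, (dy, dx)
    return best_shift


def downsample(img):
    """Halve the resolution by averaging 2x2 blocks (drops an odd trailing row/column)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])


def align_pyramid(moving, fixed, min_size=50, radius=3, metric=ssd):
    """Align a half-resolution copy recursively, then refine the doubled offset locally."""
    if max(moving.shape) <= min_size:
        # Coarsest level: fall back to the wide exhaustive search.
        return align_exhaustive(moving, fixed, radius=20, metric=metric)
    coarse = align_pyramid(downsample(moving), downsample(fixed), min_size, radius, metric)
    center = (2 * coarse[0], 2 * coarse[1])  # scale the coarse offset up by the factor 2
    return align_exhaustive(moving, fixed, radius=radius, center=center, metric=metric)


# Usage: hide a known shift in random data and recover it.
rng = np.random.default_rng(0)
fixed = rng.random((200, 200))
moving = np.roll(fixed, (-8, 12), axis=(0, 1))
print(align_pyramid(moving, fixed))  # (8, -12)
```

The returned `(dy, dx)` is the shift to pass to `np.roll` so the moving plate lines up with the fixed one. One property of this particular sketch: because a circular `np.roll` does not change an image's norm, and SSD equals `|a|^2 + |b|^2 - 2 a·b`, the two metrics rank shifts identically here; they can only disagree if the compared region changes with the shift, for example when cropping after rolling.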

My algorithm failed on the Emir. By inspection, the green plate aligned almost perfectly with the blue plate. But the red plate got a wrong displacement at the top (coarsest level) of the image pyramid, and the error was only exacerbated as the algorithm descended the pyramid. It appeared to avoid overlapping the two versions of the Emir's robe. This is likely because the robe is very blue and has little red: it is bright in the blue plate and dark in the red plate, so the metric I used (SSD) calculated a very large error even for the correct alignment. Moving the red plate's robe over the ground produced a smaller error, so the algorithm optimized for that.
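The failure can be reproduced on a toy example. The sketch below uses a made-up 1D scanline, not the actual Emir data: the ground has the same mid brightness (0.5) in both plates, while a "robe" region is bright (1.0) in the blue plate and dark (0.0) in the red plate. SSD scores the correct alignment worse than a shift that slides the red robe onto the ground.

```python
import numpy as np


def ssd(a, b):
    """Sum of squared differences between two equally shaped arrays."""
    return float(np.sum((a - b) ** 2))


# Hypothetical scanline: ground is 0.5 in both plates; the "robe" (indices 5-9)
# is bright in the blue plate but dark in the red plate.
blue = np.full(20, 0.5)
blue[5:10] = 1.0
red = np.full(20, 0.5)
red[5:10] = 0.0

# Score every shift of the red scanline against the blue one.
scores = {shift: ssd(np.roll(red, shift), blue) for shift in range(-8, 9)}
print(scores[0])  # 5.0: correct alignment, each robe pixel differs by 1.0
print(scores[5])  # 2.5: red robe moved onto the ground, each mismatch is only 0.5
```

At the correct shift, every robe pixel contributes `1.0 ** 2`; after the shift, ten pixels each contribute only `0.5 ** 2`, so the minimum lands on a wrong displacement.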