CS 194 - Problem 3 - Face Morphing

Haoyan Huo

Warping between faces

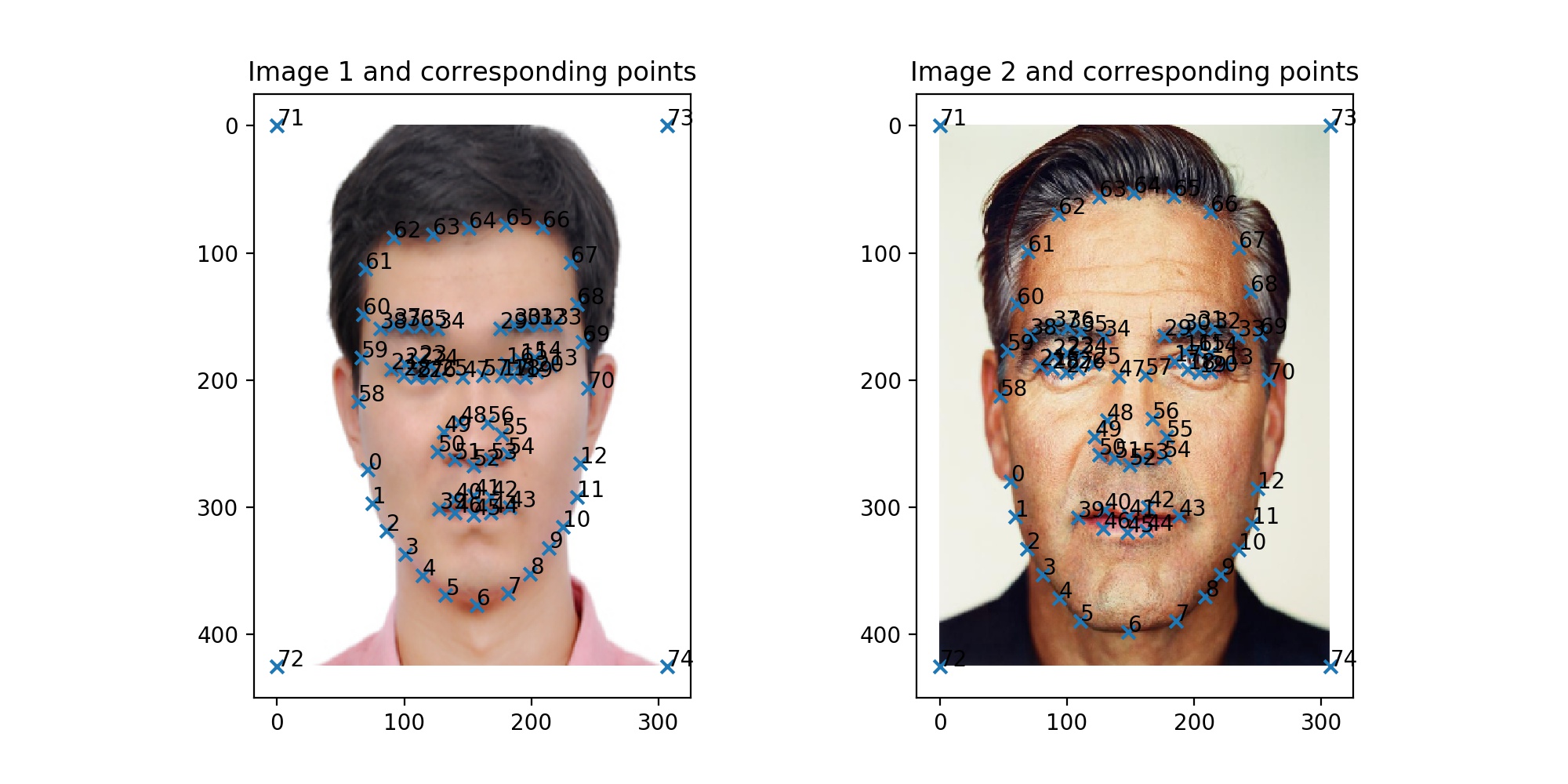

Defining correspondences

The first step in morphing faces is to define correspondences between the two faces. Here I used the face of George. In general, we should define as many correspondences as possible. However, we also need to consider the complexity of the triangulation and the difficulty of annotating the points accurately. Thus, I defined correspondences for the chin, eyes, eyebrows, nose, mouth, and the outline of the face, as follows.

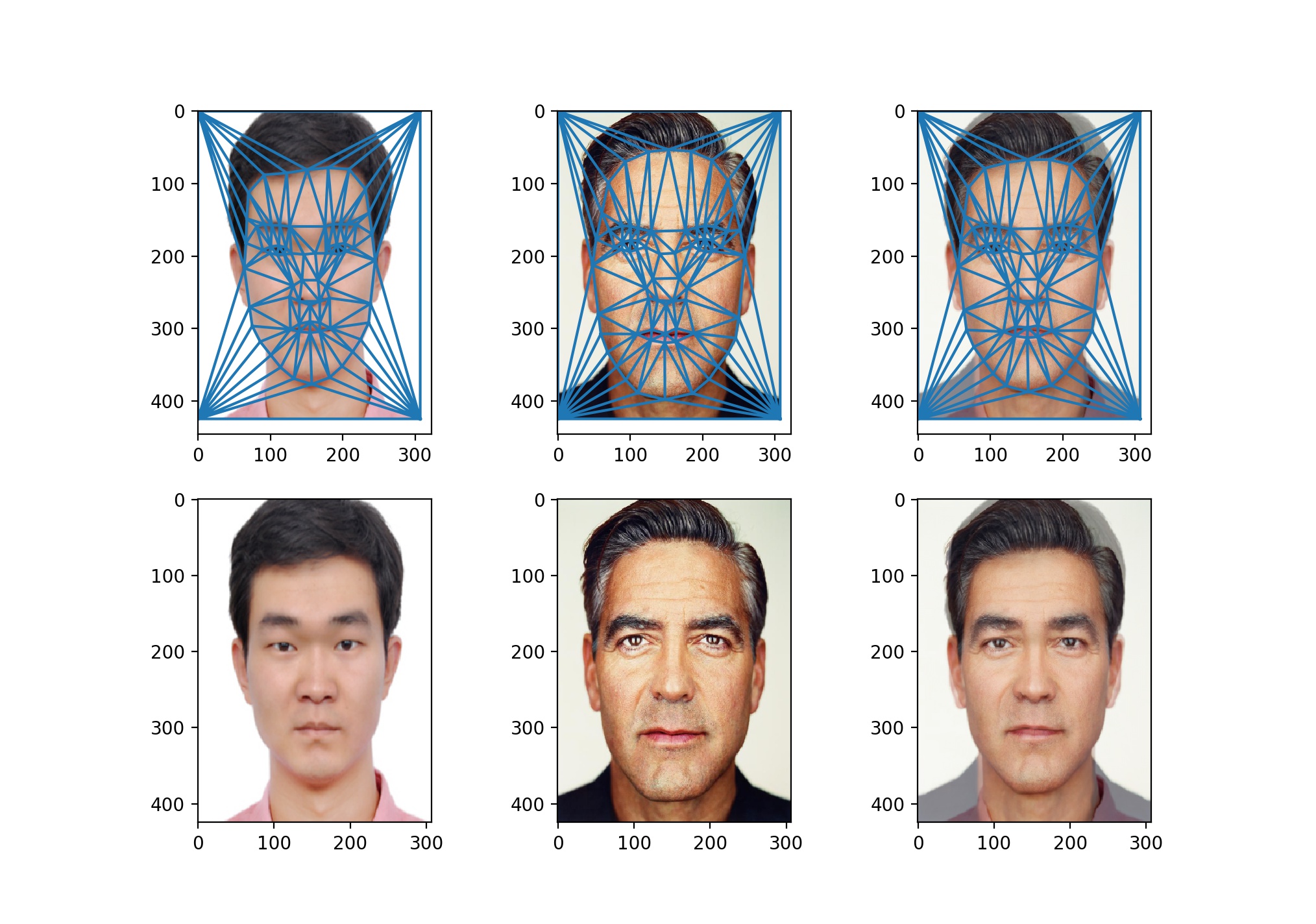

Warping images - the mid-way face

With the correspondences defined, we can compute the "average" shape of the two faces by simply taking the average of each correspondence pair. The Delaunay triangulation is then computed on these "average" shape points. The next step is to warp both my face and George's face from their original triangles to the triangles defined by the "average" points. Finally, we take the average of the two warped images to create an "average" face.
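The geometric part of the steps above can be sketched as follows. This is a minimal illustration and not the code used for the results shown here: the helper names `midway_shape` and `triangle_affine` and the toy points are assumptions, and only the per-triangle affine matrices are computed (the pixel resampling is left out).

```python
import numpy as np
from scipy.spatial import Delaunay

def midway_shape(pts_a, pts_b):
    """Average two (N, 2) arrays of corresponding points."""
    return 0.5 * (np.asarray(pts_a, float) + np.asarray(pts_b, float))

def triangle_affine(src_tri, dst_tri):
    """Return the 3x3 matrix T with T @ [x, y, 1] mapping each source
    triangle vertex onto the matching destination vertex."""
    # Columns are the three vertices in homogeneous coordinates.
    src = np.vstack([np.asarray(src_tri, float).T, np.ones(3)])
    dst = np.vstack([np.asarray(dst_tri, float).T, np.ones(3)])
    return dst @ np.linalg.inv(src)

# Toy correspondences (hypothetical): four corners plus one interior point.
pts_a = np.array([[0, 0], [10, 0], [0, 10], [10, 10], [4, 5]], float)
pts_b = np.array([[0, 0], [10, 0], [0, 10], [10, 10], [6, 5]], float)

mid = midway_shape(pts_a, pts_b)
tri = Delaunay(mid)  # triangulate the averaged points, not either face

# One affine map per triangle, from face A's triangle to the mid-way triangle.
affines = [triangle_affine(pts_a[s], mid[s]) for s in tri.simplices]
```

Triangulating the averaged points and reusing the same vertex indices for both faces keeps the triangles in one-to-one correspondence. In practice the inverse of each matrix is typically used to look up, for every output pixel, where to sample in the source image.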

Animation

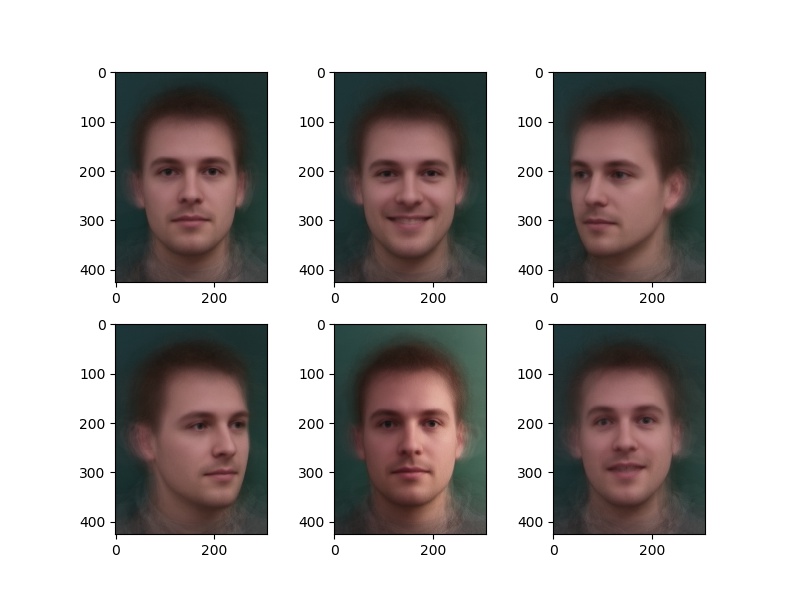

With this simple algorithm working, we define a warping function that takes two fractions so that it creates weighted average faces. We combine the resulting frames into a GIF that morphs between my face and George's face.

As we can see, the morphing of the center of the face is pretty good! However, the hair, the neck, and the clothes are not morphed as well. This is due to insufficient correspondences in these regions.
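A hedged sketch of the two-fraction weighting described above: one fraction interpolates the shape and the other blends the colors. The names `morph_shape`, `cross_dissolve`, `warp_frac`, and `dissolve_frac` are assumptions for illustration, and the toy images stand in for faces that have already been warped into the intermediate shape.

```python
import numpy as np

def morph_shape(pts_a, pts_b, warp_frac):
    """Interpolate correspondence points: 0 gives shape A, 1 gives shape B."""
    return (1.0 - warp_frac) * pts_a + warp_frac * pts_b

def cross_dissolve(img_a, img_b, dissolve_frac):
    """Blend two images that were already warped into the same shape."""
    return (1.0 - dissolve_frac) * img_a + dissolve_frac * img_b

# Toy data (hypothetical): two landmarks and two flat-colored images.
pts_a = np.array([[10.0, 20.0], [30.0, 40.0]])
pts_b = np.array([[20.0, 20.0], [30.0, 60.0]])
img_a = np.zeros((4, 4, 3))
img_b = np.ones((4, 4, 3))

frames = []
for t in np.linspace(0.0, 1.0, 5):
    shape_t = morph_shape(pts_a, pts_b, t)
    # A full pipeline would warp img_a and img_b into shape_t before blending.
    frames.append((shape_t, cross_dissolve(img_a, img_b, t)))
```

Using the same value for both fractions in every frame gives a morph where shape and color change together; the two can also be varied independently.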

Warping between population faces

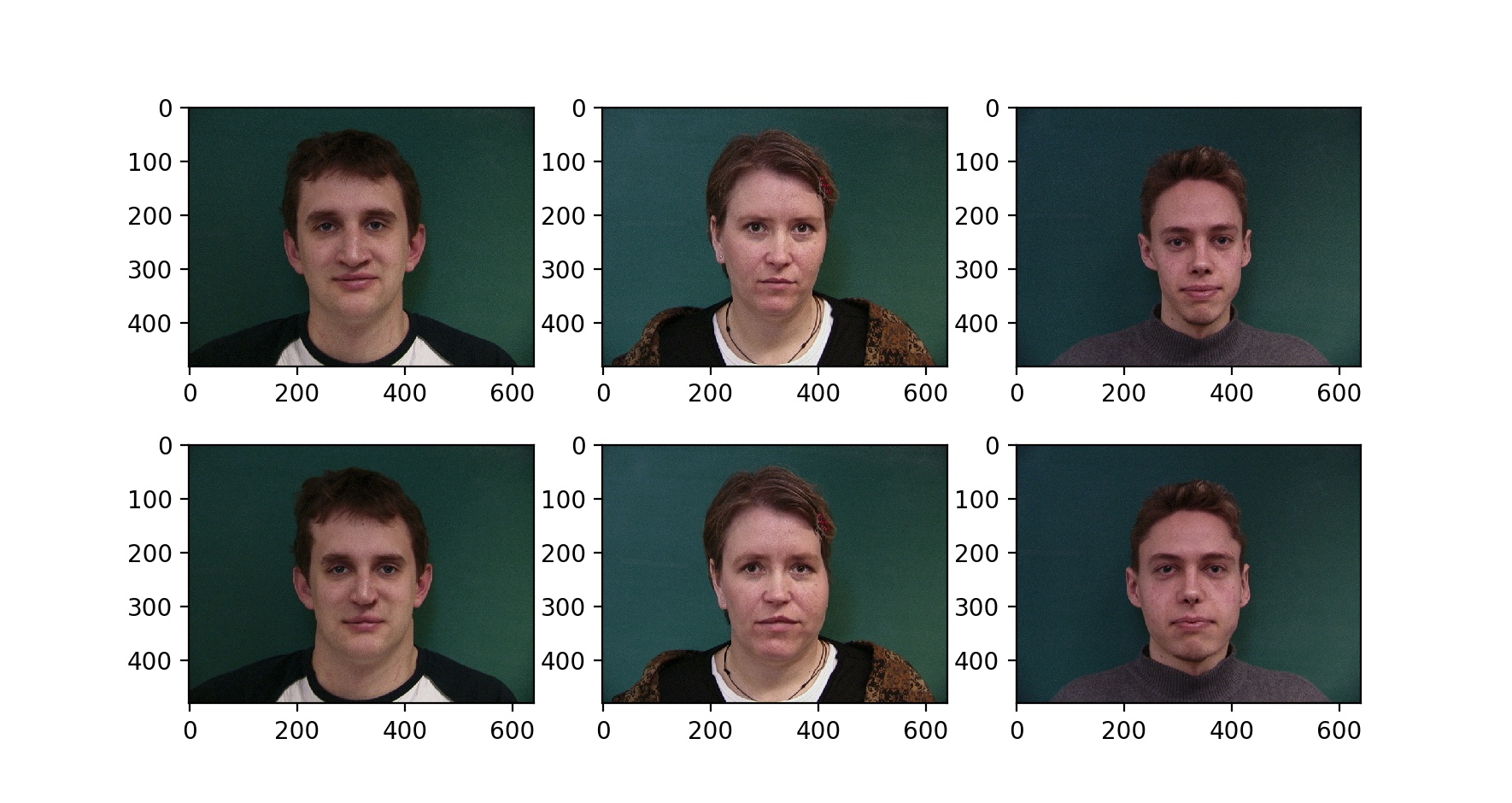

The "mean" face

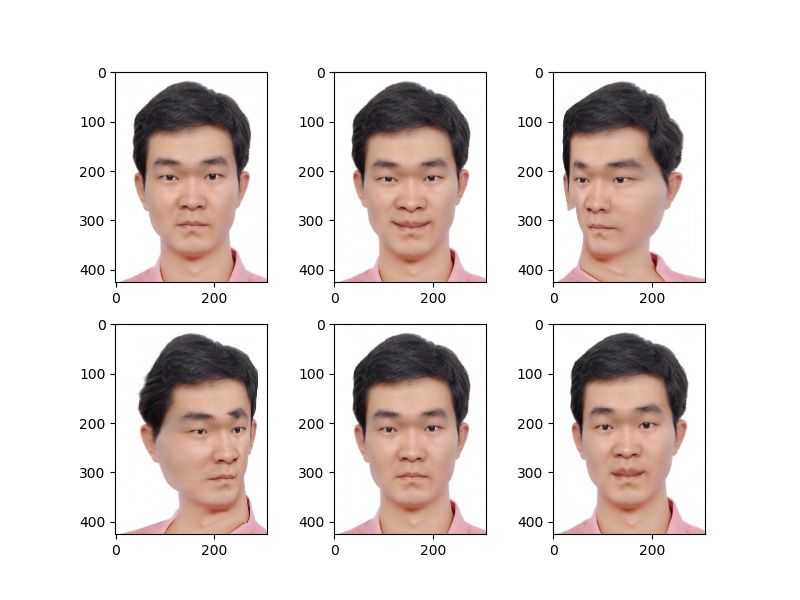

We used the IMM face dataset to compute a "mean" face of a population. The procedure is quite simple. First, we average the annotation points of all faces to get an "average" face shape. Then we warp each face into this "average" face shape using the same triangulation-based warp as above. We show here a few faces warped into the "mean" face shape. The first row shows the original faces, and the second row shows the warped faces.

It is interesting to note that women's faces change more than men's faces. This is because the IMM face dataset is dominated by male faces, so the "mean" shape is closer to a male face. Also note that the last face is compressed vertically, which makes it shorter (a feature of the "mean" face). Finally, we compute the "mean" image by averaging all warped faces.
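The two averaging steps of this procedure can be sketched with NumPy as below. The array sizes and the random data are placeholders rather than the real IMM annotations, and the warp of each face into the mean shape is assumed to have been done already.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins: 6 faces, 10 landmarks each, 8x8 RGB images.
shapes = rng.uniform(0.0, 100.0, size=(6, 10, 2))
warped_faces = rng.uniform(0.0, 1.0, size=(6, 8, 8, 3))  # already warped

# Step 1: the "mean" shape is the per-landmark average over all faces.
mean_shape = shapes.mean(axis=0)

# Step 2 (not shown): warp every face into mean_shape.

# Step 3: the "mean" face is the per-pixel average of the warped faces.
mean_face = warped_faces.mean(axis=0)
```

Averaging the images only after they share one shape is what keeps the eyes, nose, and mouth aligned, so the mean stays reasonably sharp.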

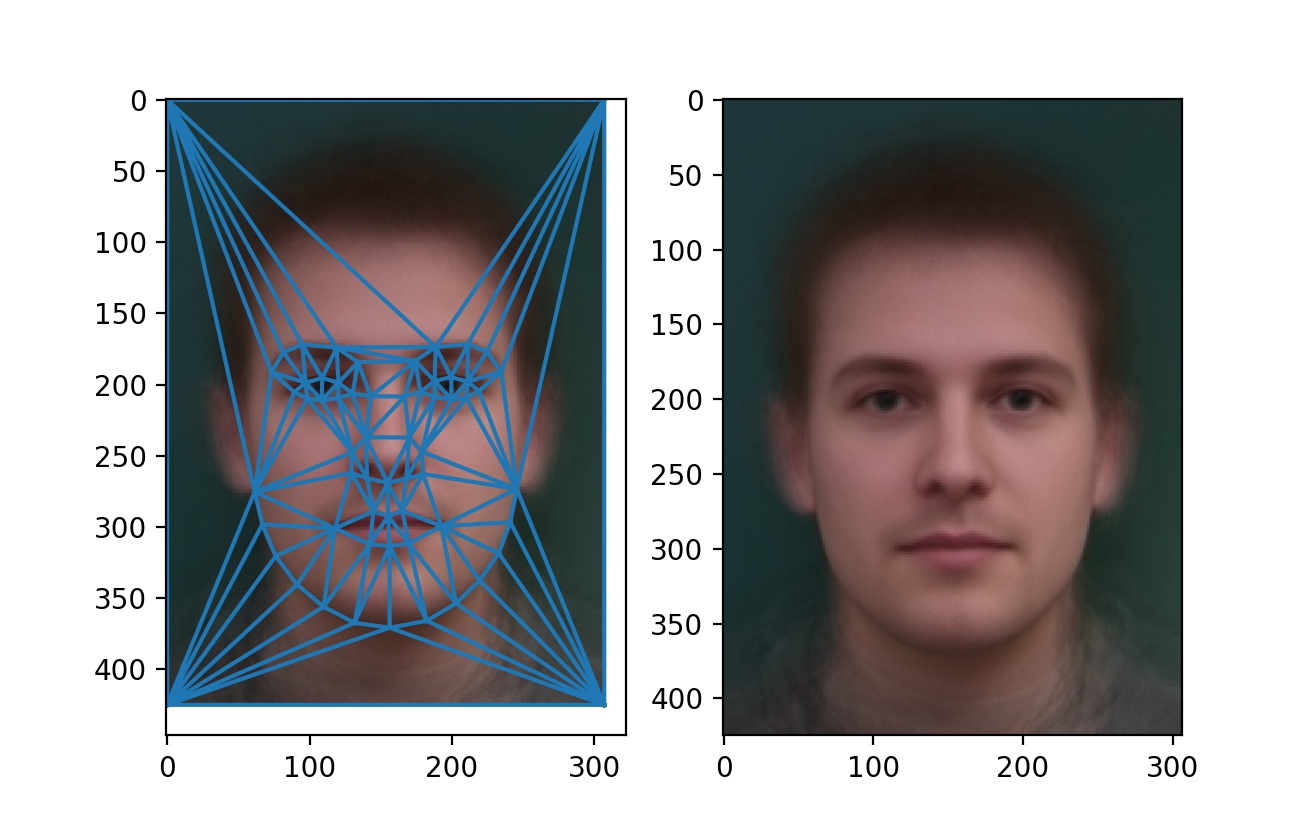

Morphing between my face and the "mean" face

I first tried to warp my face into the "mean" face shape. The result is shown below. The warped face looks very strange. First, my eyebrows are twisted into the shape of the "mean" face's eyebrows, while my own eyebrows are flatter. Second, my eyes are tilted differently from those of the "mean" face, which makes the warped eyes look rather unnatural. Finally, I have a small mouth while the "mean" face has a wide mouth, which also makes the warped mouth look unnatural.

The exact opposite happens when I warp the "mean" face into my face shape, as shown below. Also note that the warped "mean" face clearly has a triangle mismatch at the forehead. This is due to insufficient correspondence points at the forehead, so the triangles on either side of the line get stretched differently.

Caricatures

The above images are computed according to $F = A\times (1-\alpha)+B\times \alpha$ with $\alpha \in [0, 1]$. When $\alpha$ is greater than 1, we are extrapolating. The following figure shows the extrapolated caricature made using my face and the "mean" face. For this caricature, $\alpha=1.4$.

One interesting artifact is that the caricature is frowning. This is because my face is less happy than the "mean" face. By the extrapolation formula, when $\alpha=1.4$, $F=A\times (1-\alpha)+B\times \alpha = 1.4B - 0.4A$. When $B$ is less happy than $A$, we are adding the less happy face with a weight above 1 and subtracting the happier face. As a result, the final face is even less happy than $B$.
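A small numeric check of the extrapolation formula above, using made-up landmark values: here the second coordinate of `B` is lower than that of `A`, and the extrapolated result ends up lower still. The function name `extrapolate` and the numbers are illustrative assumptions.

```python
import numpy as np

def extrapolate(A, B, alpha):
    """F = A * (1 - alpha) + B * alpha; alpha > 1 goes past B, away from A."""
    return (1.0 - alpha) * A + alpha * B

A = np.array([0.0, 2.0])  # hypothetical feature of the happier face
B = np.array([1.0, 1.0])  # hypothetical feature of the less happy face

F = extrapolate(A, B, 1.4)  # same as 1.4 * B - 0.4 * A
```

With these numbers, `F` is approximately `[1.4, 0.6]`: each coordinate moves past `B` by 0.4 times the difference `B - A`, which is why a feature that is already weaker in `B` than in `A` gets exaggerated.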

Bells and whistles - cat morphing MV

Now, instead of human faces, we morph cat faces! I downloaded a dataset of annotated cat faces. The cat images need some pre-processing to rotate, translate, and center the faces, but that is straightforward. Then we morph between consecutive cat faces and join the morphs into a music video. See below.
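One way the rotate-translate-center pre-processing mentioned above could be done is sketched here. It assumes the annotations include the two eye positions, which may not match the actual dataset, and it only transforms landmark coordinates; the helper name `eye_alignment` is hypothetical.

```python
import numpy as np

def eye_alignment(left_eye, right_eye, center):
    """Return (R, t) such that p -> R @ p + t makes the eye line horizontal
    and moves the midpoint between the eyes to `center`."""
    left_eye = np.asarray(left_eye, float)
    right_eye = np.asarray(right_eye, float)
    dx, dy = right_eye - left_eye
    angle = np.arctan2(dy, dx)
    c, s = np.cos(angle), np.sin(angle)
    R = np.array([[c, s], [-s, c]])  # rotation by -angle
    t = np.asarray(center, float) - R @ ((left_eye + right_eye) / 2.0)
    return R, t

# Hypothetical eye annotations on a tilted face, centered at (50, 50).
eyes = np.array([[2.0, 2.0], [4.0, 4.0]])
R, t = eye_alignment(eyes[0], eyes[1], center=(50.0, 50.0))
aligned = (R @ eyes.T).T + t
```

The same `R` and `t` would be applied to all landmarks of a face, and to the image itself through any affine image-warping routine, so that every cat enters the morph in a comparable pose.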

Bells and whistles - changing my expression

To change the expression of my face $M$, consider a serious mean face $F_1$ and a smiling mean face $F_2$. Ideally, $M' = M + \Delta F = M + (F_2-F_1)$ will create a smiling version of my face. To test this, I used the IMM dataset (males only) and computed the "mean" face for each expression.

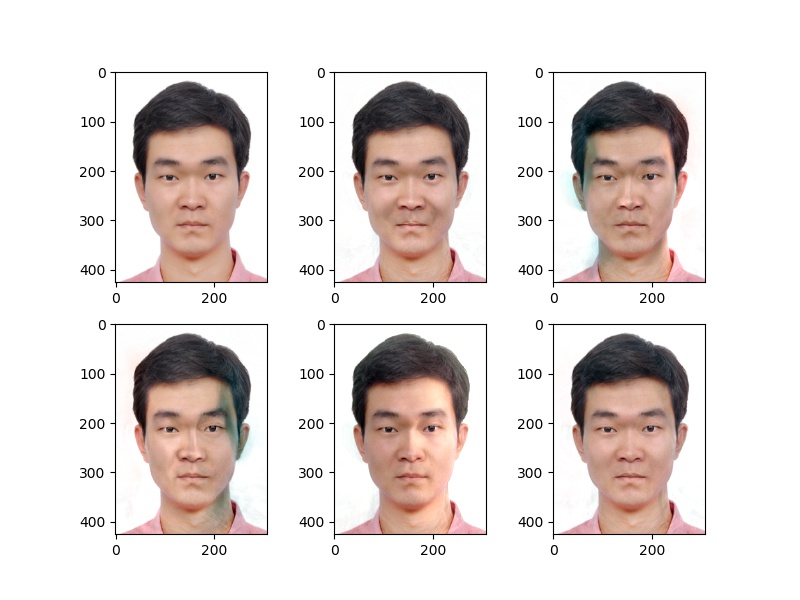

Next, I subtract the serious face (first image) from the other images (from the second to the sixth). Note that $M, F$ can either be the shape (points) of the face, or the pixels (texture). I first test using only the shape. Then these delta shape vectors are added to the shape vectors of my face. Finally I just warp my face into these target shape vectors.
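The shape-only version of this step is a single vector addition, sketched below with made-up landmarks. The function name `transfer_expression` and the numbers are assumptions for illustration.

```python
import numpy as np

def transfer_expression(my_shape, serious_mean, expression_mean):
    """M' = M + (F2 - F1), applied to (N, 2) landmark arrays."""
    return my_shape + (expression_mean - serious_mean)

# Hypothetical mouth-corner landmarks (x, y), with y increasing downward.
my_shape = np.array([[40.0, 80.0], [60.0, 80.0]])
serious_mean = np.array([[38.0, 82.0], [62.0, 82.0]])
smiling_mean = np.array([[36.0, 80.0], [64.0, 80.0]])

target_shape = transfer_expression(my_shape, serious_mean, smiling_mean)
```

My face image is then warped from `my_shape` to `target_shape`. Because only the difference between the two mean shapes is added, the individual proportions of my face are kept.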

Not too bad! The second face is quite good. However, the faces looking left and right have some terrible texture mismatches at the left and right boundaries. One possible solution is to add the "delta textures" to my face, which are the delta images created by subtracting the serious mean face from the other mean faces. I demonstrate the effect of adding these delta images below.

Again, the second (smiling) face is modified very nicely (look at the smile lines around my mouth). The turning faces are not great at all. Indeed, we are missing information here, and this simple approach cannot create new information out of thin air. However, the lighting in the fifth image is changed convincingly.
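Adding a delta texture can be sketched in the same way as adding a delta shape. This sketch assumes float images in $[0, 1]$ that are already aligned to a common shape, and the clipping to the valid range is an added assumption, since the handling of out-of-range values is not described here. The function name `add_delta_texture` is hypothetical.

```python
import numpy as np

def add_delta_texture(my_img, serious_mean_img, expression_mean_img):
    """M' = M + (F2 - F1) on pixel values, clipped back into [0, 1]."""
    delta = expression_mean_img - serious_mean_img
    return np.clip(my_img + delta, 0.0, 1.0)

# Three hypothetical gray pixels covering the in-range and clipped cases.
my_img = np.array([0.5, 0.9, 0.1])
serious_mean_img = np.array([0.4, 0.2, 0.5])
expression_mean_img = np.array([0.5, 0.5, 0.2])

result = add_delta_texture(my_img, serious_mean_img, expression_mean_img)
```

The delta can be negative, so it has to be kept in a signed floating-point type; computing it directly on unsigned 8-bit images would wrap around.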

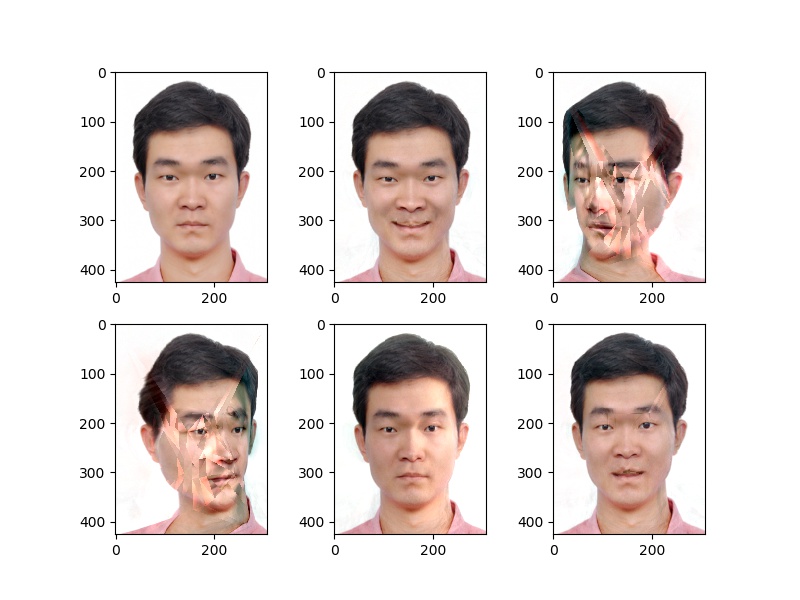

Finally, let's see what happens when we add both the delta shape vector and the delta images to my face.

In general, half of the modifications (second, fifth, sixth) are okay, but the turning faces are again terribly messed up by the algorithm.