Overview

This project uses deep convolutional neural networks, built with PyTorch and run on Google Colab, to classify images from the Fashion-MNIST dataset and to perform semantic segmentation on images from the Mini Facade dataset.

Part 1: Image Classification

In this part, images of clothing are categorized into 10 classes. The FashionMNIST dataset provides 60,000 training images and 10,000 test images.

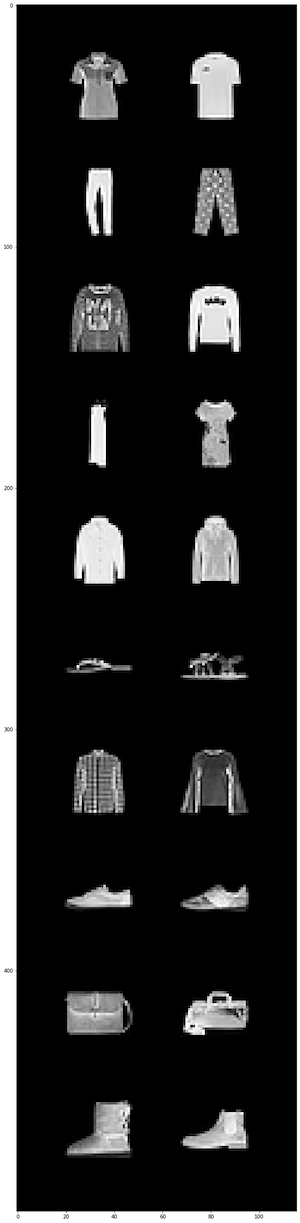

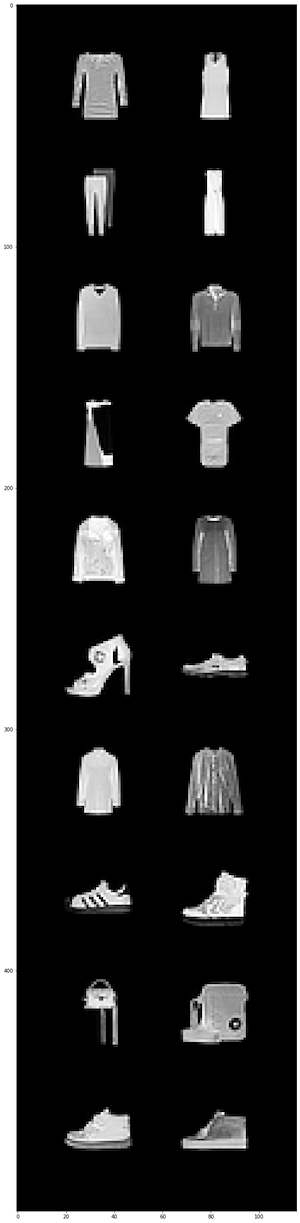

Sampling from the FashionMNIST Dataset

classes = { 0:'T-shirt/top', 1:'Trouser', 2:'Pullover', 3:'Dress', 4:'Coat', 5:'Sandal', 6:'Shirt', 7:'Sneaker', 8:'Bag', 9:'Ankle boot' }

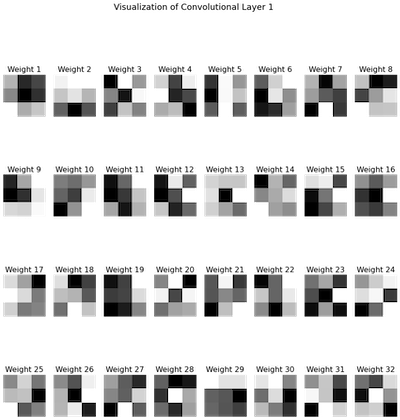

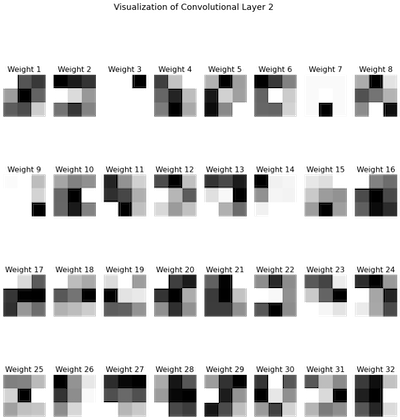

The network consists of 2 convolutional layers with 32 channels each. Each convolutional layer is followed by a ReLU nonlinearity and then a max pool. These are followed by 2 fully connected layers, with a ReLU after the first fully connected layer.
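The architecture described above can be sketched in PyTorch as follows. This is a minimal sketch, not the report's actual code: the class name `FashionCNN`, the 3x3 kernels with padding 1, the 2x2 max pools, and the hidden width of 128 are all assumptions, since the text only fixes the layer order and the 32 channels.

```python
import torch
import torch.nn as nn


class FashionCNN(nn.Module):
    """Two conv layers (32 channels each), each with ReLU + max pool, then two FC layers."""

    def __init__(self, num_classes: int = 10, hidden: int = 128):
        super().__init__()
        # Kernel size, padding, and pool size are assumed; the report does not state them.
        self.features = nn.Sequential(
            nn.Conv2d(1, 32, kernel_size=3, padding=1),   # 1x28x28 -> 32x28x28
            nn.ReLU(),
            nn.MaxPool2d(2),                              # -> 32x14x14
            nn.Conv2d(32, 32, kernel_size=3, padding=1),  # -> 32x14x14
            nn.ReLU(),
            nn.MaxPool2d(2),                              # -> 32x7x7
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(32 * 7 * 7, hidden),  # hidden width is an assumed value
            nn.ReLU(),                      # ReLU only after the first FC layer
            nn.Linear(hidden, num_classes),
        )

    def forward(self, x):
        return self.classifier(self.features(x))


model = FashionCNN()
logits = model(torch.randn(4, 1, 28, 28))  # a batch of 4 fake grayscale images
print(logits.shape)
```

With these assumed settings, each 28x28 image is reduced to a 32x7x7 feature map before the fully connected layers, and the network returns one score per class.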

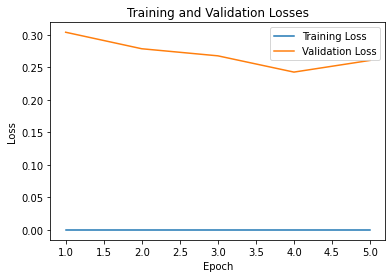

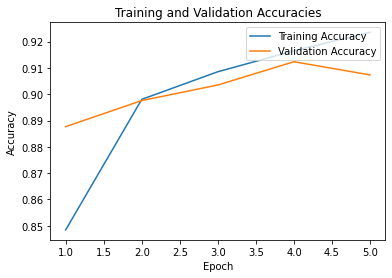

Training the CNN

The CNN was trained for five epochs with the Adam optimizer, using a learning rate of 1e-3 and a weight decay of 1e-5. Cross entropy loss was used as the prediction loss.
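The optimizer and loss settings above might be wired up roughly as shown here. This is a hedged sketch: the linear model is a hypothetical stand-in for the CNN, the batch is random data rather than FashionMNIST, and the helper name `train_step` is illustrative.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in model; the CNN from the report would be used in its place.
model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))

# Settings taken from the text: cross entropy loss, Adam, lr 1e-3, weight decay 1e-5.
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=1e-5)

# Random tensors standing in for one batch of images and labels.
images = torch.randn(64, 1, 28, 28)
labels = torch.randint(0, 10, (64,))


def train_step(images, labels):
    """Run one optimization step and return the batch loss as a float."""
    model.train()
    optimizer.zero_grad()
    loss = criterion(model(images), labels)
    loss.backward()
    optimizer.step()
    return loss.item()


# Repeating the step on the same batch should drive the loss down.
losses = [train_step(images, labels) for _ in range(20)]
print(f"first loss {losses[0]:.3f}, last loss {losses[-1]:.3f}")
```

In a full run, the inner call would sit inside a loop over the training `DataLoader`, repeated for five epochs.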

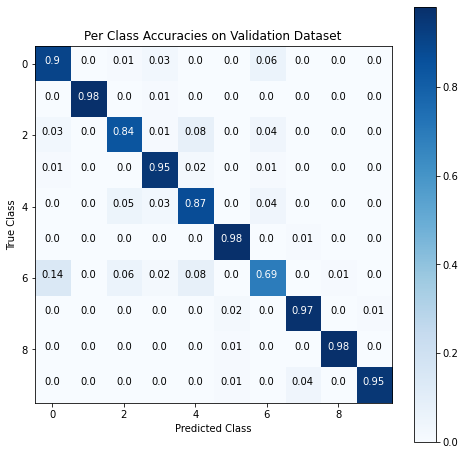

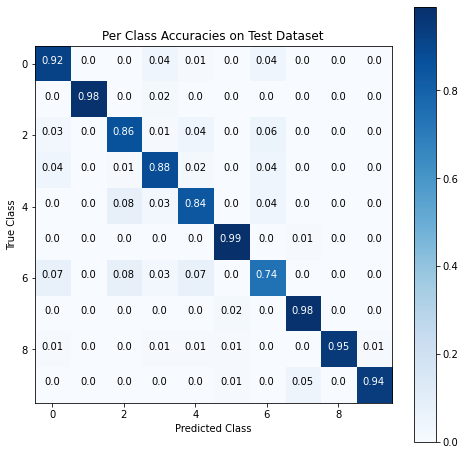

Results

The CNN performed worst on class 6, the shirt class, with an accuracy of 74%.
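A per-class accuracy like the one quoted above can be computed by counting correct predictions separately for each true label. The sketch below uses a made-up toy list of labels and predictions, not the report's results, and the function name `per_class_accuracy` is an assumption.

```python
from collections import Counter


def per_class_accuracy(labels, predictions, num_classes):
    """Return {class index: accuracy} for every class that appears in labels."""
    correct = Counter()
    total = Counter()
    for y, p in zip(labels, predictions):
        total[y] += 1
        if y == p:
            correct[y] += 1
    return {c: correct[c] / total[c] for c in range(num_classes) if total[c] > 0}


# Toy data for illustration only: one shirt (class 6) is mistaken for a T-shirt (class 0).
labels = [0, 0, 6, 6, 6, 6, 9]
predictions = [0, 0, 6, 0, 6, 6, 9]

acc = per_class_accuracy(labels, predictions, num_classes=10)
worst = min(acc, key=acc.get)  # class with the lowest accuracy
print(acc, worst)
```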

Examples of Correctly Labeled Images

Examples of Incorrectly Labeled Images

Visualizing Learned Filters

Part 2: Semantic Segmentation

In this part, semantic segmentation is performed on images of buildings from around the world, taken from the Mini Facade dataset. Every pixel of each image is labeled with one of five classes: balcony, window, pillar, facade, and others.

CNN Architecture

Hyperparameters

The model was trained with cross entropy loss and the Adam optimizer, using a learning rate of 0.0009841 and a weight decay of 0.000005803.
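For segmentation, cross entropy is applied per pixel: the network outputs one score per class at every pixel, and the target is an integer class map. The sketch below illustrates this with the hyperparameters quoted above. The tiny two-layer network, the 64x64 image size, and the random inputs are assumptions for illustration only and are not the architecture used in this project.

```python
import torch
import torch.nn as nn

NUM_CLASSES = 5  # balcony, window, pillar, facade, others

# Hypothetical tiny fully convolutional network, used only to show the tensor shapes.
net = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(16, NUM_CLASSES, kernel_size=1),  # one score per class per pixel
)

criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=0.0009841, weight_decay=0.000005803)

# Random stand-ins for a batch of RGB images and their per-pixel labels.
images = torch.randn(2, 3, 64, 64)
masks = torch.randint(0, NUM_CLASSES, (2, 64, 64))

logits = net(images)             # shape (2, 5, 64, 64)
loss = criterion(logits, masks)  # averaged over every pixel in the batch

optimizer.zero_grad()
loss.backward()
optimizer.step()

pred = logits.argmax(dim=1)      # shape (2, 64, 64): predicted class per pixel
print(logits.shape, pred.shape, loss.item())
```

`nn.CrossEntropyLoss` accepts logits of shape `(N, C, H, W)` with targets of shape `(N, H, W)`, so no reshaping is needed for the per-pixel case.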

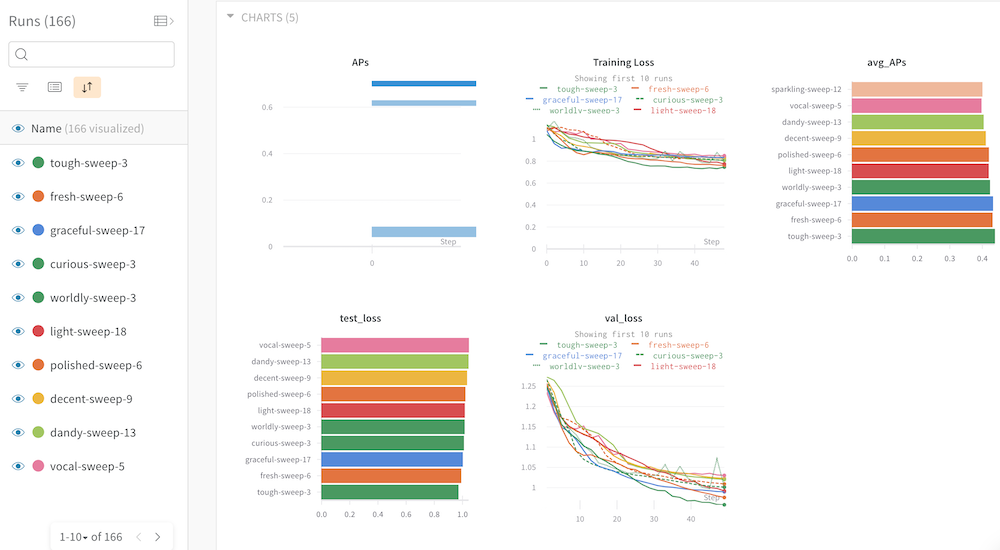

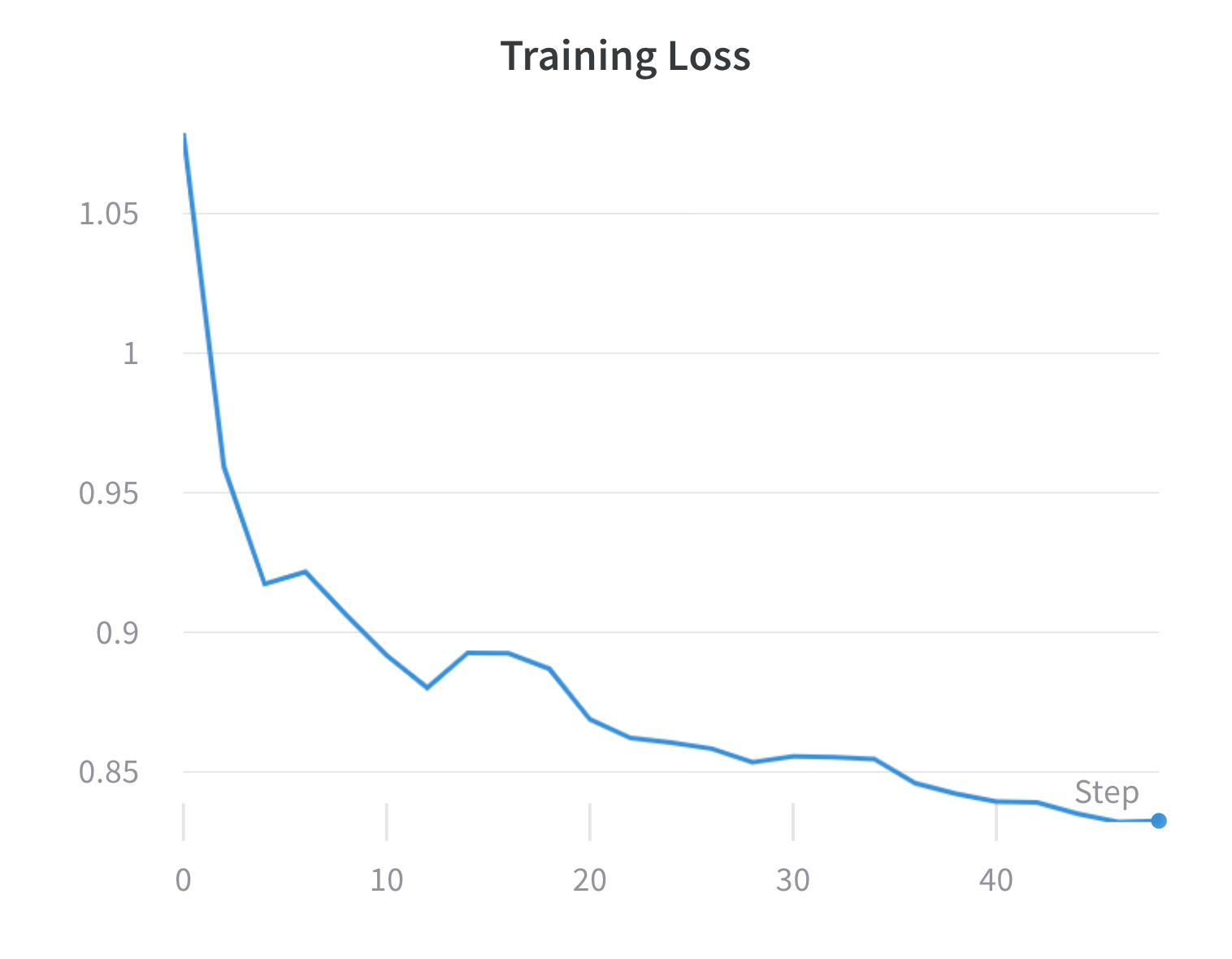

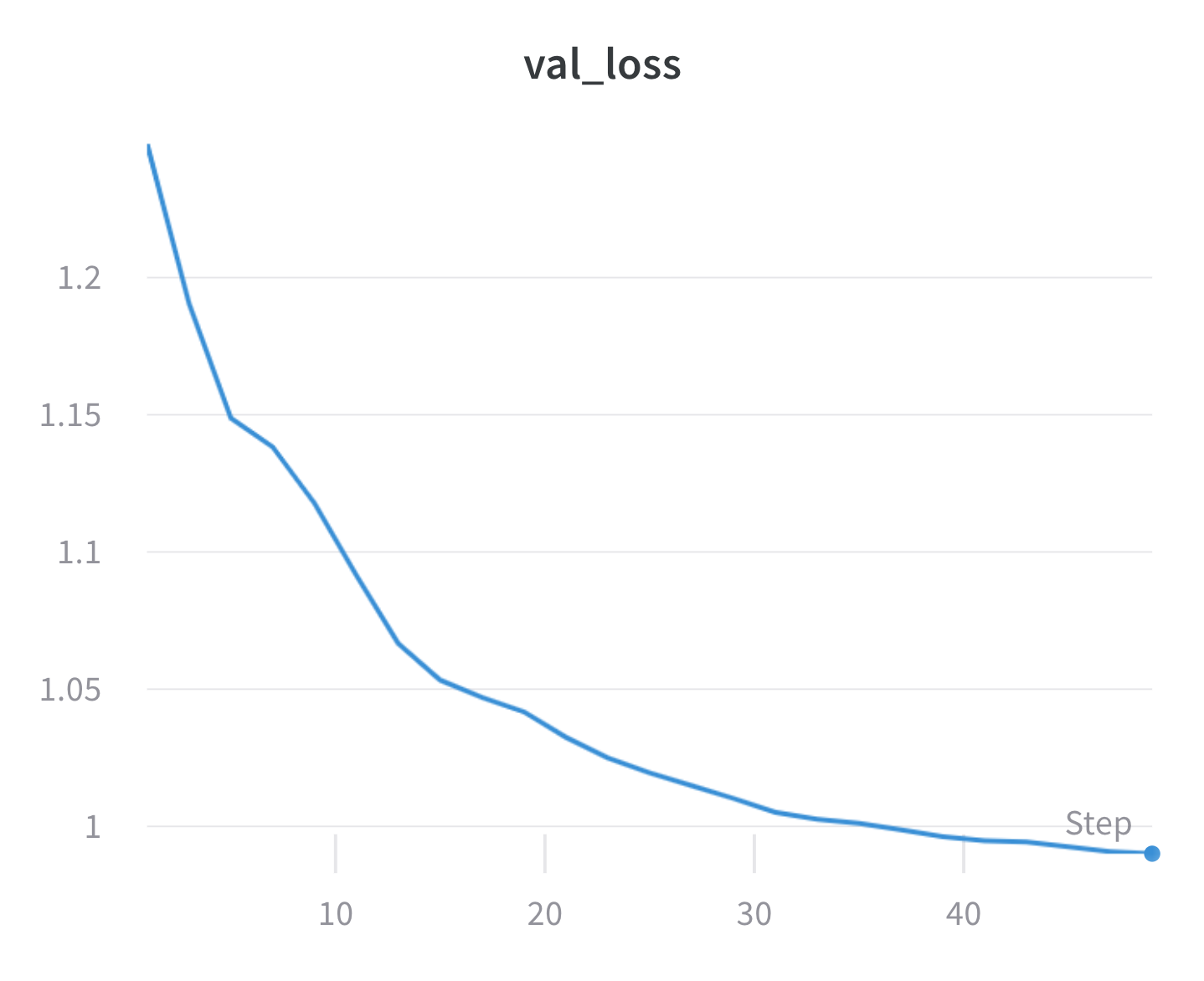

To find the learning rate and weight decay, I used Weights and Biases to sweep over possible hyperparameters. The chosen combination produced the lowest validation loss, 0.9900442345456762, and the highest average precision, 0.4318011398682467.
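A Weights and Biases sweep is driven by a configuration that names the search method, the metric to optimize, and the range for each hyperparameter. The dictionary below is a sketch of what such a configuration could look like. The search method, the metric name `val_loss`, and both ranges are assumptions, since the report does not give the sweep settings that were actually used.

```python
# Hypothetical sweep configuration; every value here is illustrative.
sweep_config = {
    "method": "random",  # assumed search strategy
    "metric": {"name": "val_loss", "goal": "minimize"},  # assumed metric name
    "parameters": {
        "lr": {
            "distribution": "log_uniform_values",  # sample on a log scale
            "min": 1e-5,
            "max": 1e-2,
        },
        "weight_decay": {
            "distribution": "log_uniform_values",
            "min": 1e-6,
            "max": 1e-3,
        },
    },
}

# With the wandb package installed and a training function that logs "val_loss",
# this dictionary would typically be passed to wandb.sweep(...) to create the
# sweep and then run with wandb.agent(...).
for name, spec in sweep_config["parameters"].items():
    print(name, spec["min"], spec["max"])
```

Sampling both hyperparameters on a log scale is a common choice because useful values span several orders of magnitude.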

All Runs: https://app.wandb.ai/qwerty321/proj4

Best Run: https://app.wandb.ai/qwerty321/proj4/runs/2la3f43f/overview?workspace=user-qwerty321

Training

lr = 0.0009841, weight decay = 5.803e-06

Start training

| Epoch | Loss | Elapsed time | Value printed after the epoch (unlabeled in the log) |
| --- | --- | --- | --- |
| 1 | 1.074 | 19.803 | 1.2475201530115945 |
| 2 | 1.099 | 19.730 | 1.184222888488036 |
| 3 | 1.104 | 19.824 | 1.1611246067089038 |
| 4 | 1.085 | 19.724 | 1.1474093188951304 |
| 5 | 1.039 | 19.729 | 1.1315813195574416 |
| 6 | 0.995 | 19.753 | 1.1094945272901555 |
| 7 | 0.929 | 19.822 | 1.0924140545693073 |
| 8 | 0.913 | 19.731 | 1.0763650487412464 |
| 9 | 0.896 | 19.782 | 1.061426763678645 |
| 10 | 0.871 | 19.751 | 1.0424758926197724 |
| 11 | 0.814 | 19.776 | 1.0196489950457772 |
| 12 | 0.808 | 19.840 | 1.021347157575272 |
| 13 | 0.791 | 19.782 | 1.0112490899615236 |
| 14 | 0.778 | 19.806 | 1.0023106788540934 |
| 15 | 0.804 | 19.724 | 1.0026988318333259 |
| 16 | 0.788 | 19.740 | 0.9974784284502596 |
| 17 | 0.756 | 19.792 | 0.9924349172429724 |
| 18 | 0.773 | 19.760 | 0.9855036938583458 |
| 19 | 0.785 | 19.821 | 0.9867260564159561 |
| 20 | 0.763 | 19.797 | 0.9813663579605438 |
| 21 | 0.767 | 19.840 | 0.9819232621690729 |
| 22 | 0.763 | 19.755 | 0.9804798403939048 |
| 23 | 0.764 | 19.723 | 0.9735997528820247 |
| 24 | 0.756 | 19.804 | 0.9686579458661132 |
| 25 | 0.756 | 19.747 | 0.9665454098811517 |
| 26 | 0.757 | 19.813 | 0.9643744079621284 |
| 27 | 0.753 | 19.764 | 0.9617108489785876 |
| 28 | 0.754 | 19.786 | 0.9610359177484618 |
| 29 | 0.747 | 19.750 | 0.9577845488930796 |
| 30 | 0.749 | 19.783 | 0.957324892937482 |
| 31 | 0.757 | 19.783 | 0.9622196610812302 |
| 32 | 0.746 | 19.735 | 0.9562080554909759 |
| 33 | 0.750 | 19.747 | 0.9616343205446726 |
| 34 | 0.758 | 19.708 | 0.9653705122706654 |
| 35 | 0.760 | 19.849 | 0.9668513670727447 |
| 36 | 0.745 | 19.862 | 0.9569179949524639 |
| 37 | 0.742 | 19.775 | 0.9566913279858265 |
| 38 | 0.736 | 19.724 | 0.9529203235448062 |
| 39 | 0.751 | 19.768 | 0.9600898954239521 |
| 40 | 0.752 | 19.778 | 0.9593775049670712 |
| 41 | 0.753 | 19.762 | 0.9571986391649141 |
| 42 | 0.751 | 19.794 | 0.9560067801030128 |
| 43 | 0.732 | 19.721 | 0.9498448755059924 |
| 44 | 0.738 | 19.815 | 0.949612496645896 |
| 45 | 0.740 | 19.723 | 0.9521561438565725 |

Finished Training, Testing on test set

0.9656797733746076

Generating Unlabeled Result

AP = 0.6303548205778786

AP = 0.7219391347969041

AP = 0.048428428434867055

AP = 0.7036956574739648

AP = 0.10522684861314587

Average AP on test set: 0.442
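The reported average AP is the unweighted mean of the five per-class AP values in the log, which the short check below reproduces. The log does not say which class each AP line belongs to, so the sketch refers to them only by their position in the output. The 0.2 cutoff used to flag weak classes is an arbitrary illustrative threshold.

```python
from statistics import mean

# Per-class AP values copied from the training log, in the order they were printed.
ap_per_class = [
    0.6303548205778786,
    0.7219391347969041,
    0.048428428434867055,
    0.7036956574739648,
    0.10522684861314587,
]

average_ap = mean(ap_per_class)
print(f"Average AP on test set: {average_ap:.3f}")  # prints 0.442

# Positions of the classes whose AP falls below an illustrative cutoff of 0.2.
weak = [i for i, ap in enumerate(ap_per_class) if ap < 0.2]
print(weak)  # prints [2, 4]
```

Three of the five values are above 0.6 while the other two are near zero, so the average hides a large gap between classes.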

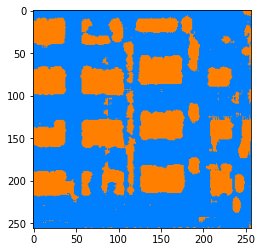

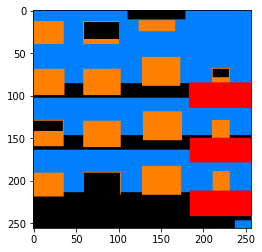

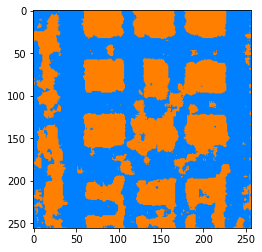

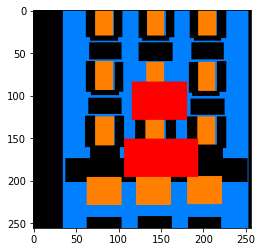

Results

The model labels the facade and window classes correctly but fails to label the remaining classes (others, pillar, and balcony).