Project 4 - Classification and Segmentation

Project overview

The first part of the project was training a neural net to classify images into 10 different categories. This architecture used convolutional layers followed by fully connected layers. The second part was training a neural net to label each pixel of a building facade image with a class (i.e., window, pillar, etc.). This architecture used a stack of convolutional layers with nonlinearities.

Part 1: Image Classification

CNN architecture

The model consisted of 2 convolutional layers followed by 2 fully connected layers. Each convolutional layer consisted of a 48-channel 2D convolution with a 3x3 filter, followed by a softmax, then a max pool. The output of the second convolutional layer was then passed through a fully connected layer, a ReLU, and a second fully connected layer. The final output is a 10-vector of class scores, and the class with the highest activation is the predicted label.
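The architecture above can be sketched in PyTorch as follows. This is a minimal sketch under stated assumptions, not the original code: the 1x28x28 grayscale input (the class names "Bag" and "Shirt" suggest Fashion-MNIST), the absence of padding, the 2x2 pooling window, and the hidden width of 128 are all assumptions, and the class name `FashionCNN` is hypothetical. Following the description, each convolution is followed by a softmax across channels (`nn.Softmax(dim=1)`).

```python
import torch
import torch.nn as nn


class FashionCNN(nn.Module):
    """Sketch: 2 x (conv -> softmax -> maxpool), then FC -> ReLU -> FC.

    Assumed (not stated in the write-up): 1x28x28 inputs, no padding,
    2x2 max pooling, and a hypothetical hidden width of 128.
    """

    def __init__(self, in_channels: int = 1, num_classes: int = 10, hidden: int = 128):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(in_channels, 48, kernel_size=3),  # 28x28 -> 26x26
            nn.Softmax(dim=1),                          # softmax over channels, as described
            nn.MaxPool2d(2),                            # 26x26 -> 13x13
            nn.Conv2d(48, 48, kernel_size=3),           # 13x13 -> 11x11
            nn.Softmax(dim=1),
            nn.MaxPool2d(2),                            # 11x11 -> 5x5 (floor)
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(48 * 5 * 5, hidden),
            nn.ReLU(),
            nn.Linear(hidden, num_classes),  # one score per class
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


model = FashionCNN()
logits = model(torch.zeros(4, 1, 28, 28))
predicted = logits.argmax(dim=1)  # index of the class with the highest activation
print(logits.shape)  # torch.Size([4, 10])
```

If the inputs were padded (`padding=1`), the flattened size would change to `48 * 7 * 7`, so the first `nn.Linear` must match whatever spatial size the convolutions actually produce.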

Loss function, optimizer, and training

I used torch.optim.Adam as the optimizer, with a learning rate of 0.01 and weight decay of 0.00001. I used nn.CrossEntropyLoss as the loss function. The net was trained for 5 epochs.
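A minimal training loop with these settings might look like the sketch below. The function name `train`, the batch size of 16, and the random stand-in data and toy model in the usage lines are assumptions for illustration only; the optimizer, learning rate, weight decay, loss, and epoch count come from the text.

```python
from typing import List

import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def train(model: nn.Module, loader: DataLoader, epochs: int = 5) -> List[float]:
    """Train with the Part 1 settings; return the mean training loss of each epoch."""
    criterion = nn.CrossEntropyLoss()  # takes raw logits and integer class labels
    optimizer = torch.optim.Adam(model.parameters(), lr=0.01, weight_decay=0.00001)
    history = []
    for _ in range(epochs):
        model.train()
        total, count = 0.0, 0
        for images, labels in loader:
            optimizer.zero_grad()
            loss = criterion(model(images), labels)
            loss.backward()
            optimizer.step()
            total += loss.item() * images.size(0)  # weight by batch size
            count += images.size(0)
        history.append(total / count)
    return history


# Usage on random stand-in data (not the real dataset) with a toy model.
torch.manual_seed(0)
data = TensorDataset(torch.randn(64, 1, 28, 28), torch.randint(0, 10, (64,)))
toy_model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))
losses = train(toy_model, DataLoader(data, batch_size=16, shuffle=True))
print(len(losses))  # 5
```

Because `nn.CrossEntropyLoss` applies log-softmax internally, the model's final layer should output unnormalized scores rather than probabilities.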

Part 1 Results

Accuracies

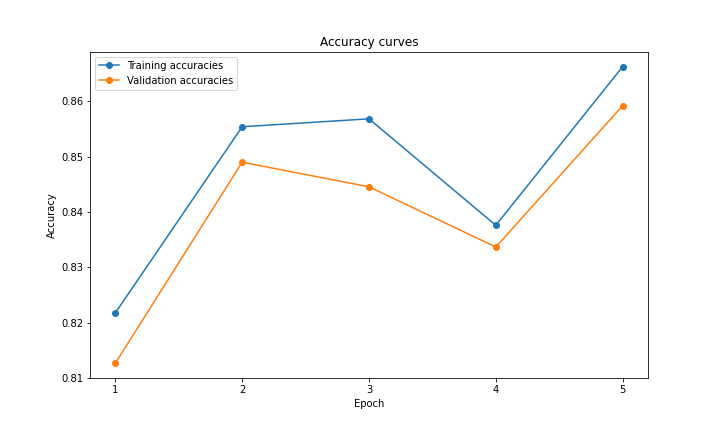

The training and validation accuracies over the course of training:

Accuracy of the network on the validation images: 85%

Accuracy of the network on the test images: 85%

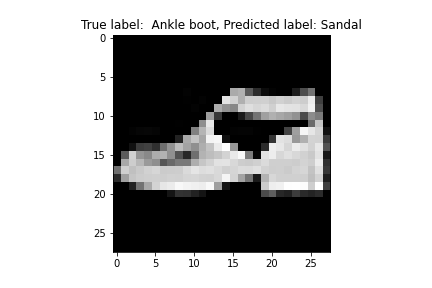

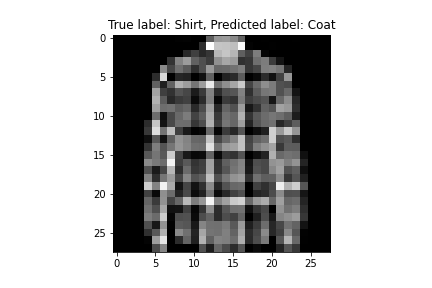

Class accuracies

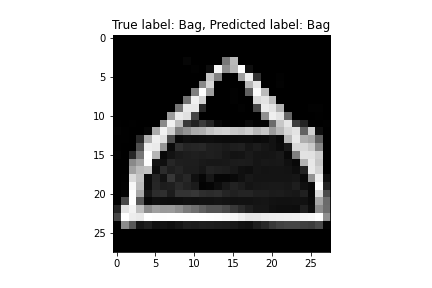

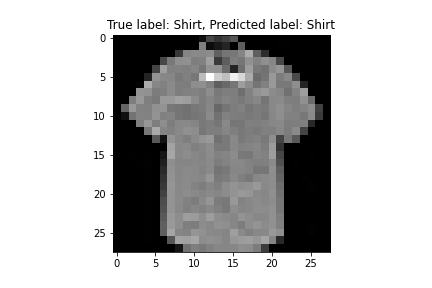

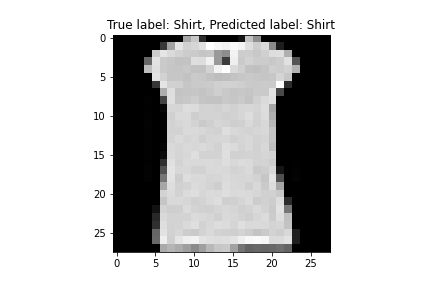

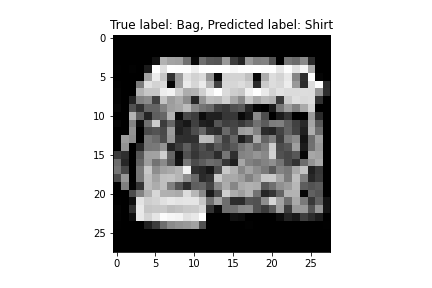

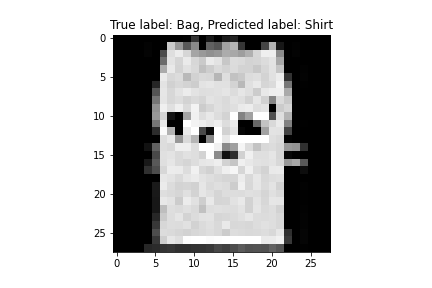

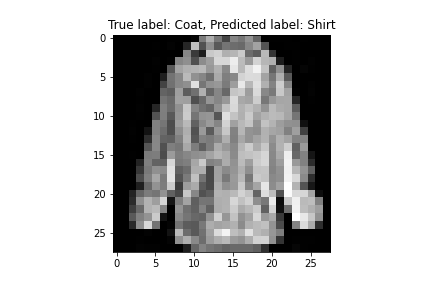

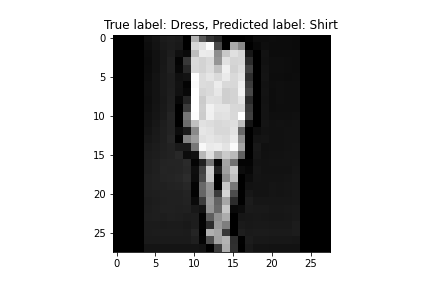

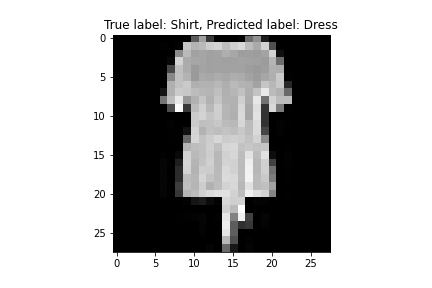

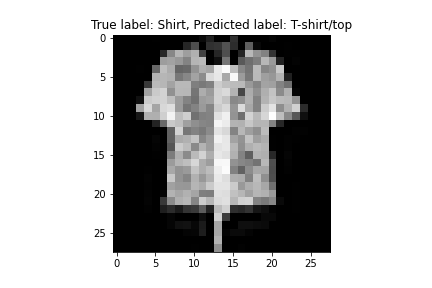

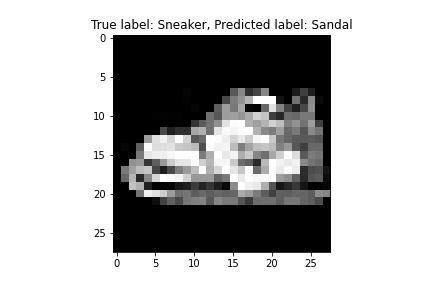

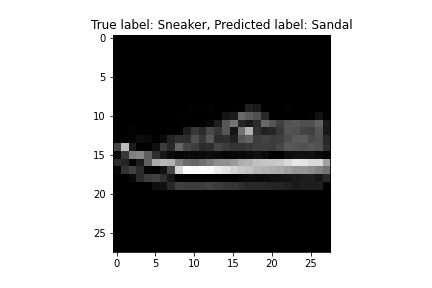

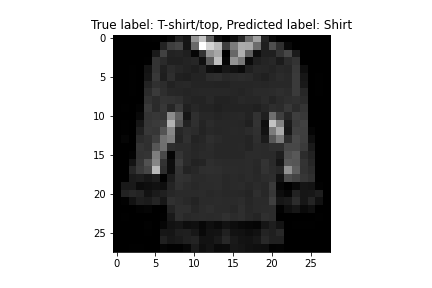

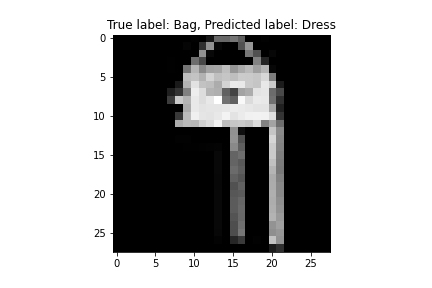

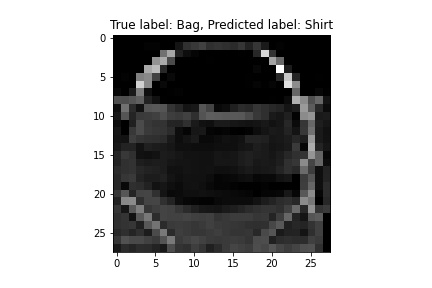

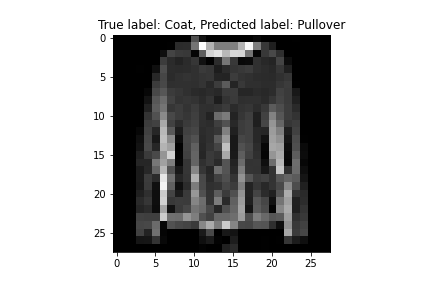

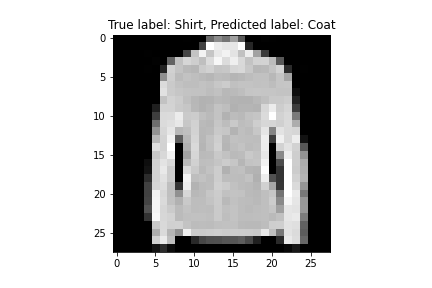

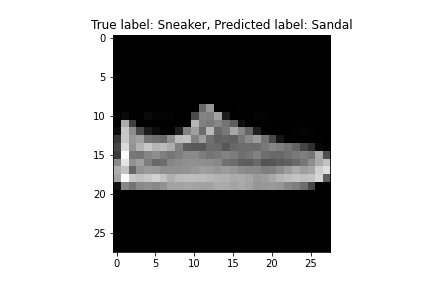

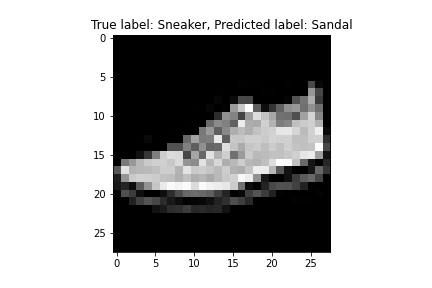

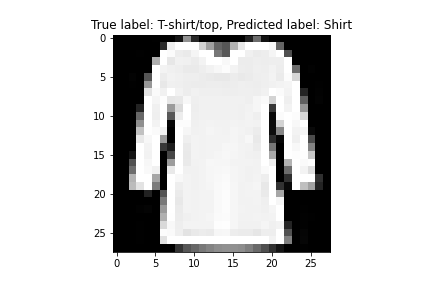

For both the validation and test sets, "Bag" had the highest accuracy and "Shirt" had the lowest.
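Per-class accuracy is the fraction of examples of each true class that the network labels correctly. The sketch below computes it in plain Python; the function name `per_class_accuracy` is hypothetical, and the three-class predictions and labels in the usage lines are made-up toy values, not results from this project.

```python
from typing import Dict, List, Sequence


def per_class_accuracy(preds: Sequence[int], labels: Sequence[int],
                       class_names: List[str]) -> Dict[str, float]:
    """Fraction of examples of each true class that were predicted correctly."""
    correct = [0] * len(class_names)
    total = [0] * len(class_names)
    for p, y in zip(preds, labels):
        total[y] += 1
        correct[y] += int(p == y)
    # NaN marks a class with no examples in the evaluated set.
    return {name: (correct[i] / total[i] if total[i] else float("nan"))
            for i, name in enumerate(class_names)}


# Toy values for illustration only.
names = ["Shirt", "Bag", "Coat"]
labels = [0, 0, 0, 1, 1, 2, 2]
preds = [0, 1, 2, 1, 1, 2, 0]
acc = per_class_accuracy(preds, labels, names)
print(max(acc, key=acc.get), min(acc, key=acc.get))  # Bag Shirt
```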

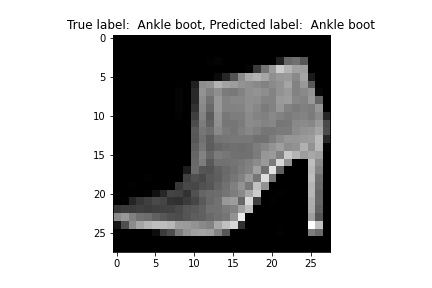

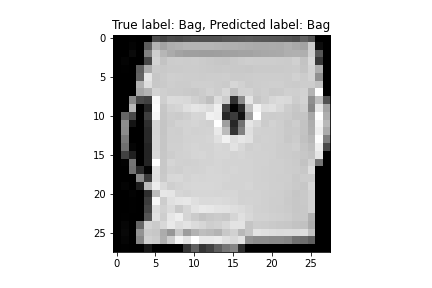

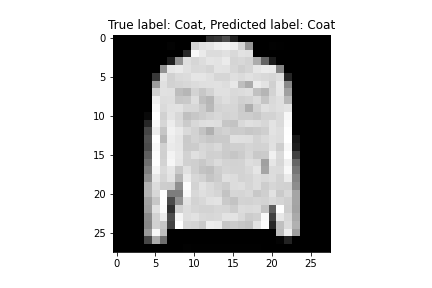

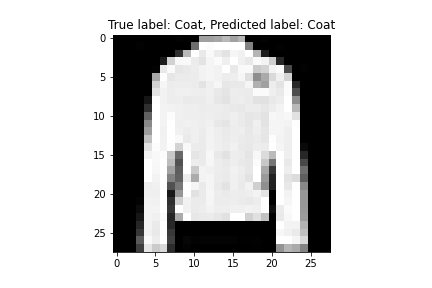

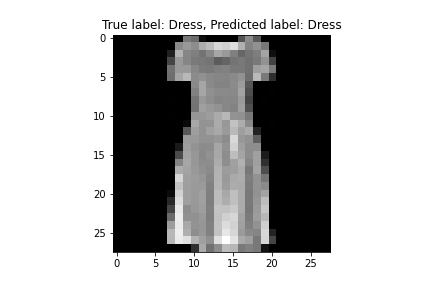

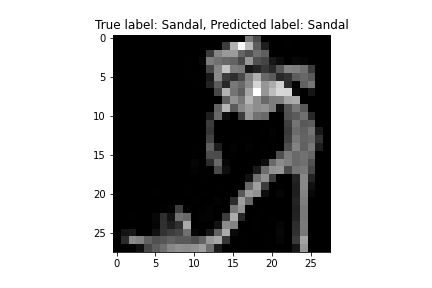

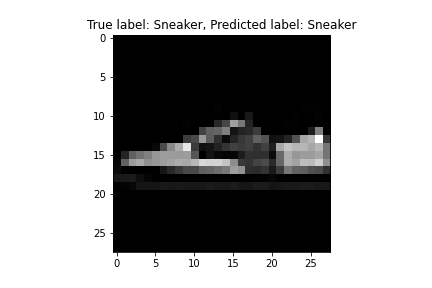

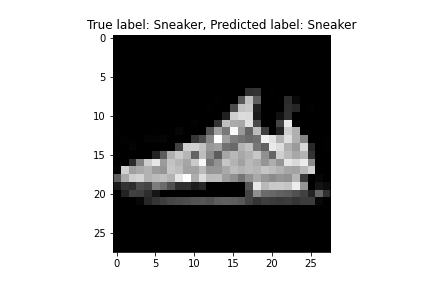

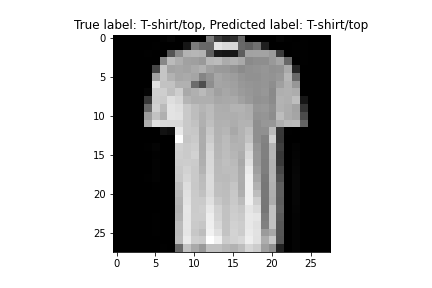

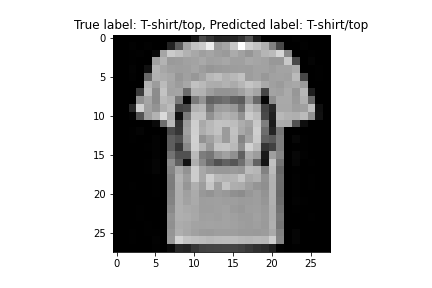

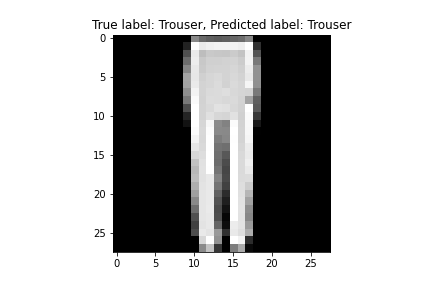

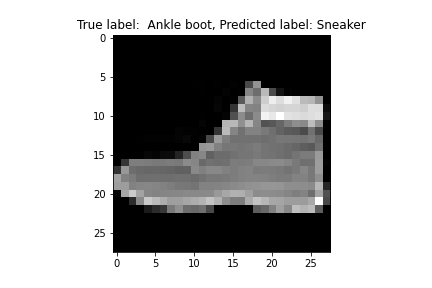

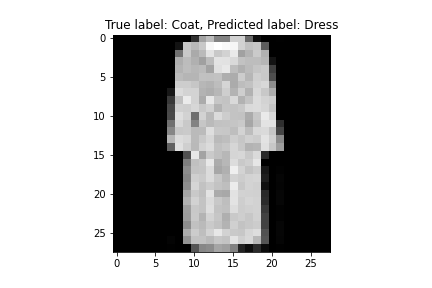

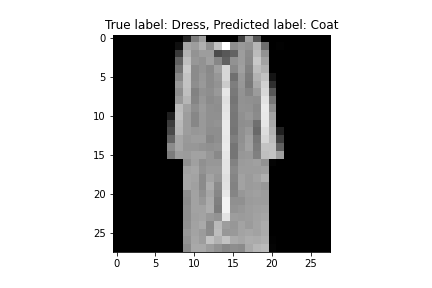

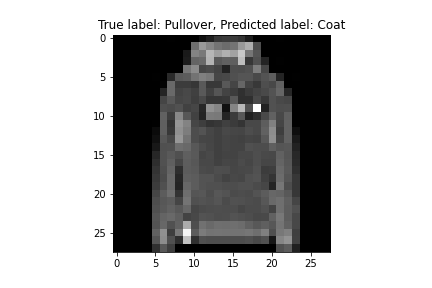

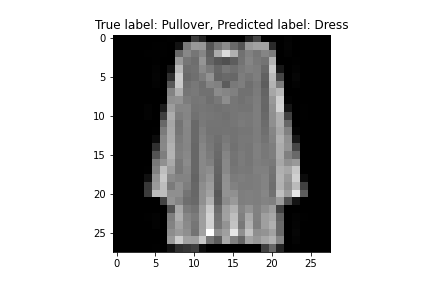

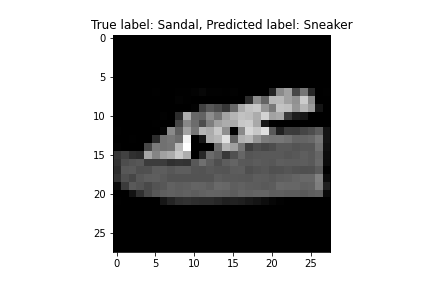

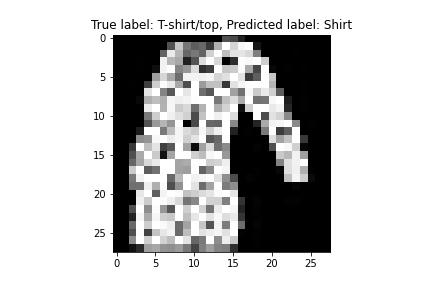

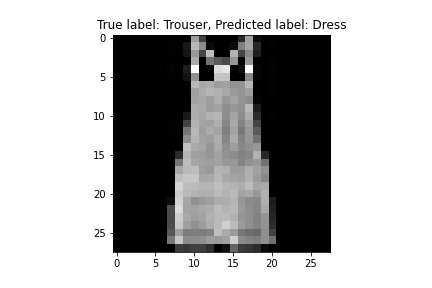

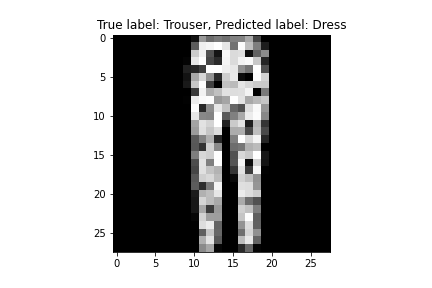

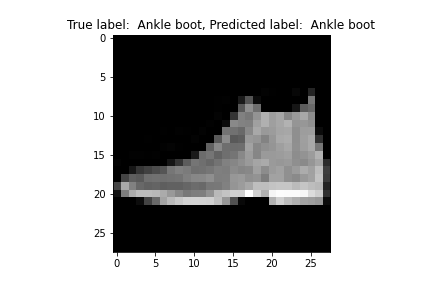

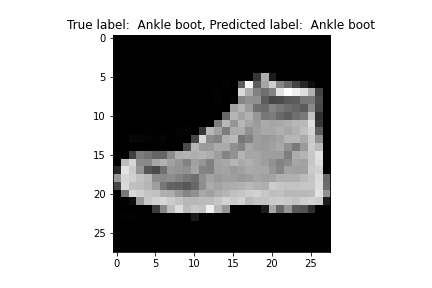

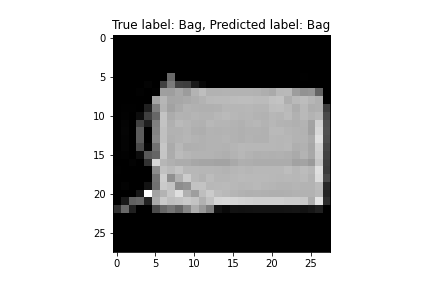

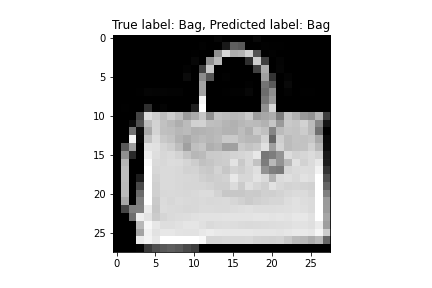

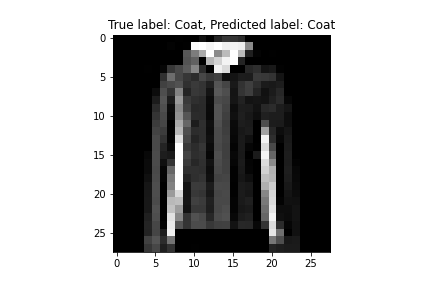

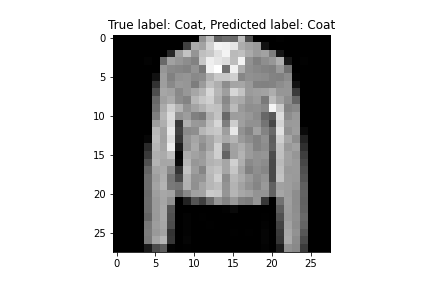

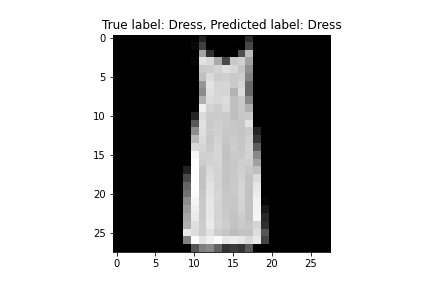

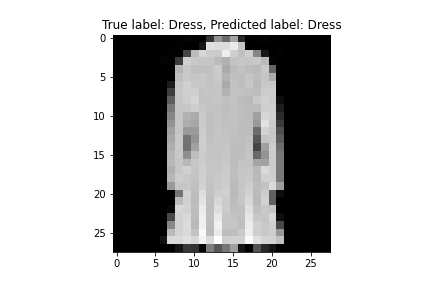

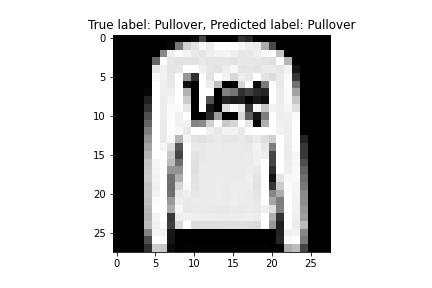

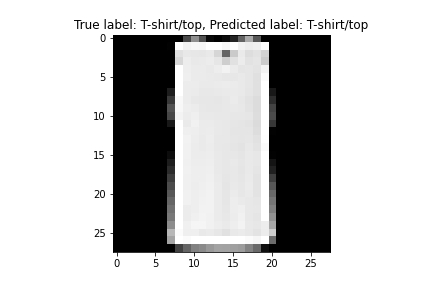

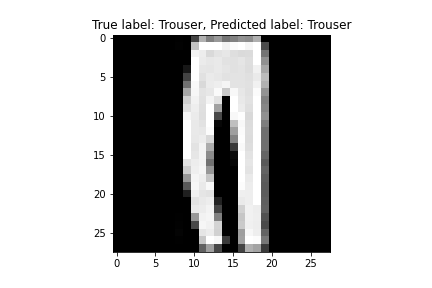

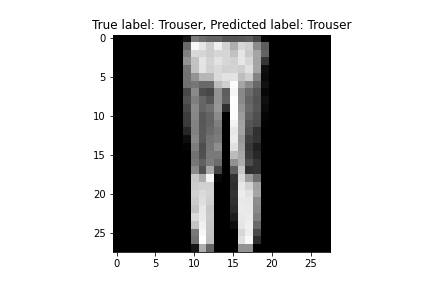

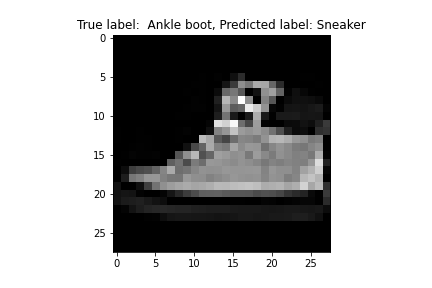

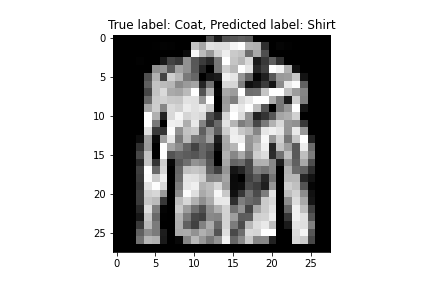

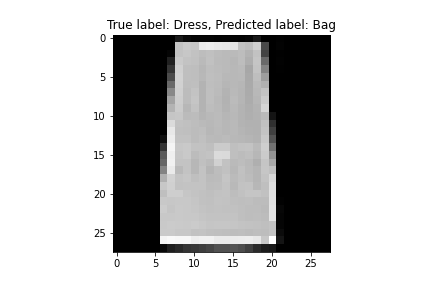

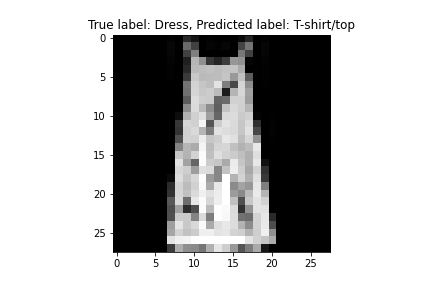

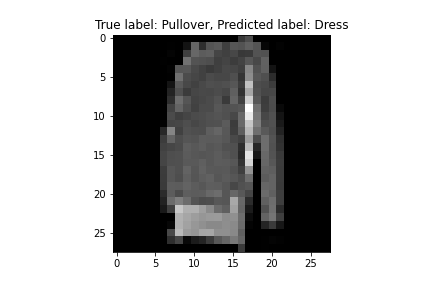

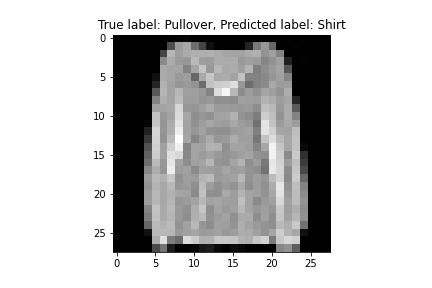

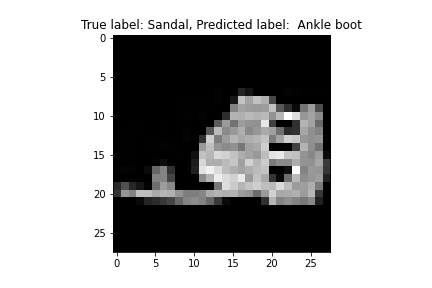

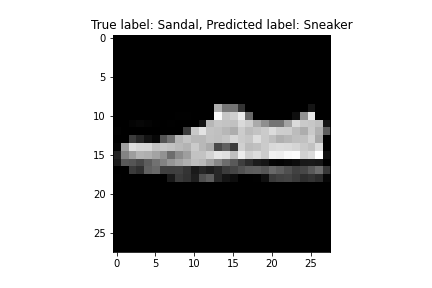

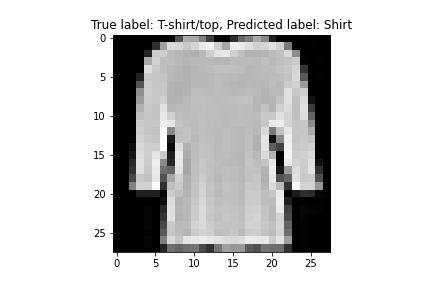

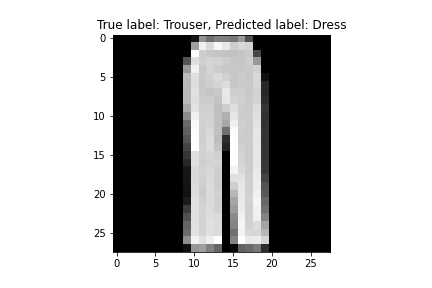

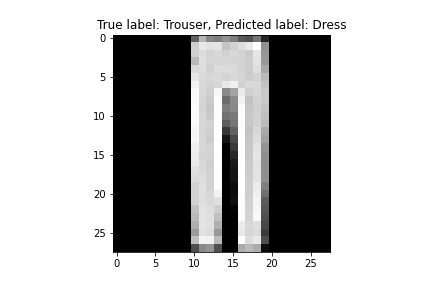

Validation set examples:

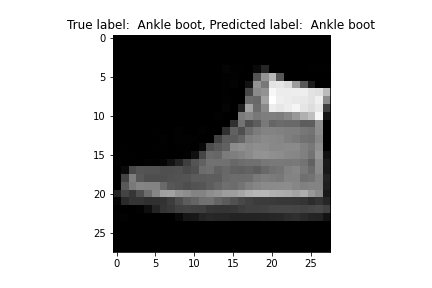

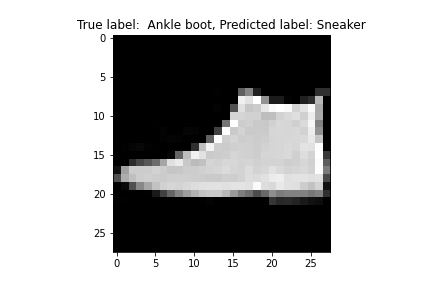

Test set examples:

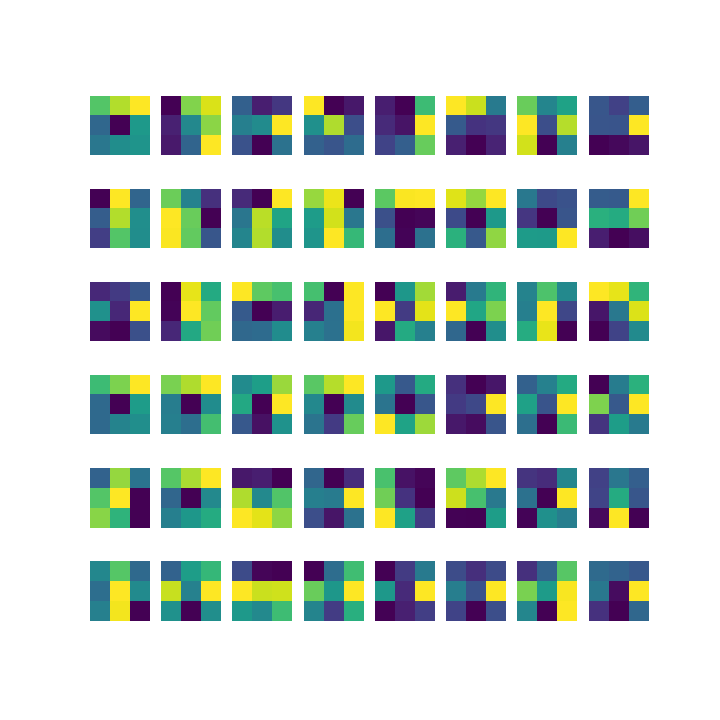

Visualized filters

These are the learned 3x3 filters of the first convolutional layer (before nonlinearities were applied).
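Because these are the raw weights, visualizing them only requires reading the first layer's `weight` tensor and rescaling it for display. The sketch below assumes a first layer with 1 input channel and 48 output channels, matching the Part 1 description; the helper name `filters_for_display` is hypothetical, and normalizing each filter independently is one display choice among several (a shared scale across all filters is another).

```python
import torch
import torch.nn as nn


def filters_for_display(conv: nn.Conv2d) -> torch.Tensor:
    """Return conv weights as (out_channels, kH, kW) images scaled to [0, 1].

    The raw weights are read directly, so no nonlinearity is involved.
    Each filter is min-max normalized on its own so its pattern is visible.
    """
    w = conv.weight.detach().clone().mean(dim=1)  # collapse input channels: (out, kH, kW)
    flat = w.reshape(w.size(0), -1)
    lo = flat.min(dim=1, keepdim=True).values
    hi = flat.max(dim=1, keepdim=True).values
    flat = (flat - lo) / (hi - lo).clamp(min=1e-8)  # guard against constant filters
    return flat.reshape(w.shape)


# Hypothetical stand-in for the trained model's first layer.
conv1 = nn.Conv2d(1, 48, kernel_size=3)
imgs = filters_for_display(conv1)
print(imgs.shape)  # torch.Size([48, 3, 3])
```

Each `imgs[i]` can then be drawn with matplotlib, e.g. `plt.imshow(imgs[i].numpy(), cmap="gray")`.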

Part 2: Semantic Segmentation

I split the provided training data into a training set and a 100-image validation set before loading the data.
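Splitting before loading can be done at the file level by shuffling the list of image paths with a fixed seed and holding out 100 of them. This is a sketch only: the helper name `split_files`, the seed, and the file names in the usage lines are hypothetical, since the write-up does not describe the dataset's layout.

```python
import random
from typing import List, Sequence, Tuple


def split_files(paths: Sequence[str], val_size: int = 100,
                seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle file paths and hold out `val_size` of them for validation."""
    shuffled = list(paths)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    return shuffled[val_size:], shuffled[:val_size]


# Hypothetical file names; the real naming scheme is not given in the write-up.
paths = [f"train/img_{i:04d}.jpg" for i in range(905)]
train_paths, val_paths = split_files(paths)
print(len(train_paths), len(val_paths))  # 805 100
```

Splitting the paths rather than loaded tensors keeps the two sets disjoint and avoids reading validation images into the training pipeline.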

CNN architecture

The model consists of 6 convolutional layers, as follows:

1. input --> Conv2d (in: 5 channels, out: 32 channels), filter size=3x3, padding=1 --> MaxPool2d, filter size=2x2 --> Upsample, scale=2 -->

2. --> Conv2d (in: 32 channels, out: 64 channels), filter size=3x3, padding=1 --> MaxPool2d, filter size=2x2 --> Upsample, scale=2 -->

3. --> Conv2d (in: 64 channels, out: 128 channels), filter size=3x3, padding=1 --> MaxPool2d, filter size=2x2 --> Upsample, scale=2 -->

4. --> Conv2d (in: 128 channels, out: 64 channels), filter size=3x3, padding=1 --> MaxPool2d, filter size=2x2 --> Upsample, scale=2 -->

5. --> Conv2d (in: 64 channels, out: 32 channels), filter size=3x3, padding=1 --> MaxPool2d, filter size=2x2 --> Upsample, scale=2 -->

6. --> Conv2d (in: 32 channels, out: 5 channels), filter size=3x3, padding=1 --> MaxPool2d, filter size=2x2 --> Upsample, scale=2 --> output
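The six layers above can be sketched in PyTorch as follows. Two assumptions are labeled here: the listing gives 5 input channels for the first layer, so the sketch exposes the input width as a parameter `in_channels` (defaulting to 3 on the assumption of RGB images; set it to match the actual input), and because the overview mentions nonlinearities but the listing does not show them, a ReLU is assumed after each of the first five convolutions. The names `block` and `FacadeNet` are hypothetical.

```python
import torch
import torch.nn as nn


def block(in_ch: int, out_ch: int, relu: bool = True) -> nn.Sequential:
    """Conv2d(3x3, padding=1) -> [ReLU] -> MaxPool2d(2) -> Upsample(x2).

    For even input sizes the pool/upsample pair restores the original H and W.
    """
    layers = [nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1)]
    if relu:
        layers.append(nn.ReLU())  # assumed nonlinearity (not listed per layer)
    layers += [nn.MaxPool2d(2), nn.Upsample(scale_factor=2)]
    return nn.Sequential(*layers)


class FacadeNet(nn.Module):
    def __init__(self, in_channels: int = 3, num_classes: int = 5):
        super().__init__()
        widths = [in_channels, 32, 64, 128, 64, 32]
        blocks = [block(a, b) for a, b in zip(widths[:-1], widths[1:])]  # layers 1-5
        blocks.append(block(32, num_classes, relu=False))  # layer 6: raw class scores
        self.net = nn.Sequential(*blocks)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.net(x)


model = FacadeNet()
out = model(torch.zeros(2, 3, 64, 64))
print(out.shape)  # torch.Size([2, 5, 64, 64])
```

The output has one channel per class at full resolution, which is the shape a per-pixel cross-entropy loss expects.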

Loss function, optimizer, and training hyperparameters

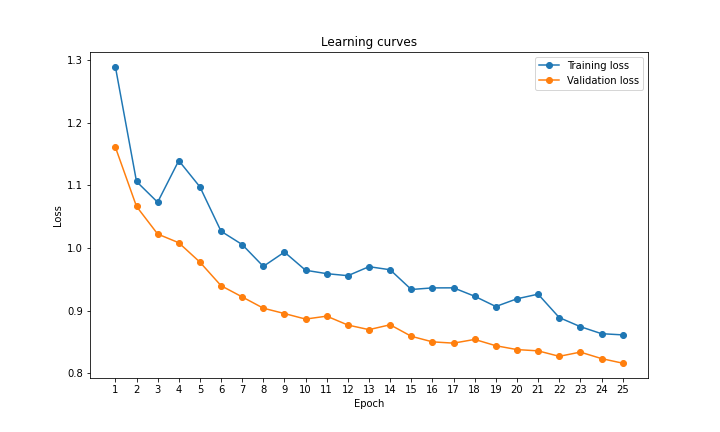

The data was loaded with a batch size of 1. I used optim.Adam as my optimizer with a learning rate of 0.001 and weight decay of 0.00001, nn.CrossEntropyLoss as my loss function, and trained the network for 25 epochs.
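For segmentation, `nn.CrossEntropyLoss` is applied per pixel: the network output has shape (N, C, H, W) and the target holds one class index per pixel with shape (N, H, W). The sketch below shows a single optimization step with the stated hyperparameters; the one-layer stand-in model and the 64x64 random image and labels are assumptions for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
num_classes = 5

# Hypothetical stand-in for the full network: any module mapping (N, 3, H, W) -> (N, 5, H, W).
model = nn.Conv2d(3, num_classes, kernel_size=3, padding=1)
optimizer = torch.optim.Adam(model.parameters(), lr=0.001, weight_decay=0.00001)
criterion = nn.CrossEntropyLoss()  # averages the loss over every pixel

image = torch.randn(1, 3, 64, 64)                        # batch size 1, as in the text
target = torch.randint(0, num_classes, (1, 64, 64))      # per-pixel class indices

optimizer.zero_grad()
logits = model(image)             # (1, 5, 64, 64)
loss = criterion(logits, target)  # scalar
loss.backward()
optimizer.step()
```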

Part 2 Results

Loss graph

The training and validation losses are plotted over the 25 training epochs.
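The validation curve comes from evaluating the loss on held-out data once per epoch without updating the weights. A minimal sketch of that evaluation step follows; the helper name `mean_loss` and the toy model and data in the usage lines are hypothetical.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


@torch.no_grad()  # no gradients are tracked, so weights cannot be updated here
def mean_loss(model: nn.Module, loader: DataLoader, criterion: nn.Module) -> float:
    """Average per-batch loss over a loader (per image when batch size is 1)."""
    model.eval()
    losses = [criterion(model(x), y).item() for x, y in loader]
    model.train()  # switch back to training mode for the next epoch
    return sum(losses) / len(losses)


# Toy usage with random data.
torch.manual_seed(0)
val_loader = DataLoader(TensorDataset(torch.randn(8, 4), torch.randint(0, 3, (8,))), batch_size=1)
toy = nn.Linear(4, 3)
val_loss = mean_loss(toy, val_loader, nn.CrossEntropyLoss())
```

Appending this value and the epoch's training loss to two lists each epoch gives the two series to plot.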

Average Precision

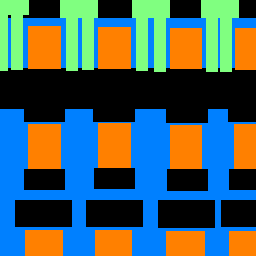

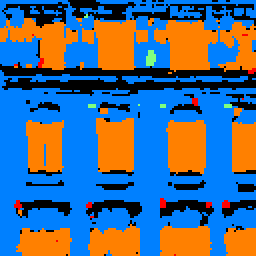

Average precision values fall between 0 and 1. These are the AP scores for the classes [others, facade, pillar, window, balcony], corresponding to [black, blue, green, orange, red]:

others (black): AP = 0.6560492458662615

facade (blue): AP = 0.7634421164751314

pillar (green): AP = 0.13545104091410726

window (orange): AP = 0.7752812762598212

balcony (red): AP = 0.39690699127001716

Average AP (approx.) = 0.545
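The write-up does not say how AP was computed. A common definition, the one documented for scikit-learn's `average_precision_score`, ranks examples by score and sums precision at each rank weighted by the increase in recall: AP = Σ (R_n − R_{n−1}) P_n. For segmentation, each pixel's predicted score for a class serves as the score, and the label is 1 if the pixel belongs to that class. The pure-Python sketch below implements that definition (the function name is hypothetical, and tie handling may differ slightly from scikit-learn), then checks the mean of the five reported values.

```python
from typing import Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Non-interpolated AP: sum over ranks of (R_k - R_{k-1}) * P_k.

    `labels` are 1 for positives (pixels of the class) and 0 otherwise.
    Tied scores are ordered by input position.
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    num_pos = sum(labels)
    if num_pos == 0:
        return float("nan")
    hits, ap = 0, 0.0
    for rank, i in enumerate(order, start=1):
        if labels[i]:
            hits += 1
            ap += (hits / rank) / num_pos  # precision at this rank times recall step 1/num_pos
    return ap


toy_ap = average_precision([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0])  # (1 + 2/3) / 2

# Mean of the five per-class AP values reported above.
reported = [0.6560492458662615, 0.7634421164751314, 0.13545104091410726,
            0.7752812762598212, 0.39690699127001716]
mean_ap = sum(reported) / len(reported)
print(round(mean_ap, 3))  # 0.545
```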

Sample result

The trained network classifies windows and facades (orange and blue) relatively well, but it performs poorly on pillars (green), which also have by far the lowest AP (about 0.135).