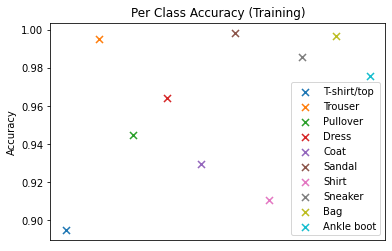

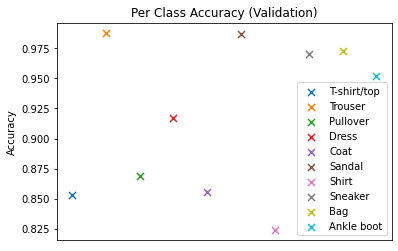

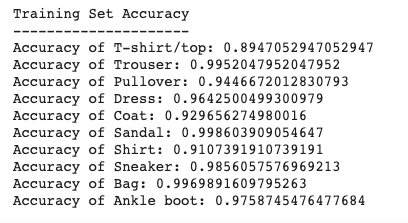

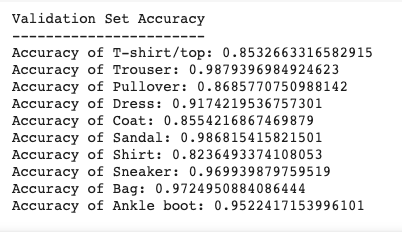

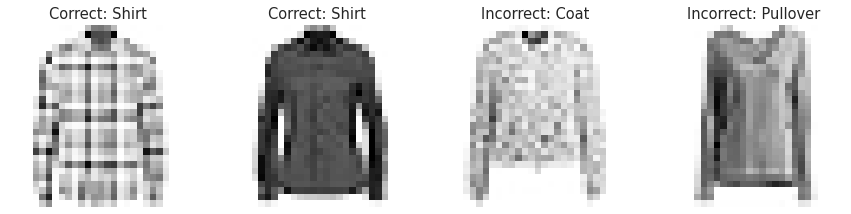

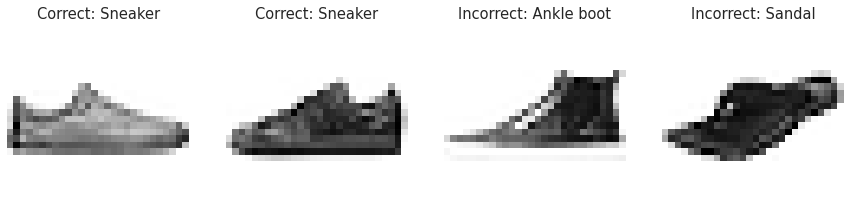

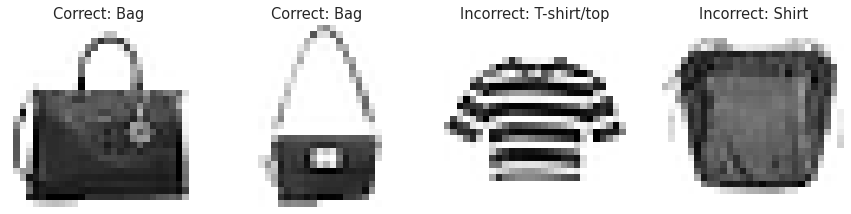

We can also view this in the raw output. From the plots and the raw output, we can clearly see that T-shirt/top and Shirt are the two classes on which it is hardest to achieve high accuracy, in both the training and validation datasets. Sandals, trousers, sneakers, and bags all reached very high accuracy in both datasets.
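Per-class accuracy of the kind plotted here can be computed by grouping predictions by their true label. The sketch below assumes integer labels in the standard Fashion-MNIST order (0 = T-shirt/top through 9 = Ankle boot); `per_class_accuracy` is a hypothetical helper, not the code used to produce the plots.

```python
import numpy as np

# Standard Fashion-MNIST class names, indexed by label (assumed ordering).
CLASSES = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
           "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

def per_class_accuracy(y_true, y_pred, n_classes=10):
    """Fraction of samples of each true class that were predicted correctly."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    acc = np.full(n_classes, np.nan)  # NaN for classes with no samples
    for c in range(n_classes):
        mask = y_true == c
        if mask.any():
            acc[c] = np.mean(y_pred[mask] == c)
    return acc

if __name__ == "__main__":
    y_true = [0, 0, 1, 1, 6, 6]
    y_pred = [0, 6, 1, 1, 0, 6]
    for name, a in zip(CLASSES, per_class_accuracy(y_true, y_pred)):
        print(f"{name:12s} {a:.2f}")
```

Running the same function on the training and validation predictions separately gives the two sets of per-class numbers being compared.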

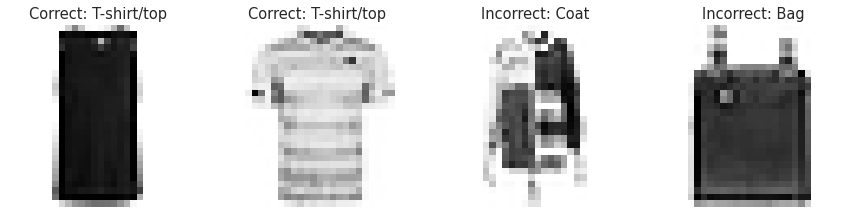

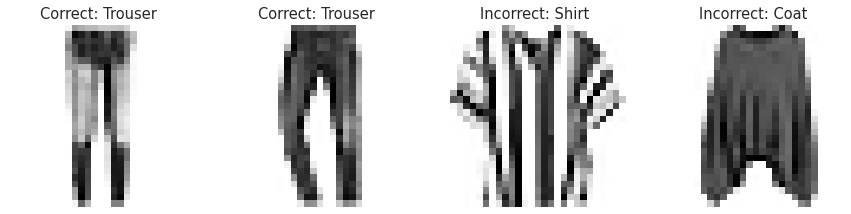

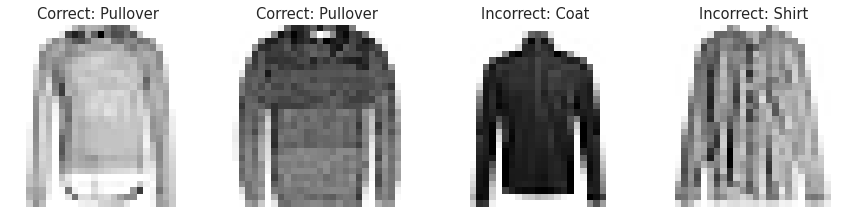

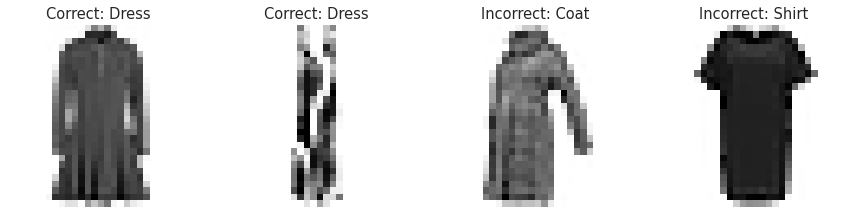

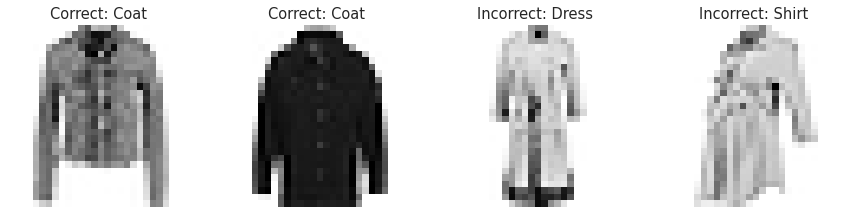

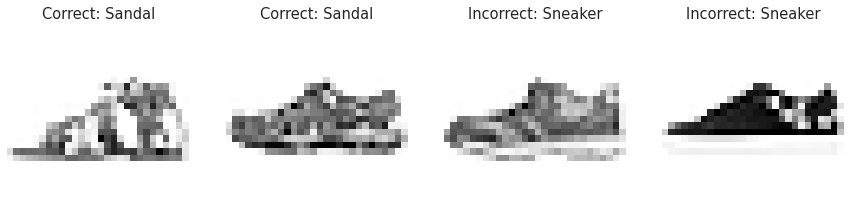

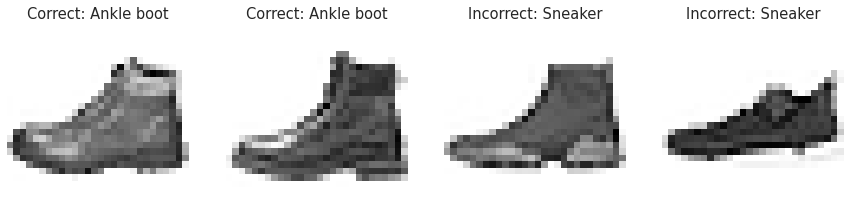

Next, we show 2 images from each class where the classifier correctly classifies the clothing, and 2 images where it does not.
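One way to choose such examples is to index the validation set by true class and split each class into correctly and incorrectly classified samples. This is a minimal sketch; `pick_examples` is a hypothetical helper, and it assumes "incorrect" means a sample of that true class that received a different predicted label.

```python
import numpy as np

def pick_examples(y_true, y_pred, n_classes=10, k=2):
    """For each class, return up to k correctly and up to k incorrectly classified indices."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    picks = {}
    for c in range(n_classes):
        in_class = y_true == c
        correct = np.flatnonzero(in_class & (y_pred == c))[:k]
        wrong = np.flatnonzero(in_class & (y_pred != c))[:k]
        picks[c] = (correct.tolist(), wrong.tolist())
    return picks

if __name__ == "__main__":
    print(pick_examples([0, 0, 0, 1, 1], [0, 1, 0, 1, 0], n_classes=2))
```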


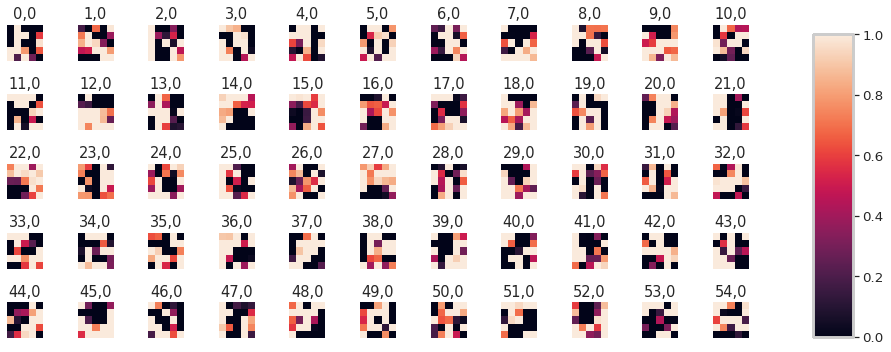

We can also visualize the filters learned by the individual layers of the convolutional neural network. Here, we show the 55 filters of the first layer.
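A filter visualization like this amounts to tiling the first layer's weight tensor into one image. The sketch below assumes the weights have been exported as a NumPy array of shape (N, C, k, k), for example via `.weight.detach().cpu().numpy()` on the first `nn.Conv2d`; `filters_to_grid` is a hypothetical helper, and each filter is rescaled independently, so only its pattern (not its absolute magnitude) is preserved.

```python
import numpy as np

def filters_to_grid(weights, n_cols=8, pad=1):
    """Tile conv filters of shape (N, C, k, k) into a single 2D image for display.

    Filters are averaged over input channels (a no-op for grayscale input) and
    min-max scaled to [0, 1] one at a time; padding pixels are set to 1.
    """
    w = np.asarray(weights, dtype=float).mean(axis=1)  # (N, k, k)
    n, k, _ = w.shape  # assumes square kernels
    n_rows = int(np.ceil(n / n_cols))
    grid = np.ones((n_rows * (k + pad) + pad, n_cols * (k + pad) + pad))
    for i, f in enumerate(w):
        lo, hi = f.min(), f.max()
        f = (f - lo) / (hi - lo) if hi > lo else np.zeros_like(f)
        r, c = divmod(i, n_cols)
        top, left = pad + r * (k + pad), pad + c * (k + pad)
        grid[top:top + k, left:left + k] = f
    return grid
```

The resulting array can be shown with `matplotlib.pyplot.imshow(grid, cmap="gray")`.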

Part 2: Semantic Segmentation

Overview

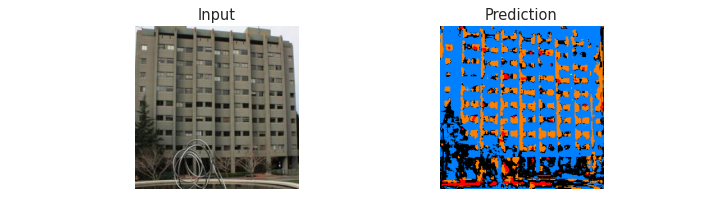

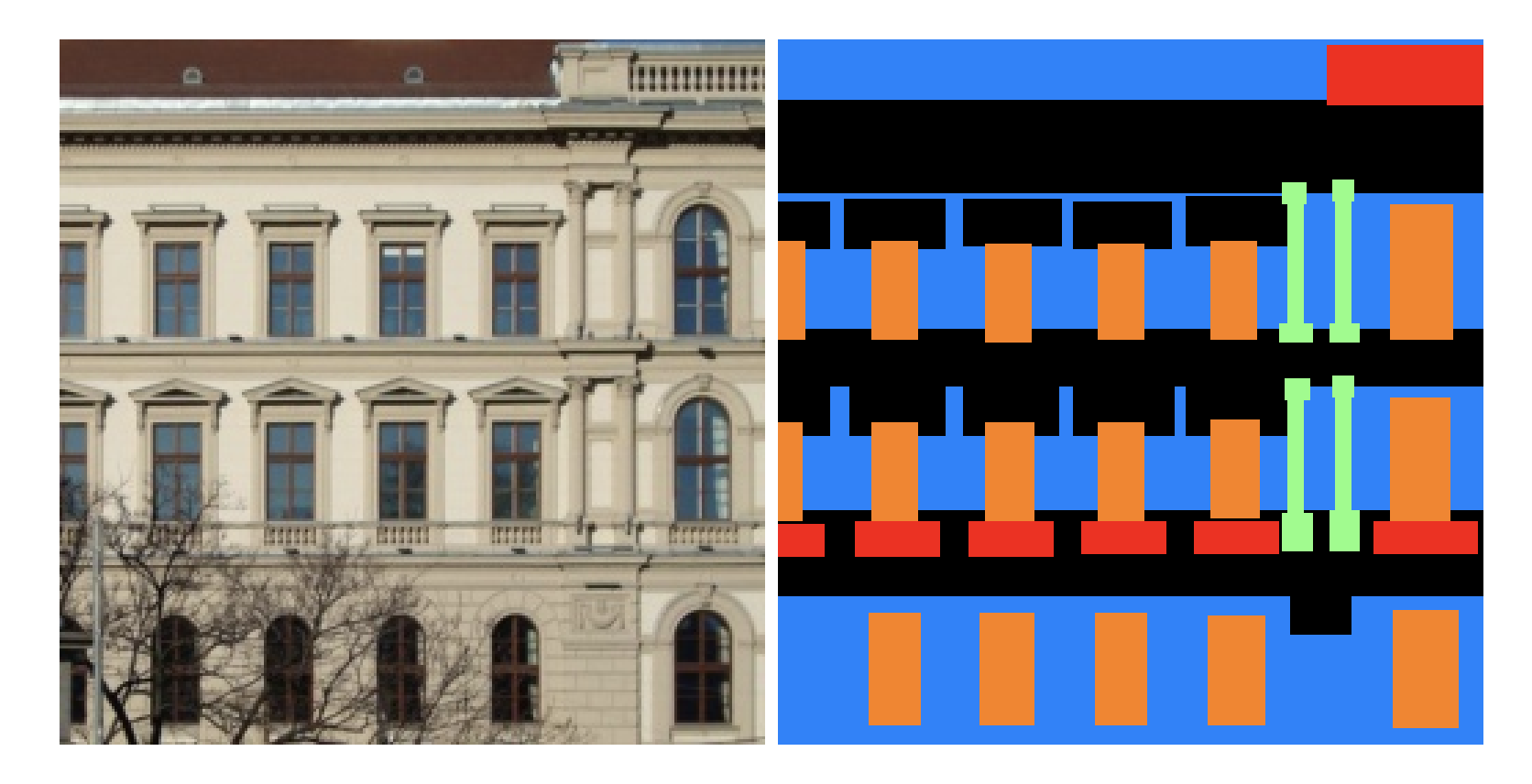

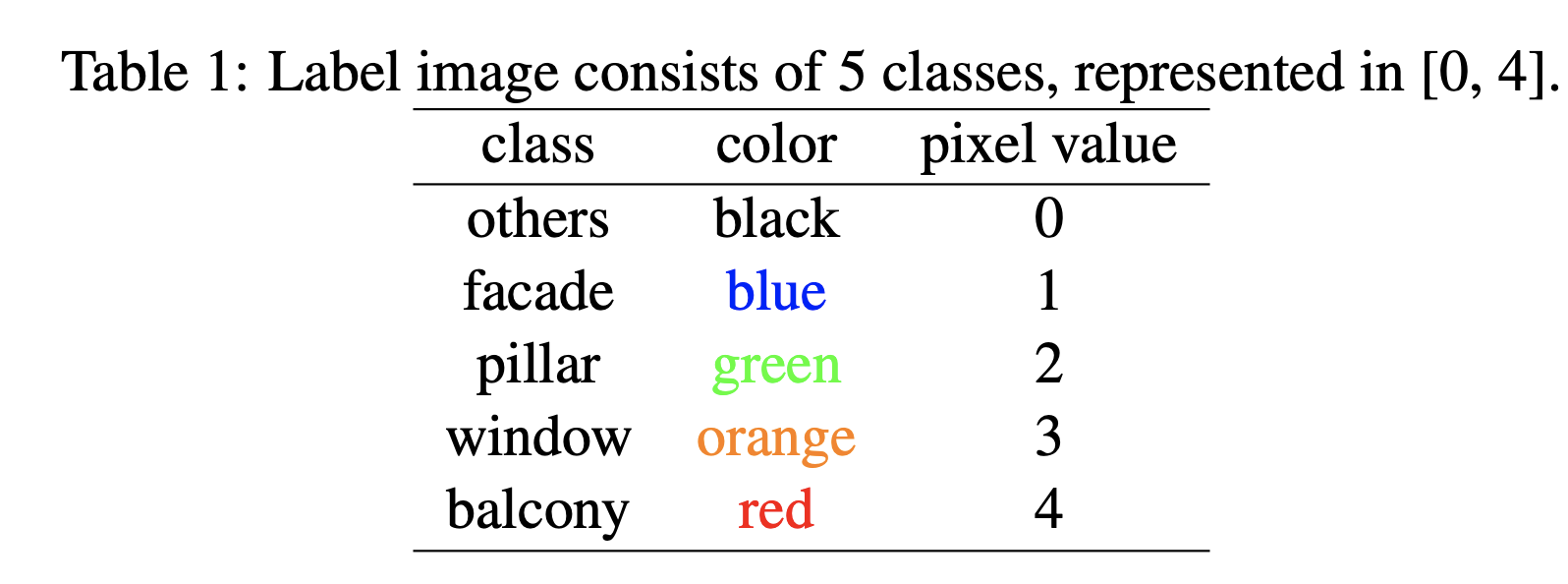

In this part of the project, we build a convolutional neural network that assigns each pixel in an image to its correct object class. We use the Mini Facade dataset and, as in the previous part, run on a CUDA GPU through Google Colab to speed up computation. The image and table below show an example of what we are trying to achieve.

The table lists 5 classes (or features) we wish to classify given an image (of a facade from the Mini Facade dataset). The images on the left show an example image of a facade, with the semantic segmentation shown in colors corresponding to the table. In this case, the semantic segmentation shown is the ground truth. Below, we attempt to design and train a convolutional neural network to achieve an average precision (AP) of at least 0.45 (45%) across all 5 classes.
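For reference, the per-class AP used as the target here is commonly defined as AP = Σ_n (R_n − R_{n−1}) P_n, where P_n and R_n are the precision and recall at the n-th highest score. With distinct scores this equals the mean of the precision values at the ranks of the true positives, and the overall figure is the mean of the 5 per-class values. The sketch below is an assumption-based illustration (the project may instead use a library routine such as scikit-learn's `average_precision_score`); `average_precision` and `mean_ap` are hypothetical names.

```python
import numpy as np

def average_precision(scores, targets):
    """AP for one class, sum over ranks of (R_n - R_{n-1}) * P_n.

    scores: predicted probabilities for the class; targets: 1 = positive, 0 = negative.
    Ties in scores are broken by sort order (a simplification).
    """
    scores = np.asarray(scores, dtype=float).ravel()
    targets = np.asarray(targets).ravel()
    n_pos = targets.sum()
    if n_pos == 0:
        return float("nan")
    order = np.argsort(-scores, kind="stable")  # highest score first
    hits = targets[order]
    precision = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    # Recall grows by 1 / n_pos exactly at the ranks of true positives.
    return float((precision * hits).sum() / n_pos)

def mean_ap(probs, onehot):
    """probs, onehot: arrays of shape (n_class, ...). Returns (per-class APs, mean AP)."""
    aps = np.array([average_precision(p, t) for p, t in zip(probs, onehot)])
    return aps, float(np.nanmean(aps))
```

For per-pixel segmentation, `probs` would hold the softmax outputs and `onehot` the one-hot ground truth, each flattened over images and pixels for every class.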

Dataloader

I split the data into training and validation sets with an 80/20 ratio. Additionally, I create a one-hot encoded set from 100 training samples so that average precision can be computed quickly during training.
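The split and the one-hot encoding can be sketched with NumPy alone. This assumes the labels are integer class maps of shape (H, W); `split_indices` and `one_hot` are hypothetical helpers, and the random seed is an arbitrary choice.

```python
import numpy as np

def split_indices(n, train_frac=0.8, seed=0):
    """Shuffle indices 0..n-1 and split them into training and validation parts."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n)
    n_train = int(round(train_frac * n))
    return idx[:n_train], idx[n_train:]

def one_hot(labels, n_class):
    """Turn an integer label map (H, W) into a one-hot array (n_class, H, W)."""
    labels = np.asarray(labels)
    return (np.arange(n_class)[:, None, None] == labels[None]).astype(np.float32)
```

A 100-sample evaluation subset could then be taken as the first 100 training indices, one-hot encoding each label map once up front so AP does not have to re-encode it every epoch.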

Convolutional Neural Network (CNN) Architecture

The CNN is organized as follows. It uses the architecture suggested in the project spec, with 5 convolution layers:

- nn.Conv2d(3, 32, 3, padding=2)
- ReLU
- nn.Conv2d(32, 64, 3, padding=1)
- ReLU
- nn.MaxPool2d(2)
- nn.ConvTranspose2d(64, 64, 2, stride=2)
- nn.Conv2d(64, 128, 3, padding=1)
- ReLU
- nn.Conv2d(128, 64, 3, padding=1)
- nn.MaxPool2d(2)
- nn.ConvTranspose2d(64, 32, 2, stride=2)
- nn.Conv2d(32, self.n_class, 3)
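Written as a PyTorch module, the layer stack looks roughly like the sketch below (the class name `FacadeNet` is hypothetical). The padding=2 on the first convolution and the unpadded final convolution cancel out: for even H and W the map goes H → H + 2 → (H + 2)/2 → H + 2 twice, and the last 3×3 convolution brings it back to H, so the output has one score per input pixel.

```python
import torch
import torch.nn as nn

class FacadeNet(nn.Module):
    """Layer stack from the list above; shape comments assume even H and W."""

    def __init__(self, n_class=5):
        super().__init__()
        self.n_class = n_class
        self.layers = nn.Sequential(
            nn.Conv2d(3, 32, 3, padding=2),           # H -> H + 2
            nn.ReLU(),
            nn.Conv2d(32, 64, 3, padding=1),          # size unchanged
            nn.ReLU(),
            nn.MaxPool2d(2),                          # -> (H + 2) / 2
            nn.ConvTranspose2d(64, 64, 2, stride=2),  # -> H + 2
            nn.Conv2d(64, 128, 3, padding=1),
            nn.ReLU(),
            nn.Conv2d(128, 64, 3, padding=1),
            nn.MaxPool2d(2),                          # -> (H + 2) / 2
            nn.ConvTranspose2d(64, 32, 2, stride=2),  # -> H + 2
            nn.Conv2d(32, self.n_class, 3),           # no padding: -> H
        )

    def forward(self, x):
        # x: (N, 3, H, W) image batch -> (N, n_class, H, W) per-pixel class scores
        return self.layers(x)

if __name__ == "__main__":
    net = FacadeNet()
    print(net(torch.zeros(1, 3, 256, 256)).shape)  # torch.Size([1, 5, 256, 256])
```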

As recommended in the project spec, the prediction loss is the cross-entropy loss (torch.nn.CrossEntropyLoss), and the network is trained with the Adam optimizer. To reach high classification accuracy, I train the network for 20 epochs with a learning rate of 1e-3 and a weight decay of 1e-5.
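A minimal training loop with these settings might look like the sketch below. It assumes each batch is an `(images, labels)` pair with images of shape (N, 3, H, W) and labels of shape (N, H, W) holding integer class indices, which is the form `nn.CrossEntropyLoss` accepts for per-pixel targets; `train` is a hypothetical helper, and on Colab one would pass `device="cuda"`.

```python
import torch
import torch.nn as nn

def train(model, train_loader, epochs=20, lr=1e-3, weight_decay=1e-5, device="cpu"):
    """Train with cross-entropy loss and Adam; return the mean training loss per epoch."""
    model.to(device)
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr, weight_decay=weight_decay)
    history = []
    for _ in range(epochs):
        model.train()
        total, count = 0.0, 0
        for images, labels in train_loader:
            # images: (N, 3, H, W) floats; labels: (N, H, W) int64 class indices
            images, labels = images.to(device), labels.to(device)
            optimizer.zero_grad()
            loss = criterion(model(images), labels)
            loss.backward()
            optimizer.step()
            total += loss.item() * images.size(0)
            count += images.size(0)
        history.append(total / count)  # sample-weighted mean loss for this epoch
    return history
```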

Results

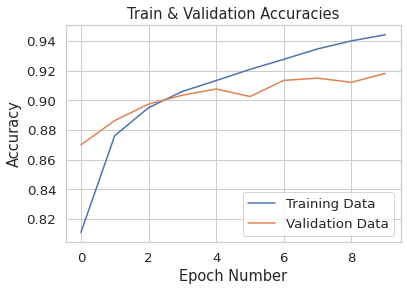

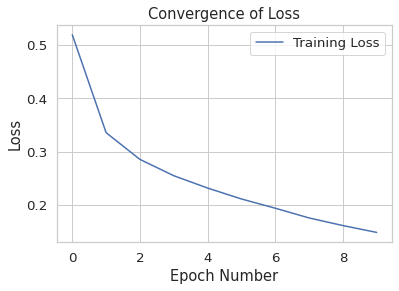

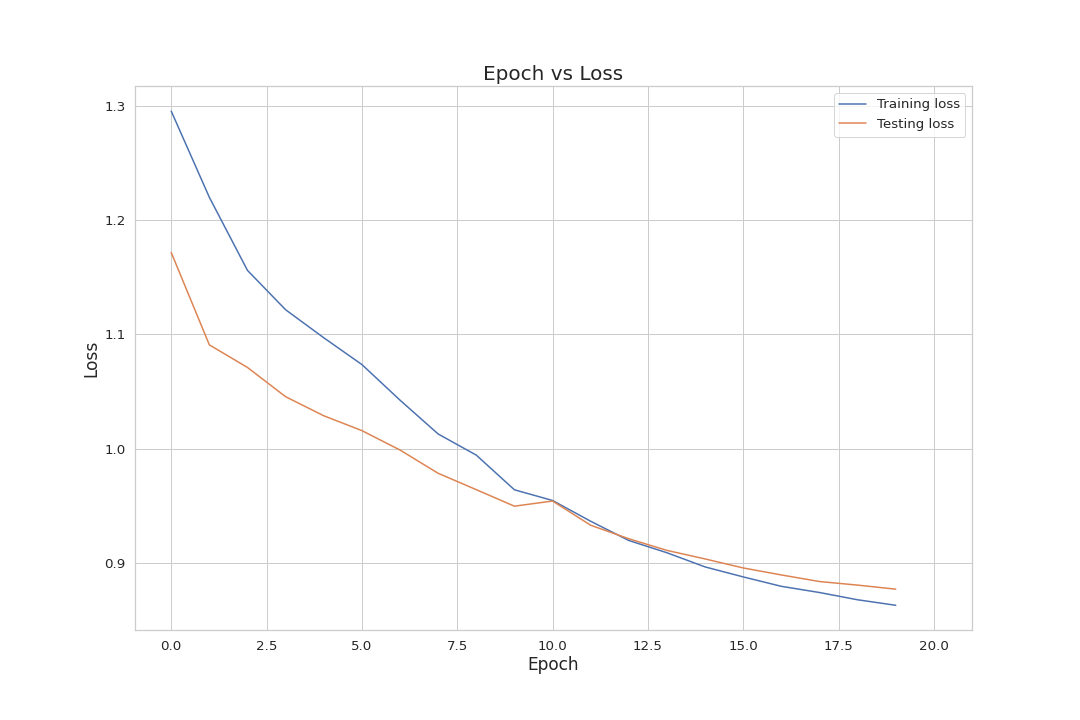

Below, we plot the training and validation loss across all 20 epochs (0-19). The training loss converges to 0.8629 and the validation loss to 0.8770.

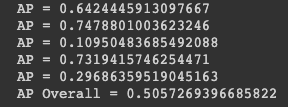
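The validation curve comes from evaluating the same loss on held-out data without updating the weights. A minimal sketch, assuming the same `(images, labels)` batch format as in training; `evaluate` is a hypothetical helper that would be called once per epoch alongside the training step.

```python
import torch
import torch.nn as nn

@torch.no_grad()
def evaluate(model, val_loader, device="cpu"):
    """Mean per-sample cross-entropy over a validation loader, with no gradient updates."""
    model.to(device)
    model.eval()
    criterion = nn.CrossEntropyLoss()
    total, count = 0.0, 0
    for images, labels in val_loader:
        images, labels = images.to(device), labels.to(device)
        total += criterion(model(images), labels).item() * images.size(0)
        count += images.size(0)
    return total / count
```

As a sanity check, a model that outputs identical scores for all 5 classes has a loss of ln 5 ≈ 1.609, so losses around 0.86 to 0.88 are clearly better than chance.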

In terms of average precision, the model performed well, with an overall AP ("AP Overall" in the figure below) of 0.505, above the 0.45 target. The per-class AP values in the figure are listed in the following order:

- Others

- Facade

- Pillar

- Window

- Balcony

From this, we see the neural net classifies facades, windows, and unspecified objects ("Others") well, but performs poorly on pillars and balconies. We expect the predictions below to reflect this.
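A prediction map assigns each pixel the class with the highest score, then colors it for display. This sketch assumes the network's output for one image is a NumPy array of shape (n_class, H, W); `logits_to_labels`, `colorize`, and the `PALETTE` colors are hypothetical, and the real colors are the ones given in the class table.

```python
import numpy as np

def logits_to_labels(logits):
    """Per-pixel class scores (n_class, H, W) -> integer label map (H, W)."""
    return np.argmax(logits, axis=0)

def colorize(labels, palette):
    """Map each label to an RGB color; palette has shape (n_class, 3)."""
    return np.asarray(palette, dtype=np.uint8)[labels]

# Hypothetical colors for the 5 classes (not the project's actual palette).
PALETTE = [[0, 0, 0], [0, 0, 255], [0, 255, 0], [255, 0, 0], [255, 255, 0]]
```

With a PyTorch model, the input to `logits_to_labels` would be `model(x)[0].detach().cpu().numpy()` for a single-image batch `x`.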

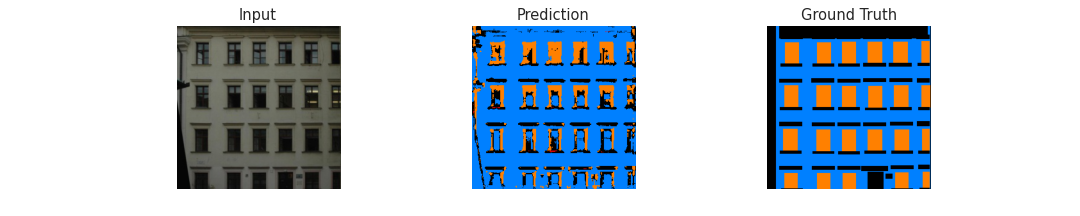

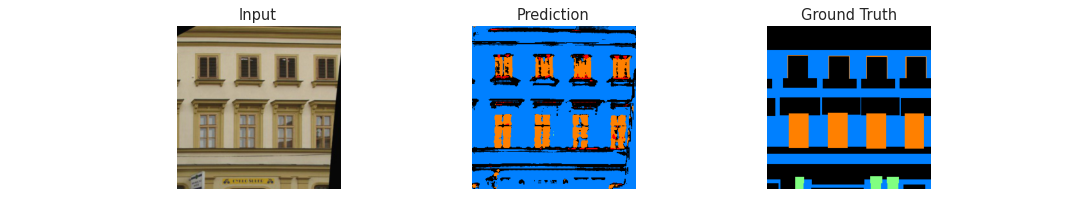

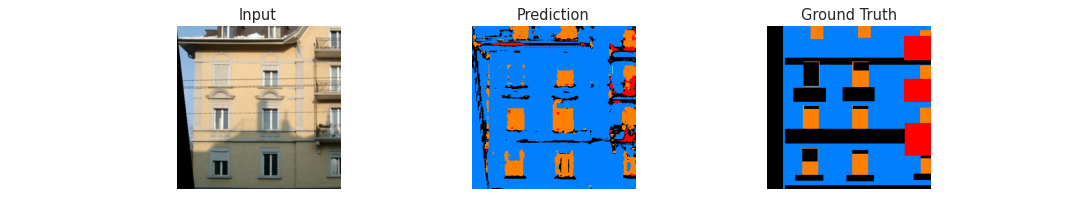

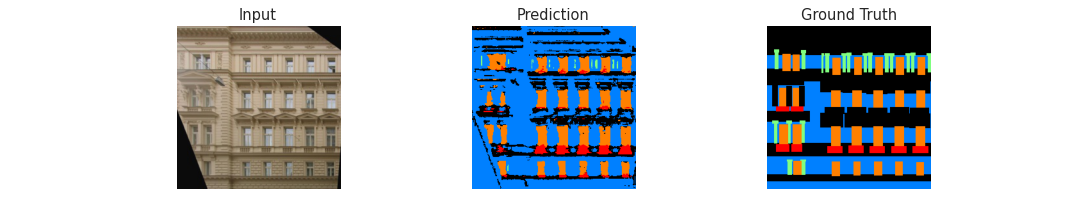

Here, we show the model's predicted classes for the facade images in the test_dev folder. The original input images are shown alongside the prediction and the ground truth. Overall, the neural net classifies windows and the facade itself very well, just as the AP scores indicate. It struggles to capture the pillars in the 3rd and 5th images. It detects balconies to some extent, but not well enough to make out a whole balcony, and it produces some false positives as well. These results make sense: windows and facade walls are more prevalent structures in the dataset than balconies and pillars, so the neural net learned to detect these classes better than the others.
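The prevalence argument can be checked directly by counting how many ground-truth pixels fall into each class. A minimal sketch, assuming the training labels are integer maps of shape (H, W) with values in 0..n_class-1; `class_pixel_frequencies` is a hypothetical helper.

```python
import numpy as np

def class_pixel_frequencies(label_maps, n_class=5):
    """Fraction of all labeled pixels that belong to each class, over a set of (H, W) maps."""
    counts = np.zeros(n_class, dtype=np.int64)
    for labels in label_maps:
        counts += np.bincount(np.asarray(labels).ravel(), minlength=n_class)[:n_class]
    return counts / counts.sum()
```

Low frequencies for the pillar and balcony classes would support the explanation above. If that is the case, a common remedy is to pass per-class weights to `nn.CrossEntropyLoss(weight=...)`.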

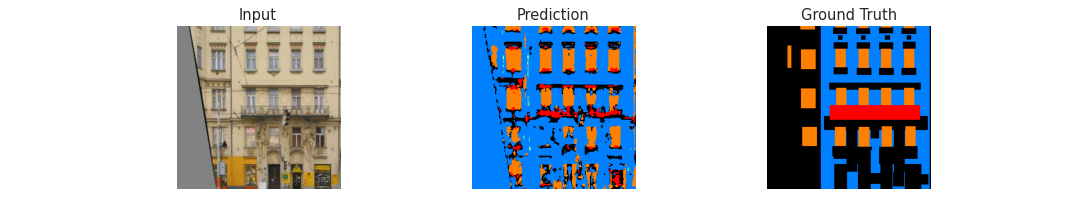

We also test the neural net on 2 images not originally in the test_dev folder: