Project Overview

In this project, I first solve an image classification problem on the Fashion-MNIST dataset (Part 1), and then a semantic segmentation problem on the Mini Facade dataset (Part 2).

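Data: We used the Fashion-MNIST dataset, which is available through torchvision's built-in datasets. Each image is a 28x28 grayscale image (dimension 28x28x1), which matches the single input channel of the first convolutional layer below.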

CNN: We used the following CNN:

- Layers

- Conv Layer 1: 5x5 kernel with 1 input channel and 6 output channels (followed by ReLU and max pool)

- Conv Layer 2: 5x5 kernel with 6 input channels and 16 output channels (followed by ReLU and max pool)

- Linear FC Layer 1: 256 to 120

- Linear FC Layer 2: 120 to 84

- Linear FC Layer 3: 84 to 10

- All conv layers are followed by ReLU and max pooling; all FC layers are followed by ReLU except the last layer

- Optimizer: Adam

- Loss function: cross-entropy loss

Hyperparameters:

- learning rate: 0.001

- epochs: 40

- batch-size: 4
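The architecture and hyperparameters listed above can be written down roughly as follows. This is a minimal PyTorch sketch, not the original training code: the class name `FashionCNN`, the 2x2 pooling window, and the absence of padding are assumptions, chosen because they take a 28x28 input to 16 x 4 x 4 = 256 features, which matches the input size of the first FC layer. A random batch stands in for real data.

```python
import torch
from torch import nn


class FashionCNN(nn.Module):
    """Two conv blocks followed by three fully connected layers."""

    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 6, kernel_size=5),   # 28x28 -> 24x24 (no padding assumed)
            nn.ReLU(),
            nn.MaxPool2d(2),                  # 24x24 -> 12x12 (2x2 pool assumed)
            nn.Conv2d(6, 16, kernel_size=5),  # 12x12 -> 8x8
            nn.ReLU(),
            nn.MaxPool2d(2),                  # 8x8 -> 4x4
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),                     # 16 * 4 * 4 = 256 features
            nn.Linear(256, 120),
            nn.ReLU(),
            nn.Linear(120, 84),
            nn.ReLU(),
            nn.Linear(84, num_classes),       # raw logits, no ReLU on the last layer
        )

    def forward(self, x):
        return self.classifier(self.features(x))


model = FashionCNN()
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

# One optimisation step on a random batch of 4 images (stand-in for real data).
images = torch.randn(4, 1, 28, 28)
labels = torch.randint(0, 10, (4,))
logits = model(images)
loss = criterion(logits, labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()
```

In a real run, the random batch would be replaced by batches of size 4 drawn from the training set, repeated for 40 epochs.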

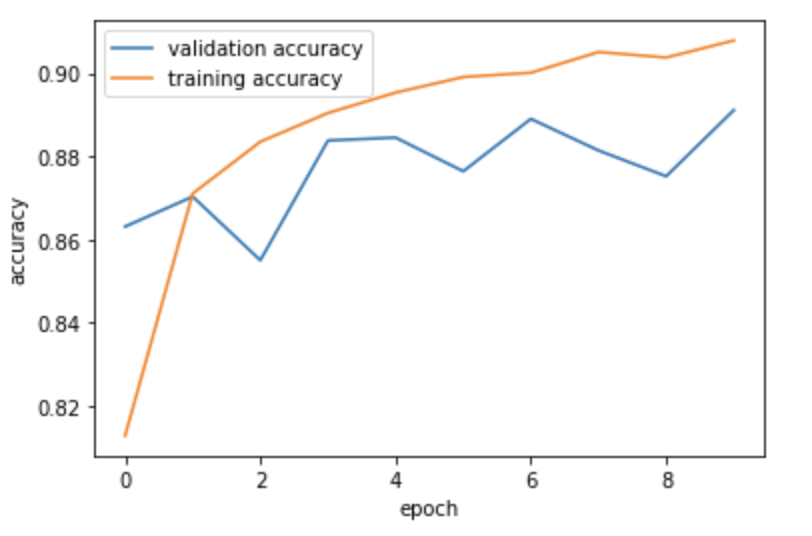

Section 1.2: Accuracy Curve

We also tracked the model's training and validation accuracy throughout training.

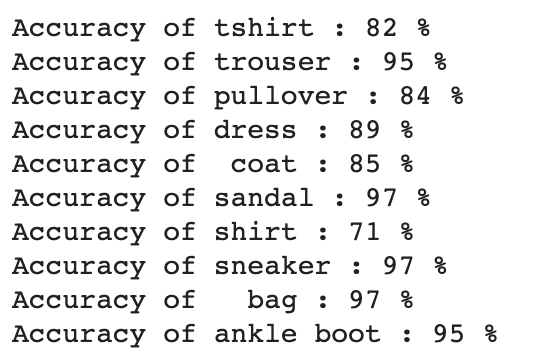

Section 1.3: Accuracy per class

Among all classes, "shirt" appears to be the hardest to classify correctly, while "bag" is the easiest.

| Class | Correctly Classified | Incorrectly Classified |

| tshirt | | |

| trouser | | |

| pullover | | |

| dress | | |

| coat | | |

| sandal | | |

| shirt | | |

| sneaker | | |

| bag | | |

| ankle boot | | |

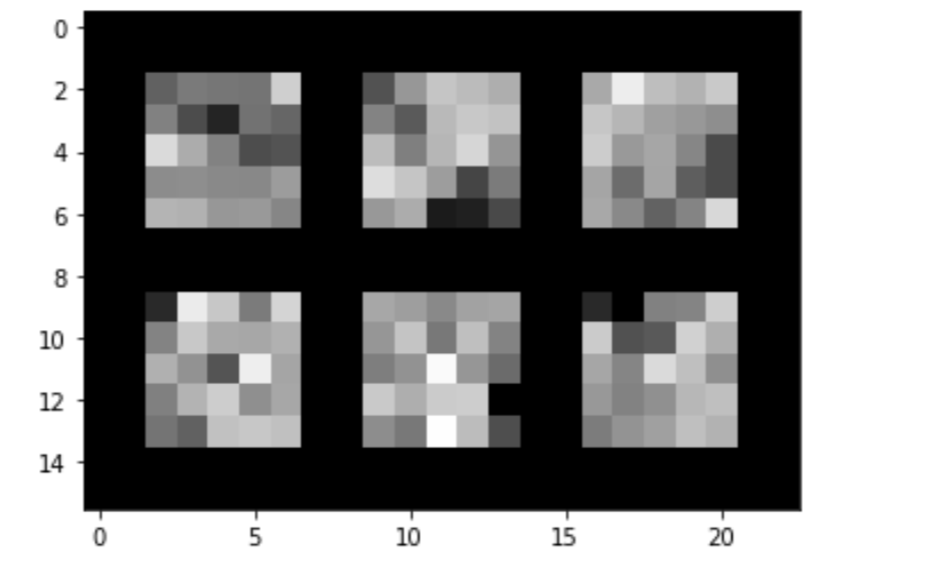
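The per-class accuracies discussed in this section can be computed by counting, for each true class, how many predictions match. The sketch below is one way to do that in plain Python; the function name `per_class_accuracy` and the toy labels and predictions are made up for illustration and are not the model's actual outputs. The class order follows the standard Fashion-MNIST label indices.

```python
from collections import Counter

CLASSES = ["tshirt", "trouser", "pullover", "dress", "coat",
           "sandal", "shirt", "sneaker", "bag", "ankle boot"]


def per_class_accuracy(labels, preds, num_classes):
    """Return {class index: fraction of that class predicted correctly}."""
    correct = Counter()
    total = Counter()
    for y, p in zip(labels, preds):
        total[y] += 1
        if y == p:
            correct[y] += 1
    # Classes that never appear in `labels` are left out to avoid dividing by zero.
    return {c: correct[c] / total[c] for c in range(num_classes) if total[c]}


# Toy example (hypothetical data): tshirt (0) and shirt (6) get confused, bag (8) does not.
labels = [0, 0, 6, 6, 8, 8]
preds = [0, 6, 0, 6, 8, 8]
acc = per_class_accuracy(labels, preds, len(CLASSES))
for idx, value in acc.items():
    print(f"{CLASSES[idx]}: {value:.2f}")
```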

Section 1.4: Visualization of the Learned Filters

Here we visualize some of the filters that our model learned.
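Filter weights can be negative and have an arbitrary range, so they are usually rescaled before being shown as grayscale images. The following is a small sketch of that rescaling step only, in plain Python; the helper name `normalise_filter` and the 2x2 example kernel are hypothetical, and the original visualization may have been produced differently. With a PyTorch model, the kernels would typically be read from a convolutional layer's `weight` tensor.

```python
def normalise_filter(kernel):
    """Rescale a 2D kernel (list of rows) to [0, 1] for display as a grayscale image."""
    flat = [w for row in kernel for w in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A constant kernel carries no contrast; show it as mid-gray.
        return [[0.5 for _ in row] for row in kernel]
    return [[(w - lo) / span for w in row] for row in kernel]


# Made-up 2x2 kernel; a learned filter in this report would be 5x5.
kernel = [[-1.0, 0.0], [0.0, 1.0]]
image = normalise_filter(kernel)
```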

Part 2: Semantic Segmentation

Section 2.1: CNN Architecture and Hyperparameters

For this semantic segmentation task, I used the following CNN:

- Layers

- Conv Layer 1: 5x5 kernel with 64 output channels

- ReLU

- Conv Layer 2: 5x5 kernel with 256 output channels

- ReLU

- Conv Layer 3: 5x5 kernel with 512 output channels

- ReLU

- Conv Layer 4: 5x5 kernel with 256 output channels

- ReLU

- Conv Layer 5: 5x5 kernel with 64 output channels

- ReLU

- Conv Layer 6: 5x5 kernel with 5 output channels

- (all convolutional layers have zero padding of size 2)

- Optimizer: Adam

- Loss function: cross-entropy loss

- Learning rate: 0.001

- Weight decay: 0.00001
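The network described above can be sketched in PyTorch as a plain stack of convolutions. This is an illustrative sketch under assumptions rather than the original code: the helper `conv_relu`, the variable name `segnet`, and the 3 input channels (RGB images) are not stated in the text. With a 5x5 kernel and zero padding of 2, every layer preserves the spatial size, so the output holds one score per class for every pixel.

```python
import torch
from torch import nn


def conv_relu(in_ch, out_ch):
    """5x5 convolution with zero padding 2 (keeps height and width), then ReLU."""
    return [nn.Conv2d(in_ch, out_ch, kernel_size=5, padding=2), nn.ReLU()]


segnet = nn.Sequential(
    *conv_relu(3, 64),      # 3 input channels is an assumption (RGB input)
    *conv_relu(64, 256),
    *conv_relu(256, 512),
    *conv_relu(512, 256),
    *conv_relu(256, 64),
    nn.Conv2d(64, 5, kernel_size=5, padding=2),  # 5 class scores per pixel, no ReLU
)

criterion = nn.CrossEntropyLoss()  # accepts (N, C, H, W) scores and (N, H, W) labels
optimizer = torch.optim.Adam(segnet.parameters(), lr=0.001, weight_decay=0.00001)

# Shape check on a small random image (stand-in for a facade photo).
x = torch.randn(1, 3, 16, 16)
with torch.no_grad():
    scores = segnet(x)
pred = scores.argmax(dim=1)  # predicted class index for every pixel
```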

I used a 70-20-10 split for the training, validation, and test sets. As I will show below, although this vanilla CNN model is extremely simple, to my surprise it actually achieves acceptable results.
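A 70-20-10 split can be produced by shuffling the sample indices once and slicing them. The sketch below shows one way to do this; the function name `split_indices`, the fixed seed, and the dataset size of 1000 are assumptions for illustration, not details from the original experiment.

```python
import random


def split_indices(n_items, percents=(70, 20, 10), seed=0):
    """Shuffle indices 0..n_items-1 and split them into train/val/test lists."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)  # fixed seed keeps the split reproducible
    n_train = n_items * percents[0] // 100
    n_val = n_items * percents[1] // 100
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]      # the remainder goes to the test set
    return train, val, test


# Hypothetical dataset of 1000 images.
train_idx, val_idx, test_idx = split_indices(1000)
```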

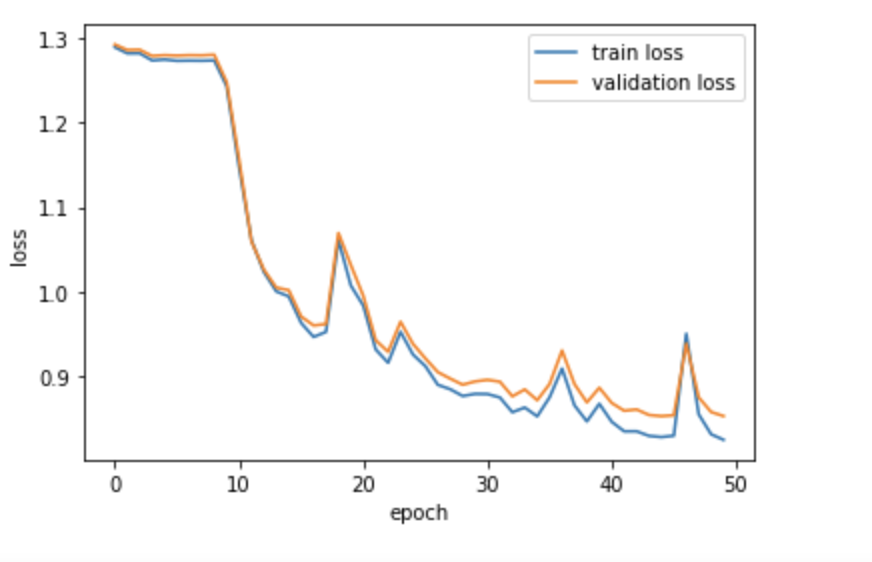

Section 2.2: Loss Curve

We also tracked the loss throughout training. After about 40 epochs, the validation loss begins to fluctuate and stops decreasing, which suggests the model may be starting to overfit, so I trained the model for only 50 epochs.
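The stopping point here was chosen by looking at the loss curve. One way to turn that kind of observation into a rule is a simple patience check on the validation loss, sketched below. The function `best_epoch`, the patience value, and the loss values are all hypothetical and are not taken from the actual training run.

```python
def best_epoch(val_losses, patience=10):
    """Return (epoch, loss) of the lowest validation loss seen before
    `patience` consecutive epochs pass without any improvement."""
    best_idx, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_idx, best_loss = epoch, loss
        elif epoch - best_idx >= patience:
            break  # validation loss has stopped improving
    return best_idx, best_loss


# Made-up losses: improvement stops after epoch 2, then the curve fluctuates.
val_losses = [1.0, 0.8, 0.7, 0.72, 0.71, 0.73, 0.74]
epoch, loss = best_epoch(val_losses, patience=3)
```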

Section 2.3: Model Performance

Here is how our model performs on each category:

| Class | AP Score |

| others | 0.6400833712306877 |

| facade | 0.7638179312442582 |

| pillar | 0.11012632195617077 |

| window | 0.7898640813673297 |

| balcony | 0.32036415495583426 |

| Average AP | 0.5248511721508562 |
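The "Average AP" row is the unweighted mean of the five per-class AP scores. The sketch below checks that arithmetic and also shows one common way to compute AP for a single class from per-pixel scores: the mean of the precision values at the rank of each true positive. The function `average_precision` and its toy inputs are illustrative assumptions; the report does not state which AP implementation was used.

```python
def average_precision(scores, labels):
    """AP for one class: mean precision at the rank of each positive sample."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits = 0
    precisions = []
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / hits if hits else 0.0


# Toy example (hypothetical): positives are ranked 1st and 3rd.
toy_ap = average_precision([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])

# Per-class AP scores copied from the table above.
ap_scores = {
    "others": 0.6400833712306877,
    "facade": 0.7638179312442582,
    "pillar": 0.11012632195617077,
    "window": 0.7898640813673297,
    "balcony": 0.32036415495583426,
}
mean_ap = sum(ap_scores.values()) / len(ap_scores)
```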

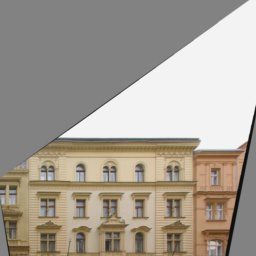

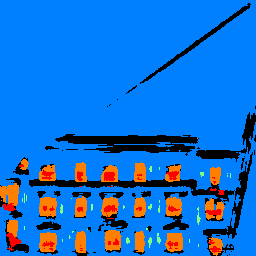

Section 2.4: Sample Results

Here are some sample test images, including an image of my own.

Consistent with the per-class AP scores, the model does best at classifying pixels that belong to a window. It did poorly on pillars.
