CS 194 Project 4: Classification and Segmentation

Richard Chen

In this project, we use convolutional neural networks (CNNs) to solve two classic computer vision problems: image classification and semantic segmentation.

Part 1: Image Classification

In this part, we implement a CNN to classify images from the Fashion-MNIST dataset, which contains 10 classes, 60,000 training and validation images, and 10,000 test images. My network consists of 2 convolutional layers with 32 channels, followed by 2 fully connected layers. I trained it for 10 epochs using the Adam optimizer and cross-entropy loss.
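A minimal PyTorch sketch of a network matching this description follows. The 3x3 kernels with padding 1, ReLU activations, 2x2 max pooling after each convolution, the 128-unit hidden layer, the learning rate, and the names `FashionCNN` and `train_step` are all assumptions for illustration, not details taken from this write-up.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class FashionCNN(nn.Module):
    """Two 32-channel conv layers followed by two fully connected layers.

    Assumed (not stated in the write-up): 3x3 kernels with padding 1,
    ReLU activations, 2x2 max pooling after each conv, 128 hidden units.
    """

    def __init__(self, num_classes: int = 10, hidden: int = 128):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 32, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(32, 32, kernel_size=3, padding=1)
        # 28x28 input -> 14x14 after the first pool -> 7x7 after the second
        self.fc1 = nn.Linear(32 * 7 * 7, hidden)
        self.fc2 = nn.Linear(hidden, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = torch.flatten(x, 1)
        x = F.relu(self.fc1(x))
        return self.fc2(x)  # raw logits; cross_entropy applies log-softmax itself


def train_step(model, optimizer, images, labels):
    """One optimization step with cross-entropy loss; returns the loss value."""
    model.train()
    optimizer.zero_grad()
    loss = F.cross_entropy(model(images), labels)
    loss.backward()
    optimizer.step()
    return loss.item()


if __name__ == "__main__":
    torch.manual_seed(0)
    model = FashionCNN()
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)  # lr is an assumption
    images = torch.randn(8, 1, 28, 28)  # stand-in batch; real code would use a DataLoader
    labels = torch.randint(0, 10, (8,))
    print(model(images).shape)  # torch.Size([8, 10])
    print(train_step(model, optimizer, images, labels))
```

Each epoch would call `train_step` on every mini-batch from a Fashion-MNIST `DataLoader`. Returning raw logits rather than probabilities lets `F.cross_entropy` apply the log-softmax internally in a numerically stable way.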

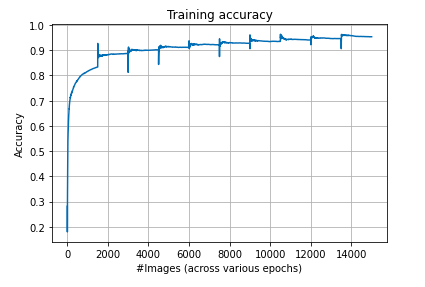

Training accuracy

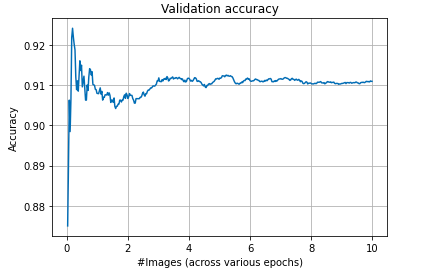

Validation accuracy

Shown above are the training and validation accuracies recorded during training. Training accuracy rises steadily over time, as expected. Validation accuracy jumps up early, then levels off at around 90%. Because it does not fall while training accuracy keeps climbing, the model does not appear to be overfitting significantly within these 10 epochs.

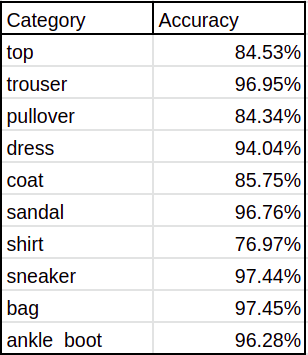

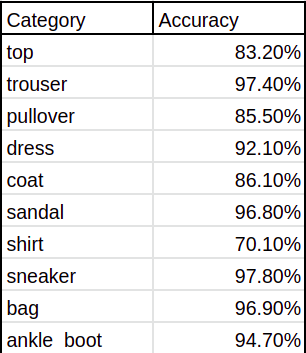

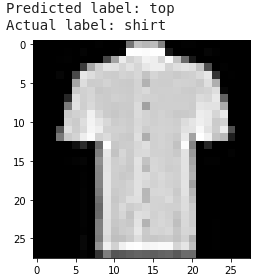

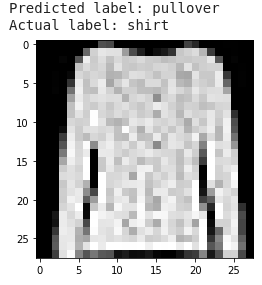

Now let's look at the per-class accuracies on the validation and test sets. These were computed from the confusion matrix by taking each diagonal entry as a fraction of its row total, i.e., the share of each class that was classified correctly. The hardest classes to classify are shirts and tops.

Class accuracy of validation set

Class accuracy of test set
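The per-class accuracy computation described above can be sketched with NumPy as follows. The helper names are hypothetical. Rows of the matrix index the true class and columns the predicted class, so dividing each diagonal entry by its row sum gives the fraction of that class predicted correctly.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, num_classes):
    """Count matrix where rows index the true class and columns the predicted class."""
    cm = np.zeros((num_classes, num_classes), dtype=np.int64)
    # np.add.at accumulates correctly even when (row, col) pairs repeat
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def per_class_accuracy(cm):
    """Diagonal of the row-normalized confusion matrix (per-class recall)."""
    totals = cm.sum(axis=1)
    # Classes with no samples get 0 instead of a division-by-zero warning
    return np.divide(np.diag(cm), totals, out=np.zeros(len(cm)), where=totals > 0)


if __name__ == "__main__":
    y_true = [0, 0, 1, 1, 2, 2]
    y_pred = [0, 1, 1, 1, 2, 0]
    cm = confusion_matrix(y_true, y_pred, num_classes=3)
    print(per_class_accuracy(cm))  # [0.5 1.  0.5]
```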

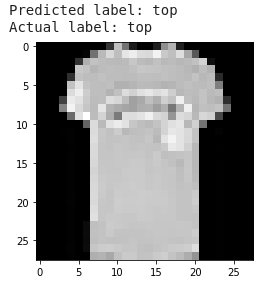

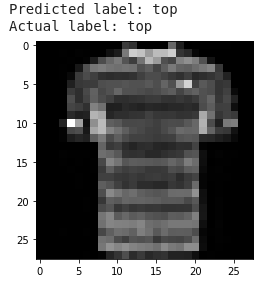

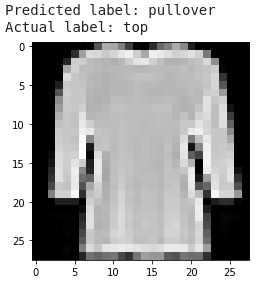

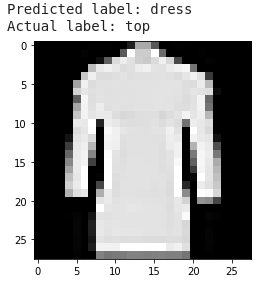

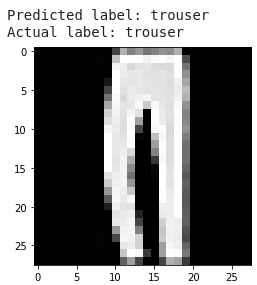

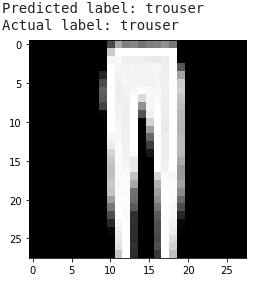

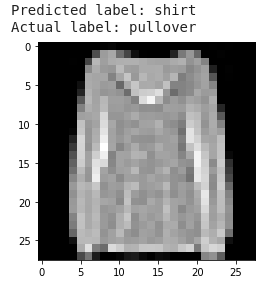

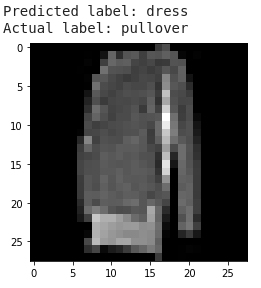

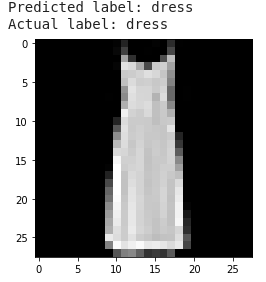

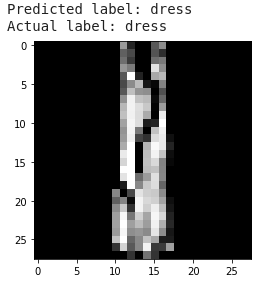

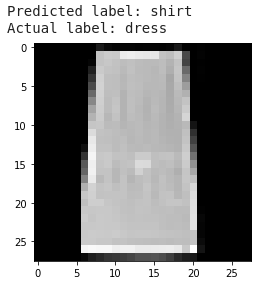

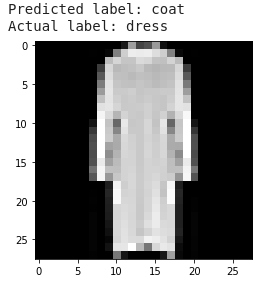

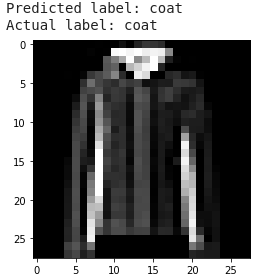

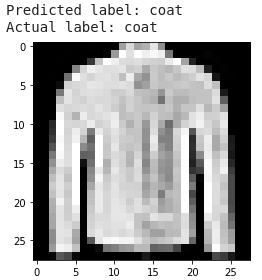

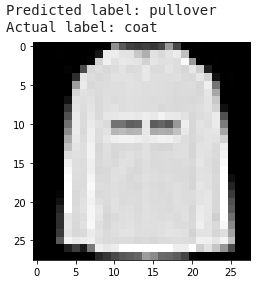

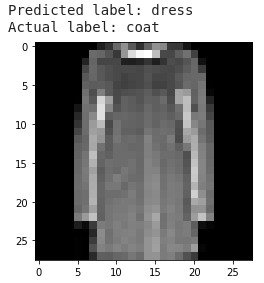

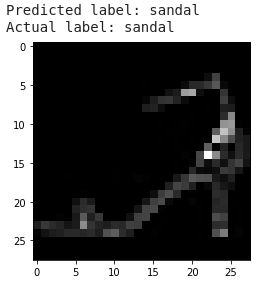

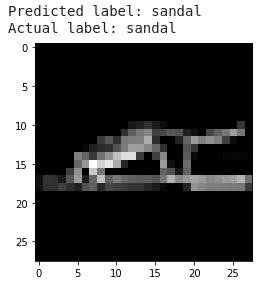

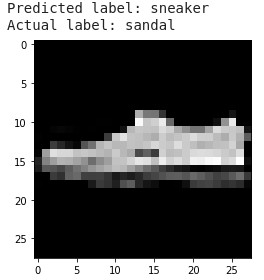

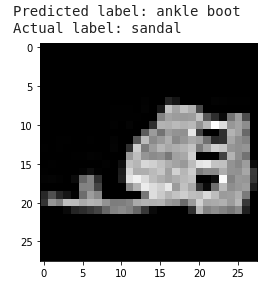

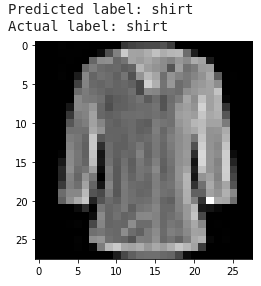

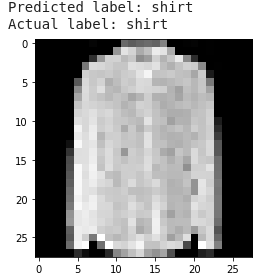

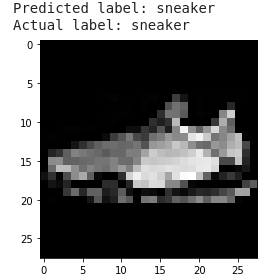

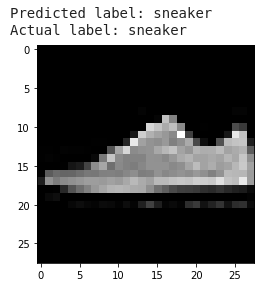

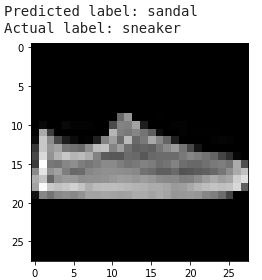

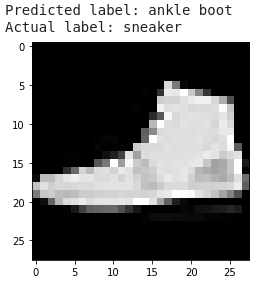

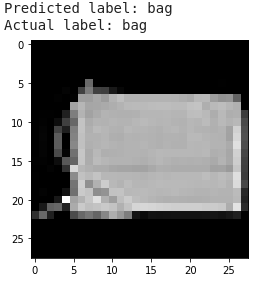

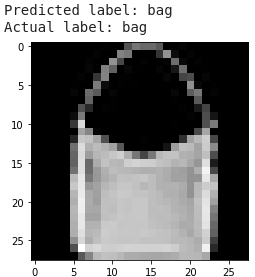

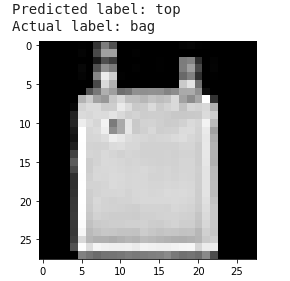

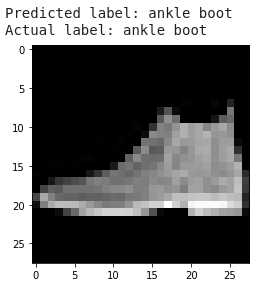

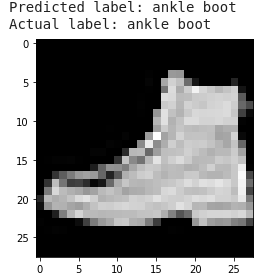

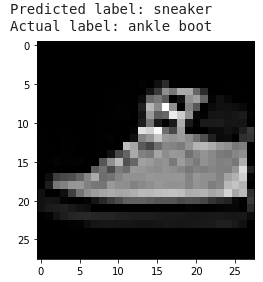

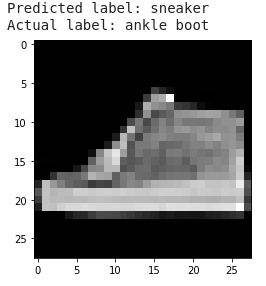

Now we can look at some of our results.

Tops

Trouser

Pullover

Dress

Coat

Sandal

Shirt

Sneaker

Bag

Ankle Boot

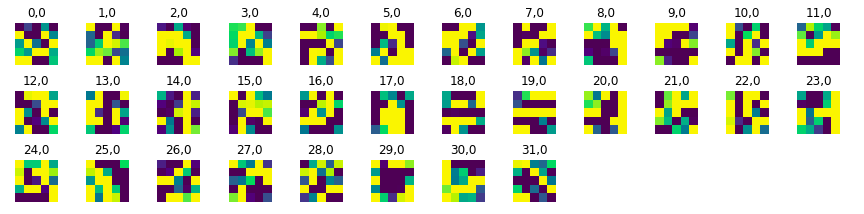

We can also visualize the learned convolutional filters, shown below.
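One way to build such a visualization is sketched below in NumPy, under a few assumptions: the filters come from a PyTorch-style weight array of shape `(out_channels, in_channels, kH, kW)`, only the first input channel is shown (the only one for grayscale Fashion-MNIST), and each filter is min-max normalized independently. `filter_grid` is a hypothetical helper.

```python
import numpy as np


def filter_grid(weights, ncols=8, pad=1):
    """Tile conv filters of shape (out_ch, in_ch, kH, kW) into one 2-D image.

    Only the first input channel is shown, and each filter is min-max
    normalized to [0, 1] on its own so that weak filters stay visible.
    Padding pixels between tiles are set to 1 (white).
    """
    w = np.asarray(weights, dtype=np.float64)[:, 0]  # (out_ch, kH, kW)
    n, kh, kw = w.shape
    nrows = -(-n // ncols)  # ceiling division
    grid = np.ones((nrows * (kh + pad) + pad, ncols * (kw + pad) + pad))
    for i, f in enumerate(w):
        lo, hi = f.min(), f.max()
        f = (f - lo) / (hi - lo) if hi > lo else np.zeros_like(f)
        r, c = divmod(i, ncols)
        top, left = pad + r * (kh + pad), pad + c * (kw + pad)
        grid[top:top + kh, left:left + kw] = f
    return grid
```

For a PyTorch `nn.Conv2d` layer `conv`, `filter_grid(conv.weight.detach().cpu().numpy())` returns an image that Matplotlib can display with `plt.imshow(grid, cmap="gray")`.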

Part 2: Segmentation

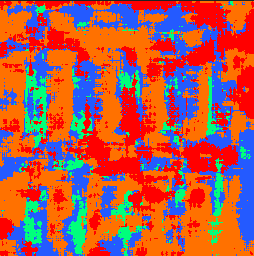

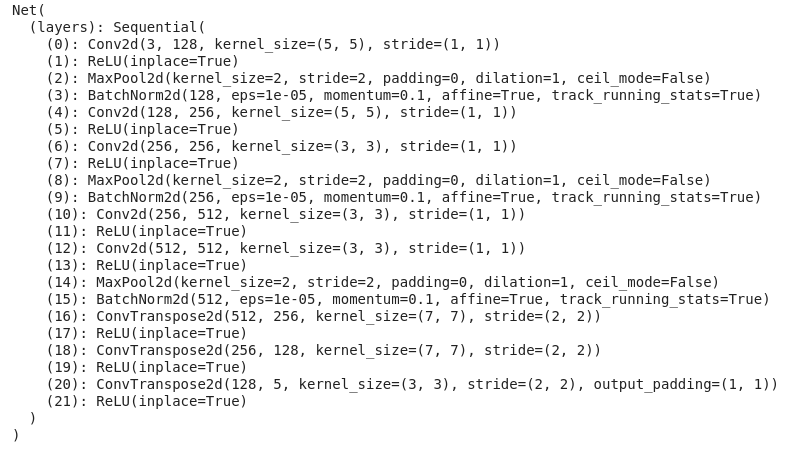

CNNs can also be used for image segmentation, i.e., pixel-by-pixel classification. We train on the Mini Facade dataset for 35 epochs using the Adam optimizer with a learning rate of 1e-3 and a weight decay of 1e-5. The architecture, shown below, is based on U-Net with slight modifications: I added some extra fully connected layers and included batch normalization layers to improve the AP score.

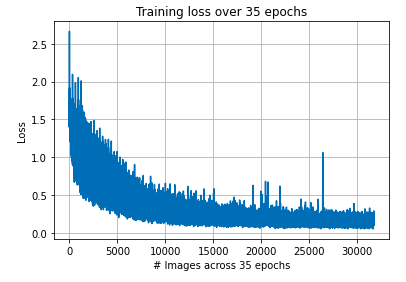

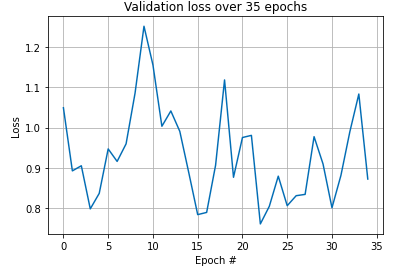
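A compact U-Net-style sketch with batch normalization is given below, using the optimizer settings stated above. The depth, channel widths, 2x2 transposed-convolution upsampling, the assumed five output classes, and the pixel-wise cross-entropy loss are illustrative assumptions. The extra fully connected layers mentioned in the text are not reproduced, and `TinyUNet` is a hypothetical name.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def conv_bn_relu(in_ch, out_ch):
    """Two 3x3 convolutions, each followed by batch norm and ReLU."""
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, 3, padding=1, bias=False),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(inplace=True),
        nn.Conv2d(out_ch, out_ch, 3, padding=1, bias=False),
        nn.BatchNorm2d(out_ch),
        nn.ReLU(inplace=True),
    )


class TinyUNet(nn.Module):
    """Two-level U-Net: encoder, bottleneck, decoder with skip connections.

    Depth, widths, and num_classes=5 are illustrative assumptions.
    Input height and width must be divisible by 4.
    """

    def __init__(self, in_ch=3, num_classes=5, base=32):
        super().__init__()
        self.enc1 = conv_bn_relu(in_ch, base)
        self.enc2 = conv_bn_relu(base, base * 2)
        self.pool = nn.MaxPool2d(2)
        self.bottleneck = conv_bn_relu(base * 2, base * 4)
        self.up2 = nn.ConvTranspose2d(base * 4, base * 2, 2, stride=2)
        self.dec2 = conv_bn_relu(base * 4, base * 2)  # upsampled + skip channels
        self.up1 = nn.ConvTranspose2d(base * 2, base, 2, stride=2)
        self.dec1 = conv_bn_relu(base * 2, base)
        self.head = nn.Conv2d(base, num_classes, 1)  # per-pixel class logits

    def forward(self, x):
        e1 = self.enc1(x)                    # H x W
        e2 = self.enc2(self.pool(e1))        # H/2 x W/2
        b = self.bottleneck(self.pool(e2))   # H/4 x W/4
        d2 = self.dec2(torch.cat([self.up2(b), e2], dim=1))
        d1 = self.dec1(torch.cat([self.up1(d2), e1], dim=1))
        return self.head(d1)


if __name__ == "__main__":
    model = TinyUNet()
    # Optimizer settings from the text: Adam, lr=1e-3, weight decay 1e-5
    optimizer = torch.optim.Adam(model.parameters(), lr=1e-3, weight_decay=1e-5)
    x = torch.randn(2, 3, 64, 64)
    target = torch.randint(0, 5, (2, 64, 64))
    loss = F.cross_entropy(model(x), target)  # assumed pixel-wise cross-entropy
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    print(model(x).shape)  # torch.Size([2, 5, 64, 64])
```

The skip connections concatenate encoder features with upsampled decoder features, which is why each decoder block takes twice the channels its upsampling layer outputs.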

Below we also show the training and validation loss over the course of training. The validation loss stabilizes after a couple of epochs.

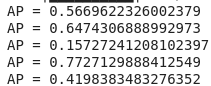
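Recording training and validation loss once per epoch can be sketched as follows. `run_epoch` is a hypothetical helper and pixel-wise cross-entropy is an assumed loss. The detail worth noting is that validation runs in eval mode, so batch normalization uses its running statistics, and without gradient tracking.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def run_epoch(model, batches, optimizer=None):
    """Mean pixel-wise cross-entropy over an iterable of (image, mask) batches.

    Trains when an optimizer is given; otherwise evaluates in eval mode
    (batch norm uses running statistics) with gradients disabled.
    """
    training = optimizer is not None
    model.train(training)
    total, count = 0.0, 0
    with torch.set_grad_enabled(training):
        for images, masks in batches:
            loss = F.cross_entropy(model(images), masks)
            if training:
                optimizer.zero_grad()
                loss.backward()
                optimizer.step()
            # Weight by batch size so a short final batch is averaged correctly
            total += loss.item() * images.size(0)
            count += images.size(0)
    return total / max(count, 1)
```

A 35-epoch loop would append `run_epoch(model, train_loader, optimizer)` and `run_epoch(model, val_loader)` to two lists and plot them against the epoch index.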

The per-class AP scores are shown below; the average AP is 0.5128. The worst-performing class is pillars/columns. This makes intuitive sense, since columns are hard to distinguish from plain wall space, such as the gaps between windows.
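Per-class AP for segmentation treats every pixel as a ranked prediction: pixels are sorted by their score for a class, and precision is averaged over the ranks at which true pixels of that class appear. The NumPy sketch below implements that definition; the helper names and the `(N, C, H, W)` score layout are assumptions. For untied scores it should agree with scikit-learn's `average_precision_score`.

```python
import numpy as np


def average_precision(labels, scores):
    """AP for one class: mean precision at the rank of each positive.

    Equivalent to sum_n (R_n - R_{n-1}) * P_n over samples ranked by score;
    tied scores are ordered by position rather than grouped.
    """
    labels = np.asarray(labels, dtype=bool)
    order = np.argsort(-np.asarray(scores, dtype=np.float64), kind="stable")
    hits = labels[order]
    if not hits.any():
        return float("nan")  # AP is undefined when a class has no positives
    precision = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    return float(precision[hits].mean())


def segmentation_aps(labels, probs):
    """labels: (N, H, W) ints; probs: (N, C, H, W) scores. Returns per-class AP."""
    labels = np.asarray(labels).ravel()
    probs = np.asarray(probs)
    num_classes = probs.shape[1]
    # (C, N*H*W), flattened in the same N, H, W order as labels
    flat = np.moveaxis(probs, 1, 0).reshape(num_classes, -1)
    return [average_precision(labels == c, flat[c]) for c in range(num_classes)]
```

`np.nanmean(segmentation_aps(labels, probs))` then gives the mean AP while skipping any class with no positive pixels.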