CS 194 Project 5 Part I+II

James Li

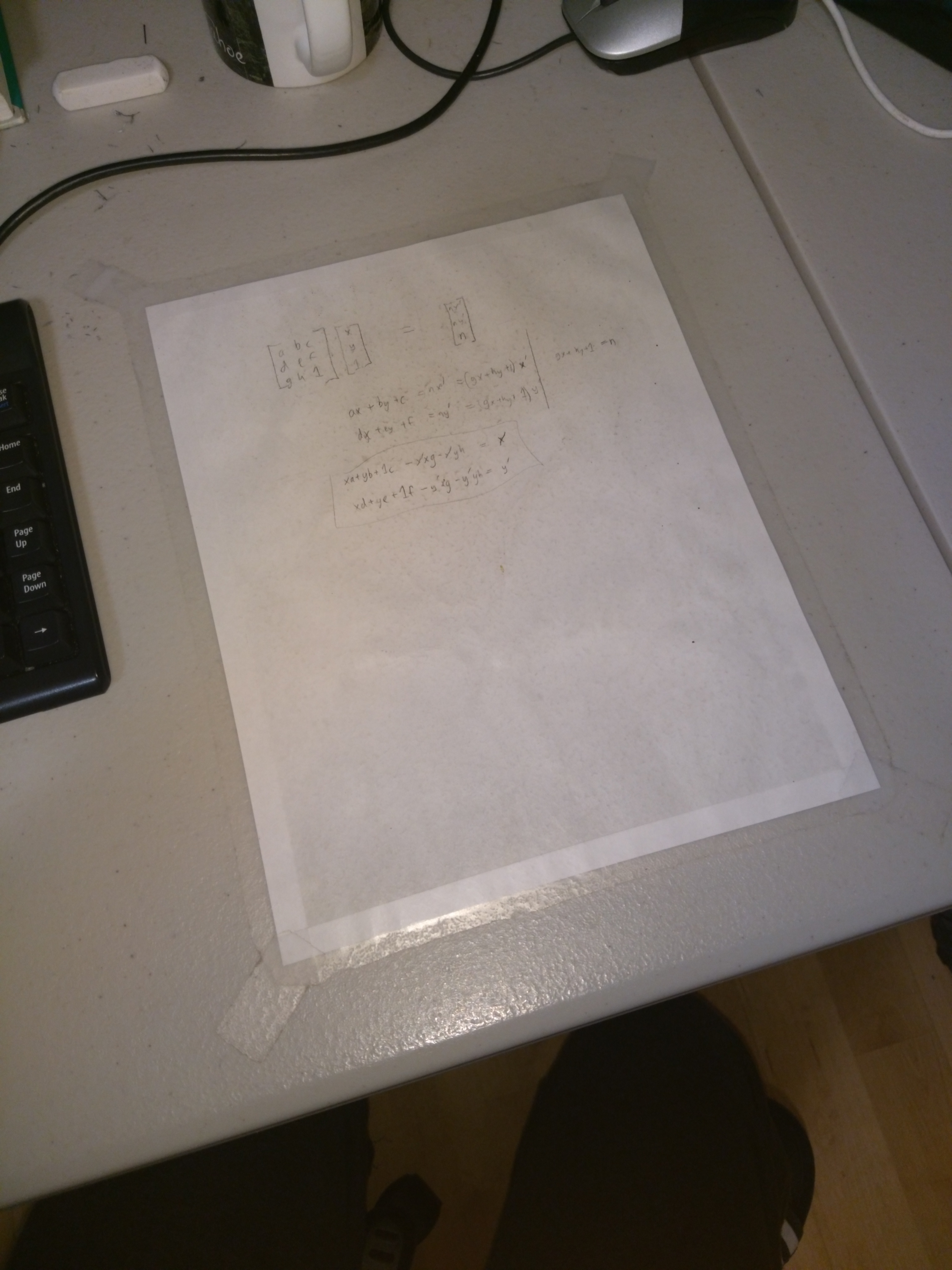

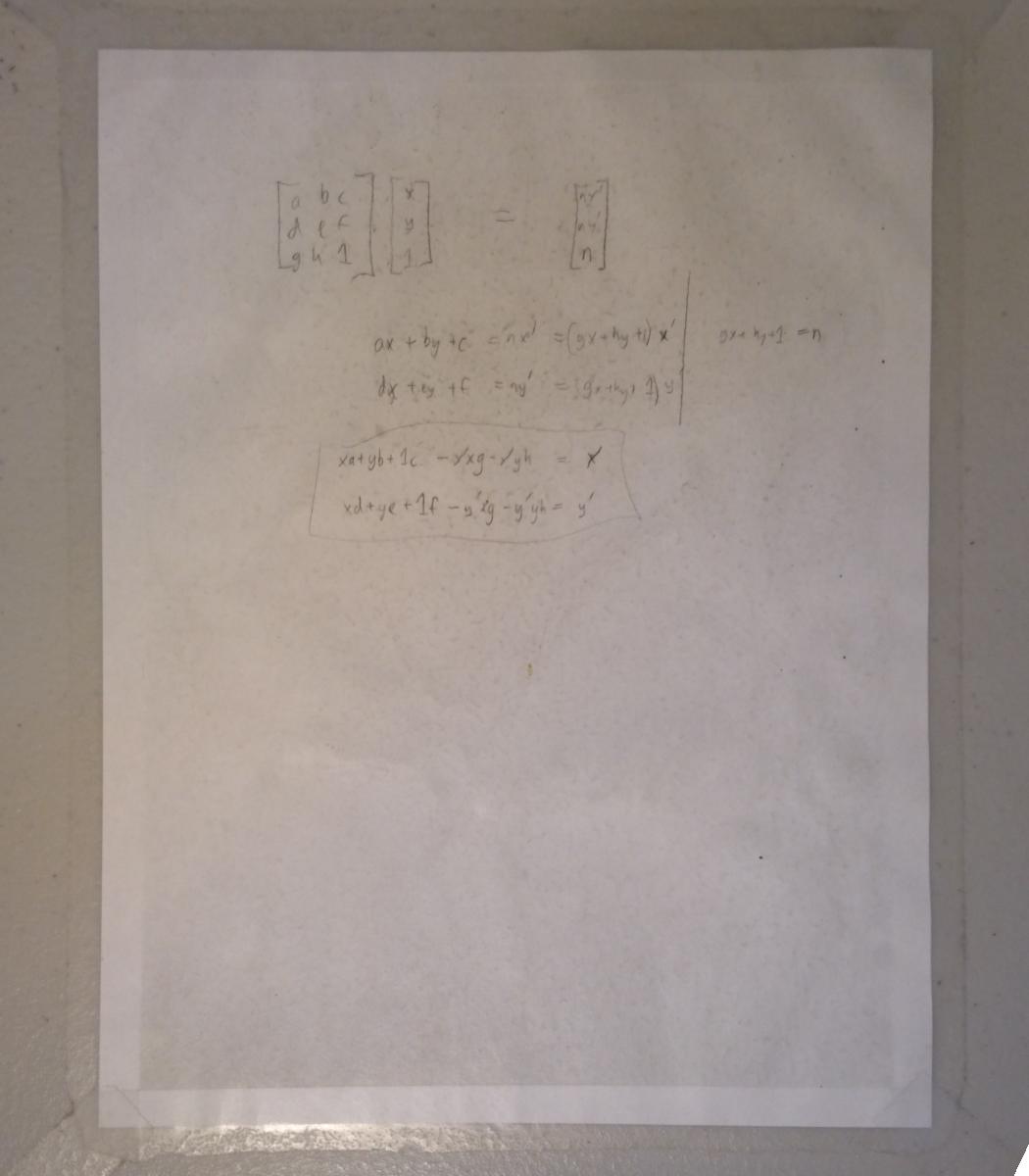

Rectification

I used the 4 corners of the sheet of paper, and transformed them to an 850x1100 pixel region.

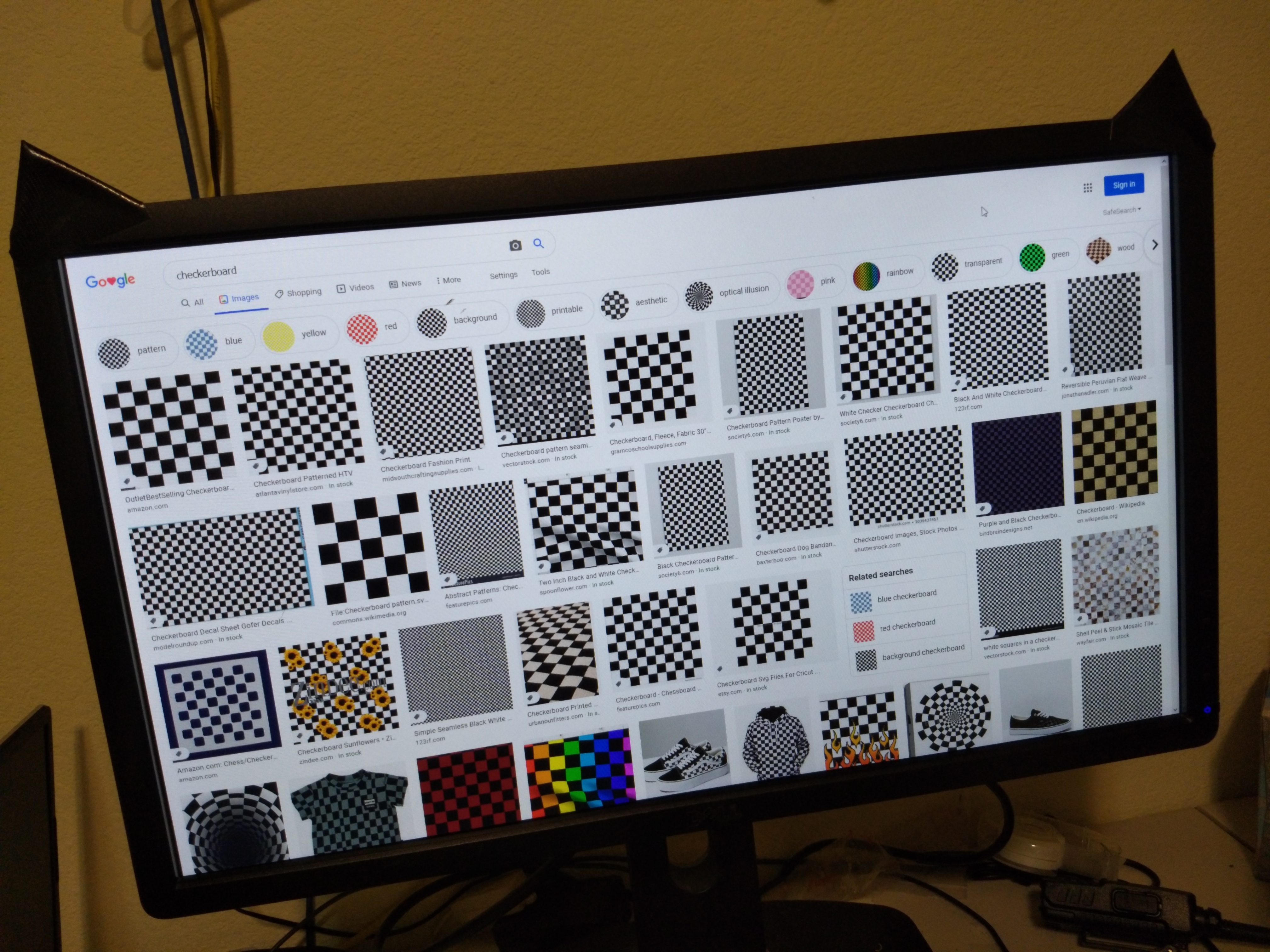

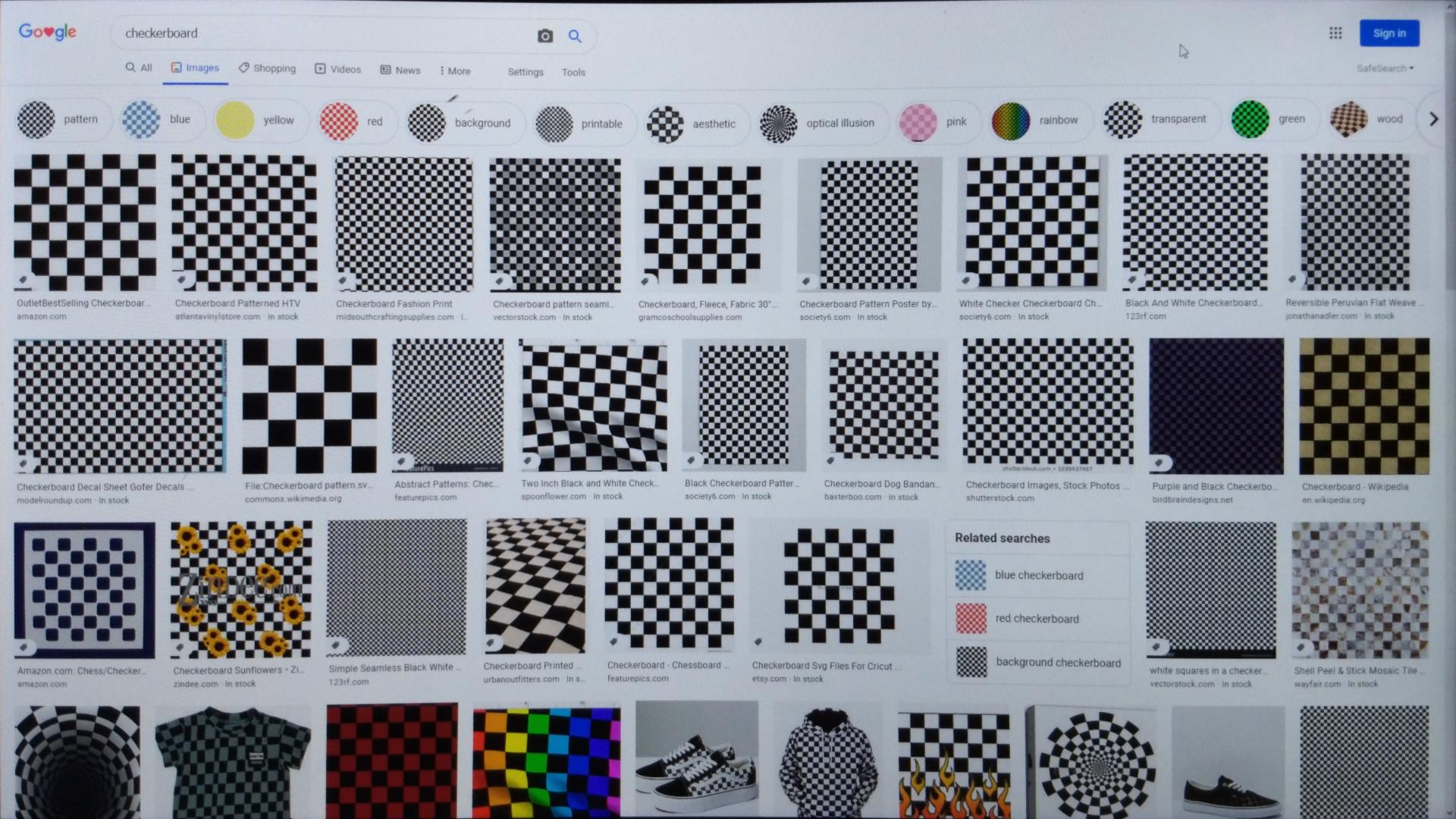

Similarly I rectified an image of my 1920x1080 computer monitor (🐱) and compared it with a screenshot. Comparing the two revealed that my phone camera had some degree of radial distortion.
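The rectification above comes down to solving for a homography from four point pairs. Here is a minimal NumPy sketch of that step, assuming the paper corners were clicked by hand. The corner coordinates and the function names are made up for illustration and are not taken from my actual code.

```python
import numpy as np

def homography_from_4_points(src, dst):
    """Solve for the 3x3 homography H that maps each src (x, y) to dst (u, v).

    H[2, 2] is fixed to 1, which leaves 8 unknowns; 4 point pairs give
    exactly 8 linear equations.
    """
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h1*x + h2*y + h3) / (h7*x + h8*y + 1), rearranged to be linear in h
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.extend([u, v])
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)

def apply_homography(H, pts):
    """Map an (N, 2) array of points through H, dividing out the scale."""
    pts = np.asarray(pts, dtype=float)
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

# Hypothetical clicked corners of the sheet of paper (pixels in the photo)
paper_corners = [(412, 310), (1105, 355), (1180, 1260), (330, 1190)]
# Target: an 850x1100 pixel rectangle
target_corners = [(0, 0), (850, 0), (850, 1100), (0, 1100)]
H = homography_from_4_points(paper_corners, target_corners)
```

To produce the rectified image, every output pixel would be mapped through the inverse of `H` and sampled from the photo.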

Mosaic

The images are blended along a curved seam. For each image, I generate a "bi-parabolic window" by assigning one parabola to x and one to y, and multiplying them together. This window tapers smoothly and reaches 0 near the edges. For every pixel, I sample from the image with the higher window value.
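A rough sketch of the window and the "pick the higher value" rule, using NumPy only. The exact place where the parabola hits zero (half a pixel outside the border here) is an assumption, and `pick_source` expects the windows to have already been warped onto a shared canvas, which is not shown.

```python
import numpy as np

def biparabolic_window(height, width):
    """Product of one parabola along y and one along x.

    Each parabola is 1 at the image center and reaches 0 half a pixel
    outside the border, so edge pixels keep a small positive weight.
    """
    y = (np.arange(height) + 0.5) / height * 2 - 1   # pixel centers in (-1, 1)
    x = (np.arange(width) + 0.5) / width * 2 - 1
    return np.outer(1 - y**2, 1 - x**2)

def pick_source(windows):
    """Per-pixel index of the image with the highest window value.

    windows: list of (H, W) arrays on a shared canvas, 0 where an
    image has no coverage.
    """
    return np.argmax(np.stack(windows), axis=0)

# Toy canvas: two 4x6 images overlapping by two columns on a 4x10 canvas
canvas_a = np.zeros((4, 10))
canvas_b = np.zeros((4, 10))
canvas_a[:, 0:6] = biparabolic_window(4, 6)
canvas_b[:, 4:10] = biparabolic_window(4, 6)
choice = pick_source([canvas_a, canvas_b])
```

In this toy case the seam is a straight vertical line; with perspective-warped windows the boundary between the two regions curves.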

Blending the images is a bit more complex. I compute a boolean mask of which image to sample from at each pixel and blur it to produce a weight field ranging from 0 to 1, but I exclude any image whose alpha channel is zero at that pixel.

The ghosting is mostly manageable with a 4px blur sigma. A 2-level Laplacian pyramid would produce better results, but these results are mostly good enough.
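The blending described above could look roughly like the following for two images. This is a sketch, not my exact code: the hand-rolled `gaussian_blur` stands in for whatever blur function is available, and the renormalization at the end is one assumed way of "ignoring" an image where its alpha is zero.

```python
import numpy as np

def gaussian_blur(field, sigma):
    """Separable Gaussian blur of a 2-D array, with edge padding."""
    radius = int(3 * sigma + 0.5)
    t = np.arange(-radius, radius + 1)
    kernel = np.exp(-t**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    padded = np.pad(field, radius, mode="edge")
    # Blur along rows first, then along columns
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")

def blend_pair(img_a, img_b, alpha_a, alpha_b, choose_b, sigma=4.0):
    """Blend two warped images across a blurred seam.

    img_a, img_b:     (H, W, 3) float images on a shared canvas
    alpha_a, alpha_b: (H, W) coverage, 0 where the image has no data
    choose_b:         (H, W) bool, True where image B wins the window test
    """
    weight_b = gaussian_blur(choose_b.astype(float), sigma)  # field in [0, 1]
    weight_a = 1.0 - weight_b
    # Ignore an image wherever its alpha is zero, then renormalize
    weight_a = weight_a * (alpha_a > 0)
    weight_b = weight_b * (alpha_b > 0)
    total = weight_a + weight_b
    total = np.where(total > 0, total, 1.0)   # avoid 0/0 outside both images
    return (img_a * weight_a[..., None] + img_b * weight_b[..., None]) / total[..., None]
```

A larger `sigma` widens the transition band, which hides exposure differences better but makes ghosting more visible where the images disagree.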

Tell us what you've learned

Yet again I learned how much this class frustrates me. Maybe the importance of just submitting something (even if it's incomplete) before I burn out completely.

Part II

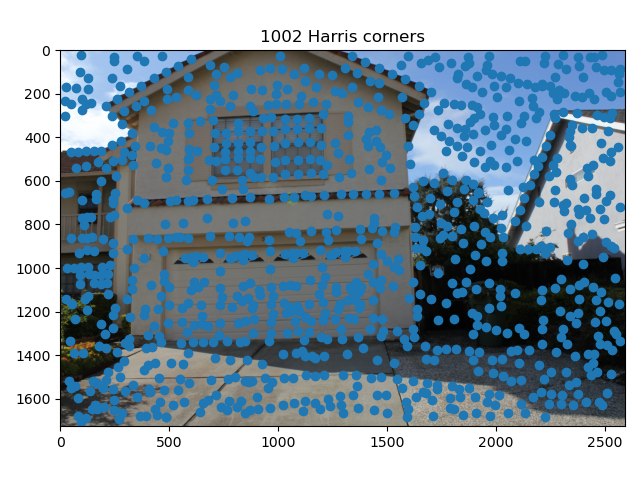

Step 1: Corners

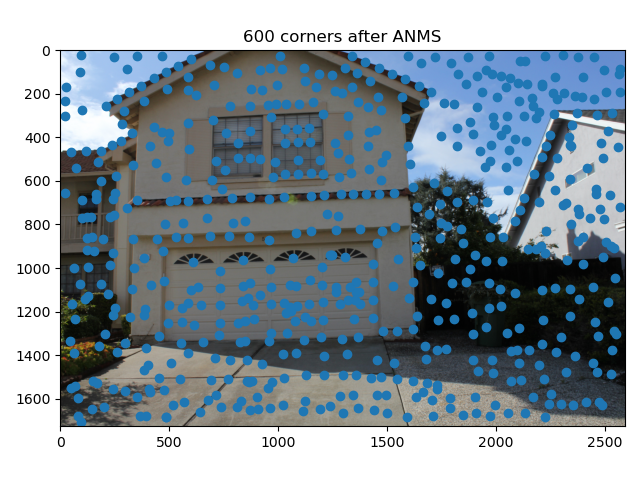

I computed Harris corners on the average of all 3 RGB channels.

I altered the Harris corner detector code to use a somewhat wider standard deviation. This reduced the number of peaks present in the first place, possibly speeding up the code.

I implemented Adaptive Non-Maximal Suppression, then picked out a fixed number of features with maximal suppression radius. I kept c_robust at 0.9.
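For reference, a compact sketch of Adaptive Non-Maximal Suppression in NumPy. It builds the full pairwise distance matrix, so it assumes the number of candidate corners is small enough for an N x N array to fit in memory; the function name and argument layout are illustrative.

```python
import numpy as np

def anms(coords, strengths, num_keep, c_robust=0.9):
    """Return indices of the num_keep corners with the largest suppression radius.

    A corner's suppression radius is its distance to the nearest corner
    that is sufficiently stronger: strength_i < c_robust * strength_j.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    diff = coords[:, None, :] - coords[None, :, :]
    dist2 = (diff ** 2).sum(axis=-1)
    # dominated[i, j] is True when corner j suppresses corner i
    dominated = strengths[:, None] < c_robust * strengths[None, :]
    radius2 = np.where(dominated, dist2, np.inf).min(axis=1)
    # Largest radius first; the global maximum has an infinite radius
    order = np.argsort(-radius2, kind="stable")
    return order[:num_keep]

# Toy example: two clusters of corners along a line
picked = anms([(0, 0), (1, 0), (10, 0), (11, 0)], [10, 5, 8, 1], num_keep=2)
```

In the toy example the strongest corner of each cluster is kept, which is the point of ANMS: the chosen corners are spread out instead of bunching up around the strongest responses.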

Step 2: Feature descriptors

I extracted features on the average of all 3 RGB channels.

I blurred the images at a sigma of 5/2, before sampling every 5 pixels.

I decided to add back 0.2 of the original mean variation, after normalizing for bias/gain. It would be silly to match similar patterns found in sky vs. darkness.
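A sketch of the descriptor extraction, assuming the input image has already been averaged over RGB and blurred. Sampling a 40x40 window every 5 pixels gives the 8x8 = 64 values. The "add back" step is written here as adding `mean_weight` times the patch's raw mean to the normalized values; that is only one possible reading of the description above and may not match the real formula.

```python
import numpy as np

def describe(blurred, row, col, spacing=5, size=8, mean_weight=0.2):
    """Return a length-64 descriptor around (row, col), or None near the border.

    blurred: 2-D grayscale image, already blurred (sigma of about 5/2).
    """
    half = spacing * size // 2              # 20 pixels on each side
    if (row - half < 0 or col - half < 0 or
            row + half > blurred.shape[0] or col + half > blurred.shape[1]):
        return None
    patch = blurred[row - half:row + half:spacing,
                    col - half:col + half:spacing].astype(float)
    mean, std = patch.mean(), patch.std()
    normalized = (patch - mean) / (std + 1e-8)   # bias/gain normalization
    # ASSUMPTION: "add back" modeled as a fraction of the raw patch mean
    return (normalized + mean_weight * mean).ravel()
```

With `mean_weight=0` this reduces to plain bias/gain normalization, where a bright patch and a dark patch with the same texture produce identical descriptors.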

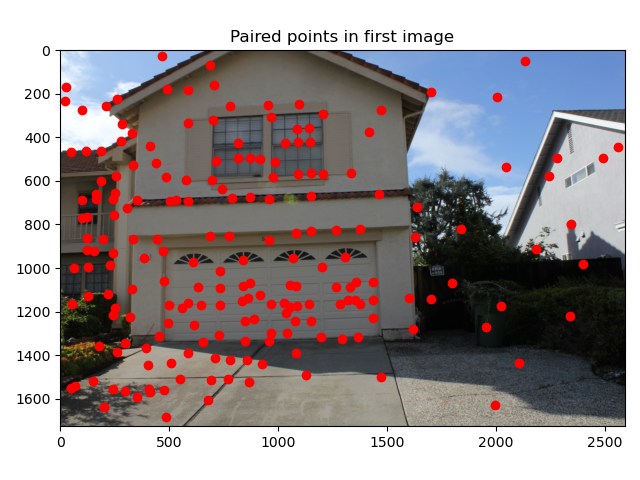

Step 3: Feature matching

I interpreted every feature as a length-64 real vector. For each feature in the first image, I found its two nearest neighbors in the second image, then kept the 200 features with the lowest ratio (1nn_error / 2nn_error). This strategy was good enough and I didn't feel like experimenting with more complex strategies.
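The matching step can be sketched as below. Using squared Euclidean distance as the "error" is an assumption, as are the function and variable names; the brute-force distance matrix is fine for a few thousand features per image.

```python
import numpy as np

def match_features(desc_a, desc_b, num_keep=200):
    """Match descriptors from image A to image B with a nearest-neighbor ratio test.

    desc_a: (Na, 64) array, desc_b: (Nb, 64) array with Nb >= 2.
    Returns (index_a, index_b, ratio) for the num_keep lowest ratios.
    """
    # Squared Euclidean distance between every pair of descriptors
    d2 = ((desc_a[:, None, :] - desc_b[None, :, :]) ** 2).sum(axis=-1)
    nearest_two = np.argsort(d2, axis=1)[:, :2]
    rows = np.arange(len(desc_a))
    err_1nn = d2[rows, nearest_two[:, 0]]
    err_2nn = d2[rows, nearest_two[:, 1]]
    ratio = err_1nn / np.maximum(err_2nn, 1e-12)
    # Keep the features whose best match is most clearly better than the runner-up
    best = np.argsort(ratio, kind="stable")[:num_keep]
    return best, nearest_two[best, 0], ratio[best]
```

Keeping a fixed number of matches, rather than thresholding the ratio, means the count passed on to RANSAC stays the same for every image pair.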

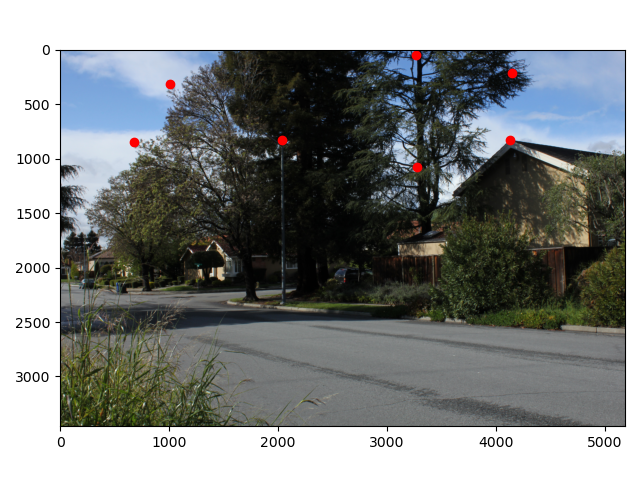

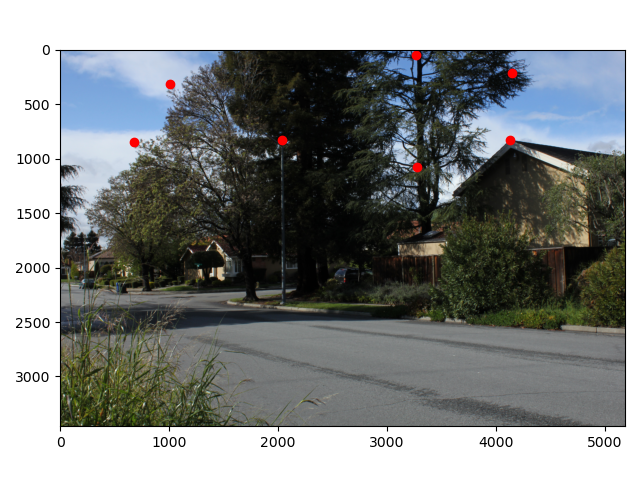

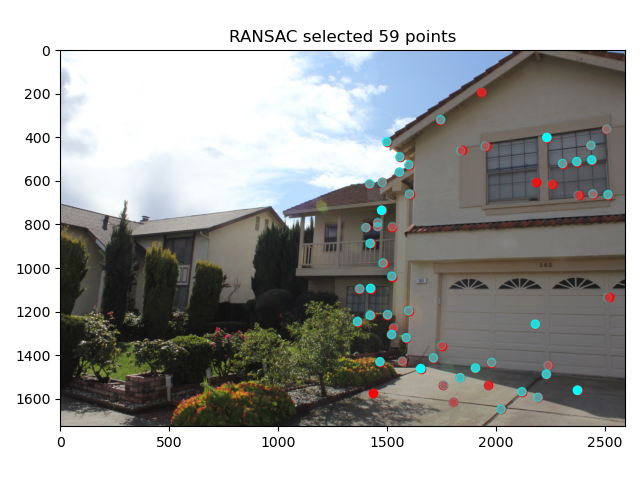

Step 4: RANSAC

I implemented RANSAC with a tolerance of 0.001 * the largest approximate image dimension (estimated from the extent of the feature points rather than from the image itself; it's a dirty hack but good enough). For large images, this works out to more than 1.5 pixels.
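A plain RANSAC loop with this kind of relative tolerance might look like the sketch below. The iteration count, the seed, and the SVD-based fit are assumptions made for illustration, and the final step here is an ordinary unweighted refit on the inliers.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography: stack two equations per pair, take the SVD null vector."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    _, _, vt = np.linalg.svd(np.array(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def project(H, pts):
    """Map (N, 2) points through H."""
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, rel_tol=0.001, iters=500, seed=0):
    """Return (H, inlier_mask) for matched points src -> dst."""
    rng = np.random.default_rng(seed)
    src, dst = np.asarray(src, dtype=float), np.asarray(dst, dtype=float)
    # Approximate the image size from the extent of the feature points
    approx_size = (dst.max(axis=0) - dst.min(axis=0)).max()
    tol = rel_tol * approx_size
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(H, src) - dst, axis=1)
        inliers = err < tol          # NaN errors compare False and are rejected
        if inliers.sum() > best.sum():
            best = inliers
    # Refit on every inlier of the best model
    return fit_homography(src[best], dst[best]), best
```

Because the tolerance scales with the point extent, the same `rel_tol` behaves similarly on full-size and downscaled copies of the same images.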

When visualizing RANSAC on two poorly aligned images, I noticed it chose well-fitting points from a small portion of the two images' overlap, instead of compromising to find a fit which made the entire panorama acceptable.

I chose to modify RANSAC. The final least-squares fit also includes points that lie further from an ideal match, but with reduced weight. Setting the tolerance to 0.01 worked well for images with significant camera translation. In the end, though, I set the tolerance to 0.002 (barely different from regular RANSAC) to reduce issues in well-aligned images.
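The weighted final fit can be sketched as a weighted least-squares homography. The falloff in `soft_weights` is a hypothetical choice made up for this example (the actual weighting curve is not reproduced here); only the idea of down-weighting poorer matches comes from the description above.

```python
import numpy as np

def soft_weights(errors, tol):
    """Hypothetical falloff: 1 for a perfect match, 0.5 at err == tol, then toward 0."""
    errors = np.asarray(errors, dtype=float)
    return 1.0 / (1.0 + (errors / tol) ** 2)

def weighted_homography(src, dst, weights):
    """Weighted least-squares homography with H[2, 2] fixed to 1.

    Each pair's two equations are scaled by sqrt(weight), so its squared
    residual counts `weight` times in the least-squares objective.
    """
    rows, rhs = [], []
    for (x, y), (u, v), w in zip(src, dst, weights):
        s = np.sqrt(w)
        rows.append([s * x, s * y, s, 0, 0, 0, -s * u * x, -s * u * y])
        rows.append([0, 0, 0, s * x, s * y, s, -s * v * x, -s * v * y])
        rhs.extend([s * u, s * v])
    h, *_ = np.linalg.lstsq(np.array(rows, dtype=float),
                            np.array(rhs, dtype=float), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)
```

One way to use it: take the reprojection errors from the best RANSAC model, convert them with `soft_weights(errors, tol)`, and refit on all matched points instead of only the hard inliers.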

The red points are taken from this image, and the translucent blue points are computed based on points from the other image, with opacity proportional to how strongly it was weighted. (This plot is poorly designed honestly. The red, not blue, points were fed into least-squares with varying weights.)

Comparison of auto/manual

| Manual | Automatic |
|---|---|
|  |  |
|  |  |
|  |  |

The automatic and manual stitches are fairly comparable, and both are of good enough quality. They differ slightly in which portions of the image they align exactly.

What have you learned?

Blending images together is hard. There are order-of-magnitude differences between different image interpolation functions, and matplotlib's ImageGrid is horrifically slow. And it's much faster to downscale your images to 50% if there is no fine detail in the first place.