Part 1: Image Warping and Mosaicing

Jonathan Tan, cs-194-abl

Rectifications

When we take a picture of something at an angle, e.g. a square pillow, we can pick out its corner points and define where they should land on an actual square. That mapping is a homography, which I use to square'ify my creeper pillow and DDR dance pad.
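Once a homography is known, applying it to a whole image is usually done by inverse warping: for every output pixel, ask where it came from in the source. Here's a minimal sketch of that idea, assuming NumPy, nearest-neighbor sampling, and a hypothetical helper name `warp_image` (none of this is taken from my actual project code).

```python
import numpy as np

def warp_image(img, H, out_shape):
    """Inverse-warp img into an output of shape (rows, cols).

    H maps source coordinates (x, y, 1) to output coordinates.
    Output pixels that fall outside the source are left at zero.
    """
    rows, cols = out_shape
    ys, xs = np.mgrid[0:rows, 0:cols]
    # Homogeneous coordinates of every output pixel, shape (3, rows * cols).
    dst = np.stack([xs.ravel(), ys.ravel(), np.ones(rows * cols)])
    # Pull each output pixel back into the source image.
    src = np.linalg.inv(H) @ dst
    src = src[:2] / src[2]  # projective divide
    sx = np.rint(src[0]).astype(int)  # nearest-neighbor sampling (an assumption)
    sy = np.rint(src[1]).astype(int)
    valid = (sx >= 0) & (sx < img.shape[1]) & (sy >= 0) & (sy < img.shape[0])
    out = np.zeros((rows * cols,) + img.shape[2:], dtype=img.dtype)
    out[valid] = img[sy[valid], sx[valid]]
    return out.reshape((rows, cols) + img.shape[2:])
```

For rectification, `H` would be the homography sending the four clicked corners to the corners of a square; bilinear interpolation would give smoother results than the nearest-neighbor lookup used here.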

Here's the rectified dance pad; it's been getting some pretty good use during the quarantine.

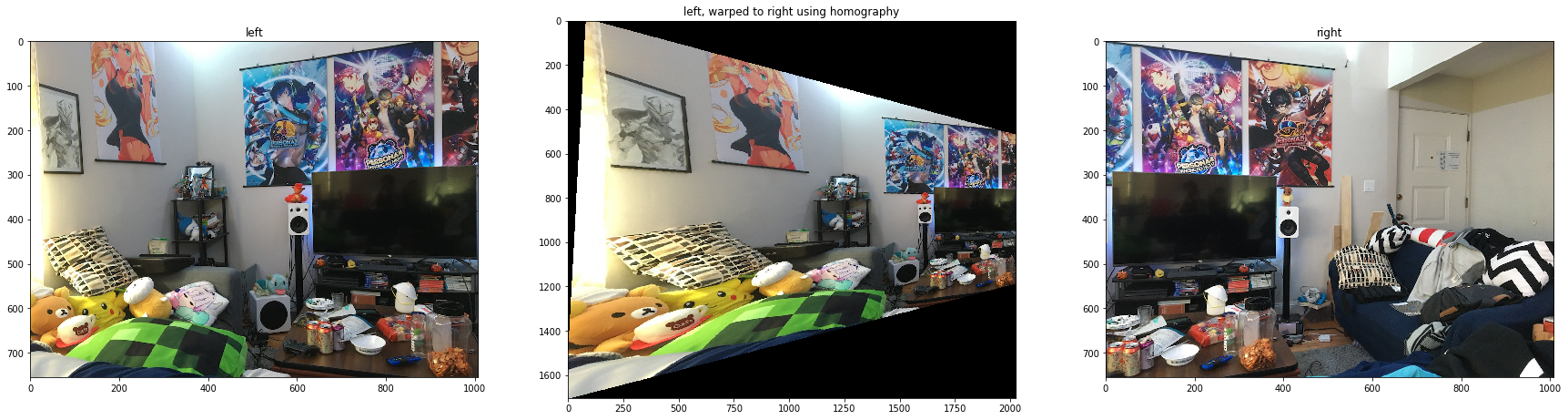

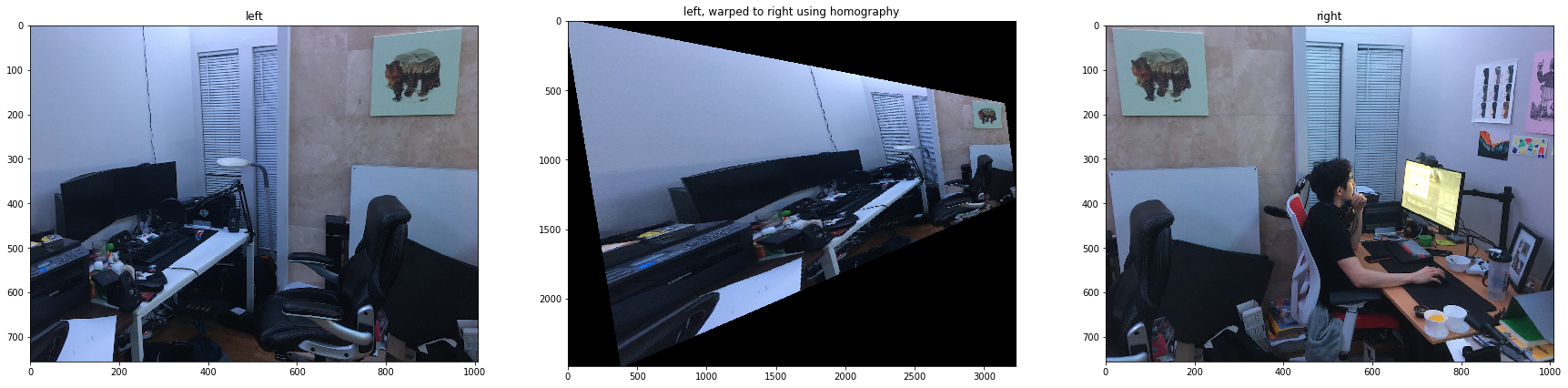

Mosaics

The neat application of this is warping one picture into another picture's space, which lets you stitch pictures together with minimal distortion. Homographies are the stuff panoramas are made of! I start by manually defining point correspondences in two pictures that share part of their field of view, and then I use least squares to calculate the homography matrix that defines the warp.
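The least-squares step can be sketched roughly as follows. This assumes NumPy and the common convention of fixing the bottom-right entry of the homography to 1, which leaves 8 unknowns and two linear equations per correspondence; `compute_homography` is a hypothetical name for illustration.

```python
import numpy as np

def compute_homography(src, dst):
    """Least-squares homography H (with H[2, 2] = 1) such that dst ~ H @ src.

    src, dst: (N, 2) arrays of (x, y) points, N >= 4.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (a x + b y + c) / (g x + h y + 1), and likewise for v.
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.extend([u, v])
    h, *_ = np.linalg.lstsq(np.array(A), np.array(b), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)
```

With exactly four correspondences the system is solved exactly; with more, the extra points average out some of the clicking error.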

Part 2: Feature Matching and Autostitching

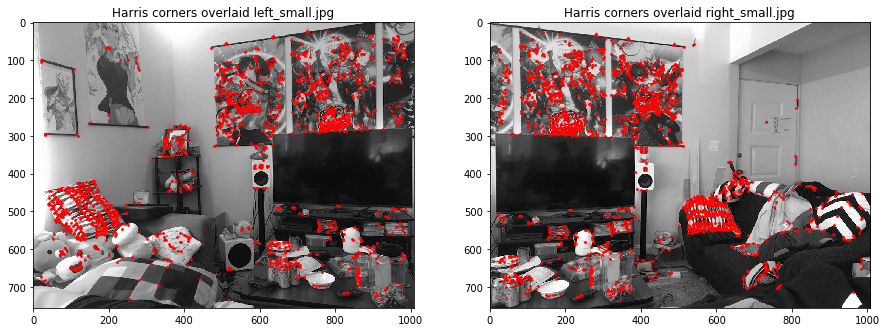

Doing our point selections by hand is somewhat tedious, so let's automate the process. We start by running the Harris Corner Detector on the images to find points that look like corners, i.e. points that could encode a bunch of information. We also toss out the ones that aren't particularly "strong": using the corner detector provided, we're able to determine which points seem "weak" by looking at a histogram of the corner strengths.

Then, we can take a look at our Harris corners! These images are shown in grayscale so the corners are easier to see.

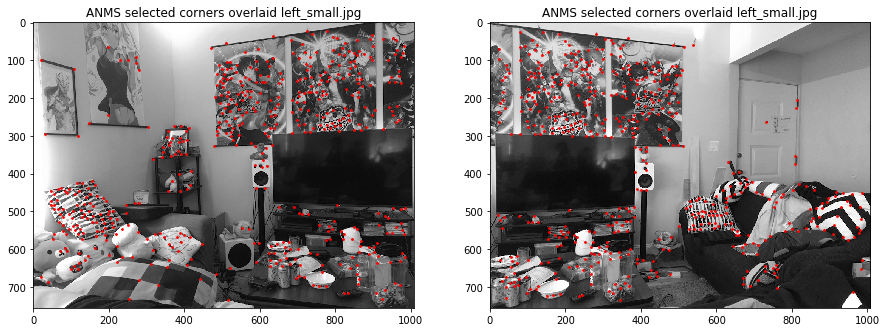

We definitely need to prune some more, though. We'll use adaptive non-maximal suppression to do so. For each Harris corner, we find its nearest neighbor that's "stronger" than it and note the distance. Doing this for every corner lets us sort the points by how large a region they're the strongest corner in, and we pick the top 500 or so.
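Here's a rough sketch of that suppression step, assuming NumPy, corner coordinates in an (N, 2) array, and positive corner strengths. The robustness factor `c_robust=0.9` is an assumption borrowed from the usual formulation of the method (a neighbor only counts as "stronger" if it wins by a margin), and `anms` is a hypothetical name.

```python
import numpy as np

def anms(coords, strengths, n_keep=500, c_robust=0.9):
    """Return indices of the n_keep corners with the largest suppression radius.

    A corner's radius is the distance to its nearest neighbor that is
    sufficiently stronger than it; the strongest corner gets radius infinity.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances, shape (N, N). Uses O(N^2) memory.
    diff = coords[:, None, :] - coords[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)
    # stronger[i, j] is True when corner j is sufficiently stronger than corner i.
    stronger = strengths[:, None] < c_robust * strengths[None, :]
    d2 = np.where(stronger, d2, np.inf)
    radii = d2.min(axis=1)  # squared radius; ordering is the same as for the radius
    order = np.argsort(-radii, kind="stable")
    return order[:n_keep]
```

Sorting by radius rather than raw strength is what spreads the kept corners across the image instead of letting them clump around one high-contrast area.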

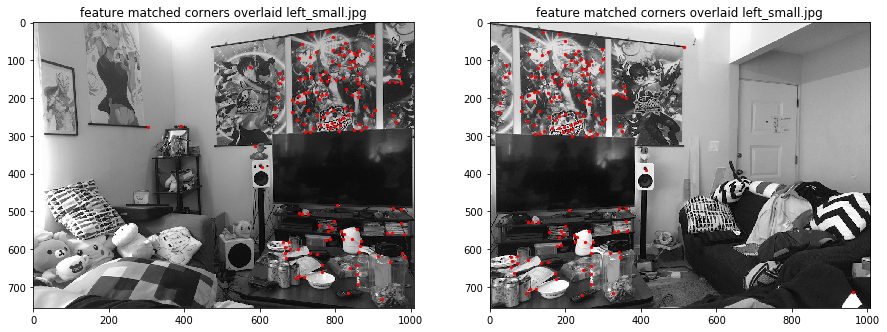

Now, for each point, we take a 40x40 square centered on it and downscale it to 8x8. We compare patches across both images using the sum of squared differences as the metric, and for each patch we look at its two closest matches. Simply put, if the second-closest match isn't much worse than the closest one, the match is ambiguous and we probably want to toss it out.
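The patch extraction and ratio check could look something like this. It's a sketch under several assumptions that aren't stated above: downscaling is done by averaging 5x5 blocks, each descriptor is normalized to zero mean and unit variance, and the ratio threshold of 0.6 is just a placeholder. `extract_descriptor` and `match_features` are hypothetical names.

```python
import numpy as np

def extract_descriptor(gray, x, y, window=40, size=8):
    """Return a flattened size*size descriptor for the corner at (x, y), or None near the border."""
    half = window // 2
    if x - half < 0 or y - half < 0 or x + half > gray.shape[1] or y + half > gray.shape[0]:
        return None  # the window would fall off the image
    patch = gray[y - half:y + half, x - half:x + half].astype(float)
    step = window // size
    # Downscale 40x40 -> 8x8 by averaging each step x step block (an assumption).
    small = patch.reshape(size, step, size, step).mean(axis=(1, 3))
    # Bias/gain normalization (an assumption, not described in the text above).
    small = (small - small.mean()) / (small.std() + 1e-8)
    return small.ravel()

def match_features(desc_a, desc_b, ratio=0.6):
    """Match rows of desc_a to rows of desc_b, keeping only unambiguous matches.

    Needs at least two descriptors in desc_b. Returns a list of (index_a, index_b).
    """
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    # Sum of squared differences between every pair, shape (Na, Nb).
    ssd = ((desc_a[:, None, :] - desc_b[None, :, :]) ** 2).sum(axis=-1)
    matches = []
    for i, row in enumerate(ssd):
        first, second = np.argsort(row)[:2]
        # Keep the match only if the best is clearly better than the runner-up.
        if row[first] < ratio * row[second]:
            matches.append((i, int(first)))
    return matches
```

A lower `ratio` is stricter: fewer matches survive, but the ones that do are less likely to be wrong.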

After pruning with patch extraction and feature matching, we're left with only a few points:

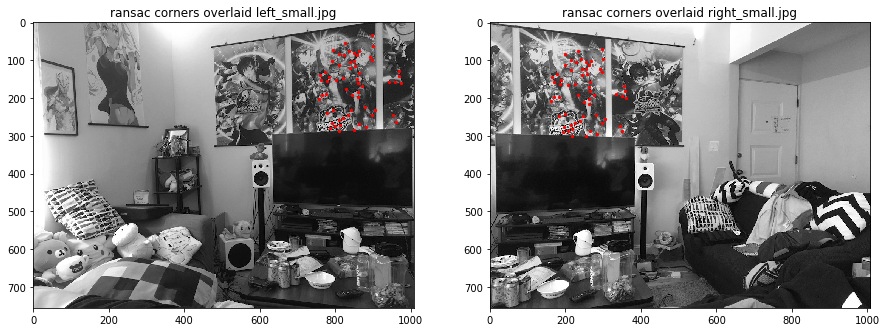

But there are still some outliers! Let's tackle them with RANSAC. I sample four random point matches, calculate the homography they define, and apply it to all the other point matches we have. Then we calculate the error between where the estimated homography sends each left-picture point and where its match sits in the right picture; if the error is large, we toss that match. We do this repeatedly and keep the homography that agrees with as many matches as possible, giving us a random sample consensus.
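A compact sketch of that loop, assuming NumPy. The iteration count, the 2-pixel inlier tolerance, and the final refit on all inliers are assumptions rather than my actual settings, and the helper names (`fit_homography`, `project`, `ransac_homography`) are hypothetical.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography with H[2, 2] fixed to 1."""
    A, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        A.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        A.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.extend([u, v])
    h, *_ = np.linalg.lstsq(np.array(A, float), np.array(b, float), rcond=None)
    return np.append(h, 1.0).reshape(3, 3)

def project(H, pts):
    """Apply H to (N, 2) points, including the projective divide."""
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, n_iters=1000, tol=2.0, seed=0):
    """Return (H, inlier_mask) for matched points src -> dst, both (N, 2)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        # Degenerate samples can produce inf/nan errors; those just fail the test below.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(H, src) - dst, axis=1)
        inliers = err < tol
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 4:
        raise ValueError("RANSAC found fewer than 4 inliers")
    # Refit on the whole consensus set (an assumption about the final step).
    return fit_homography(src[best], dst[best]), best
```

The tolerance trades off strictness against noise: too tight and good matches get dropped, too loose and outliers sneak into the final fit.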

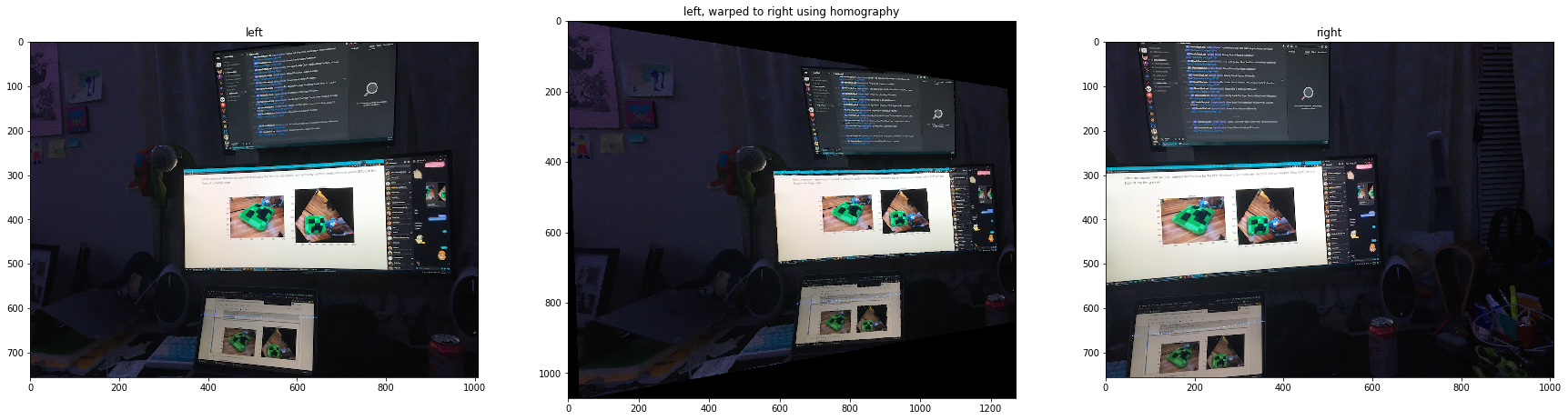

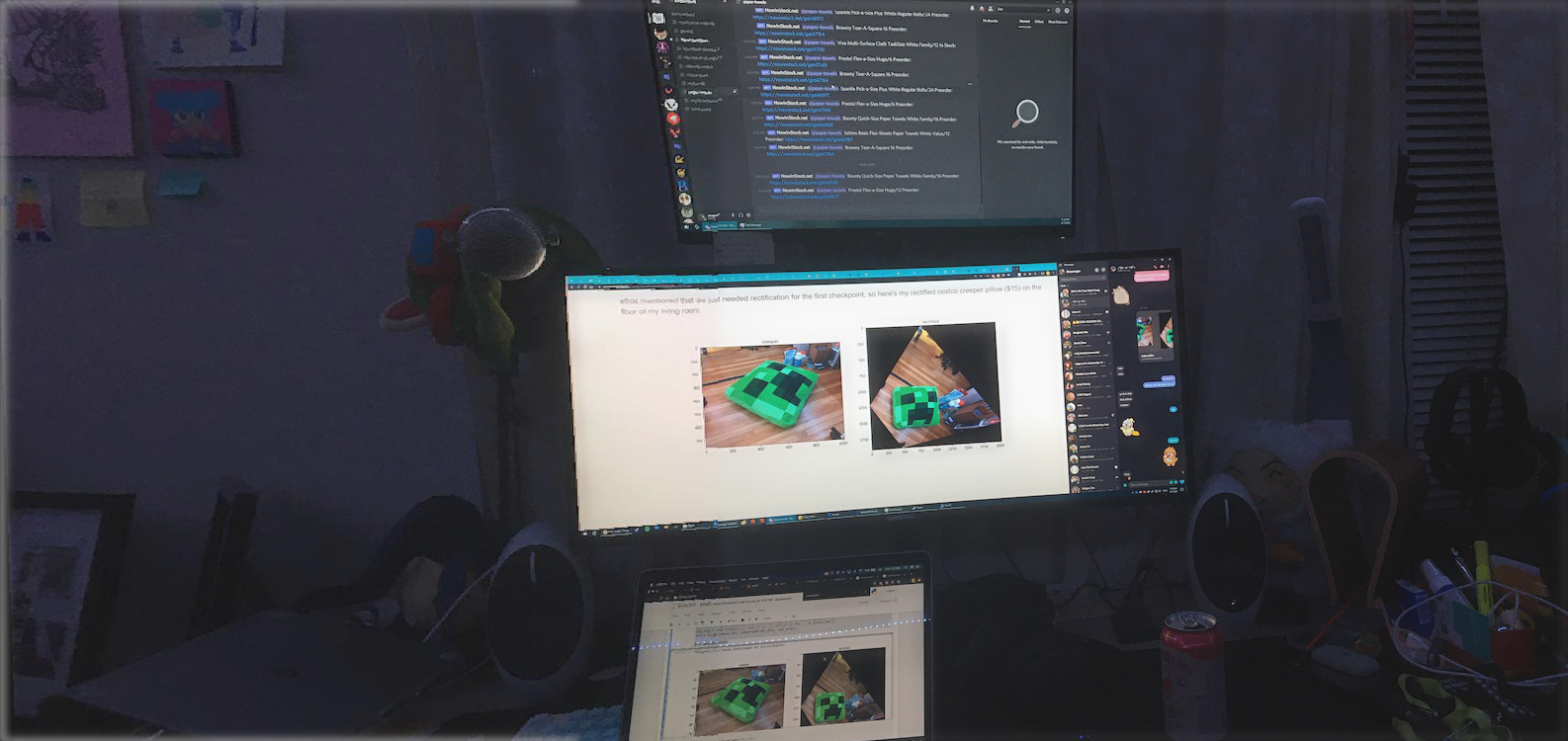

Using this system, we're now able to automatically recover homographies from images that share enough of their field of view. Neat! Here are some mosaics generated using this process plus the blending we used in Project 3 and in the first part.

We can do a side-by-side comparison of my living room, stitched manually vs. automatically:

| Manual | Auto |
|---|---|
| | |

I'm actually not sure why Markdown gives the automatic one more width. Feel free to open them in a new tab. It's not bad, but in some cases the automatic stitching kinda mucks up or can't find corners properly. It likely needs more parameter tuning and dynamic thresholding.

What did you learn/find cool?

Part 1

I really like how we can basically program warps traditionally done in image manipulation software. Very cool as I'm a somewhat avid 'shopper.

Part 2

Wow, really surprised at how effective automating this process is. Makes me wonder if similar panorama-generating code is on our smartphones, or if a different method is used for stitching.