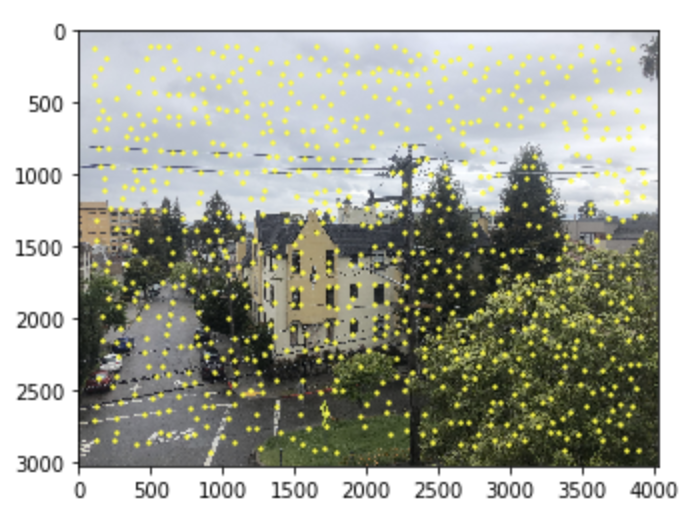

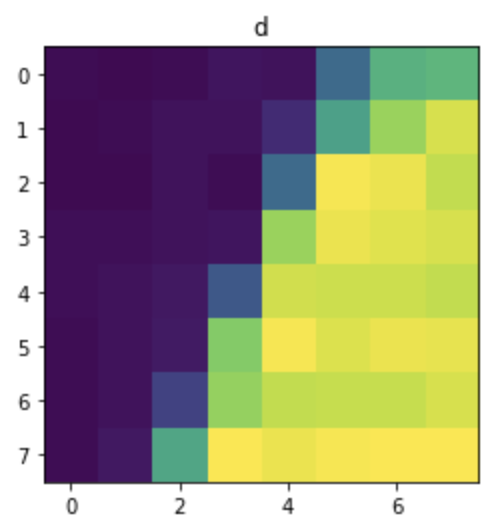

Image 1 - Original

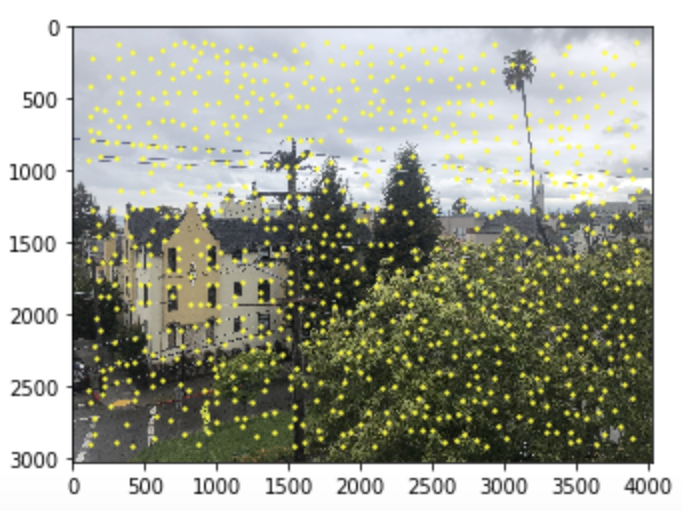

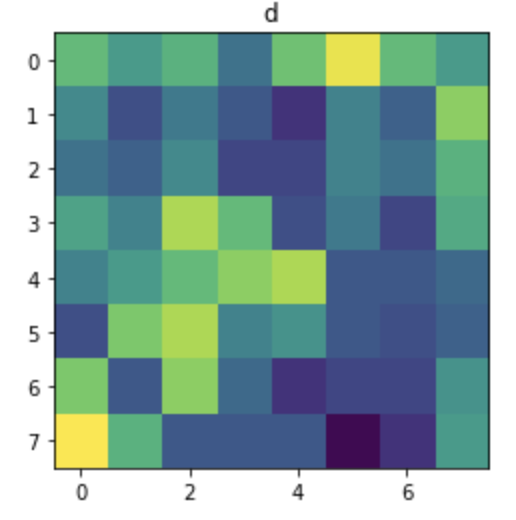

Image 2 - Original

For the first part of this assignment, we want to detect corners in a given image using Harris corner detection. This is the first step in creating a panorama, since we need to identify features that appear in both images and can be matched to each other. The two original images are shown here, along with their Harris corners. I set min_dist to 50 for my 4K images, which means no two Harris corners will be within 50 pixels of each other. I also set edge_discard to 100, so corners within 100 pixels of the image border are excluded from detection.

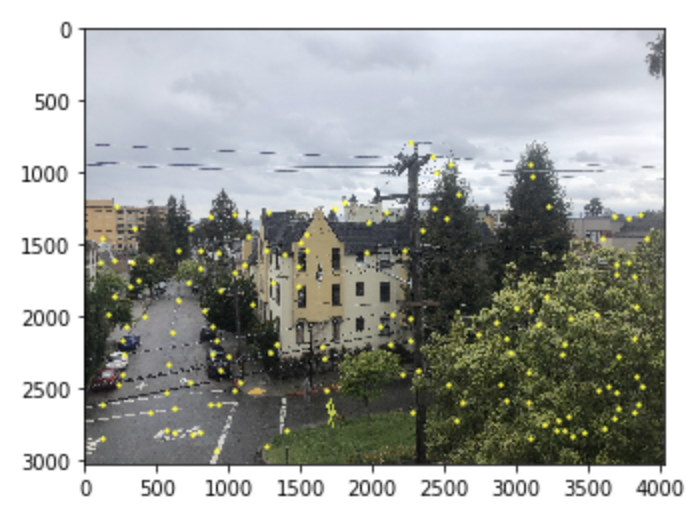

Image 1 Harris Corners

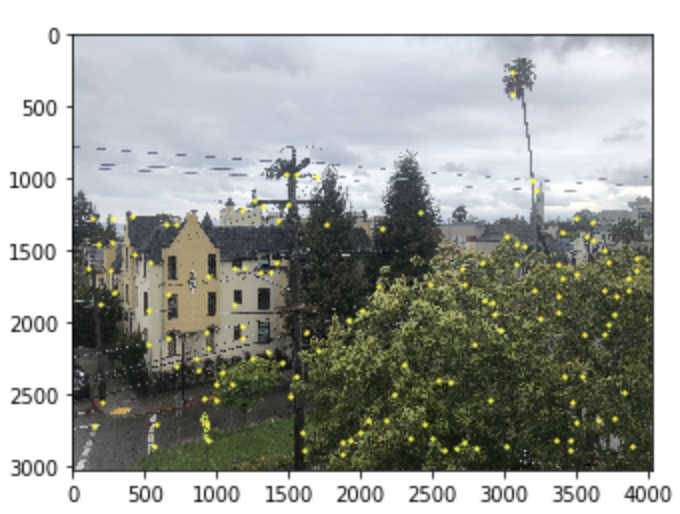

Image 2 Harris Corners
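
To make the effect of min_dist and edge_discard concrete, here is a minimal sketch that applies both constraints to a list of already-detected corners. The function name filter_corners and the greedy strongest-first strategy are assumptions made for illustration; the corner detector actually used for this project may enforce these parameters differently.

```python
import numpy as np

def filter_corners(coords, strengths, shape, min_dist=50, edge_discard=100):
    """Hypothetical helper: drop border corners, then enforce a minimum spacing.

    coords: (N, 2) array of (row, col) positions; strengths: (N,) Harris responses;
    shape: (height, width) of the image.
    """
    coords = np.asarray(coords)
    strengths = np.asarray(strengths, dtype=float)
    h, w = shape

    # edge_discard: ignore corners inside the border band of the image.
    inside = (
        (coords[:, 0] >= edge_discard) & (coords[:, 0] < h - edge_discard)
        & (coords[:, 1] >= edge_discard) & (coords[:, 1] < w - edge_discard)
    )
    coords, strengths = coords[inside], strengths[inside]

    # min_dist: visit corners from strongest to weakest and keep a corner only
    # if every corner kept so far is at least min_dist pixels away.
    kept = []
    for i in np.argsort(-strengths):
        if all(np.linalg.norm(coords[i] - coords[j]) >= min_dist for j in kept):
            kept.append(i)
    kept = np.array(kept, dtype=int)
    return coords[kept], strengths[kept]
```

With a 4K image, a larger min_dist keeps the number of corners manageable for the later steps, at the cost of possibly discarding a good corner that sits next to a stronger one.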

After finding the Harris corners of each image, we refine the corner selection using Adaptive Non-Maximal Suppression (ANMS). ANMS keeps the most important features in an image, meaning the corners that are strongest relative to their neighboring corners. It also spaces the corners out across the image, which helps when aligning corners from two different images to make a mosaic.

ANMS of Image 1

ANMS of Image 2
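
The ANMS step can be sketched as follows. This is the standard formulation, not necessarily the exact code used for the figures: each corner gets a suppression radius equal to its distance to the nearest sufficiently stronger corner, and the corners with the largest radii are kept. The function name anms and the parameters n_keep and c_robust are assumptions chosen for this example.

```python
import numpy as np

def anms(coords, strengths, n_keep=500, c_robust=0.9):
    """Return indices of the n_keep corners with the largest suppression radii.

    coords: (N, 2) corner positions; strengths: (N,) positive Harris responses.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)

    # Pairwise squared distances between all corners, shape (N, N).
    diff = coords[:, None, :] - coords[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)

    # dominates[i, j] is True when corner j is sufficiently stronger than corner i.
    dominates = strengths[:, None] < c_robust * strengths[None, :]

    # Squared suppression radius: distance to the nearest dominating corner.
    # The globally strongest corner has no dominator, so its radius is infinite.
    radii2 = np.where(dominates, d2, np.inf).min(axis=1)

    # Largest radii first.
    return np.argsort(-radii2)[:n_keep]
```

Because a weak corner far from any stronger corner can outrank a strong corner in a crowded region, the selected points end up spread across the image. The pairwise distance array grows quadratically with the number of corners, so this sketch assumes the corner list has already been thinned to a few thousand points.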

After using ANMS to find the strongest corners in an image, we want to describe each corner in terms of its neighboring pixels. To do this, we use a feature descriptor. The feature descriptor I implemented samples the forty pixels surrounding each corner pixel, although the number of pixels sampled can be adjusted depending on how pixelated the image is. After collecting the pixel values around a corner, we resize them to an 8 by 8 patch and use that patch as the descriptor. These patches are the input to the feature matching step in the next section.
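
As a rough illustration of the descriptor, the sketch below assumes the neighborhood is a 40 by 40 window centered on the corner and that resizing is done by averaging 5 by 5 blocks. Both are assumptions, as is the function name extract_descriptor; the original implementation may sample or resize differently.

```python
import numpy as np

def extract_descriptor(gray, row, col, window=40, out_size=8):
    """Hypothetical helper: shrink a window x window patch to out_size x out_size.

    gray: 2D grayscale image; (row, col): corner position.
    window must be a multiple of out_size.
    """
    half = window // 2
    patch = gray[row - half:row + half, col - half:col + half].astype(float)
    if patch.shape != (window, window):
        raise ValueError("corner is too close to the image border")

    # Average each block x block cell, which downsamples and smooths in one step.
    block = window // out_size
    return patch.reshape(out_size, block, out_size, block).mean(axis=(1, 3))
```

Shrinking the window makes the descriptor less sensitive to small shifts and noise, and the edge_discard margin used during corner detection means the window should always fit inside the image.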

Using these patches, a feature matcher identifies patches that are similar across the two images. My matcher takes two lists of patches, one per image. For each patch in the first image, it finds the best and second-best matching patches in the other image using a dist2 function. It then takes the ratio of the best match's dist2 result to the second-best match's dist2 result and accepts the match only if the ratio is below a threshold of 0.6. The function returns the indices of the patches that were successfully matched.
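
The matching procedure described above can be sketched like this. The dist2 defined here is a stand-in that computes squared Euclidean distances between flattened patches; the dist2 used in the project may have a different signature. The name match_features and the (index in image 1, index in image 2) output format are also assumptions.

```python
import numpy as np

def dist2(a, b):
    """Stand-in helper: squared Euclidean distance between every row of a and b."""
    diff = a[:, None, :] - b[None, :, :]
    return np.sum(diff ** 2, axis=-1)

def match_features(patches1, patches2, ratio_thresh=0.6):
    """Return (i, j) index pairs that pass the best / second-best ratio test.

    patches2 must contain at least two patches.
    """
    # Flatten each patch into one row so distances compare whole patches.
    d1 = np.asarray(patches1, dtype=float).reshape(len(patches1), -1)
    d2 = np.asarray(patches2, dtype=float).reshape(len(patches2), -1)
    dists = dist2(d1, d2)

    matches = []
    for i, row in enumerate(dists):
        order = np.argsort(row)
        best, second = row[order[0]], row[order[1]]
        # Same as best / second < ratio_thresh, but safe when second is zero.
        if best < ratio_thresh * second:
            matches.append((i, int(order[0])))
    return matches
```

The ratio test rejects patches whose best match is only slightly better than the runner-up, since those matches are ambiguous and likely to be wrong.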

We use RANSAC (Random Sample Consensus) to compute the homography matrix that transforms one image into the orientation and shape of the other in order to create a panorama. The input is not only the corners returned by ANMS for each image, but also the matches that indicate which patches in the two images correspond. By using the algorithm shown below, we can pass selected corners to our computeHomography function from part 1 of this project to find the homography matrix used to transform an image. Although my RANSAC implementation did not fully work, I believe it was a step in the right direction.
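
For reference, a generic 4-point RANSAC loop might look like the sketch below. It is not the implementation from this project: compute_homography is a stand-in for the computeHomography function from part 1, and the names apply_homography and ransac_homography, the iteration count, and the 3-pixel inlier threshold are all assumptions.

```python
import numpy as np

def compute_homography(src, dst):
    """Stand-in for computeHomography: fit H mapping src (N, 2) to dst (N, 2), N >= 4."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]

def apply_homography(H, pts):
    """Map (N, 2) points through H using homogeneous coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(pts1, pts2, n_iters=1000, inlier_thresh=3.0, seed=0):
    """pts1[i] and pts2[i] are matched corner positions in the two images."""
    pts1 = np.asarray(pts1, dtype=float)
    pts2 = np.asarray(pts2, dtype=float)
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(pts1), dtype=bool)

    for _ in range(n_iters):
        # Fit an exact homography to 4 randomly chosen matches.
        sample = rng.choice(len(pts1), size=4, replace=False)
        H = compute_homography(pts1[sample], pts2[sample])
        if not np.all(np.isfinite(H)):
            continue  # degenerate sample
        # Count matches whose reprojection error is below the threshold.
        errors = np.linalg.norm(apply_homography(H, pts1) - pts2, axis=1)
        inliers = errors < inlier_thresh
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers

    if best_inliers.sum() < 4:
        raise ValueError("RANSAC did not find enough inliers")
    # Refit on every inlier of the best model for a more stable estimate.
    return compute_homography(pts1[best_inliers], pts2[best_inliers]), best_inliers
```

A common failure mode is mixing up coordinate order: corner detectors often return (row, col) while homographies are usually written in (x, y), so the two point lists should use the same convention before they are passed in.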