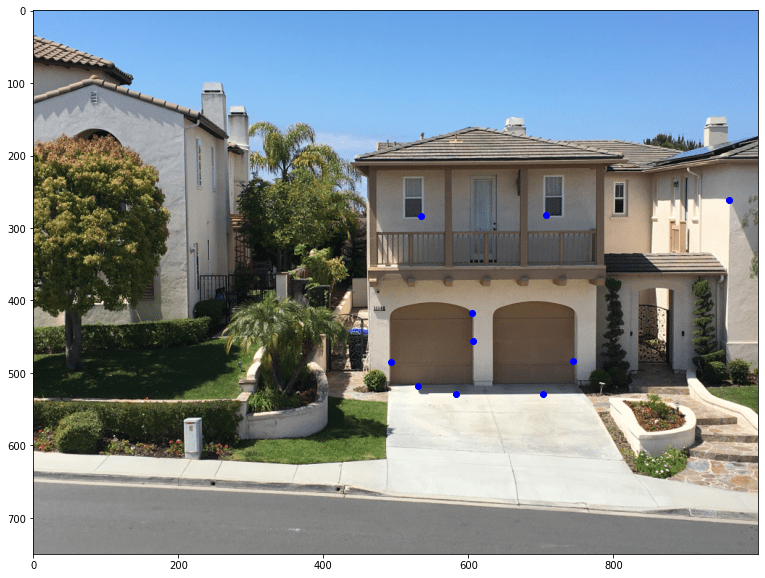

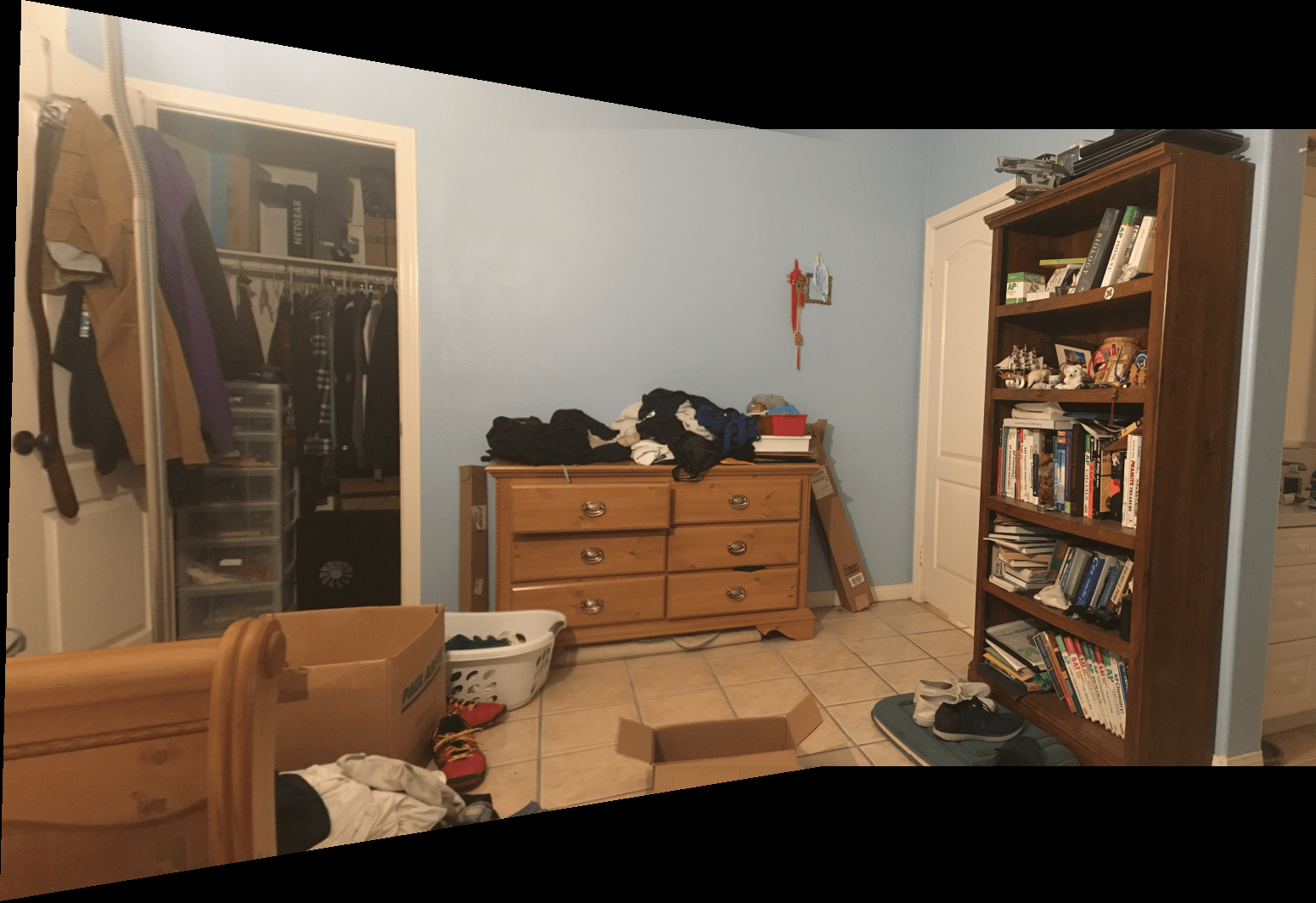

For this checkpoint, we are required to implement the computeH and warpImage functions, and apply them in the context of rectifying an image.

To find the homography matrix in this application, I defined four point correspondences on the center of the tile, which gives a well-defined solution, and used SVD (not strictly necessary with exactly four correspondences) to solve the linear system. The results on a teddy bear lying on tile are shown below:

Noticeably, there is distortion near the edges of the image, where there isn't enough "information" to reconstruct (interpolation probably makes it worse). Furthermore, especially since the bear has features that we'd be looking for (i.e. the eye that is hidden from the original angle), the rectified image looks slightly odd, as if the bear is balancing on its head.
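The homography solve described above can be sketched roughly as follows. This is a minimal illustration in NumPy, not the project's actual code: the `computeH` signature, the (x, y) point convention, and the normalization by the bottom-right entry are all assumptions. Each correspondence contributes two rows to a linear system, and the solution is taken as the right singular vector with the smallest singular value.

```python
import numpy as np

def computeH(src, dst):
    """Estimate the 3x3 homography H such that dst ~ H @ src.

    src, dst: (N, 2) arrays of (x, y) points with N >= 4.
    The signature is an assumption made for this sketch.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Two linear constraints on the 9 entries of H per correspondence.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    A = np.array(rows)
    # The (approximate) null vector of A is the last row of V^T.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    # Fix the arbitrary scale; assumes H[2, 2] is not zero.
    return H / H[2, 2]
```

With exactly four correspondences the system can also be solved directly by fixing the last entry of H to 1, which is why SVD is optional here; the SVD form has the advantage of extending unchanged to more than four points.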

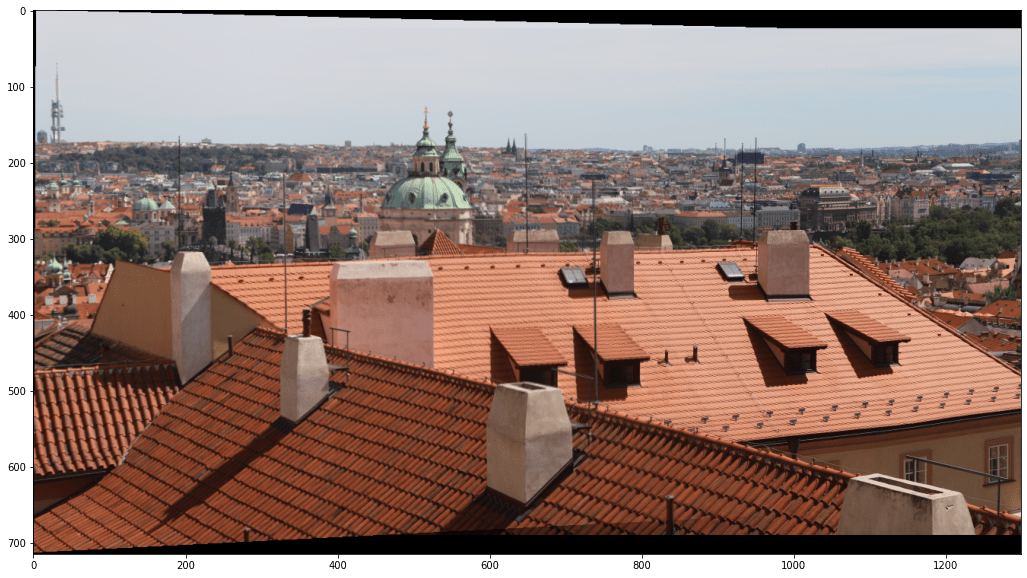

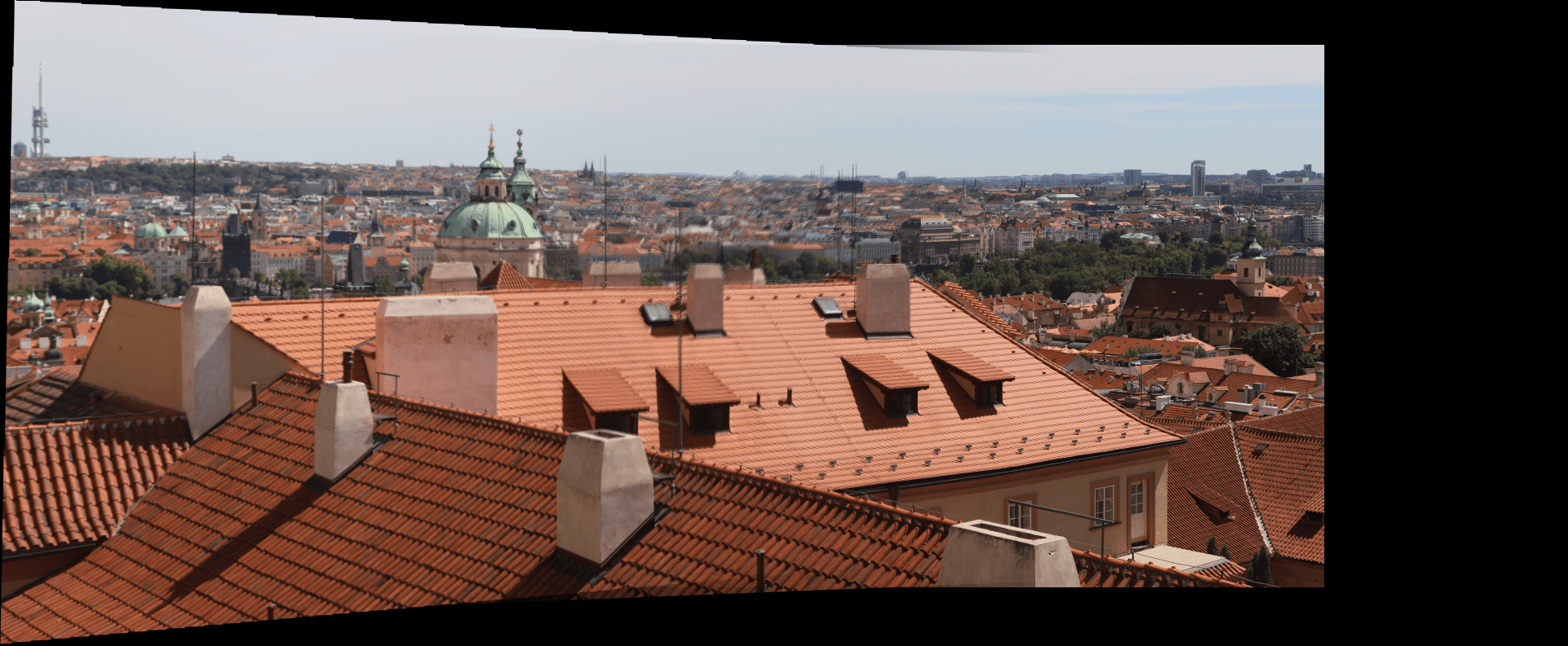

Please see below for manual mosaics, which will be displayed next to their automatically generated counterparts.

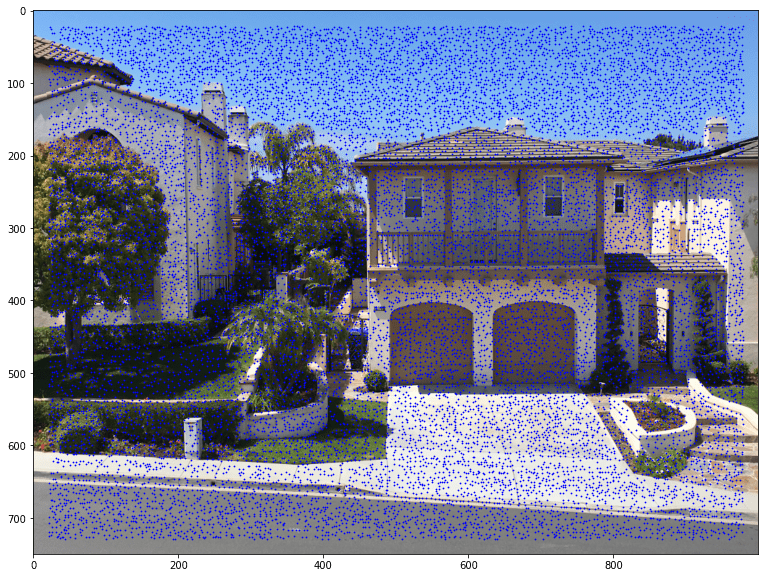

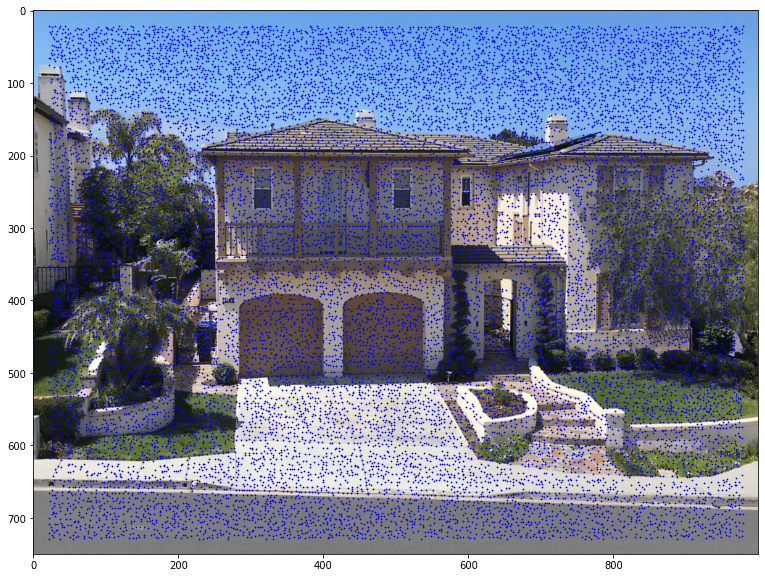

To detect corner features, I used the provided starter code to find the Harris interest points with all default settings.

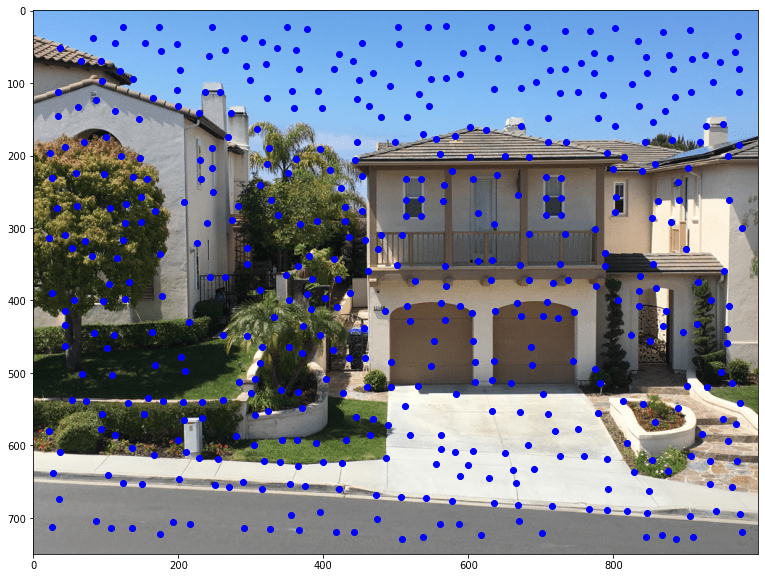

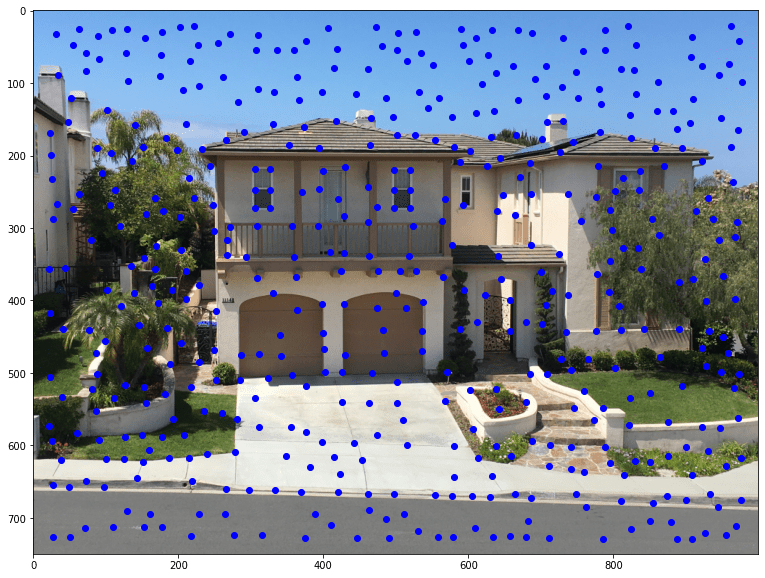

To implement adaptive non-maximal suppression (ANMS), I followed these steps:

The results were quite good.
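For reference, the commonly described form of ANMS can be sketched as below. This is not necessarily the exact procedure used here: the function name, the robustness constant `c_robust=0.9`, and the dense pairwise-distance computation are assumptions for illustration. Each point gets a suppression radius equal to its distance to the nearest sufficiently stronger point, and the points with the largest radii are kept.

```python
import numpy as np

def anms(coords, strengths, n_keep=500, c_robust=0.9):
    """Keep the n_keep points with the largest suppression radius.

    coords: (N, 2) point coordinates; strengths: (N,) corner responses.
    c_robust is an assumed constant, not taken from the write-up.
    """
    coords = np.asarray(coords, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances between all points, shape (N, N).
    diff = coords[:, None, :] - coords[None, :, :]
    d2 = np.sum(diff ** 2, axis=-1)
    # stronger[i, j] is True when point j is sufficiently stronger than i.
    stronger = strengths[:, None] < c_robust * strengths[None, :]
    # Squared radius: distance to the nearest sufficiently stronger point
    # (infinite for the globally strongest point).
    radii = np.where(stronger, d2, np.inf).min(axis=1)
    keep = np.argsort(-radii)[:n_keep]
    return coords[keep]
```

The dense (N, N) distance matrix is fine for a few thousand Harris points but grows quadratically, so a larger point set would need a chunked or tree-based variant.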

To extract feature descriptors, I created an 8 by 8 coordinate grid with step=5 between samples (effectively covering a 40 by 40 window) and iterated through all 500 ANMS coordinates. For each, I took the 64 pixels indexed by the grid, flattened them into a 1-D array, subtracted the mean and divided by the standard deviation, and stored the result in a featureDescriptor array.
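A minimal sketch of this sampling and normalization step, assuming a 2-D grayscale image and integer (row, col) coordinates. The function name, the centering of the grid on each point, and the choice to skip points too close to the border are assumptions, not details from the write-up.

```python
import numpy as np

def extract_descriptors(image, coords, size=8, step=5):
    """Sample a size x size grid (spacing `step`) around each point.

    image: 2-D grayscale array; coords: iterable of integer (row, col).
    Returns (descriptors, kept_coords); border points are skipped.
    """
    half = (size * step) // 2
    offsets = np.arange(size) * step - half  # e.g. -20, -15, ..., 15
    dr, dc = np.meshgrid(offsets, offsets, indexing="ij")
    descriptors, kept = [], []
    for r, c in coords:
        rows, cols = r + dr, c + dc
        if (rows.min() < 0 or cols.min() < 0 or
                rows.max() >= image.shape[0] or cols.max() >= image.shape[1]):
            continue  # the grid would fall outside the image
        patch = image[rows, cols].astype(float).ravel()  # 64 values
        patch -= patch.mean()
        std = patch.std()
        if std > 0:
            patch /= std  # unit variance; skipped for flat patches
        descriptors.append(patch)
        kept.append((r, c))
    return np.array(descriptors), np.array(kept)
```

Normalizing each patch to zero mean and unit variance makes the descriptor insensitive to brightness and contrast changes between the two photos.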

To match feature descriptors, I again used the provided dist2 function, this time between the featureDescriptor arrays of two separate but corresponding images. For each feature in one image, I took its top two matches and applied Lowe's ratio test with a threshold of 0.5, discarding features whose ratio of best to second-best distance was > 0.5 (i.e. the best match was too similar to the second-best match). Each surviving coordinate was then stored in an array along with its pair (best match) from the second image. I then sorted these arrays by match strength (the dist2 value between each coordinate and its best match), so that I could take as many matching pairs as I liked, with the best pairs first.
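The matching step can be sketched as follows. The squared-distance computation is a stand-in for the provided dist2 function, whose exact interface is not shown here, and applying the 0.5 ratio to squared distances is an assumption. The function name and return layout are also hypothetical.

```python
import numpy as np

def match_descriptors(desc_a, desc_b, ratio=0.5):
    """Match rows of desc_a to rows of desc_b with Lowe's ratio test.

    Returns (matches, strength): matches is (M, 2) index pairs into
    desc_a and desc_b, sorted so the strongest (smallest distance)
    pairs come first. desc_b needs at least two rows.
    """
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    # Pairwise squared Euclidean distances, shape (Na, Nb).
    d2 = (np.sum(desc_a ** 2, axis=1)[:, None]
          + np.sum(desc_b ** 2, axis=1)[None, :]
          - 2.0 * desc_a @ desc_b.T)
    d2 = np.maximum(d2, 0.0)  # guard against tiny negative round-off
    order = np.argsort(d2, axis=1)
    best, second = order[:, 0], order[:, 1]
    idx = np.arange(len(desc_a))
    best_d, second_d = d2[idx, best], d2[idx, second]
    # Keep a match only if it is clearly better than the runner-up.
    keep = best_d < ratio * second_d
    matches = np.column_stack([idx[keep], best[keep]])
    strength = best_d[keep]
    rank = np.argsort(strength)
    return matches[rank], strength[rank]
```

Because the output is sorted by distance, taking the first k rows of `matches` gives the k most confident pairs.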

Note that one of the matches on the left is actually missing on the right (it overlaps with another point on the right). This suggests that tuning the Lowe threshold might be beneficial. However, RANSAC effectively makes this a non-issue.

RANSAC repeatedly takes a random subset of 4 of the available point pairs, computes a homography from them, and tests for inliers (points whose homography-mapped position is within 1px of the previously matched pair). The number of inliers is counted, and the set of starting coordinates with the maximum number of inliers is recorded (if the maximum number of inliers equals the number of points, the function ends early). I ran RANSAC for 1000 iterations on 10 points with good results (see below).
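A compact sketch of this loop is given below. The helper names (`fit_homography`, `apply_homography`, `ransac_homography`) and the fixed random seed are hypothetical. The final refit on all inliers of the best sample is a common variant and an assumption here, since the write-up only says that the best starting set is recorded.

```python
import numpy as np

def fit_homography(src, dst):
    """Least-squares homography (up to scale) via SVD."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.array(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def apply_homography(H, pts):
    """Map (N, 2) points through H and divide by the homogeneous term."""
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    with np.errstate(divide="ignore", invalid="ignore"):
        return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, n_iters=1000, tol=1.0, seed=0):
    """Return (H, inlier_mask) for matched (N, 2) point arrays."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        err = np.linalg.norm(apply_homography(H, src) - dst, axis=1)
        inliers = err < tol  # NaN or inf errors count as outliers
        if inliers.sum() > best.sum():
            best = inliers
        if best.all():
            break  # every pair agrees, so stop early
    if best.sum() < 4:
        raise ValueError("RANSAC found fewer than 4 inliers")
    # Assumed variant: refit on all inliers of the best sample.
    H = fit_homography(src[best], dst[best])
    return H / H[2, 2], best
```

Pairs that pass the ratio test but are still wrong, such as two left-image points mapping to the same right-image point, end up outside the inlier mask and do not influence the final homography.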

The city panoramas use images provided as a dataset on Piazza. In general, the automatically produced panoramas are higher in quality, as the chosen features and computed homographies are more accurate (manual features were entered with ginput, which is very imprecise). For example, the manual panoramas show blurriness that comes from misalignment. For blending, I used a simple weighted linear blend.
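One way a weighted linear blend can look is sketched below, assuming both images have already been warped onto a shared canvas and come with binary validity masks. The function name, the mask inputs, and the constant 0.5 weight are assumptions; the actual weights used for these panoramas are not stated.

```python
import numpy as np

def linear_blend(img_a, mask_a, img_b, mask_b, weight_a=0.5):
    """Blend two aligned images; masks mark where each image has data.

    In the overlap the result is weight_a * A + (1 - weight_a) * B;
    where only one image is valid, that image is used unchanged.
    """
    wa = weight_a * mask_a.astype(float)
    wb = (1.0 - weight_a) * mask_b.astype(float)
    total = wa + wb
    total = np.where(total > 0, total, 1.0)  # avoid dividing by zero
    wa, wb = wa / total, wb / total
    if img_a.ndim == 3:
        # Broadcast the per-pixel weights over the color channels.
        wa, wb = wa[..., None], wb[..., None]
    return wa * img_a + wb * img_b
```

A constant weight leaves visible seams when exposure differs between shots; replacing the constant with a ramp across the overlap is a common refinement.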

Overall, this was a very enjoyable project to learn from and work on. I especially liked learning about homographies and how to compute them; the rectification results alone were interesting, and applying homographies to stitching mosaics even more so. I was also surprised by how well a simple algorithm for feature extraction and matching could perform. In the future, I'd like to generalize my code to build a panorama from any number of images in any order.