Yuhong Chen (cs194-26-afk)

In this project, we implement a simplified version of MOPS: “Multi-Image Matching using Multi-Scale Oriented Patches” by Brown et al.. First, we use the harris detector to extract interest points from two images that form a larger image. We then cut down the number of interest points with adaptive non-max supression and feature matching. Finally, we use RANSAC to select the best subset of points and use them to calculate a homography matrix and warp the images into one.

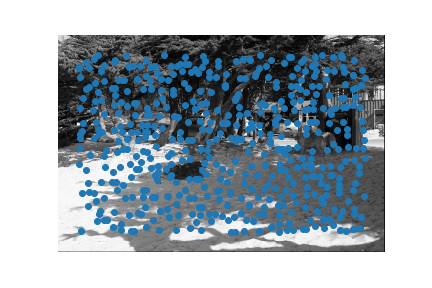

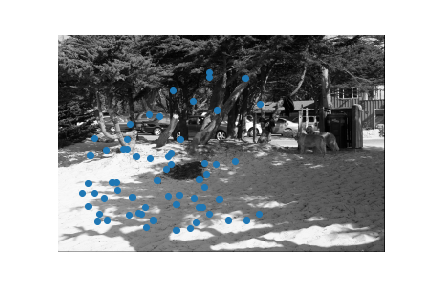

Using the harris detector, we can find a response for the harris corner for each pixel. But we obviously only want a small number of points for computing the homography matrix. A naive method of cutting down the number of points is taking the maximum n points. This doesn't work so well:

The poings are clustered together, and don't provide a good represetation of the orientation of the image.

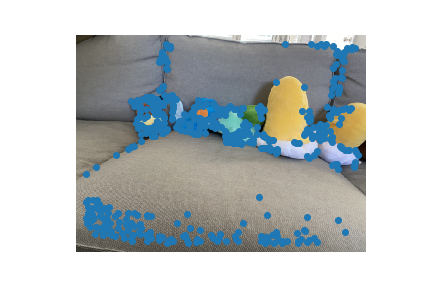

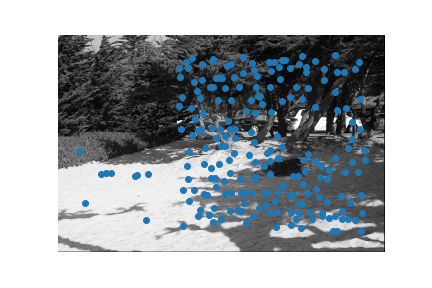

Instead, we use ANMS: Adaptive Non-Maximal Supression, which supresses interest points that don't have the maximum response in an adaptive radius. The minimum supression radius for each point is given by r_i = min_j | x_i - x_j |, s.t. f(x_i) < cf(x_j). Sorting by the supression radius would give us a better subset of points:

To find a point-to-point correspondence between the points selected by ANMS, we perform feature matching. The first part is extracting a feature vector, which is calculated for each point by sampling a patch around the point, downsampling the patch, and normalizing its mean and standard deviation. The result is a fixed-length vector containing a featurized version of the patch around the interest point.

We then try to match the points by computing the distance between the corresponding feature vectors. To increase the accuracy of feature matching, we only accept points that are closer in feature space by at least 50% than it's closest competitors. In other words, we only keep points where the best match is 50% better than the second best. This is because bad matches are generally closer, with bad values, and good matches generally drastically outperform bad matches.

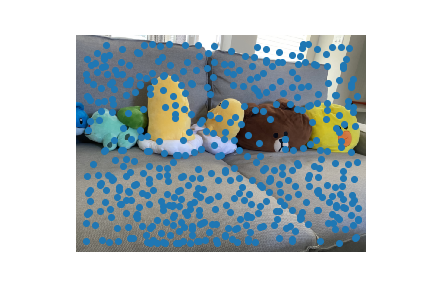

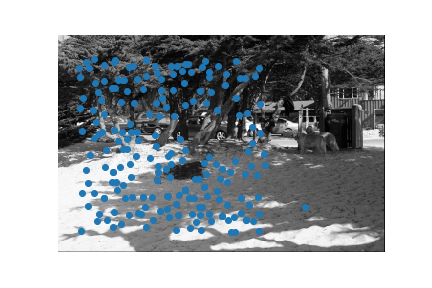

After feature matching, the remaining points look like this:

Most of the non-matching points were rejected, and a lot of the remaining points have corresponding points in the other picture.

For RANSAC, we randomly choose a subset of points to compute a homography matrix. We then project the points from image A to image B using the computed matrix and keep the points that are within an error threshold. We repeat this procedure for many iterations and take the iteration with the maximum points kept. This produces a good estimation of the non-outlier points.

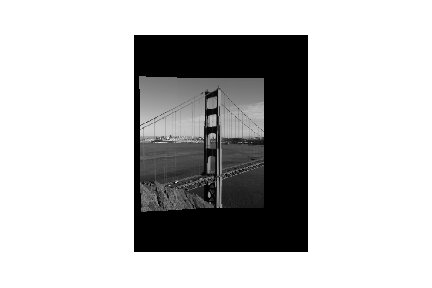

Finally, we use the kept points to compute a homography matix and combine the images! Here are some results:

The auto-stitched results are also more stable than the ones I made with manual points in part 1, Here's a side-by-side comparison: