Note: Images from this project were obtained from Adobe, this course's staff and MIT 6.819's course staff. All panoramas were stitched together with my code for this project.

Part 1: Image Warping via Learned Homographies

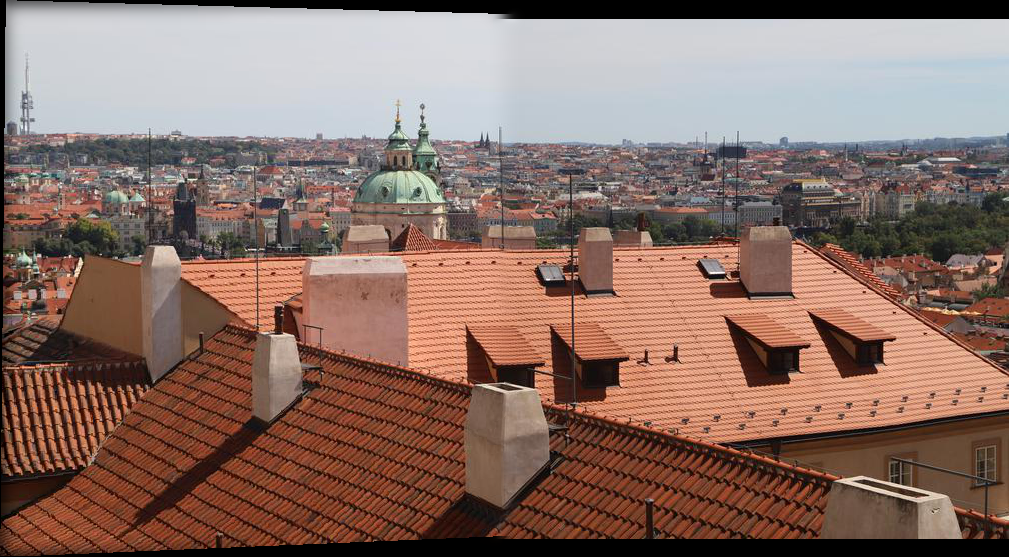

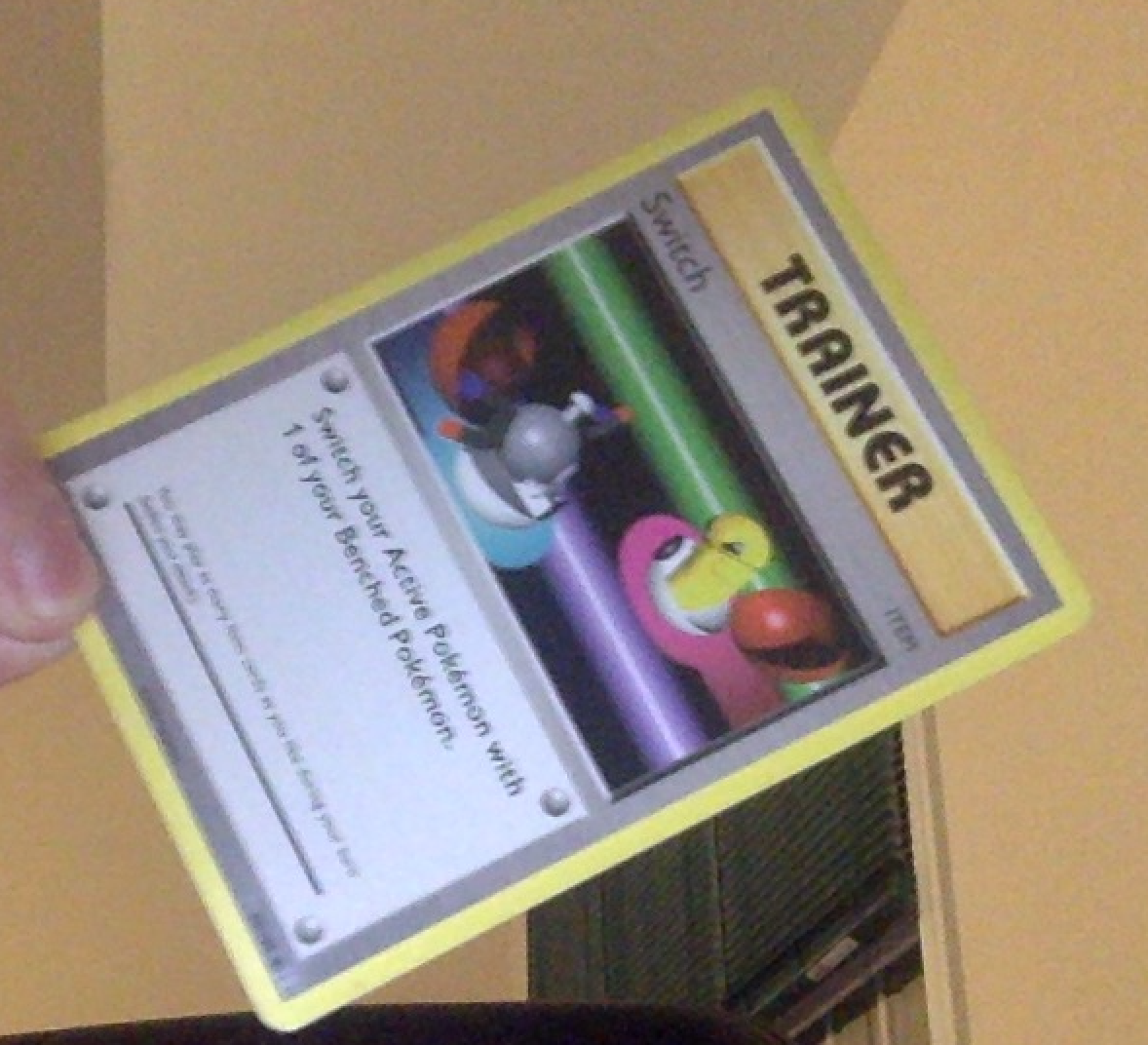

In this problem, we aim to identify a 3x3 homography transformation that can align images. For this part, our only goal is to learn to warp an image with a homography to a more canonical pose. Here is an example of an image I will be trying to align:

To do this, I first manually labeled the corners of the Pokemon card and chose a target alignment in which the card is vertical (up to any errors in my hand-labeling). Next, I computed a homography matrix by solving a least squares problem. Specifically, the problem is to minimize the distance between the predicted points (Hp) and the target points (y) that correspond to the card being perfectly vertical. The least squares problem is ||Hp - y||, where ||.|| denotes the L2 norm.

We can construct a matrix A from both p and y such that Ah measures the mismatch between Hp and y, where h is the flattened H. Because points are in homogeneous coordinates, Hp only needs to match y up to scale, so A is built from the cross-multiplied equations. The problem then reduces to minimizing ||Ah|| subject to ||h|| = 1 (the constraint rules out the trivial solution h = 0). If an exact solution exists, h lies in the nullspace of A. Since this isn't always possible (for example, with noisy labels A may have only a trivial nullspace), a good approximate solution is the unit vector that A "squishes" closest to zero. This is exactly the right singular vector of A with the smallest singular value, which can be computed via SVD (or as the eigenvector of A^T A with the smallest eigenvalue). Once we find this singular vector, we simply scale it so its final component is equal to 1 and reshape it to a 3x3 homography matrix.

Once the homography is computed, I apply an inverse warp to generate the final image, just like in project 3. However, doing this naively can sometimes cause coordinates from the original image to be mapped to negative coordinates in the output image. To solve this, I simply shift all coordinates such that the minimum coordinate is zero, and I center-pad the input image. Below is the resulting warp, which does a good job of aligning the card (note that the card wasn't quite perfectly rectangular to begin with; it was a bit bent):
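For reference, the homography fit described above can be sketched in NumPy roughly as follows. This is a minimal illustration rather than the exact code used for this project: the function names `fit_homography` and `apply_homography` and the corner coordinates are made up for the example, and it assumes the final entry of H is not close to zero.

```python
import numpy as np

def fit_homography(src, dst):
    """Estimate a 3x3 H with dst ~ H @ src (homogeneous), from N >= 4 point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence adds two rows, chosen so that A @ h = 0
        # exactly when H maps (x, y) onto (u, v).
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    A = np.array(rows, dtype=float)
    # The right singular vector with the smallest singular value minimizes
    # ||A h|| subject to ||h|| = 1.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # assumption: H[2, 2] is not (near) zero

def apply_homography(H, pts):
    """Map (N, 2) points through H and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

# Hypothetical hand-labeled card corners and an axis-aligned target rectangle.
corners = [(10, 20), (200, 40), (220, 300), (5, 280)]
target = [(0, 0), (200, 0), (200, 300), (0, 300)]
H = fit_homography(corners, target)
warped_corners = apply_homography(H, corners)
```

With exactly four correspondences the fit is exact, so `warped_corners` should land on `target` up to floating-point error. With more points the same code returns the least squares solution.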

Here's a second example of a more extreme warp (which requires more than just rotation to properly align). The first image is the input and the second is post-alignment to a canonical pose:

The most interesting thing I learned was that Pokemon cards aren't perfectly straight :). On the technical side of things, it was interesting to (re-)learn that you have to manually handle the scaling of the image both after learning the homography as well as after applying it to an image.

Part 2: Panorama Stitching

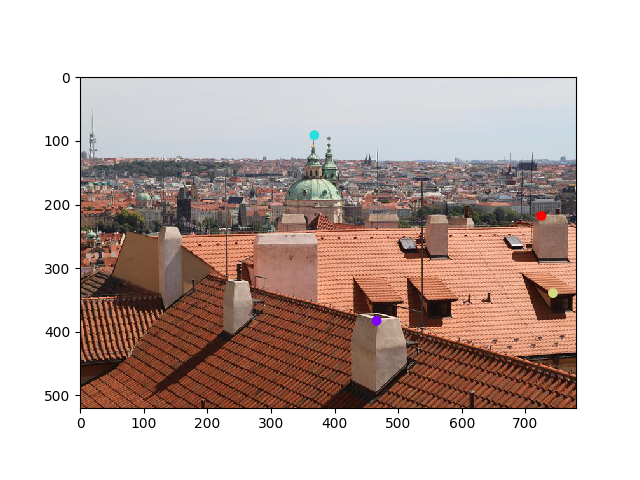

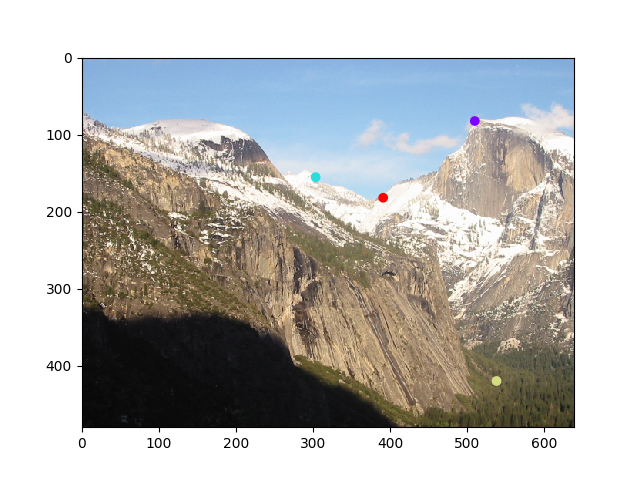

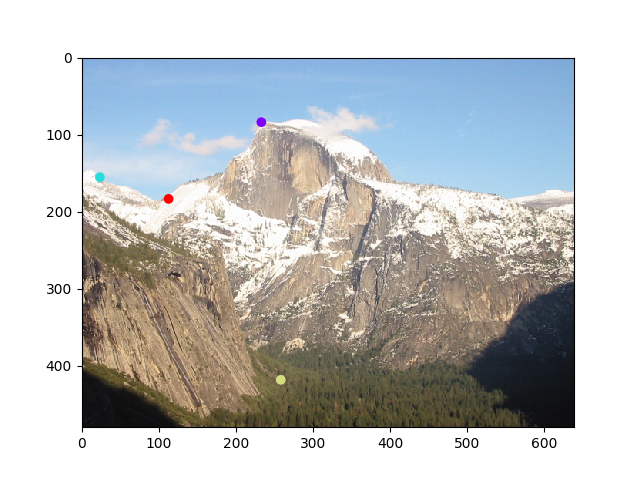

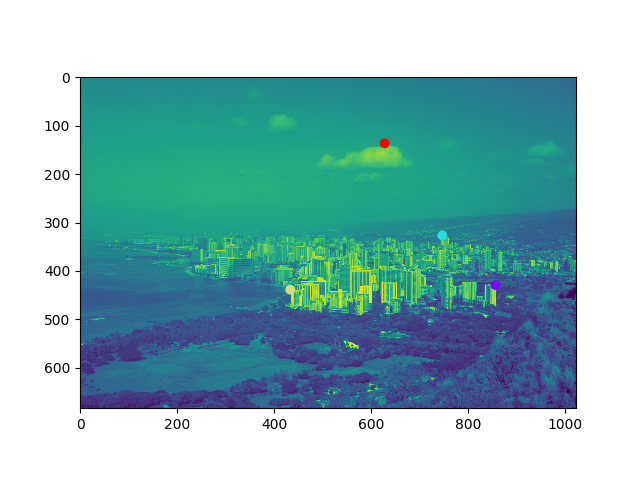

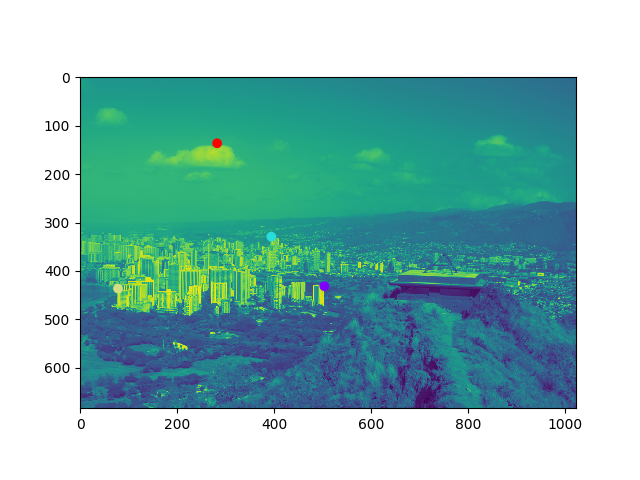

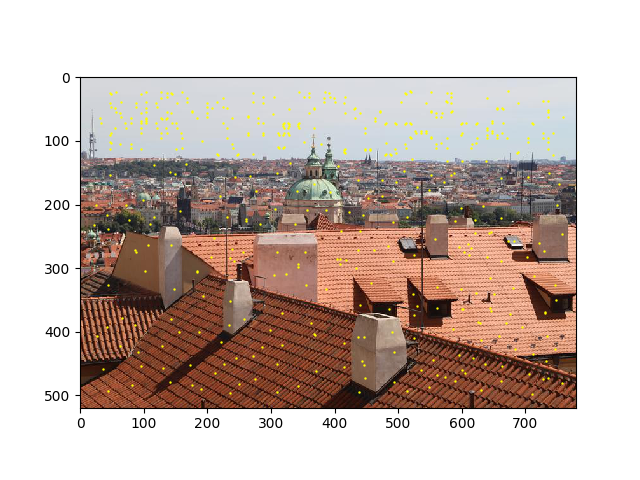

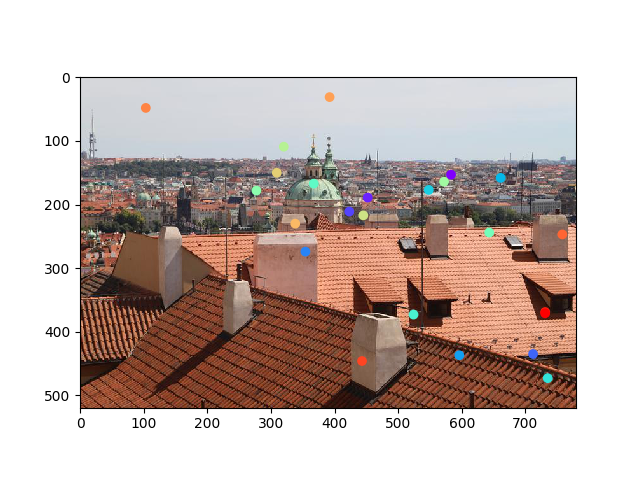

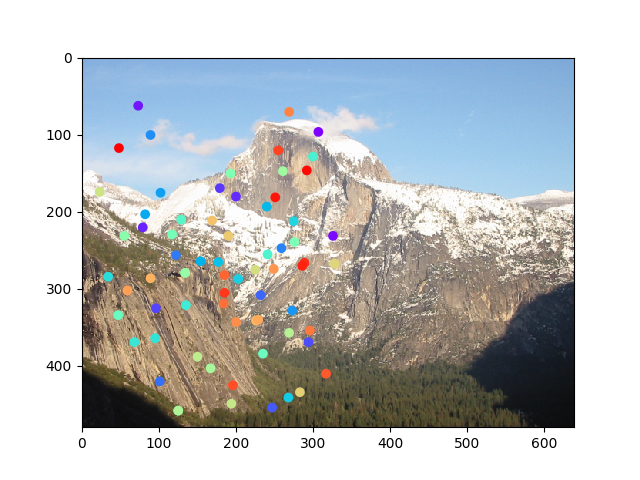

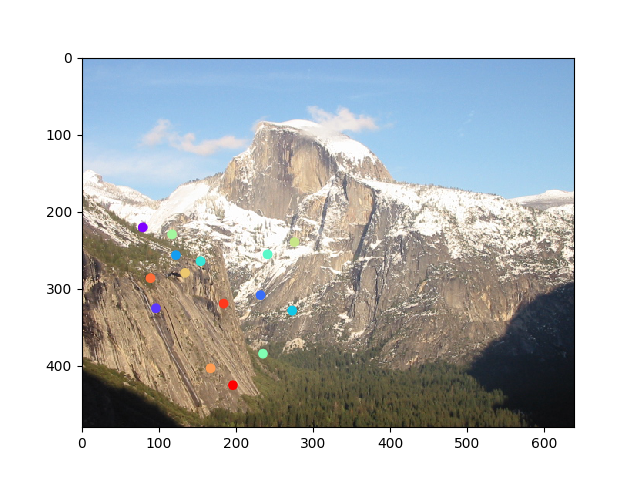

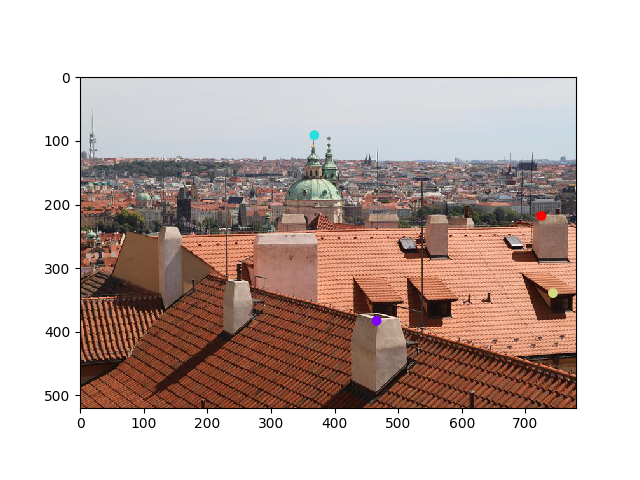

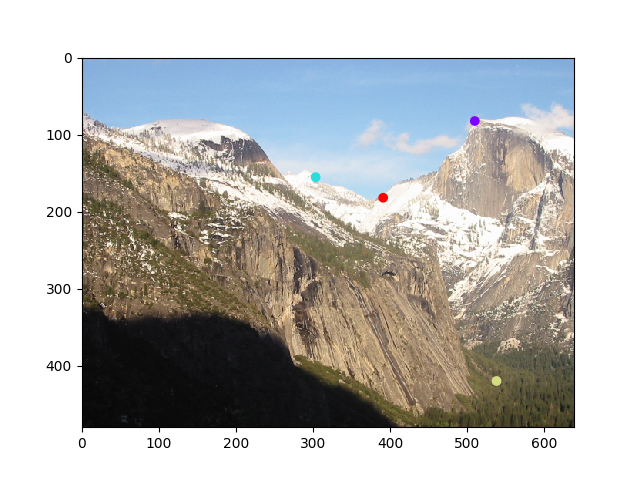

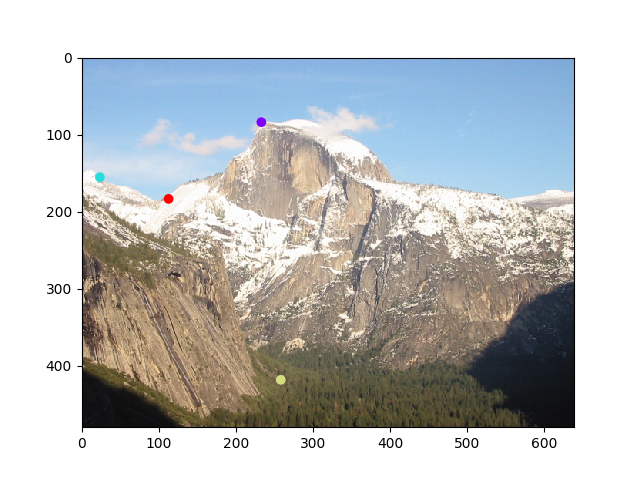

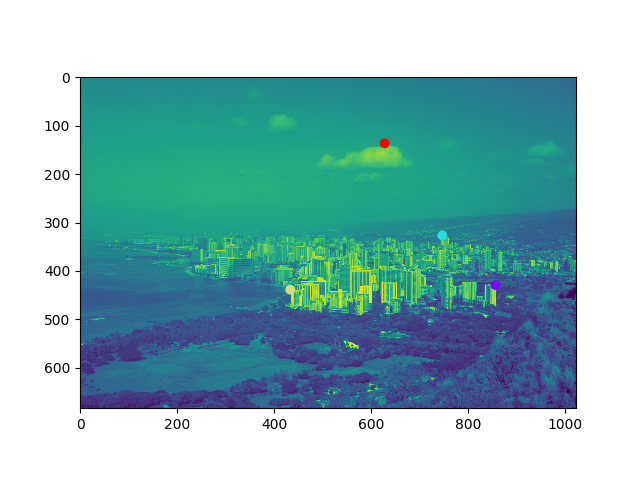

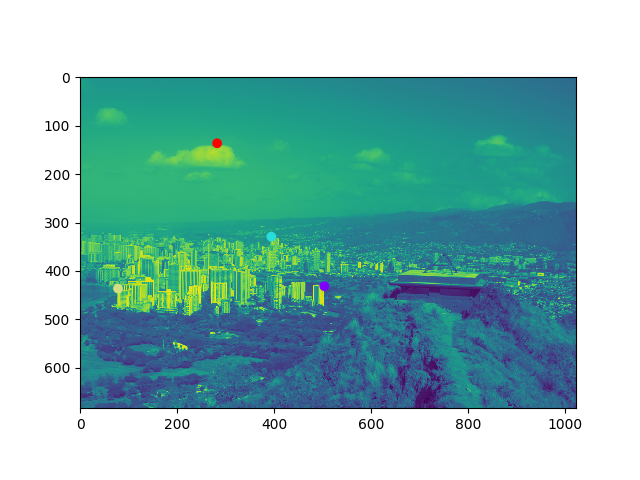

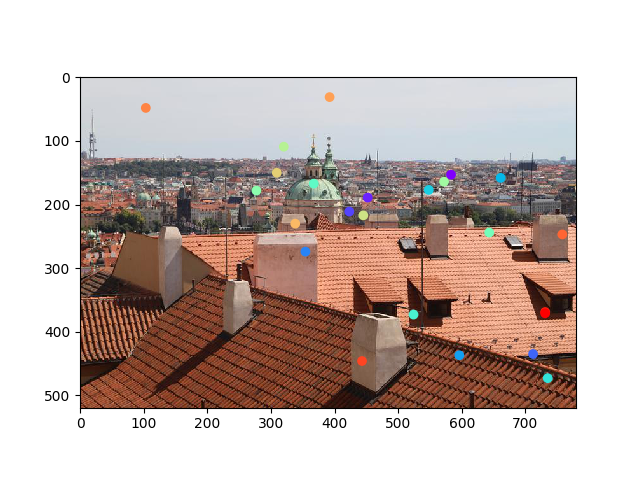

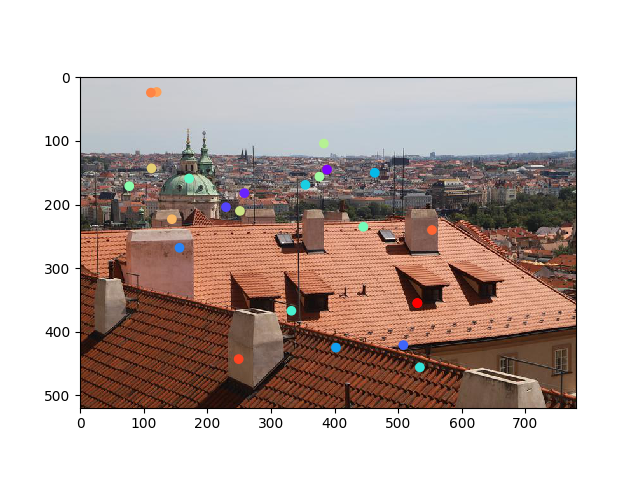

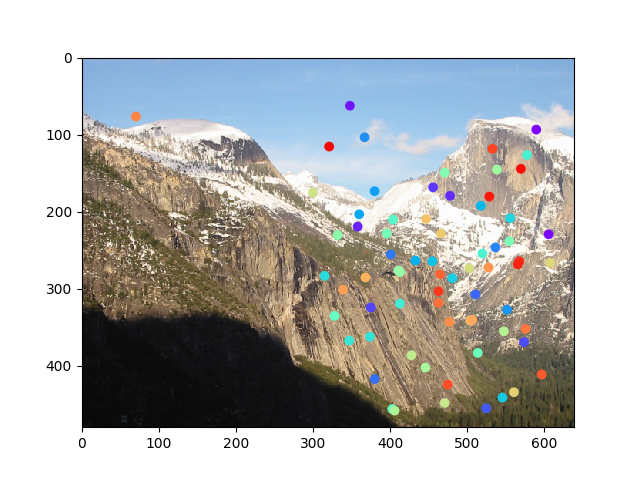

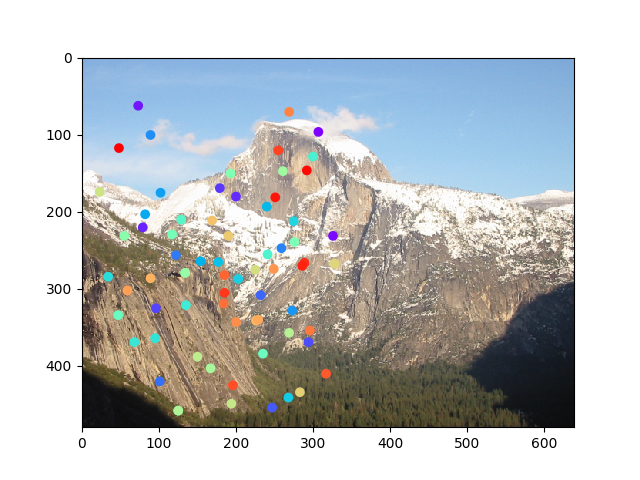

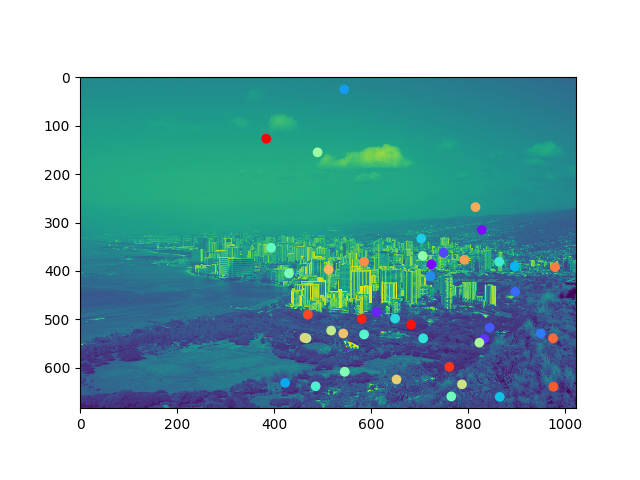

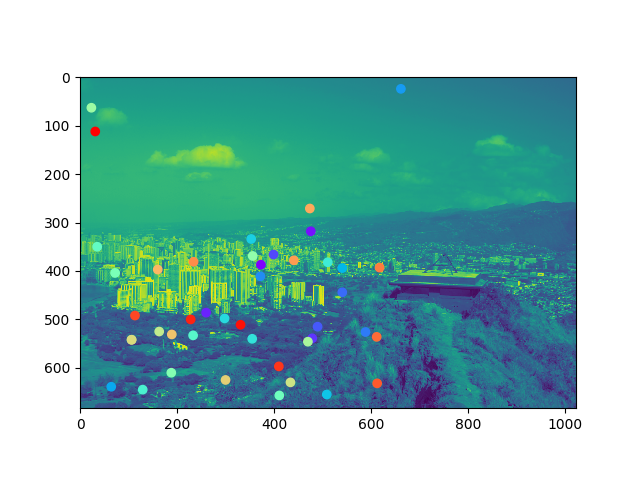

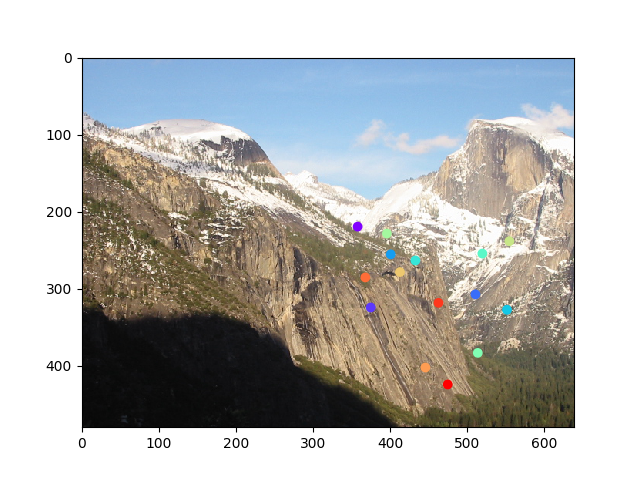

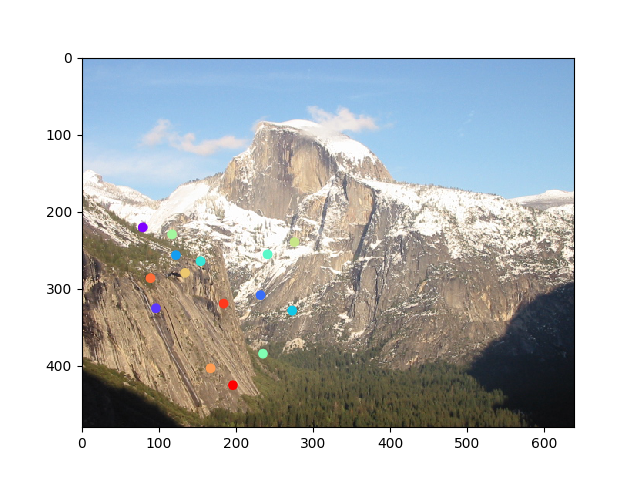

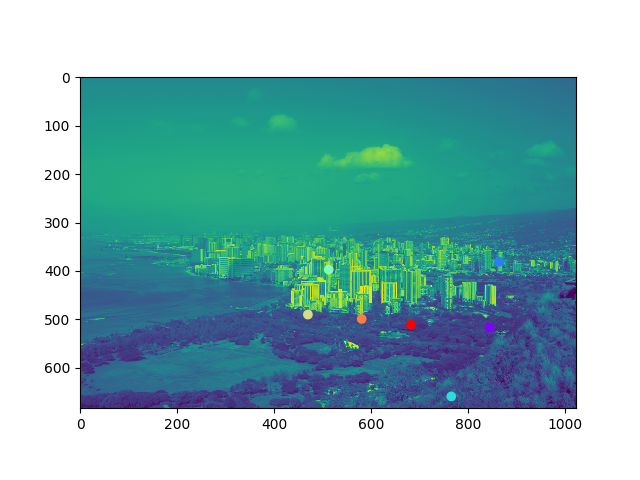

For the second part of this project, we explore the problem of stitching two images together to generate a panorama. This is closely related to the prior problem of image warping: when the two images show the same planar scene, we can use a homography to align them. As a warm-up, we began by assuming we could manually label corresponding points in the two images. Because a homography has eight free parameters (it is more general than an affine transform, which has six), we need a minimum of four point correspondences to fit one, since each correspondence contributes two equations (one for x and one for y). I found that labeling only four points was usually sufficient to get nice panoramas. Below I show my manually labeled points for three pairs of images (points with the same color represent correspondences between the two images):

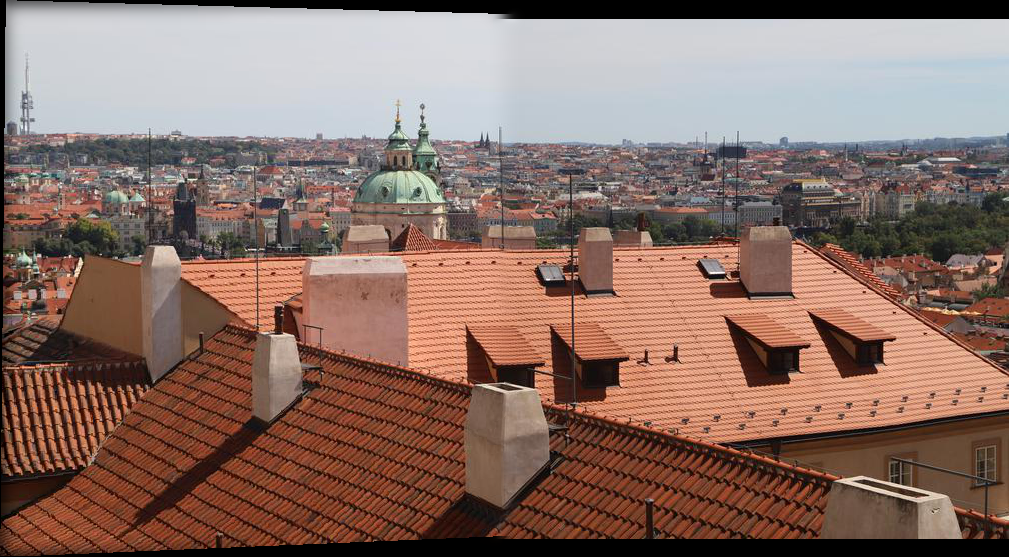

I show the final panoramas at the end of this webpage (to compare against the auto-stitching algorithm).
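To make the parameter counting for manual correspondences concrete, here is a short derivation of the two equations that a single labeled pair contributes. It assumes the common normalization in which the last entry of H is fixed to 1. The symbols (x, y) for a point in the first image and (x', y') for its match in the second are introduced here for illustration.

```latex
% One correspondence (x, y) -> (x', y'), with h_{33} = 1:
\begin{aligned}
x' &= \frac{h_{11} x + h_{12} y + h_{13}}{h_{31} x + h_{32} y + 1}, &
y' &= \frac{h_{21} x + h_{22} y + h_{23}}{h_{31} x + h_{32} y + 1}
\end{aligned}

% Multiplying through by the denominator gives two equations linear in h:
\begin{aligned}
h_{11} x + h_{12} y + h_{13} - h_{31} x x' - h_{32} y x' &= x' \\
h_{21} x + h_{22} y + h_{23} - h_{31} x y' - h_{32} y y' &= y'
\end{aligned}
```

Four correspondences therefore give eight linear equations in the eight unknowns, which is enough to determine H as long as no three of the points are collinear.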

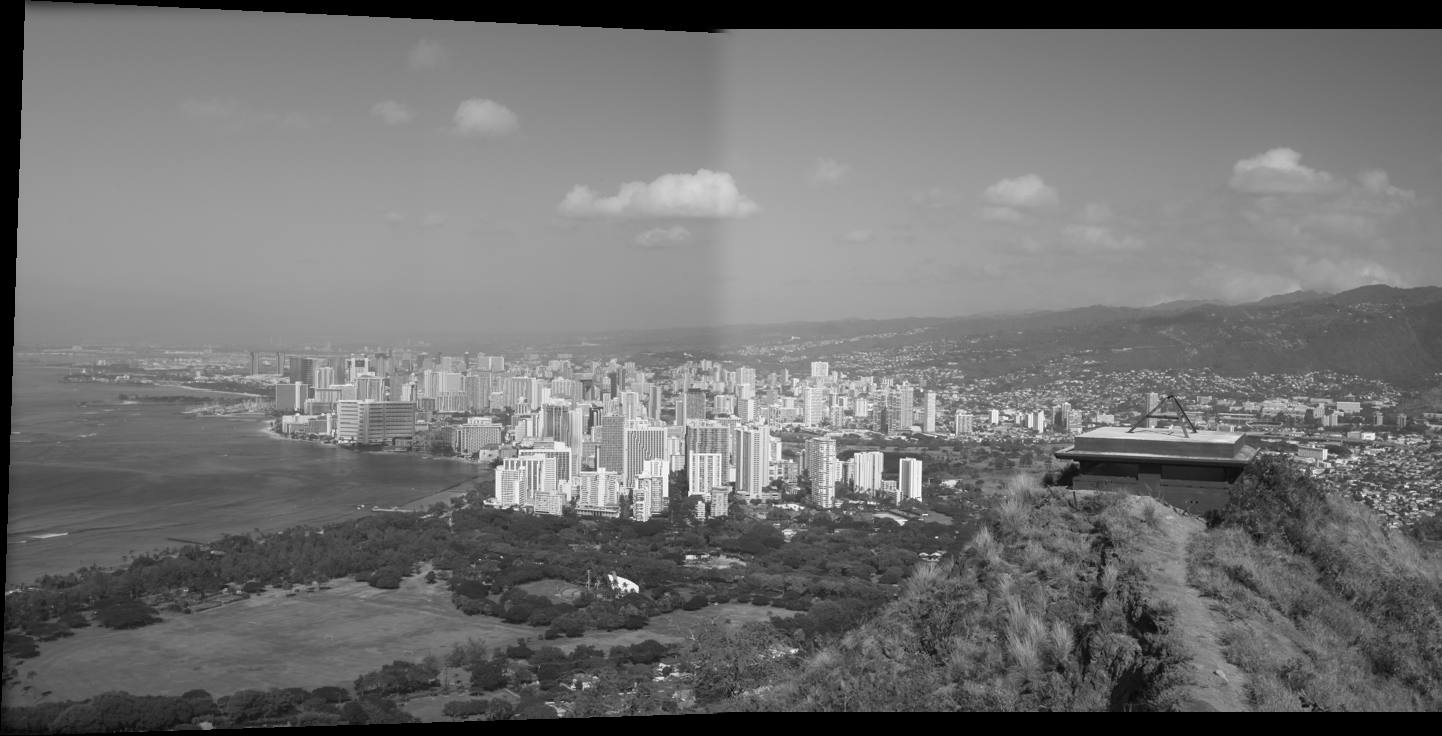

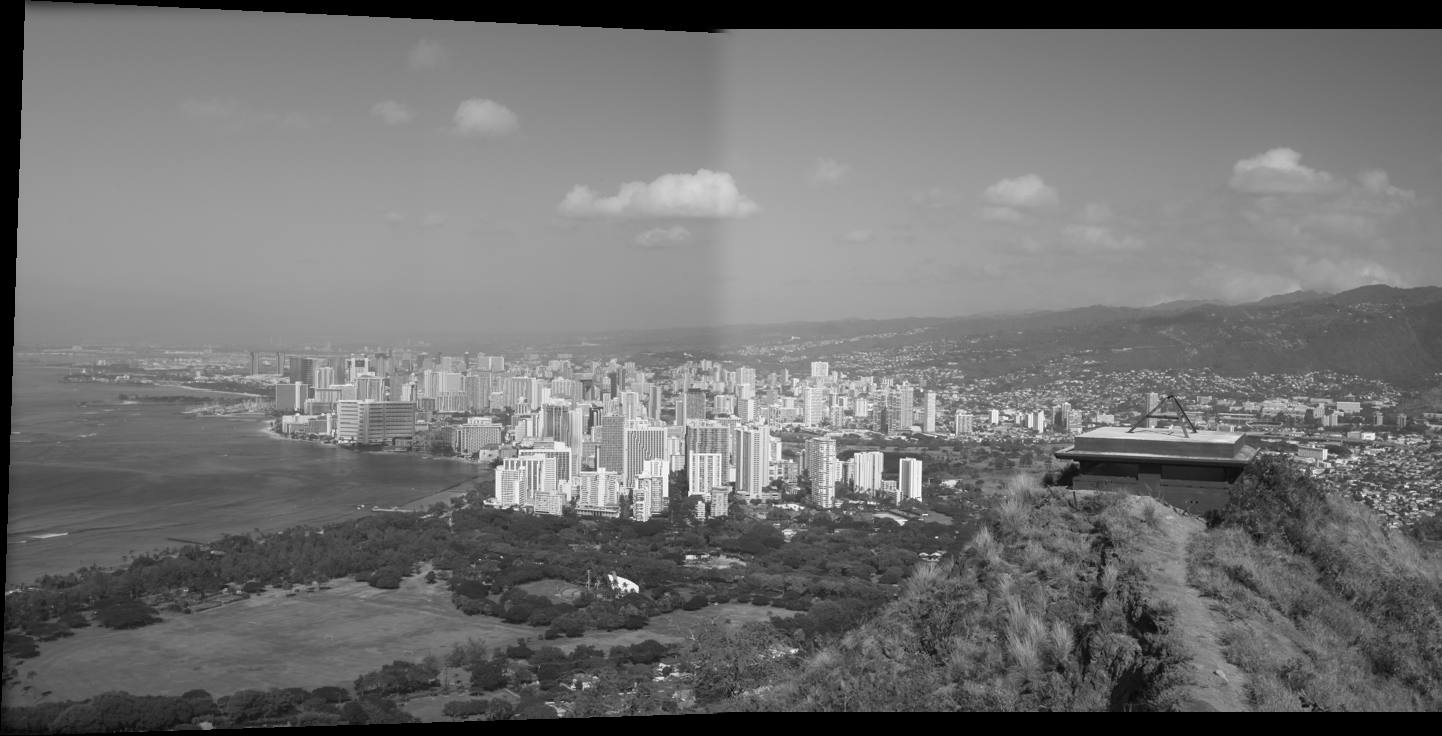

What if we want to learn homographies in an unsupervised fashion, where no points are manually annotated by humans? In this case, we can implement a feature detection and matching system. We implemented a simplified version of MOPS (poor man's SIFT), which finds correspondences between points in two images. The algorithm works by generating Harris corners, using non-max suppression to filter out high-energy corners that are spatially close to one another, and extracting feature patches from an image to determine correspondences with a second image. In the correspondence step, each point's feature patch in the first image is compared to each point's feature patch in the second image (simple nearest neighbors under an L2 norm). Using Lowe's ratio test, I only kept a correspondence if the ratio of the distance to the first nearest neighbor over the distance to the second nearest neighbor was less than 0.4. I tended to get very good features from this step, but I also used RANSAC to remove false-positive correspondences and used the refined set of correspondences (only the "inliers") to compute the final homography.

In both the manual and auto-stitching cases, I blended the two images together using Laplacian pyramid blending with four levels. This does a really nice job of removing seams, although it isn't perfect (the seam in the greyscale Diamondhead image below is still noticeable).
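As a rough illustration of the blending step, here is a small NumPy sketch of Laplacian pyramid blending for greyscale images. It is not the code used for the panoramas on this page: the 5-tap binomial kernel, the nearest-neighbour upsampling, and all function names are assumptions chosen to keep the example short. Color images could be handled by blending each channel separately.

```python
import numpy as np

KERNEL = np.array([1, 4, 6, 4, 1], dtype=float) / 16  # binomial stand-in for a Gaussian

def blur(img):
    """Separable 5-tap blur with edge replication (2D float array)."""
    h, w = img.shape
    padded = np.pad(img, 2, mode="edge")
    rows = sum(k * padded[:, i:i + w] for i, k in enumerate(KERNEL))
    return sum(k * rows[i:i + h, :] for i, k in enumerate(KERNEL))

def down(img):
    """Blur, then keep every second row and column."""
    return blur(img)[::2, ::2]

def up(img, shape):
    """Nearest-neighbour 2x upsample, cropped to `shape`, then smoothed."""
    big = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)
    return blur(big[:shape[0], :shape[1]])

def gaussian_pyramid(img, levels):
    pyr = [np.asarray(img, dtype=float)]
    for _ in range(levels - 1):
        pyr.append(down(pyr[-1]))
    return pyr

def laplacian_pyramid(img, levels):
    g = gaussian_pyramid(img, levels)
    # Each level stores the detail lost by downsampling; the last level
    # keeps the low-resolution residual.
    lap = [g[i] - up(g[i + 1], g[i].shape) for i in range(levels - 1)]
    return lap + [g[-1]]

def blend(a, b, mask, levels=4):
    """Blend a and b; mask is 1 where `a` should show and 0 where `b` should."""
    la, lb = laplacian_pyramid(a, levels), laplacian_pyramid(b, levels)
    gm = gaussian_pyramid(mask, levels)
    mixed = [m * x + (1 - m) * y for m, x, y in zip(gm, la, lb)]
    out = mixed[-1]
    for detail in reversed(mixed[:-1]):
        out = up(out, detail.shape) + detail
    return out
```

The mask is smoothed more heavily at coarser levels, so low frequencies are mixed over a wide band while fine detail switches over sharply near the seam. That is why this kind of blending hides seams better than a hard cut or a single feathered mask.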

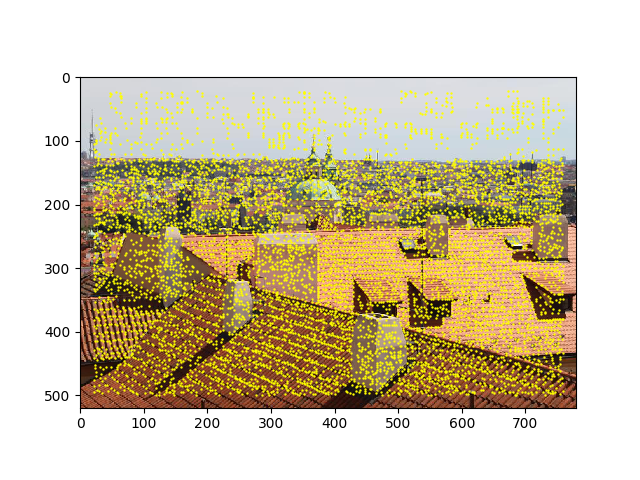

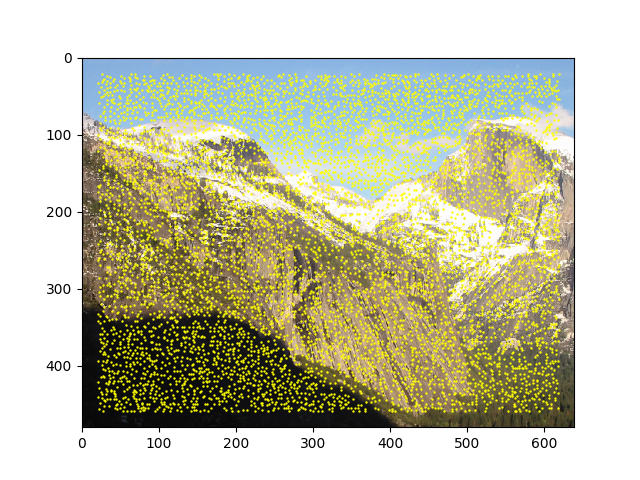

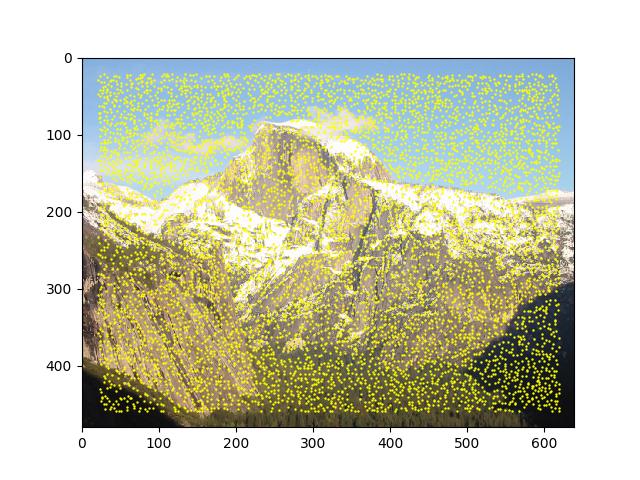

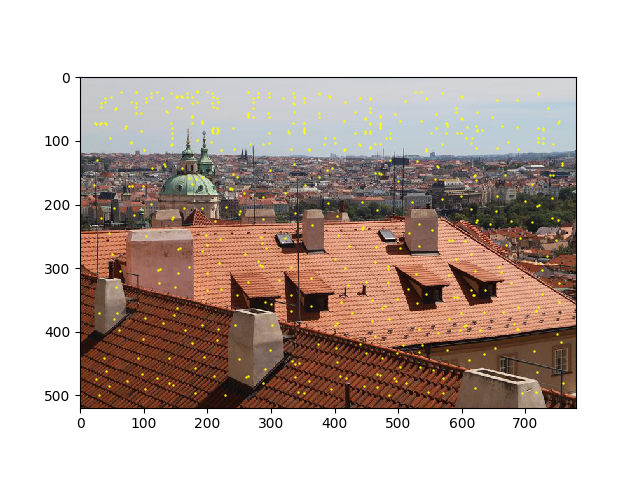

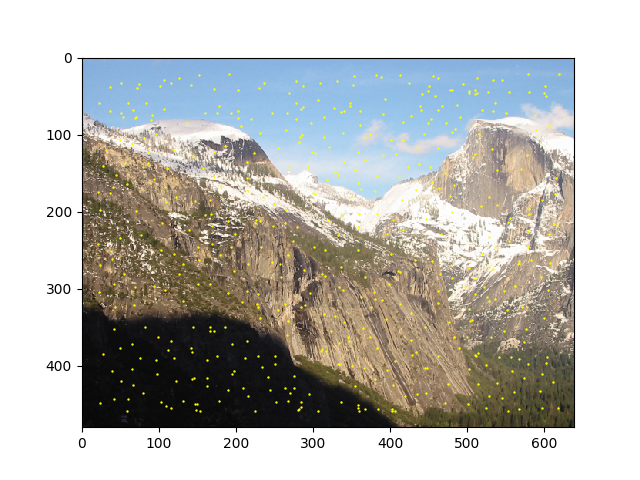

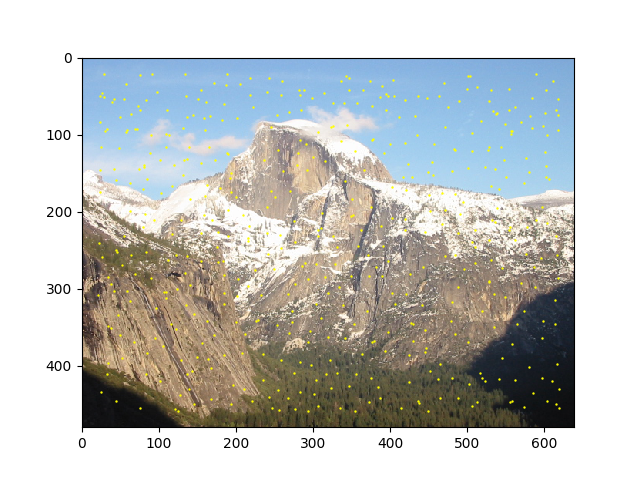

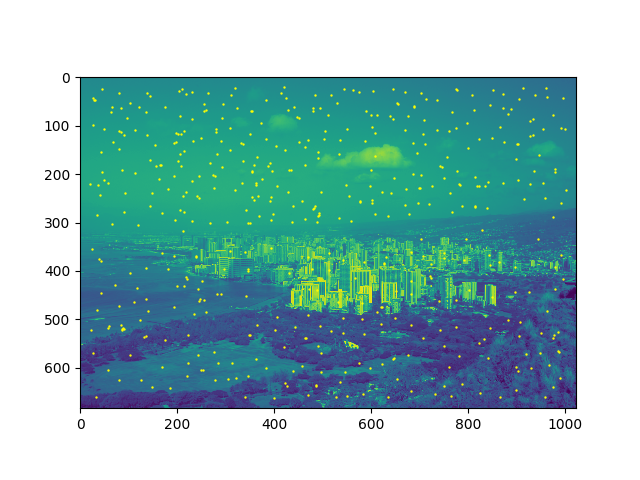

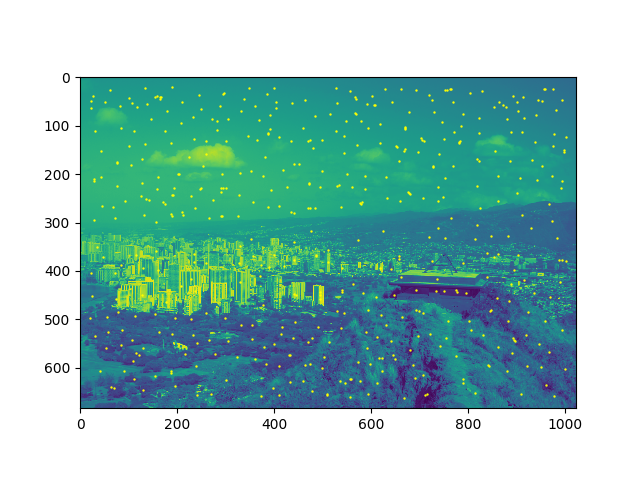

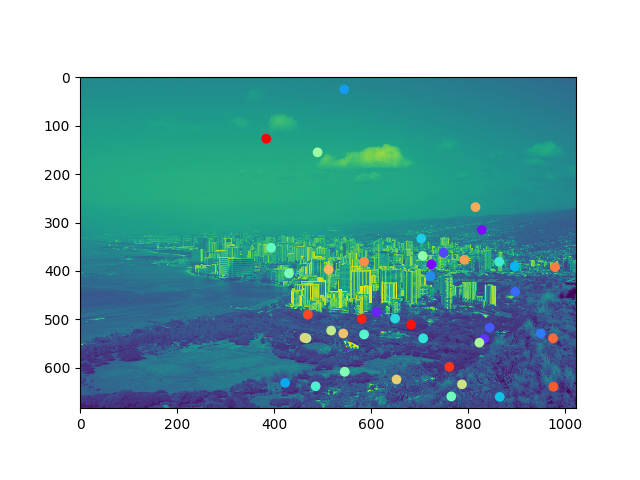

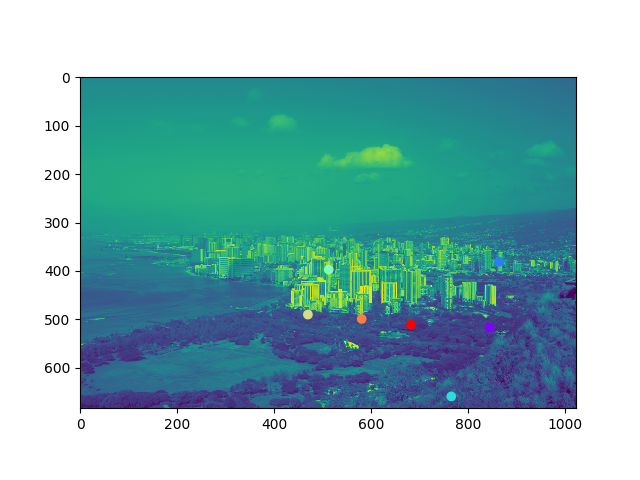

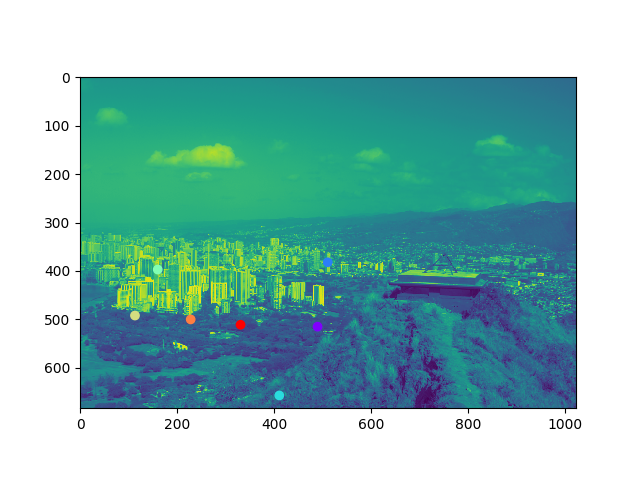

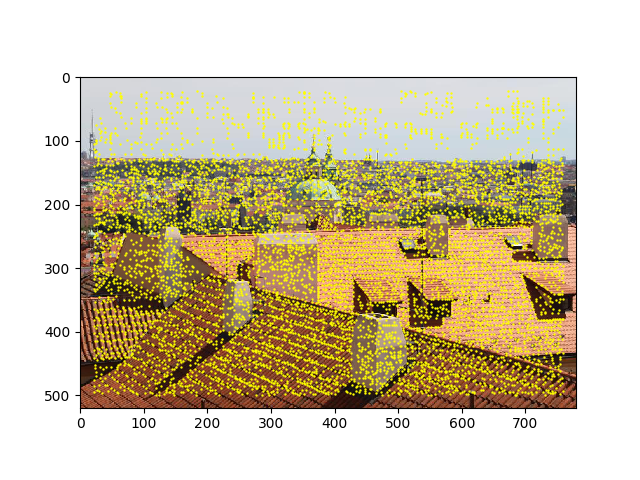

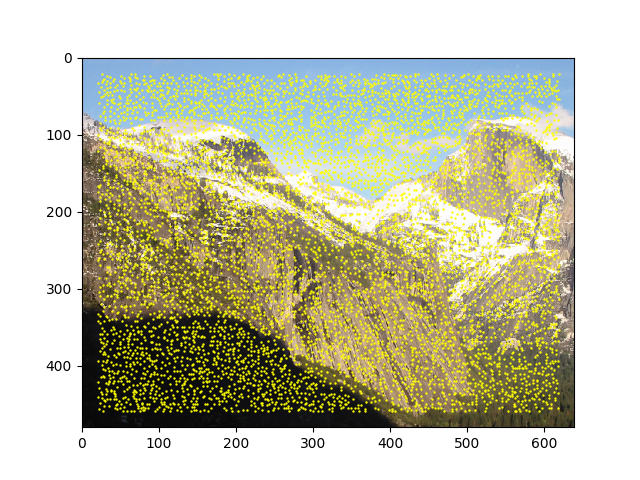

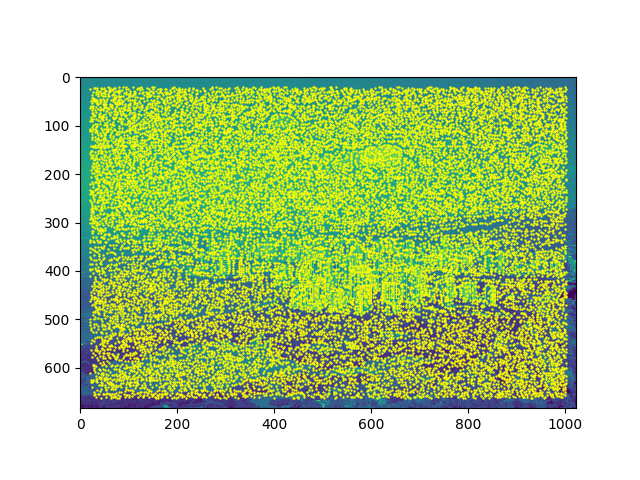

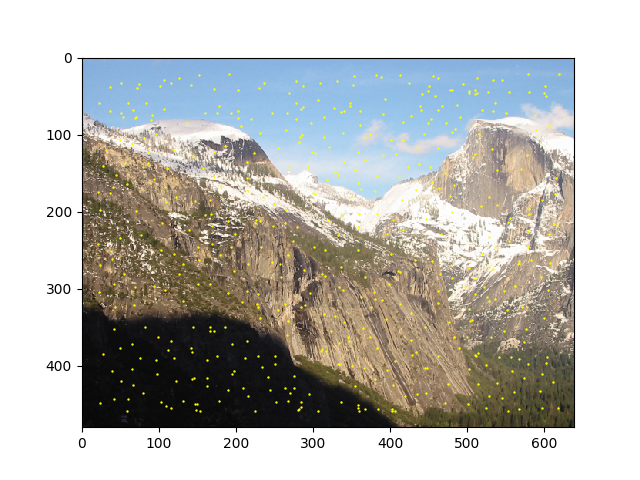

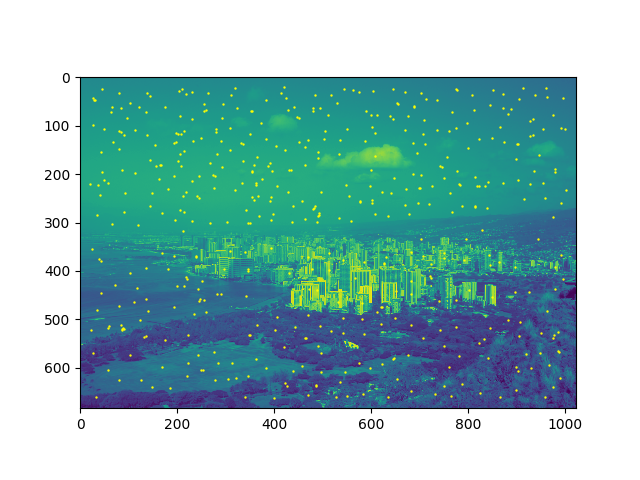

Below are the Harris corners I found in the three pairs:

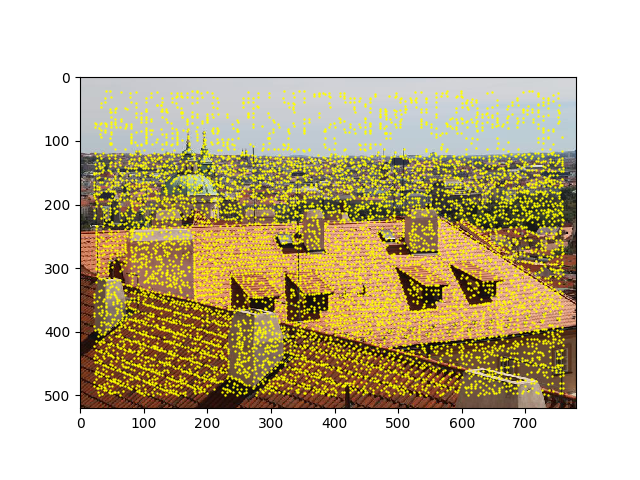

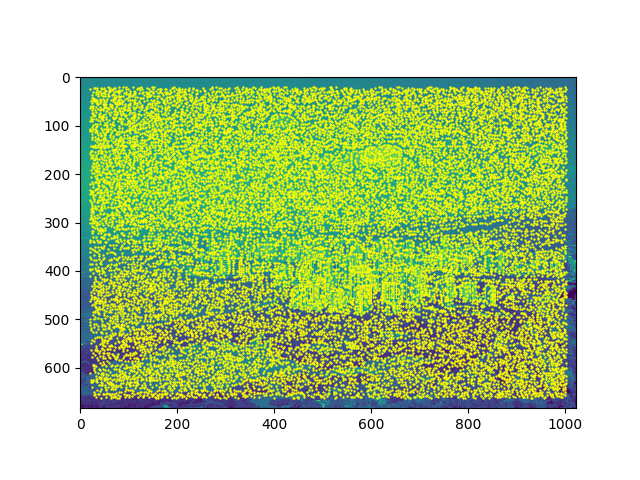

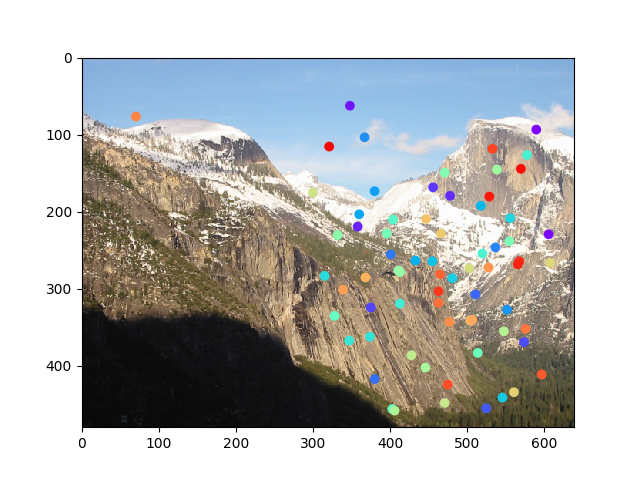

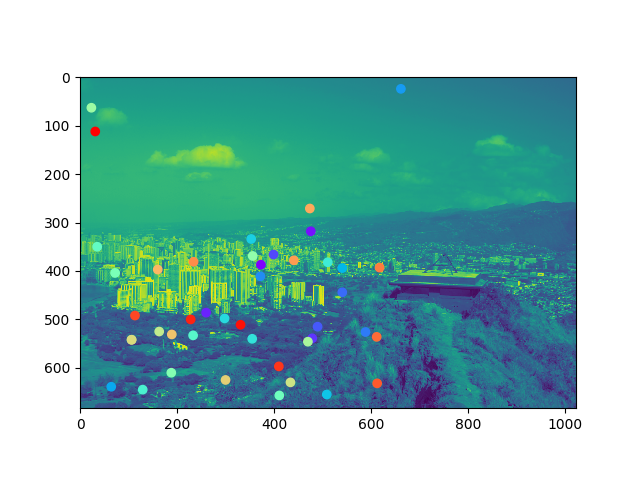

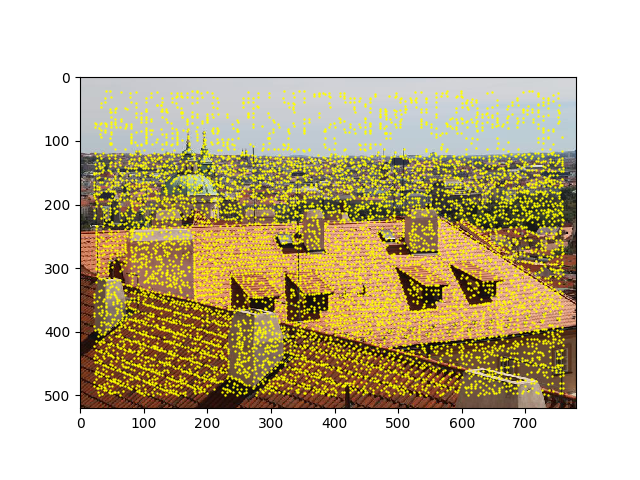

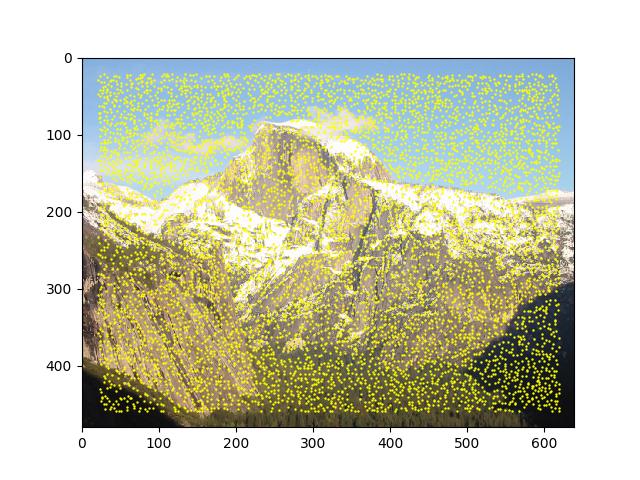

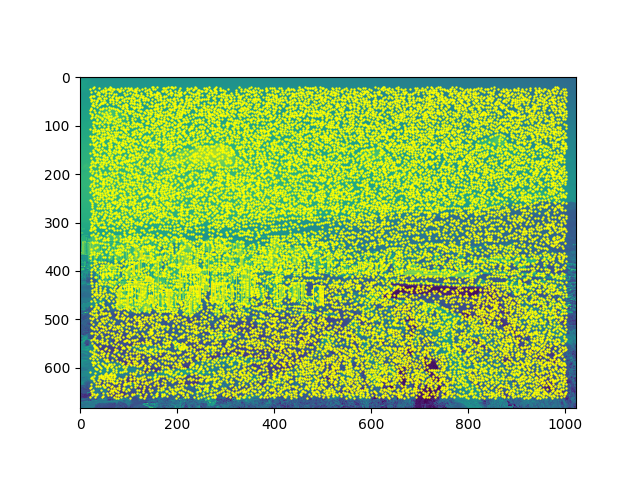

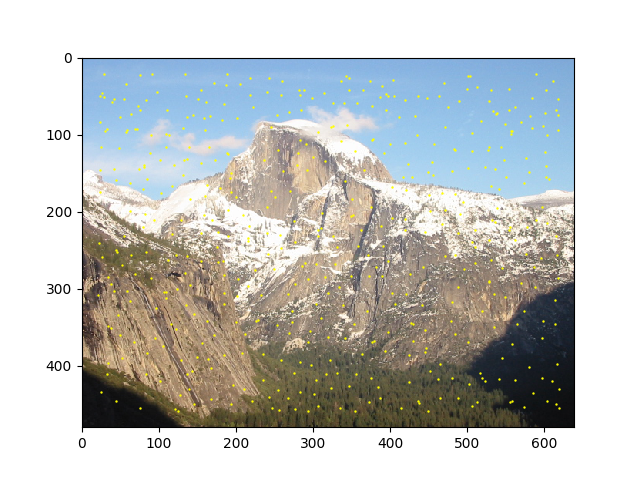

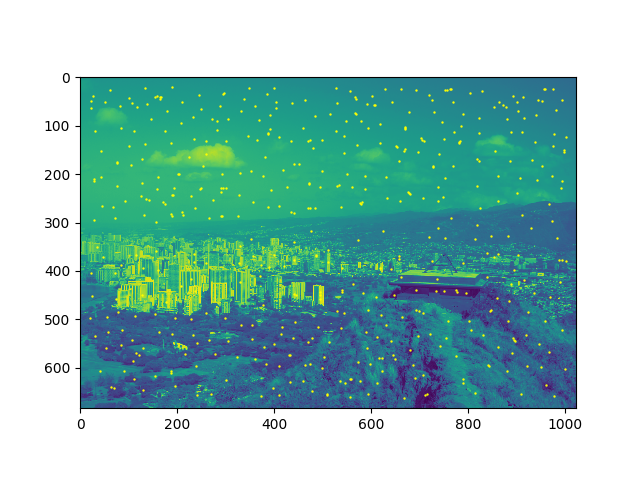
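For readers unfamiliar with Harris corners, the sketch below shows one way to compute the corner response in plain NumPy. It is a simplified illustration and not the detector used for the figures above: it uses a box window instead of the usual Gaussian window, and the names `window_sum` and `harris_response` as well as the constant `k=0.05` are assumptions.

```python
import numpy as np

def window_sum(img, radius):
    """Sum of img over a (2*radius+1)^2 box around each pixel (edges replicated)."""
    h, w = img.shape
    size = 2 * radius + 1
    padded = np.pad(img, radius, mode="edge")
    return sum(padded[dy:dy + h, dx:dx + w]
               for dy in range(size) for dx in range(size))

def harris_response(img, radius=2, k=0.05):
    """Harris response det(M) - k * trace(M)^2 of the local structure tensor M."""
    iy, ix = np.gradient(np.asarray(img, dtype=float))  # d/drow, d/dcol
    sxx = window_sum(ix * ix, radius)
    syy = window_sum(iy * iy, radius)
    sxy = window_sum(ix * iy, radius)
    return (sxx * syy - sxy ** 2) - k * (sxx + syy) ** 2

# Toy image: a bright square on a dark background.
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0
response = harris_response(img)
```

On this toy image the response is positive at the square's corners (gradients in both directions), negative along its straight edges (gradient in only one direction), and zero in flat regions. Corner candidates would then be local maxima of `response` above some threshold.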

Here are the points once they've been processed with adaptive non-max suppression (leaving 500 points per image):

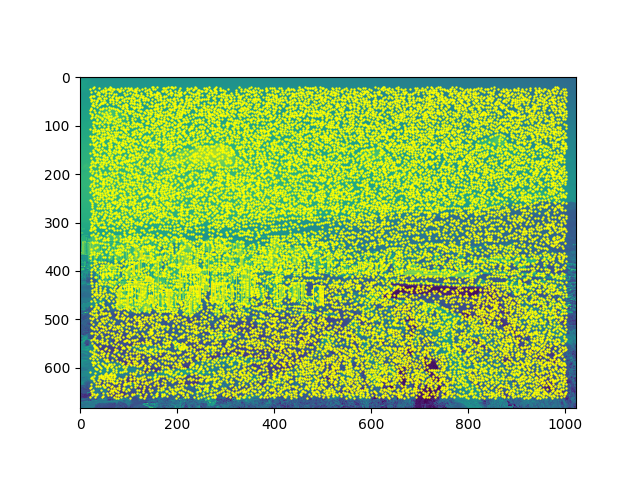

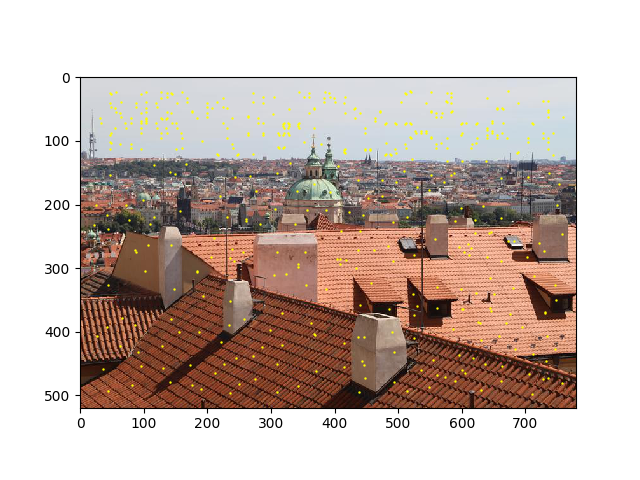

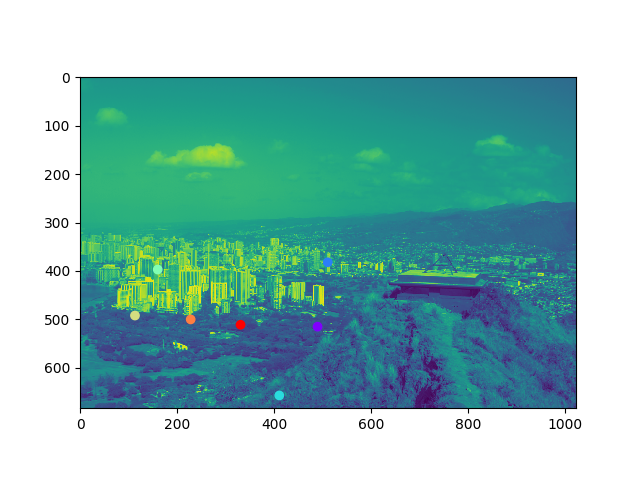
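The adaptive non-max suppression step can be sketched as below. This follows the usual MOPS-style formulation as I understand it: each corner gets a suppression radius equal to the distance to the nearest sufficiently stronger corner, and the corners with the largest radii are kept. The function name `anms`, the robustness constant `c_robust=0.9`, and the assumption of positive corner strengths are illustrative choices and not taken from the project code.

```python
import numpy as np

def anms(points, strengths, keep=500, c_robust=0.9):
    """Return indices of up to `keep` corners, favouring strong AND well-spread ones."""
    points = np.asarray(points, dtype=float)
    strengths = np.asarray(strengths, dtype=float)
    # Pairwise squared distances between all corners, shape (N, N).
    diff = points[:, None, :] - points[None, :, :]
    dist2 = (diff ** 2).sum(axis=2)
    # stronger[i, j] is True when corner j is sufficiently stronger than corner i.
    stronger = strengths[:, None] < c_robust * strengths[None, :]
    # Squared suppression radius: distance to the nearest sufficiently
    # stronger corner (infinite for the globally strongest corner).
    radius2 = np.where(stronger, dist2, np.inf).min(axis=1)
    return np.argsort(-radius2)[:keep]

# Toy example: three corners clustered together plus two isolated weak ones.
pts = [(0, 0), (1, 0), (0, 1), (50, 50), (100, 0)]
strength = [10.0, 8.0, 7.0, 3.0, 2.0]
kept = anms(pts, strength, keep=3)
```

In the toy example a plain "top 3 by strength" rule would keep the three clustered corners, whereas this rule keeps the strongest corner plus the two isolated ones. The pairwise distance matrix needs O(N^2) memory, which is fine for a few thousand corners but would need chunking beyond that.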

After the correspondence (matching) step, we are left with the following correspondences (a point with the same color across the two images is a correspondence):

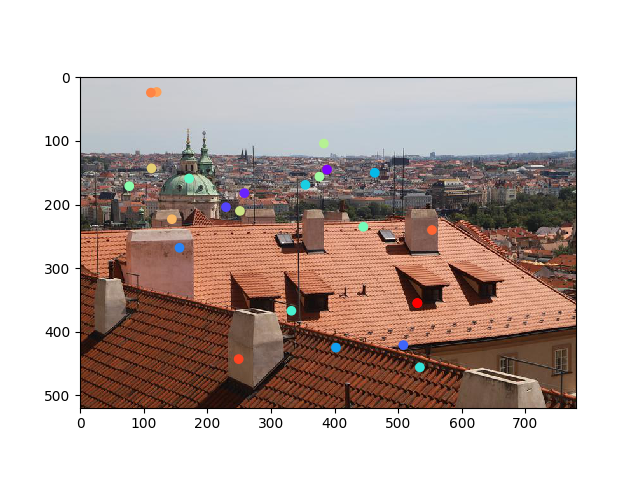

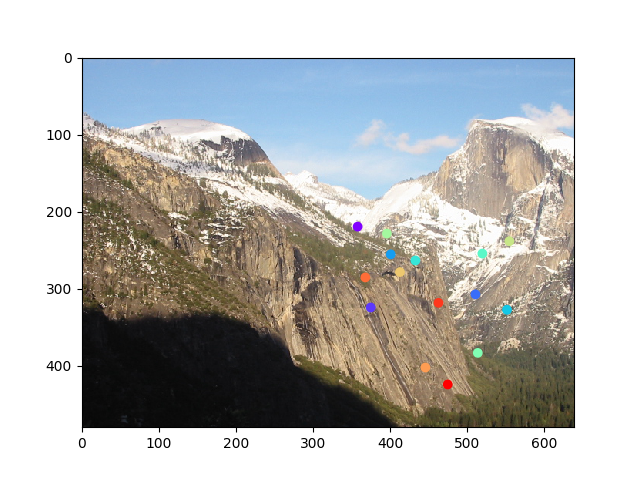

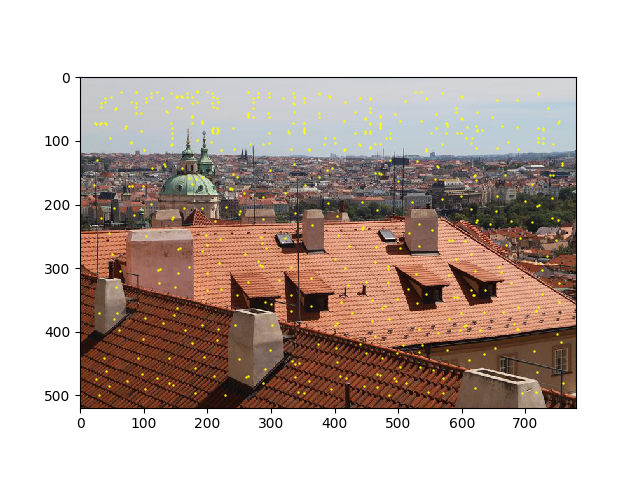
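The matching rule behind these correspondences can be sketched in a few lines of NumPy. This is an illustration under assumptions: descriptors are taken to be flattened feature patches stored as rows, the ratio is computed on plain (not squared) L2 distances, and the name `match_features` is made up. Only the 0.4 threshold comes from the text above.

```python
import numpy as np

def match_features(desc_a, desc_b, ratio=0.4):
    """Return (i, j) index pairs that pass Lowe's ratio test.

    desc_a: (Na, D) descriptors from image A; desc_b: (Nb, D) with Nb >= 2.
    """
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    # dist[i, j] = L2 distance between descriptor i of A and descriptor j of B.
    dist = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    order = np.argsort(dist, axis=1)
    idx = np.arange(len(desc_a))
    best, second = order[:, 0], order[:, 1]
    # Keep a match only if the best candidate is much closer than the runner-up.
    keep = dist[idx, best] < ratio * dist[idx, second]
    return np.column_stack([idx[keep], best[keep]])

# Toy 2D "descriptors": the third one in A is ambiguous and gets rejected.
desc_a = [[0.0, 0.0], [10.0, 10.0], [5.0, 5.0]]
desc_b = [[0.1, 0.0], [10.0, 10.1], [20.0, 20.0]]
matches = match_features(desc_a, desc_b)
```

The ratio test rejects a point whose two nearest candidates are about equally close, since such a match is likely to be arbitrary. A lower threshold yields fewer but more reliable correspondences.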

Finally, here are the inliers computed by RANSAC. These are the final points that were used to estimate the homography:

Below are comparisons between the manually aligned panoramas and the auto-stitched panoramas (the first image in each pair is the panorama generated from manually annotated correspondences, while the second image is the auto-stitched panorama). The automatically stitched panoramas tend to feature less extreme warping and are more visually pleasing. I was surprised that our simplified version of MOPS was so effective; the raw correspondences produced by the algorithm were so good that RANSAC seemed nearly unnecessary.
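For completeness, here is a minimal sketch of the RANSAC filtering step mentioned above. It is not the project's actual implementation: the iteration count, the 2-pixel inlier tolerance, the fixed random seed, and the function names are all assumptions, and the returned homography is only defined up to scale.

```python
import numpy as np

def fit_homography(src, dst):
    """Least squares homography (up to scale) from N >= 4 point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    # Smallest right singular vector of the stacked system.
    return np.linalg.svd(np.array(rows, dtype=float))[2][-1].reshape(3, 3)

def project(H, pts):
    """Map (N, 2) points through H and divide out the homogeneous coordinate."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, iters=500, tol=2.0, seed=0):
    """Return (H, inlier_mask) using 4-point random samples."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(iters):
        sample = rng.choice(len(src), size=4, replace=False)
        H = fit_homography(src[sample], dst[sample])
        # Degenerate samples can produce infinities or NaNs; those simply
        # fail the inlier test below.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(H, src) - dst, axis=1)
        inliers = err < tol
        if inliers.sum() > best.sum():
            best = inliers
    # Refit on all inliers of the best model (assumes at least 4 were found).
    return fit_homography(src[best], dst[best]), best
```

Each iteration fits an exact homography to four random correspondences and counts how many of the remaining pairs it explains to within `tol` pixels. The largest consensus set is then used for one final least squares fit, which matches the "inliers only" estimate described earlier.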