PROJECT #1: SEAM CARVING

In this project, I implemented the seam carving algorithm described in Avidan and Shamir's paper, Seam Carving for Content-Aware Image Resizing. The idea of seam carving is to resize images in a content-aware way. Instead of rescaling or cropping an image, we remove 'seams': connected paths from one side of the image to the other that contain exactly one pixel in each row (for vertical seam carving). The implementation is split into two parts:

1. Determine the importance of each pixel using an energy function.

2. Repeatedly remove the lowest-importance seam until the image reaches the desired size.

Algorithm details:

The energy function I used was simply the sum of the derivatives in the x and y directions at each pixel. To find the lowest-cost vertical seam, let e(r,c) be the energy at row r and column c, and let f(r,c) be the cost of the lowest-cost path from the top of the image to the pixel at row r and column c. In the top row, f(0,c) = e(0,c). For every other row, f(r,c) = e(r,c) + min(f(r-1, c-1), f(r-1, c), f(r-1, c+1)). In other words, the function uses the values of the 3 pixels in the previous row adjacent to (r,c) to compute the lowest-cost path ending at (r,c).

Once I had computed f(r,c) for all pixels, I found the pixel in the last row with the smallest f(r,c) value. From there, I backtracked one row at a time, moving at each step to the adjacent pixel in the row above with the smallest f(r,c) value and recording the column index of each pixel to be removed. Once I reached the top of the image, I had the index in each row that makes up the seam. I removed the seam with simple numpy operations.
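The steps described above can be sketched in NumPy roughly as follows. This is a minimal illustration, not the exact code used for the results on this page: the function names are hypothetical, the energy is assumed to be the sum of the absolute x and y derivatives computed with `np.gradient` on a grayscale copy, and out-of-bounds neighbors at the left and right edges are assumed to have infinite cost.

```python
import numpy as np

def energy_map(gray):
    # Assumed energy: |d/dx| + |d/dy| at each pixel.
    dy, dx = np.gradient(gray.astype(np.float64))
    return np.abs(dx) + np.abs(dy)

def cumulative_cost(energy):
    # f(r, c) = e(r, c) + min(f(r-1, c-1), f(r-1, c), f(r-1, c+1))
    f = energy.astype(np.float64)  # astype returns a copy
    for r in range(1, f.shape[0]):
        prev = f[r - 1]
        left = np.concatenate(([np.inf], prev[:-1]))   # f(r-1, c-1)
        right = np.concatenate((prev[1:], [np.inf]))   # f(r-1, c+1)
        f[r] += np.minimum(np.minimum(left, prev), right)
    return f

def backtrack_seam(f):
    # Start at the cheapest pixel in the last row, then walk upward,
    # always moving to the cheapest of the (up to) 3 adjacent pixels.
    rows, cols = f.shape
    seam = np.empty(rows, dtype=np.intp)
    seam[-1] = np.argmin(f[-1])
    for r in range(rows - 2, -1, -1):
        c = int(seam[r + 1])
        lo, hi = max(c - 1, 0), min(c + 2, cols)
        seam[r] = lo + np.argmin(f[r, lo:hi])
    return seam

def remove_vertical_seam(image, seam):
    # Drop one pixel per row using a boolean mask; works for 2D or 3D arrays.
    rows, cols = image.shape[:2]
    keep = np.ones((rows, cols), dtype=bool)
    keep[np.arange(rows), seam] = False
    return image[keep].reshape(rows, cols - 1, *image.shape[2:])

def carve_columns(image, num_seams):
    # Recompute the energy after every removal, then remove the next seam.
    for _ in range(num_seams):
        gray = image.mean(axis=2) if image.ndim == 3 else image
        f = cumulative_cost(energy_map(gray))
        image = remove_vertical_seam(image, backtrack_seam(f))
    return image
```

With this sketch, `carve_columns(image, 150)` would remove 150 columns. One common way to remove rows instead is to swap the first two axes, carve columns, and swap back, for example `np.swapaxes(carve_columns(np.swapaxes(image, 0, 1), 90), 0, 1)`; whether the results here were produced that way is an assumption.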

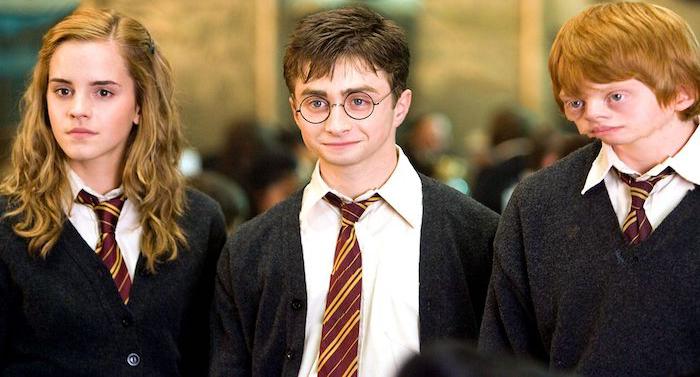

Here are some rather successful results:

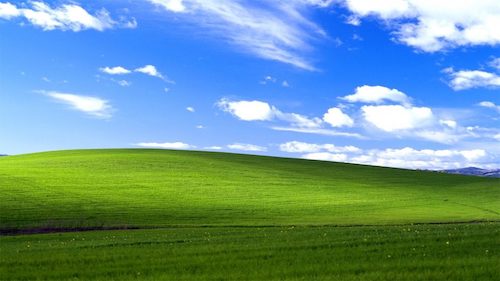

Vertical seam removal: 150 columns

| Before | After |
|---|---|

Horizontal seam removal: 90 rows


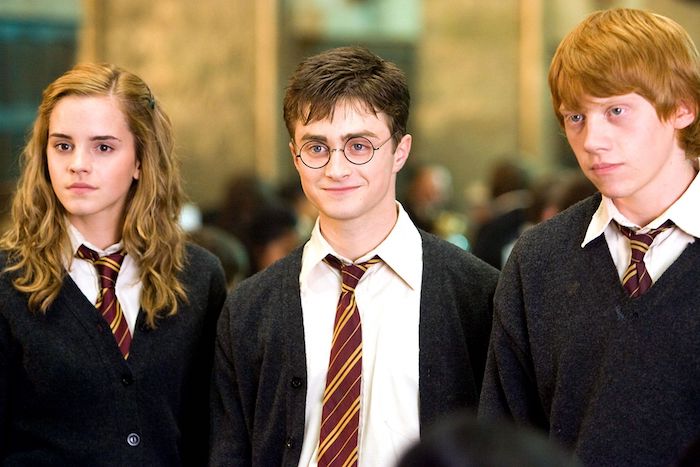

And here are some failures. I classified these as failures because I did not consider the resizing to be very content aware. For example, faces came out distorted, vertical or horizontal lines in the image were not preserved, or there were noticeable artifacts that would make you scratch your head if you saw the image for the first time.

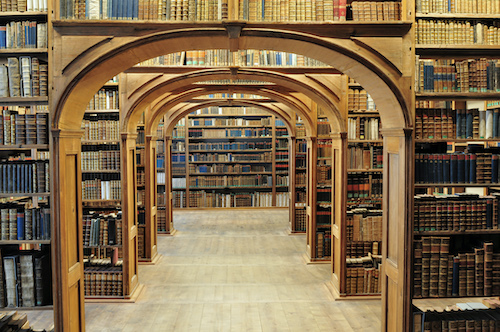

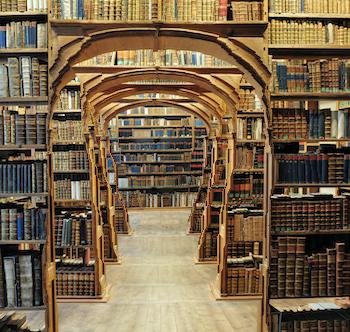

Vertical seam removal: 150 columns

| Before | After |
|---|---|

Horizontal seam removal: 90 rows
