CS 194-26 Image Manipulation and Computational Photography

Final Project: A Neural Algorithm of Artistic Style

Yin Tang cs194-26-acd, Jamarcus Liu cs194-26-adh

Overview

In this project, we reimplement "A Neural Algorithm of Artistic Style" by Gatys et al. The paper describes how a neural network can transfer the style of one image onto another while preserving the content of the latter.

Model Architecture

As suggested in the paper, we use a pretrained VGG-19 network as our feature extractor. The network weights stay fixed, and the generated image is optimized. Content is represented by conv4-2 (layer 21). Style is represented by a combination of conv1-1, conv2-1, conv3-1, conv4-1, and conv5-1 (layers 0, 5, 10, 19, and 28). Otherwise, our setup closely follows the one described in the paper. After some experimentation, we settled on a learning rate of 0.01, a content weight of 1, and a style weight of 1e10 to generate the style-transfer images.

Figure: VGG-19 Model
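The layer numbers above are positions in VGG-19's convolutional feature stack. The sketch below is written in plain Python so it runs without a deep-learning framework. It makes two assumptions. First, it derives those positions from the standard VGG-19 configuration using a torchvision-style layout, where every convolution is followed by a ReLU and every max-pool takes one slot. Second, it writes out the content and style losses as the paper defines them, with `alpha` and `beta` playing the roles of the content weight (1) and style weight (1e10). All helper names (`conv_layer_indices`, `gram`, `content_loss`, `layer_style_loss`, `total_loss`) are hypothetical. The exact loss normalization used in this project is not stated, so the paper's normalization is used here. In a real implementation, the feature maps would come from the pretrained network, and the total loss would be minimized with respect to the generated image's pixels via autograd.

```python
# VGG-19 configuration: integers are conv output channels, "M" is a max-pool.
# Assumption: torchvision-style layout (conv, ReLU, conv, ReLU, ..., pool).
VGG19_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
             512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def conv_layer_indices(cfg):
    """Map names like 'conv4_2' to their index in the flattened feature stack."""
    names, idx, block, conv = {}, 0, 1, 1
    for v in cfg:
        if v == "M":
            idx += 1        # the max-pool occupies one slot
            block += 1      # next block starts at convN_1
            conv = 1
        else:
            names[f"conv{block}_{conv}"] = idx
            idx += 2        # the conv plus its ReLU occupy two slots
            conv += 1
    return names


LAYERS = conv_layer_indices(VGG19_CFG)
CONTENT_LAYERS = ["conv4_2"]
STYLE_LAYERS = ["conv1_1", "conv2_1", "conv3_1", "conv4_1", "conv5_1"]


def gram(features):
    """Gram matrix G_ij = sum_k F_ik F_jk of an N x M feature map (N channels, M positions)."""
    return [[sum(a * b for a, b in zip(fi, fj)) for fj in features] for fi in features]


def content_loss(F, P):
    """Paper's content loss: 1/2 * sum of squared differences of feature maps."""
    return 0.5 * sum((f - p) ** 2 for rf, rp in zip(F, P) for f, p in zip(rf, rp))


def layer_style_loss(F, S):
    """Paper's per-layer style loss: sum (G - A)^2 / (4 N^2 M^2)."""
    n, m = len(F), len(F[0])
    G, A = gram(F), gram(S)
    sq = sum((g - a) ** 2 for rg, ra in zip(G, A) for g, a in zip(rg, ra))
    return sq / (4 * n ** 2 * m ** 2)


def total_loss(gen, content, style, alpha=1.0, beta=1e10):
    """gen/content/style: dicts mapping layer name -> N x M feature map (lists)."""
    l_content = sum(content_loss(gen[l], content[l]) for l in CONTENT_LAYERS)
    w = 1.0 / len(STYLE_LAYERS)  # equal weight per style layer, as in the paper
    l_style = sum(w * layer_style_loss(gen[l], style[l]) for l in STYLE_LAYERS)
    return alpha * l_content + beta * l_style


if __name__ == "__main__":
    print({name: LAYERS[name] for name in CONTENT_LAYERS + STYLE_LAYERS})
    # {'conv4_2': 21, 'conv1_1': 0, 'conv2_1': 5, 'conv3_1': 10, 'conv4_1': 19, 'conv5_1': 28}
```

The derived indices match the ones listed above. The large style weight compensates for the `1 / (4 N^2 M^2)` factor, which makes the raw style term tiny compared with the content term.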

Examples

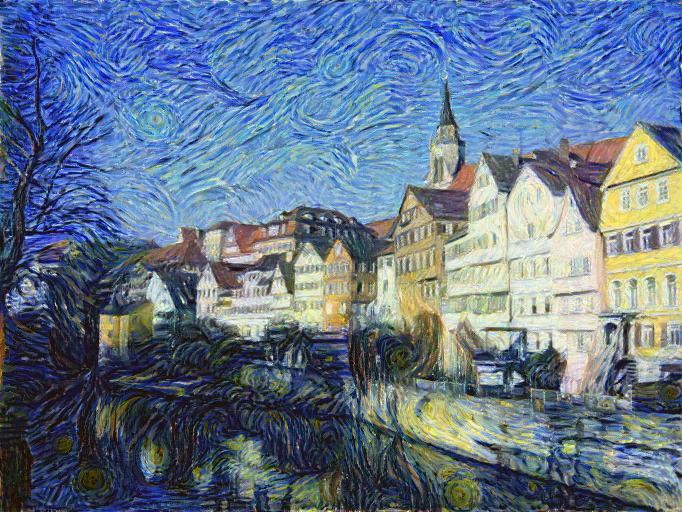

We chose three content images, one of which is the Neckarfront picture from the paper, and five style images, four of which are from the paper.

Content Images

Style Images

Neckarfront

The results are reasonably good, but not as sharp as those in the paper. We believe this is partly because our images have relatively low resolution, and partly because we may not have run the optimization for as long as the authors did. Here are some other results:

Wuhan

Momo

Failure Cases

When we applied the style image we chose to the Neckarfront picture, something unexpected happened. The sky turned purple and took on the lighting of the style image, but the area around the buildings barely changed. We suspect this is related to the structure of the style image. Its lighting sits on a white background, which makes it hard for the network to learn the style and apply it to the content image. The same problem appears in the Wuhan result for this style, although it is less pronounced.

Figure: Style and Result
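One way to make the hypothesis above concrete is to note that a Gram matrix sums feature co-activations over all spatial positions. A style feature that covers only a small part of the style image therefore contributes little to the style statistics. The toy sketch below is a hedged illustration under made-up assumptions, not a diagnosis of this failure case. It uses a hypothetical two-channel "style image" with 100 positions: channel 0 responds to the white background, and channel 1 responds to thin strokes that cover only 5 positions. The function name `gram_normalized` and all numbers are illustrative only.

```python
def gram_normalized(features):
    """Gram matrix divided by the number of spatial positions M (mean co-activation)."""
    m = len(features[0])
    return [[sum(a * b for a, b in zip(fi, fj)) / m for fj in features] for fi in features]


# Hypothetical toy style image: M = 100 positions, strokes cover only 5 of them.
M, STROKE = 100, 5
background = [0.0] * STROKE + [1.0] * (M - STROKE)  # channel 0: white background
stroke = [1.0] * STROKE + [0.0] * (M - STROKE)      # channel 1: thin strokes

G = gram_normalized([background, stroke])
print(G)  # the background entry G[0][0] is 0.95, the stroke entry G[1][1] only 0.05
```

In this toy case, the stroke statistics are 19 times weaker than the background statistics. Matching this Gram matrix mostly pushes the generated image toward "background-like" features, which is consistent with, but does not prove, the explanation above.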