CS194-26: Image Manipulation and Computational Photography

Sping 2020

Final Project

Zixian Zang, CS194-26-act

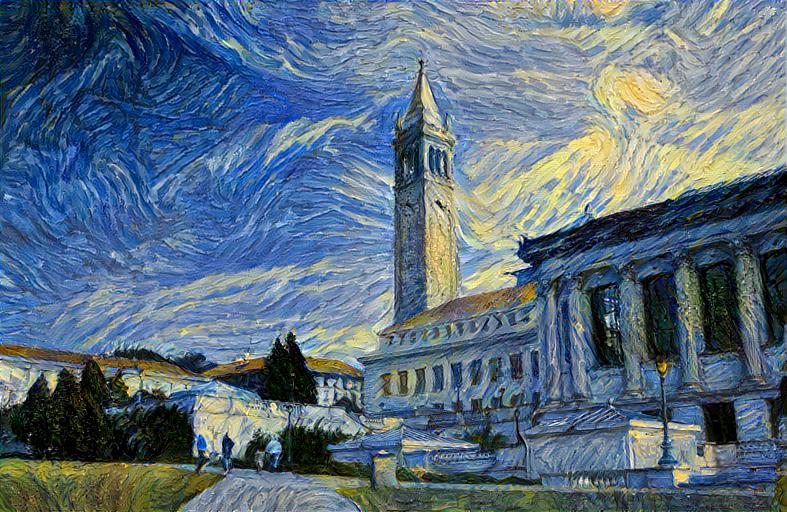

First Project: Neural Style Transfer

Overview

In this project, we reimplement “A Neural Algorithm of Artistic Style,”

a paper that describes how we can use the intermediate feature steps of a trained CNN

to transfer the style of one image to another.

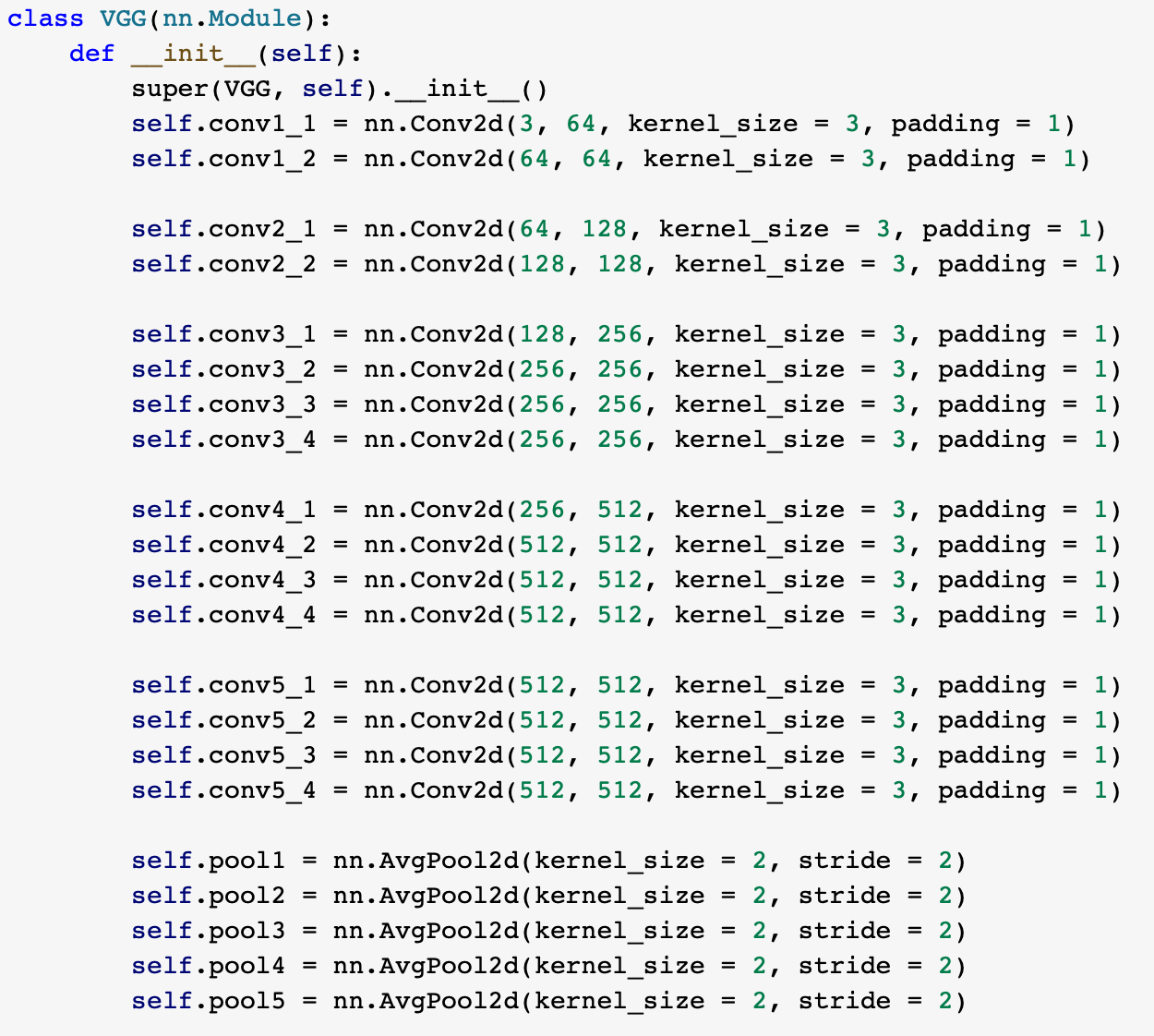

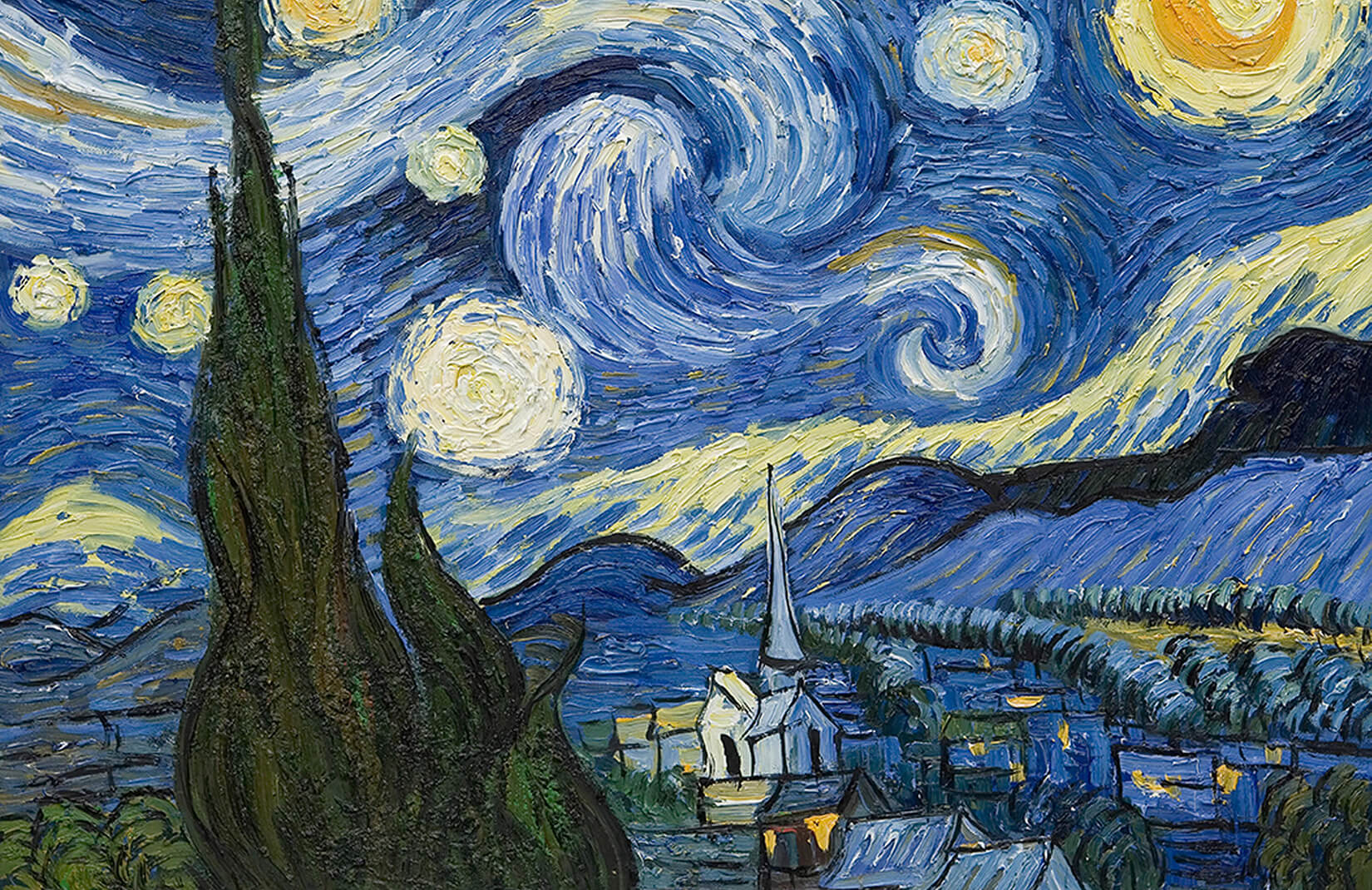

Pretrained VGG-19 CNN

In this paper, VGG-19 CNN is used as a protocol network. During the training

process, I used output from layer4-2 as content representation and outputs from layer1-1, 1-2,

3-1, 4-1 and 5-1 as style representations. I used L-BFGS optimizer to minimize the content and style loss.

Style loss is calculated with Gram Matrix. I trained the CNN for 500 iterations.

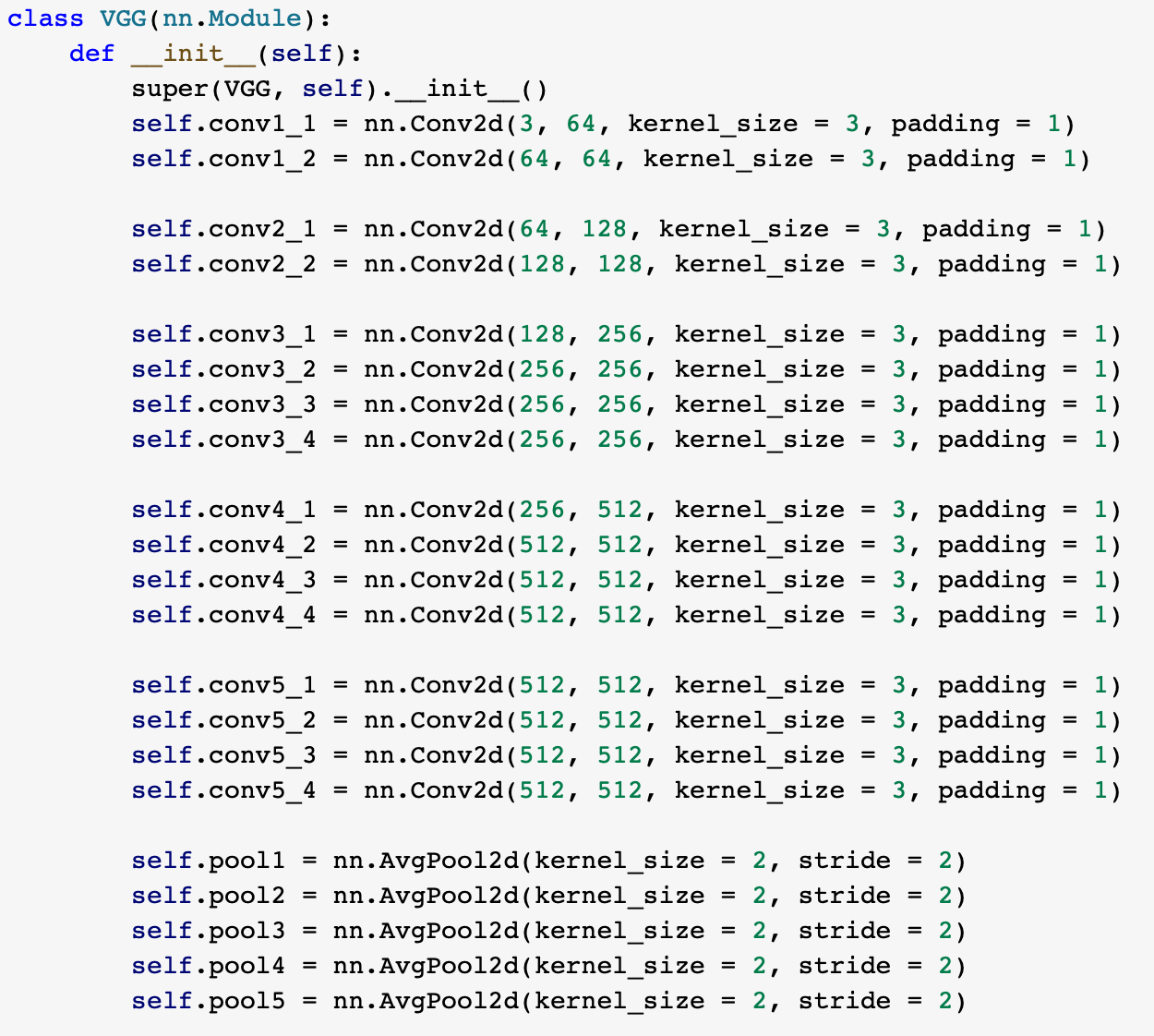

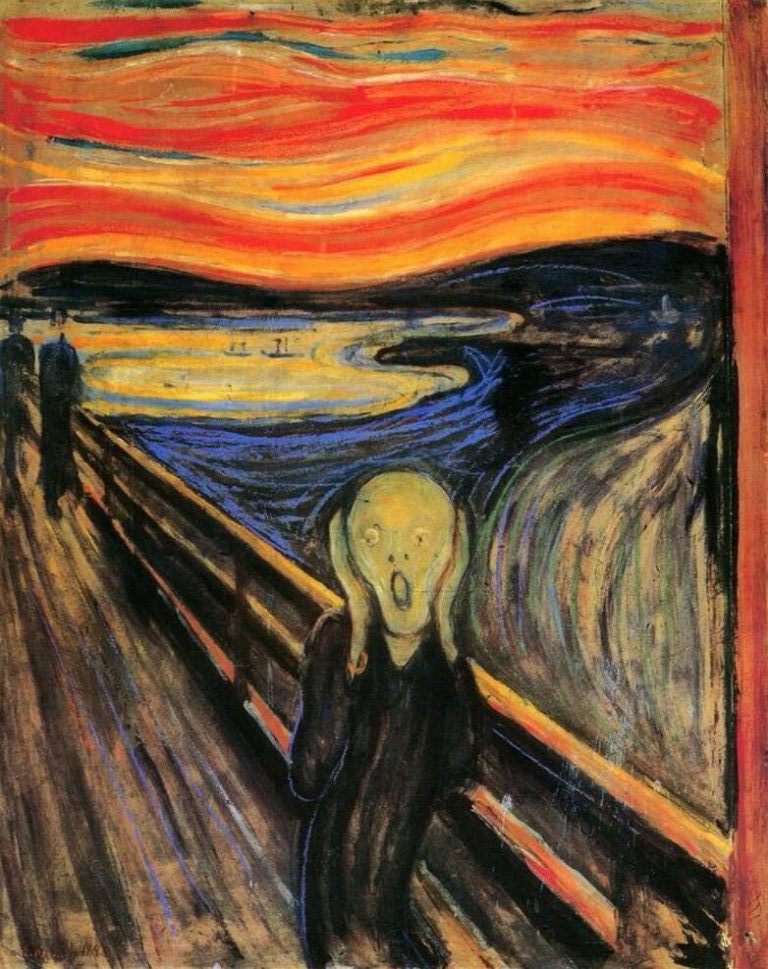

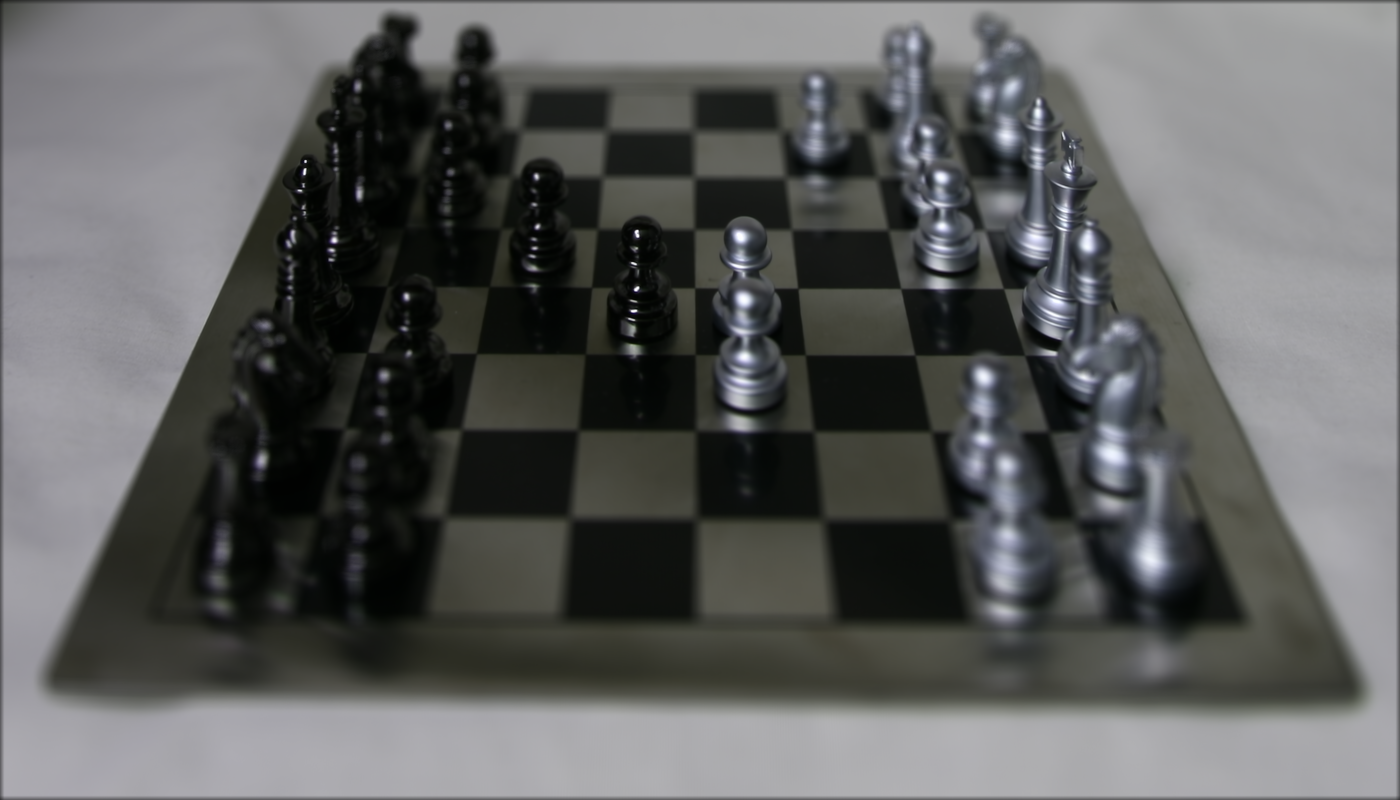

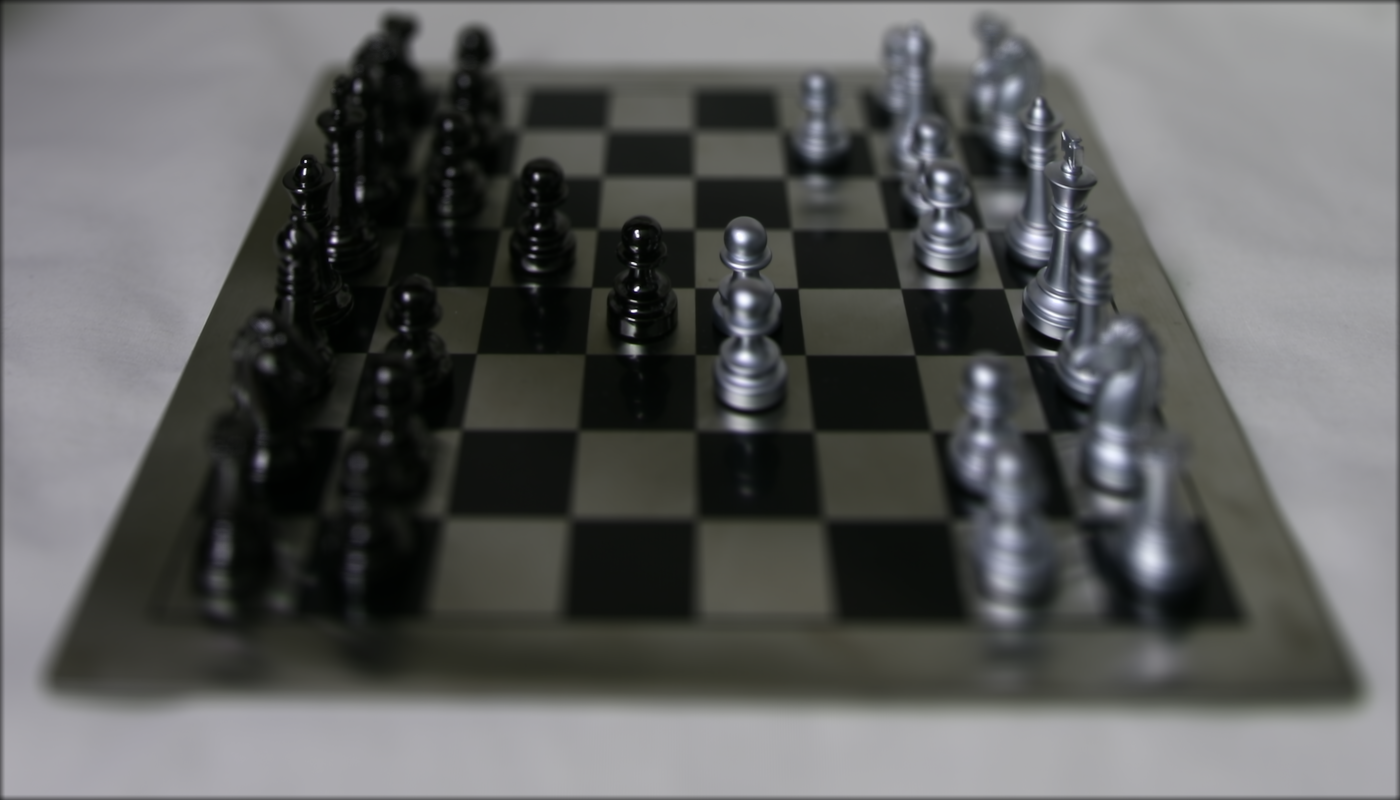

Style Images

Content Images

Sample Results

Summary

From this paper I learned a lot about the representation of the style of a image, also a

unusual aspect of optimization problem.

Second Project: Light Field Camera

Overview

Using lightfield data, we can achieve complex effects with simple

shifting and averaging operations. We use data from the Stanford Light Field Archive.

Each dataset contains 289 images taken with a 17x17 grid of cameras.

In particular, we implement depth refocusing and aperture adjustment.

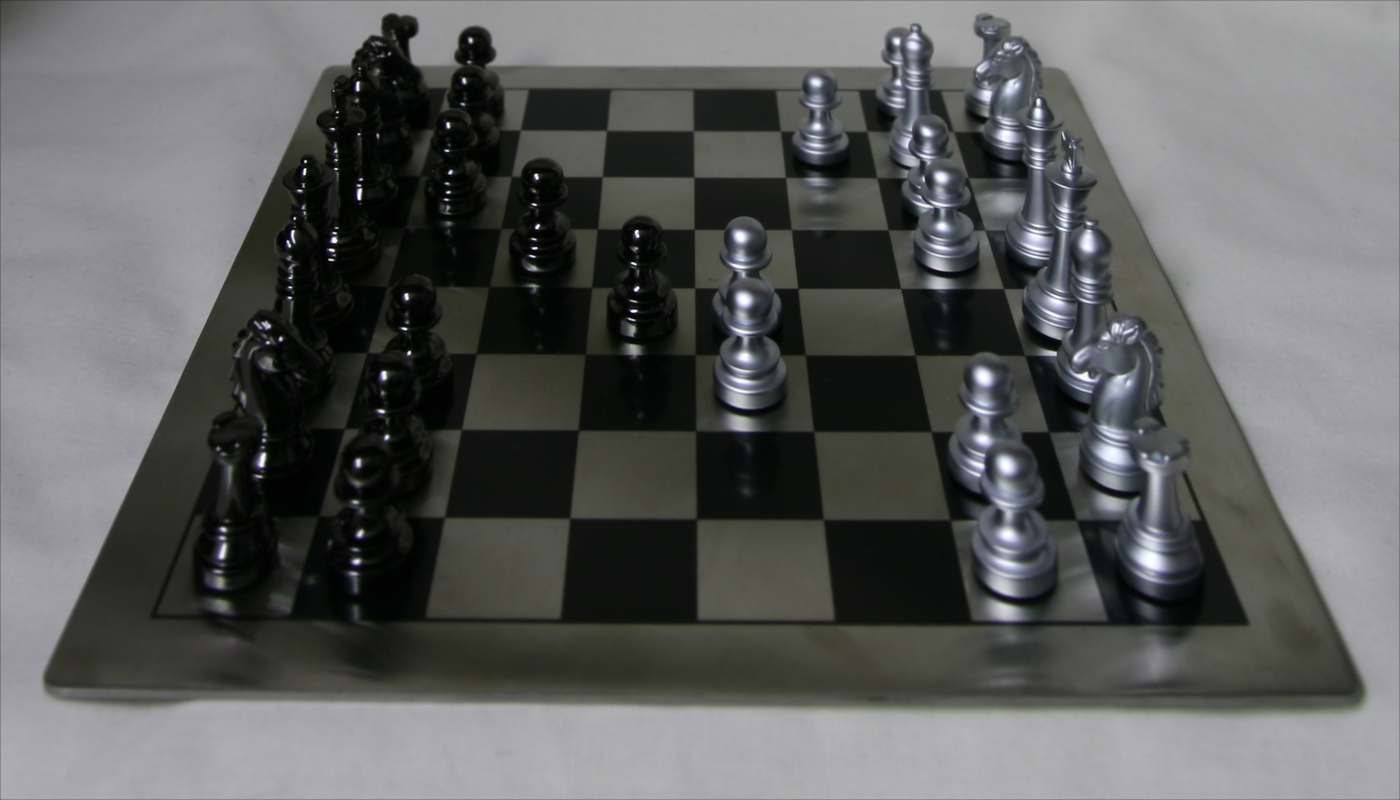

Part A: Depth Refocusing

We want to be able to change the point of focus on some

image after the fact. We can take advantage of the idea that objects which

are far from the camera do not vary in position significantly when the camera

moves around while keeping the optical axis direction the same. Conversely, close-by

objects vary their positions significantly across images. Therefore, when we average

all the images in the dataset, the resulting image will look blurry in the objects

close to the camera and clear in far-away objects.

To control the amount of shifting, we parse the filenames and extract the focal point for every

image, then use translation to shift the image.

Here are the results.

Part B: Depth Refocusing

We can simulate changes in aperture size by changing the number of images

we average together.

When we use a small subset of sub-apertures, we simulate a small aperture which therefore

accepts a small amount of light. All the rays of light will be roughly parallel, so everything

in the image will be in focus. The results are as follows:

Summary

I learned a lot about how light field cameras encode information that can be used to create interesting

effects not otherwise possible with traditional cameras. Also I learned about the concepts and machnisms of

light field.