Part 1: Seam Carvings

The Story

Not all pictures are perfect; some are too large, while others are too small. And nowadays there are so many different screen sizes that creating one image that won't be distorted when it is fit to each of them is nearly impossible. However, seam carving solves this issue!

Using seam carving, we can resize images both horizontally and vertically by cutting out insignificant parts of the picture, so the resizing seems almost perfect. (Almost, because I will show you a couple of examples where the algorithm I implemented did not work out.) My implementation works like this: until we have removed the number of seams we want, we determine the energy value of each pixel, find the seam with the minimal energy value, and remove that seam. A low seam energy is what we consider insignificant in the image. To determine the energy values, I passed the image through a Sobel filter and took its gradient magnitude.
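The loop described above can be sketched roughly as follows. This is a minimal illustration, not my actual project code: it assumes a grayscale image stored as a list of rows of numbers, clamps coordinates at the image border, takes a seam's energy to be the sum of its pixel energies, and uses hypothetical function names (`sobel_energy`, `find_vertical_seam`, `remove_vertical_seam`, `carve_width`). It is written in plain Python for readability; a real implementation would most likely use an array library for speed.

```python
import math

def sobel_energy(img):
    """Sobel gradient magnitude of a grayscale image (list of rows).
    Coordinates outside the image are clamped to the nearest edge pixel."""
    h, w = len(img), len(img[0])

    def px(y, x):
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    energy = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = (px(y - 1, x + 1) + 2 * px(y, x + 1) + px(y + 1, x + 1)
                  - px(y - 1, x - 1) - 2 * px(y, x - 1) - px(y + 1, x - 1))
            gy = (px(y + 1, x - 1) + 2 * px(y + 1, x) + px(y + 1, x + 1)
                  - px(y - 1, x - 1) - 2 * px(y - 1, x) - px(y - 1, x + 1))
            energy[y][x] = math.hypot(gx, gy)
    return energy

def find_vertical_seam(energy):
    """Dynamic programming: cost[y][x] is the cheapest total energy of any
    connected path from the top row down to pixel (y, x)."""
    h, w = len(energy), len(energy[0])
    cost = [row[:] for row in energy]
    for y in range(1, h):
        for x in range(w):
            lo, hi = max(x - 1, 0), min(x + 1, w - 1)
            cost[y][x] += min(cost[y - 1][lo:hi + 1])
    # Backtrack from the cheapest bottom pixel up to the top row.
    x = min(range(w), key=lambda i: cost[h - 1][i])
    seam = [x]
    for y in range(h - 1, 0, -1):
        lo, hi = max(x - 1, 0), min(x + 1, w - 1)
        x = min(range(lo, hi + 1), key=lambda i: cost[y - 1][i])
        seam.append(x)
    seam.reverse()
    return seam  # seam[y] is the column to remove in row y

def remove_vertical_seam(img, seam):
    """Drop one pixel per row, so the image gets one column narrower."""
    return [row[:x] + row[x + 1:] for row, x in zip(img, seam)]

def carve_width(img, n_seams):
    """Recompute the energy, find the cheapest seam, and remove it, n_seams times."""
    for _ in range(n_seams):
        seam = find_vertical_seam(sobel_energy(img))
        img = remove_vertical_seam(img, seam)
    return img
```

In this sketch, resizing in the other direction can be done by transposing the image, carving vertical seams, and transposing back. It also hints at why some results fail: the seam only cares about gradient magnitude, so a smooth-looking subject (a person, a tower against a plain sky) can be cheaper to cut through than a textured background.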

I hope you enjoy the following images. I tried using seam carving on several naturey images and on the Berkeley campus!

SUCCESS

SUCCESS

SUCCESS

FAIL (the man was carved out)

SUCCESS

SUCCESS

SUCCESS

FAIL (RIP doe)

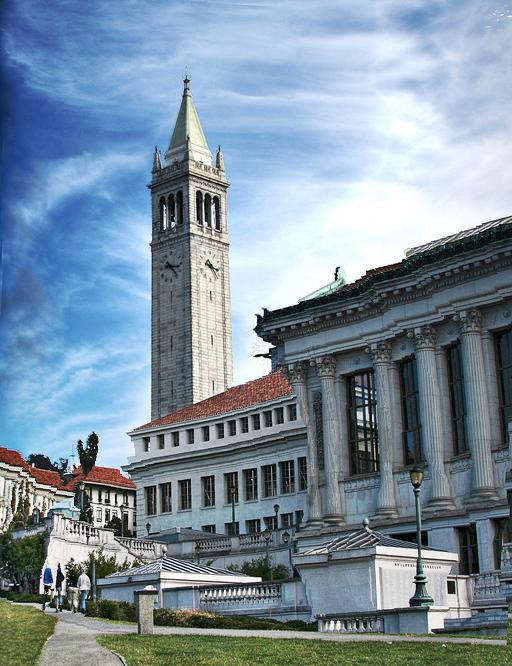

FAIL (RIP campanile)

What I Learned

The most important thing I learned was the use of dynamic programming. I learned it in 170 and used it in coding interviews, but it was nice to actually apply it to a project and get results out of it! Also, I thought that being able to remove seams and resize the image without making it look distorted was very cool!