Manipulating images by playing with gradients

Tianhui (Lily) Yang and Kamyar Salahi CS194 SPRING 2020

There are many methods for creating composite images, such as multi-resolution blending and alpha blending. These methods focus on softening edges, mixing the high and low frequencies of each picture, or weighted averaging. However smooth the transitions may be, there are bound to be differences in color or style that we can easily pick up.

Gradient domain fusion addresses this problem by ignoring the overall intensity of the images and focusing on the gradients, that is, how each pixel changes with respect to its neighbors. Since human vision is far more sensitive to gradients than to absolute intensity, this makes blended images look much more natural. However, because the new image is constructed from gradients rather than the true intensity values, the colors of the pasted region may shift as a result.

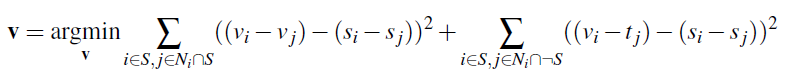

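So how do we actually do this? We can express the compositing as a least squares problem over the four neighbors N_i (up, down, left, right) of each pixel i:

$$
v = \arg\min_{v} \sum_{i \in S,\, j \in N_i \cap S} \big((v_i - v_j) - (s_i - s_j)\big)^2 + \sum_{i \in S,\, j \in N_i \cap \neg S} \big((v_i - t_j) - (s_i - s_j)\big)^2
$$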

where, given a source image s and a target image t, we want to find new intensity values v within the source region S (defined by our mask) that minimize the difference between the gradients of the new values and those of the source image. We split the problem into two parts: the first summation covers neighboring pixel pairs that both lie inside S, and the second covers pairs that straddle the boundary of S. Inside S, the values v should have the same gradients as the source image s. At the boundary, the neighbor outside S is fixed to the target intensity t_j, so the new values agree with the surrounding target pixels.
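To make the two summations concrete, here is a minimal sketch (our illustration, not the project's code) that evaluates the objective for a candidate single-channel image. The function name `poisson_energy` is hypothetical; it assumes s, t, and v are already aligned float arrays of the same shape, that a boolean mask marks S, and that neighbors falling off the image are skipped.

```python
import numpy as np


def poisson_energy(v, s, t, mask):
    """Evaluate the blending objective for a candidate single-channel image v.

    v, s, t: aligned 2-D float arrays (candidate, source, target).
    mask: boolean array, True inside the region S.
    """
    h, w = mask.shape
    energy = 0.0
    ys, xs = np.nonzero(mask)
    for y, x in zip(ys, xs):
        # Visit the four neighbors N_i of pixel i = (y, x).
        for dy, dx in ((0, 1), (0, -1), (1, 0), (-1, 0)):
            ny, nx = y + dy, x + dx
            if not (0 <= ny < h and 0 <= nx < w):
                continue  # neighbor falls off the image; skip it
            source_grad = s[y, x] - s[ny, nx]
            if mask[ny, nx]:
                # First sum: both pixels inside S, compare v_i - v_j.
                energy += ((v[y, x] - v[ny, nx]) - source_grad) ** 2
            else:
                # Second sum: neighbor outside S, use the fixed target value t_j.
                energy += ((v[y, x] - t[ny, nx]) - source_grad) ** 2
    return energy
```

If v copies s inside S and the target equals the source, every term vanishes; a one-pixel region raised by 1 above an all-zero source and target costs 1 per neighbor, so 4 in total.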

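Before creating composite pictures, we tested our methodology on a toy problem: reconstructing an image from one of its pixels and its gradients. We split the problem into three objectives: the x-gradients of the reconstruction should match those of the source image s, its y-gradients should match those of s, and a corner pixel should have the same intensity as in s.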

To pose this as a least squares problem, we find the x that minimizes ||Ax − b||², that is, the best fit to Ax = b. In what follows, height and width denote the height and width of our source image s.

A is a sparse matrix of shape (2 * height * width + 1, height * width). Each row encodes one gradient operation on the image: the first height * width rows hold the x-gradients, the next height * width rows hold the y-gradients, and the final row selects the corner pixel so that its intensity can be pinned.

b is a vector of shape (2 * height * width + 1, 1) that holds the known gradient values of our source image, followed by the intensity of the corner pixel. We obtain it by multiplying the gradient operation matrix A by our flattened source image; because the last row of A selects the corner pixel, this product yields the corner intensity as well.

We use sparse least squares and build our arrays in lil_matrix format to save memory and computation, since almost every entry is zero. The gradient in the x-direction is a simple finite difference between the pixel at (y, x + 1) and the pixel at (y, x). The y-direction is analogous: the difference between the pixels at (y + 1, x) and (y, x), with x held constant.
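A minimal sketch of the toy reconstruction, assuming a grayscale float image and SciPy's `lsqr` as the sparse least squares solver (the text does not name a specific solver). The function name is hypothetical, we assume the pinned pixel is the top-left corner, and rows whose forward neighbor falls outside the image are simply left empty. A is built in LIL format, as described above, and converted to CSR before solving, since LIL suits incremental construction while CSR is faster for the matrix-vector products inside the solver.

```python
import numpy as np
import scipy.sparse
import scipy.sparse.linalg


def reconstruct_from_gradients(s):
    """Rebuild a grayscale image from its x/y gradients plus its top-left pixel."""
    h, w = s.shape
    n = h * w
    idx = np.arange(n).reshape(h, w)  # (y, x) -> index into the flattened image

    # Rows [0, n): x-gradients; rows [n, 2n): y-gradients; row 2n: corner pixel.
    A = scipy.sparse.lil_matrix((2 * n + 1, n))
    for y in range(h):
        for x in range(w):
            if x + 1 < w:  # v(y, x+1) - v(y, x)
                A[idx[y, x], idx[y, x + 1]] = 1
                A[idx[y, x], idx[y, x]] = -1
            if y + 1 < h:  # v(y+1, x) - v(y, x)
                A[n + idx[y, x], idx[y + 1, x]] = 1
                A[n + idx[y, x], idx[y, x]] = -1
    A[2 * n, idx[0, 0]] = 1  # pin the corner intensity

    A = A.tocsr()  # LIL is handy for building; CSR is faster for products
    b = A @ s.ravel()  # source gradients, then s[0, 0] in the last entry
    v = scipy.sparse.linalg.lsqr(A, b, atol=1e-12, btol=1e-12, iter_lim=10 * n)[0]
    return v.reshape(h, w)
```

On a small test image, `reconstruct_from_gradients(s)` should reproduce `s` up to solver tolerance.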

Here is the result of solving this equation for our toy image. The least squares result is on the right and the original image is on the left. As you can see, they look almost identical with minimal error, indicating successful reconstruction.

After verifying that our implementation worked, we moved on to creating composite images. Here we applied the same least squares principle, modifying A only to add rows that tie the pixels along the border to known target intensities. We split our methodology into the following steps:

- Align and resize: we align the images using horizontal and vertical translations that we determine manually.
- Identify the mask: we select the mask's points using ginput.
- Extract the boundary: we run edge detection on the binary mask, find all nonzero indices of the resulting edge mask, and retrieve the target image's intensities at those coordinates.
- Solve: we append the target boundary values to the system and solve it with sparse least squares, processing each color channel separately.
- Blend: using the mask, we cut a hole in the target image and fill it with the solved source region.
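The solve and blend steps can be sketched as follows for aligned float images in [0, 1]. This is a hypothetical sketch, not our exact code: instead of running edge detection on the mask, it finds boundary terms by checking each pixel's four neighbors and substituting the target value t_j whenever a neighbor lies outside S, which implements the second summation of the objective directly. The function names are illustrative, the solver is SciPy's `lsqr`, and the final clipping assumes intensities in [0, 1].

```python
import numpy as np
import scipy.sparse
import scipy.sparse.linalg


def poisson_blend_channel(s, t, mask):
    """Solve one channel: keep source gradients inside S, match target at the border.

    s, t: aligned 2-D float arrays of the same shape; mask: boolean, True inside S.
    """
    h, w = mask.shape
    ys, xs = np.nonzero(mask)
    n = len(ys)
    var = np.full((h, w), -1, dtype=int)  # variable index for pixels inside S
    var[ys, xs] = np.arange(n)

    A = scipy.sparse.lil_matrix((4 * n, n))  # at most one row per (pixel, neighbor)
    b = np.zeros(4 * n)
    row = 0
    for k, (y, x) in enumerate(zip(ys, xs)):
        for dy, dx in ((0, 1), (0, -1), (1, 0), (-1, 0)):
            ny, nx = y + dy, x + dx
            if not (0 <= ny < h and 0 <= nx < w):
                continue  # neighbor lies off the image; no constraint
            A[row, k] = 1
            b[row] = s[y, x] - s[ny, nx]  # source gradient s_i - s_j
            if mask[ny, nx]:
                A[row, var[ny, nx]] = -1  # first sum: v_i - v_j
            else:
                b[row] += t[ny, nx]  # second sum: v_i = t_j + (s_i - s_j)
            row += 1

    v = scipy.sparse.linalg.lsqr(A.tocsr(), b, atol=1e-12, btol=1e-12)[0]
    out = t.copy()
    out[ys, xs] = v  # fill the hole in the target with the solved values
    return out


def poisson_blend(source, target, mask):
    """Blend H x W x C float images in [0, 1], one color channel at a time."""
    channels = [poisson_blend_channel(source[..., c], target[..., c], mask)
                for c in range(source.shape[-1])]
    return np.clip(np.stack(channels, axis=-1), 0.0, 1.0)
```

A quick sanity check: if the source differs from the target only by a constant brightness offset, the blended region reproduces the target exactly, because the gradients ignore the offset.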

Here are some of our results. You can see the naive version on the left and the blended version on the right.

As you can see, most of our pairings are context-driven: water images are paired with other water images, and faces with other body parts. We got the best results when the textures of the two images were similar; when the textures differed, a blurred background in the source image helped everything mesh together.

Here are some of the failure cases we encountered.

Here, the textures around the crocodile and the coffee are very different. The algorithm did a good job of matching colors, but the edges are obviously off. The branches and woody material on the ground in the crocodile image were a poor match and make the differences easy to spot. The lighting of the coffee image is also heavily filtered, which makes newly added objects stand out.

Here, the opposite problem arises: a change in color. Because the target background was comparatively dark and the image of the person jumping was already quite dark, the result is a silhouette effect. However, since the background behind the person is blurred, it blends very well into the rest of the picture.

Converting a color photograph to grayscale loses the contrast carried by differences in color. To remedy this, we perform a tonal remapping: we convert the source image to HSV space and transfer its channel gradients into a grayscale image. In effect, we use the saturation and value gradients in a mixed-gradients problem: the gradient of the grayscale image at a pixel is the saturation or value gradient, whichever has the larger absolute value. Instead of boundary conditions, we pin an arbitrary number of pixels to their intensities in the ordinary grayscale image.
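A rough sketch of this tonal remapping, under several assumptions of ours: the input is an RGB float image in [0, 1]; saturation and value are computed directly from their HSV definitions (V is the largest channel, S is (max − min) / max); at each pixel we keep the signed S or V gradient with the larger magnitude, so the direction of each edge is preserved; and the "ordinary grayscale image" that supplies the pinned pixels is a plain channel mean. The function names and the default anchor at the top-left pixel are hypothetical.

```python
import numpy as np
import scipy.sparse
import scipy.sparse.linalg


def mixed_gradient(g1, g2):
    """Per entry, keep whichever signed gradient has the larger magnitude."""
    return np.where(np.abs(g1) >= np.abs(g2), g1, g2)


def color2gray(rgb, anchors=((0, 0),)):
    """Tonal remapping from HSV saturation/value gradients (RGB floats in [0, 1])."""
    h, w, _ = rgb.shape
    n = h * w
    idx = np.arange(n).reshape(h, w)  # (y, x) -> unknown index

    # HSV value and saturation computed from their definitions.
    value = rgb.max(axis=-1)
    chroma = value - rgb.min(axis=-1)
    sat = np.divide(chroma, value, out=np.zeros_like(value), where=value > 0)

    # Forward-difference gradients, mixed between S and V.
    gx = mixed_gradient(np.diff(sat, axis=1), np.diff(value, axis=1))  # h x (w-1)
    gy = mixed_gradient(np.diff(sat, axis=0), np.diff(value, axis=0))  # (h-1) x w

    # Plain channel-mean grayscale supplies the pinned intensities (assumption).
    gray = rgb.mean(axis=-1)

    rows = gx.size + gy.size + len(anchors)
    A = scipy.sparse.lil_matrix((rows, n))
    b = np.zeros(rows)
    r = 0
    for y in range(h):
        for x in range(w - 1):
            A[r, idx[y, x + 1]] = 1
            A[r, idx[y, x]] = -1
            b[r] = gx[y, x]
            r += 1
    for y in range(h - 1):
        for x in range(w):
            A[r, idx[y + 1, x]] = 1
            A[r, idx[y, x]] = -1
            b[r] = gy[y, x]
            r += 1
    for y, x in anchors:
        A[r, idx[y, x]] = 1  # pin this pixel to its grayscale intensity
        b[r] = gray[y, x]
        r += 1

    v = scipy.sparse.linalg.lsqr(A.tocsr(), b, atol=1e-12, btol=1e-12,
                                 iter_lim=10 * n)[0]
    return v.reshape(h, w)
```

For example, a pure red patch next to a gray patch with the same channel mean (1/3) becomes a single flat tone under the mean conversion, but the saturation jump between them gives the remapped image a visible step.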

As you can see, the contrast created by the colors is now reflected in its grayscale representation.

From this project, we learned the importance of using sparse representations for arrays that are mostly zeros. Switching to sparse matrices reduced our computation time by two orders of magnitude. Dense arrays also quickly run into memory-allocation limits, since A has (2 * height * width + 1) * (height * width) entries and so grows with the square of the pixel count; scipy.sparse.lil_matrix solved this as well. Finally, we found that faces blend very well together, even when obstructions like glasses are present.
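To put the memory point in numbers, this sketch compares the storage needed for the toy problem's A as a dense float64 array versus a CSR sparse matrix. The function name is hypothetical, and here the matrix is built from coordinate triplets in one vectorized call rather than with lil_matrix; the nonzero pattern is the same forward-difference layout plus one corner row described earlier.

```python
import numpy as np
import scipy.sparse


def gradient_matrix_memory(h, w):
    """Return (dense_bytes, sparse_bytes) for the toy-problem matrix A."""
    n = h * w
    idx = np.arange(n).reshape(h, w)
    dense_bytes = (2 * n + 1) * n * np.dtype(np.float64).itemsize

    rx = idx[:, :-1].ravel()       # x-gradient rows: pixels with a right neighbor
    ry = n + idx[:-1, :].ravel()   # y-gradient rows: pixels with a neighbor below
    row = np.concatenate([rx, rx, ry, ry, [2 * n]])
    col = np.concatenate([idx[:, 1:].ravel(), idx[:, :-1].ravel(),
                          idx[1:, :].ravel(), idx[:-1, :].ravel(), [0]])
    val = np.concatenate([np.ones(rx.size), -np.ones(rx.size),
                          np.ones(ry.size), -np.ones(ry.size), [1.0]])
    A = scipy.sparse.csr_matrix((val, (row, col)), shape=(2 * n + 1, n))

    # CSR storage = nonzero values + their column indices + row pointers.
    sparse_bytes = A.data.nbytes + A.indices.nbytes + A.indptr.nbytes
    return dense_bytes, sparse_bytes
```

For a 200 × 200 image, the dense A would need 25,600,320,000 bytes (about 25.6 GB), while the CSR version fits in a few megabytes.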