Eigenvalue Decomposition of Symmetric Matrices

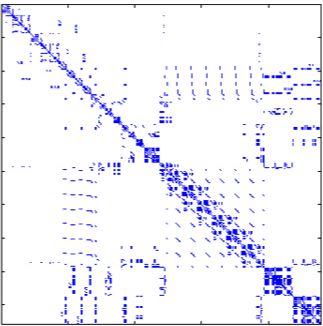

Symmetric matrices are square matrices whose entries mirror each other across the main diagonal: $A_{ij} = A_{ji}$ for all indices $i, j$, or equivalently $A = A^T$. They can describe, for example, graphs with undirected, weighted edges between nodes, or distance matrices (say, between cities), among many other applications. Symmetric matrices are also important in optimization, as they are closely related to quadratic functions.
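As a small illustration, here is a minimal sketch assuming NumPy. The graph and the matrix `B` are made-up examples, not taken from the text. It builds the symmetric weight matrix of an undirected graph and shows why quadratic functions lead naturally to symmetric matrices: $x^T B x$ depends only on the symmetric part $(B + B^T)/2$.

```python
import numpy as np

# Hypothetical undirected, weighted graph on 4 nodes: (node_i, node_j, weight).
edges = [(0, 1, 2.0), (0, 2, 1.5), (1, 3, 0.5), (2, 3, 3.0)]

n = 4
A = np.zeros((n, n))
for i, j, w in edges:
    # An undirected edge carries the same weight in both directions,
    # so the entries mirror each other across the diagonal.
    A[i, j] = w
    A[j, i] = w

print(np.array_equal(A, A.T))  # True: A is symmetric

# A quadratic function x^T B x only "sees" the symmetric part of B,
# because x^T B x = x^T ((B + B^T) / 2) x for every vector x.
B = np.array([[1.0, 4.0],
              [0.0, 3.0]])   # arbitrary non-symmetric example
S = (B + B.T) / 2            # symmetric part: [[1, 2], [2, 3]]
x = np.array([2.0, -1.0])
print(x @ B @ x, x @ S @ x)  # -1.0 -1.0
```

This is why, when studying quadratic functions, one can assume without loss of generality that the matrix is symmetric.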

A fundamental result, the spectral theorem, shows that any real symmetric matrix can be decomposed as a product of three matrices, $A = U \Lambda U^T$, where $U$ is an orthogonal matrix and $\Lambda$ is a diagonal matrix. The theorem has a direct implication for quadratic functions: it allows us to decompose any quadratic function of the form $q(x) = x^T A x$ into a weighted sum of squared linear functions involving mutually orthogonal vectors, namely $q(x) = \sum_i \lambda_i (u_i^T x)^2$, where the $u_i$ are the columns of $U$. The weights $\lambda_i$, which are the diagonal entries of $\Lambda$, are called the eigenvalues of the symmetric matrix.
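The decomposition can be checked numerically. The sketch below assumes NumPy and uses an arbitrary $3 \times 3$ example matrix; `np.linalg.eigh` is NumPy's eigensolver for symmetric (Hermitian) matrices, which returns real eigenvalues in ascending order together with orthonormal eigenvectors as the columns of `U`.

```python
import numpy as np

# Arbitrary real symmetric example matrix.
A = np.array([[4.0, 1.0, 2.0],
              [1.0, 3.0, 0.0],
              [2.0, 0.0, 5.0]])

# lam: eigenvalues (ascending); U: orthonormal eigenvectors as columns.
lam, U = np.linalg.eigh(A)

# Three-term product A = U diag(lam) U^T, with U orthogonal.
print(np.allclose(U @ np.diag(lam) @ U.T, A))  # True
print(np.allclose(U.T @ U, np.eye(3)))         # True

# q(x) = x^T A x as a weighted sum of squares of the linear functions
# u_i^T x (the entries of U^T x), with the eigenvalues as weights.
x = np.array([1.0, -2.0, 0.5])
q_direct = x @ A @ x
q_spectral = np.sum(lam * (U.T @ x) ** 2)
print(np.isclose(q_direct, q_spectral))        # True
```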

In particular, the spectral theorem lets us determine when a given quadratic function is "bowl-shaped", that is, convex: $q(x) = x^T A x$ is convex exactly when all eigenvalues of $A$ are nonnegative. The spectral theorem also lets us find directions of maximal variance within a data set. Such directions are useful for visualizing high-dimensional data points in two or three dimensions. This is the basis of a visualization method known as principal component analysis (PCA).
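Both uses can be sketched in a few lines, assuming NumPy. The helper names `is_convex_quadratic` and `pca` are hypothetical, and the data are synthetic. The convexity test checks the sign of the smallest eigenvalue. The PCA sketch takes the directions of maximal variance to be the top eigenvectors of the sample covariance matrix (normalized by $n - 1$), which is one common convention.

```python
import numpy as np

def is_convex_quadratic(A, tol=1e-10):
    """True if q(x) = x^T A x is convex, i.e. every eigenvalue of the
    symmetric matrix A is nonnegative (up to a small tolerance)."""
    return bool(np.linalg.eigvalsh(A).min() >= -tol)

def pca(X, k):
    """Project the rows of X (one data point per row) onto the k directions
    of maximal variance: the top-k eigenvectors of the sample covariance."""
    Xc = X - X.mean(axis=0)               # center the data
    C = Xc.T @ Xc / (X.shape[0] - 1)      # sample covariance, symmetric
    lam, U = np.linalg.eigh(C)            # eigenvalues in ascending order
    W = U[:, ::-1][:, :k]                 # top-k eigenvectors as columns
    return W, lam[::-1][:k], Xc @ W       # directions, variances, k-D coordinates

print(is_convex_quadratic(np.array([[2.0, 1.0], [1.0, 2.0]])))  # True  (eigenvalues 1, 3)
print(is_convex_quadratic(np.array([[1.0, 2.0], [2.0, 1.0]])))  # False (eigenvalues -1, 3)

# Synthetic 3-D data with most of its spread along the first two axes.
rng = np.random.default_rng(0)
X = rng.normal(size=(300, 3)) * np.array([3.0, 1.0, 0.1])
W, variances, Y = pca(X, 2)   # Y holds 2-D coordinates for a scatter plot
print(Y.shape)                # (300, 2)
```

The variance of each column of `Y` equals the corresponding eigenvalue in `variances`, which is what makes these directions the ones of maximal variance.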
