Weak Duality

Lagrange dual problem

Weak duality and minimax inequality

Examples

Lagrange dual problem

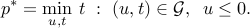

Primal problem

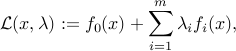

In this section, we consider a possibly non-convex optimization problem

$$
p^\ast := \min_x \: f_0(x) ~:~ f_i(x) \le 0, \quad i=1,\ldots,m,
$$

where $f_0, f_1, \ldots, f_m$ are given real-valued functions. We denote by $\mathcal{D}$ the domain of the problem (which is the intersection of the domains of all the functions involved), and by $\mathcal{X} \subseteq \mathcal{D}$ its feasible set.

We will refer to the above as the primal problem, and to the decision variable $x$ in that problem as the primal variable. One purpose of Lagrange duality is to find a lower bound on a minimization problem (or an upper bound on a maximization problem). Later, we will use duality tools to derive optimality conditions for convex problems.

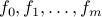

Lagrangian

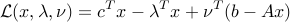

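To the problem we associate the Lagrangian $\mathcal{L} : \mathbf{R}^n \times \mathbf{R}^m \rightarrow \mathbf{R}$, with values

$$
\mathcal{L}(x,\lambda) := f_0(x) + \sum_{i=1}^m \lambda_i f_i(x),
$$

where the new variables $\lambda_i$, $i=1,\ldots,m$, are called Lagrange multipliers, or dual variables.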

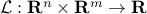

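We observe that, for every feasible $x \in \mathcal{X}$, and every $\lambda \ge 0$, $f_0(x)$ is bounded below by $\mathcal{L}(x,\lambda)$:

$$
\forall \: x \in \mathcal{X}, \;\; \forall \: \lambda \ge 0 ~:~ f_0(x) \ge \mathcal{L}(x,\lambda).
$$

The Lagrangian can be used to express the primal problem as an unconstrained one. Precisely:

$$
p^\ast = \min_x \: \max_{\lambda \ge 0} \: \mathcal{L}(x,\lambda),
$$

where we have used the fact that, for any vector $f \in \mathbf{R}^m$, we have

$$
\max_{\lambda \ge 0} \: \lambda^T f = \left\{ \begin{array}{ll} 0 & \mbox{if } f \le 0, \\ +\infty & \mbox{otherwise.} \end{array} \right.
$$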

Lagrange dual function

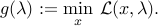

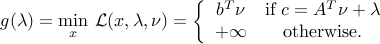

We then define the Lagrange dual function (dual function for short) as the function with values

$$
g(\lambda) := \min_x \: \mathcal{L}(x,\lambda).
$$

Note that, since $g$ is the point-wise minimum of affine functions ($\mathcal{L}(x,\cdot)$ is affine for every $x$), it is concave. Note also that it may take the value $-\infty$.

From the bound $f_0(x) \ge \mathcal{L}(x,\lambda)$, valid for every feasible $x$ and every $\lambda \ge 0$, by minimizing over $x$ in the right-hand side, we obtain

$$
\forall \: x \in \mathcal{X}, \;\; \forall \: \lambda \ge 0 ~:~ f_0(x) \ge \min_{x'} \: \mathcal{L}(x',\lambda) = g(\lambda),
$$

which, after minimizing over $x$ the left-hand side, leads to the lower bound

$$
\forall \: \lambda \ge 0 ~:~ p^\ast \ge g(\lambda).
$$
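As a small illustration of the lower bound $p^\ast \ge g(\lambda)$, here is a sketch on a toy problem that is not part of the original notes: we take $f_0(x) = x^2$ with the single constraint $f_1(x) = 1 - x \le 0$, so that $p^\ast = 1$. The function names are hypothetical, and the dual function is evaluated through the closed-form minimizer $x = \lambda/2$ of the Lagrangian.

```python
def lagrangian(x, lam):
    """L(x, lam) = f0(x) + lam * f1(x), with f0(x) = x**2 and f1(x) = 1 - x."""
    return x**2 + lam * (1.0 - x)


def dual_function(lam):
    """g(lam) = min_x L(x, lam); setting 2x - lam = 0 gives the minimizer x = lam / 2."""
    return lagrangian(lam / 2.0, lam)


P_STAR = 1.0  # primal optimal value of the toy problem, attained at x = 1

# Evaluate g on a grid of nonnegative multipliers (grid choice is arbitrary).
lams = [0.1 * k for k in range(61)]
bounds = [dual_function(lam) for lam in lams]

# Every value g(lam) with lam >= 0 is a lower bound on p*.
best_bound = max(bounds)
print("largest lower bound on the grid:", best_bound)
```

On this particular convex example the largest bound on the grid coincides with $p^\ast$ (up to rounding); weak duality alone only guarantees that no value of $g$ exceeds $p^\ast$.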

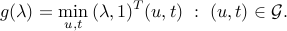

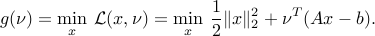

Lagrange dual problem

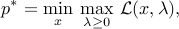

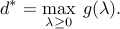

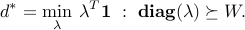

The best lower bound that we can obtain using the above bound is $p^\ast \ge d^\ast$, where

$$
d^\ast = \max_{\lambda \ge 0} \: g(\lambda).
$$

We refer to the above problem as the dual problem, and to the vector $\lambda \in \mathbf{R}^m$ as the dual variable. The dual problem involves the maximization of a concave function under convex (sign) constraints, so it is a convex problem. The dual problem always contains the implicit constraint $\lambda \in \mathbf{dom}\, g$.

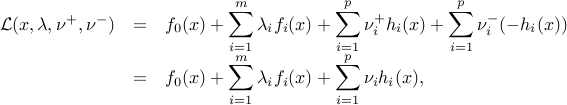

Case with equality constraints

If equality constraints are present in the problem, we can represent them as two inequalities. It turns out that this leads to the same dual, as if we would directly use a single dual variable for each equality constraint, which is not restricted in sign. To see this, consider the problem

![p^ast := displaystylemin_x : f_0(x) ~:~ begin{array}[t]{l} f_i(x) le 0,quad i=1,cdots, m, h_i(x) = 0, quad i=1,cdots,p. end{array}](eqs/8985535029580102030-130.png)

We write the problem as

![p^ast := displaystylemin_x : f_0(x) ~:~ begin{array}[t]{l} f_i(x) le 0,quad i=1,cdots, m, h_i(x) le 0, ;; -h_i(x) le 0, quad i=1,cdots,p. end{array}](eqs/2599917865295799633-130.png)

Using a multiplier  for the constraint

for the constraint  , we write the associated Lagrangian as

, we write the associated Lagrangian as

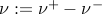

where  does not have any sign constraints.

does not have any sign constraints.

Thus, inequality constraints in the original problem are associated with sign constraints on the corresponding multipliers, while the multipliers for the equality constraints are not explicitly constrained.

Weak duality and minimax inequality

Weak duality theorem

We have obtained:

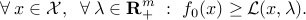

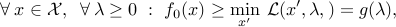

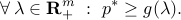

For a general (possibly non-convex) problem

$$
p^\ast := \min_x \: f_0(x) ~:~ \begin{array}[t]{l} f_i(x) \le 0, \quad i=1,\ldots,m, \\ h_i(x) = 0, \quad i=1,\ldots,p, \end{array}
$$

weak duality holds: $p^\ast \ge d^\ast$.

Geometric interpretation

Assume that there is only one inequality constraint in the primal problem ($m=1$), and let

$$
\mathcal{G} := \left\{ (f_1(x),f_0(x)) ~:~ x \in \mathbf{R}^n \right\}.
$$

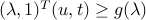

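We have

$$
p^\ast = \min_{u,t} \: t ~:~ (u,t) \in \mathcal{G}, \;\; u \le 0,
$$

and

$$
g(\lambda) = \min_{u,t} \: (\lambda,1)^T(u,t) ~:~ (u,t) \in \mathcal{G}.
$$

If the minimum is finite, then the inequality $(\lambda,1)^T(u,t) \ge g(\lambda)$ defines a supporting hyperplane, with slope $-\lambda$, of $\mathcal{G}$ at $(u,t)$. (See Figs. 5.3 and 5.4 in BV, p. 233.)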

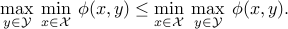

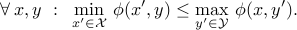

Minimax inequality

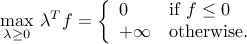

Weak duality can also be obtained as a consequence of the following minimax inequality, which is valid for any function $\phi$ of two vector variables $x, y$, and any subsets $X$, $Y$:

$$
\max_{y \in Y} \: \min_{x \in X} \: \phi(x,y) \le \min_{x \in X} \: \max_{y \in Y} \: \phi(x,y).
$$

To prove this, start from

$$
\forall \: x \in X, \;\; \forall \: y \in Y ~:~ \min_{x' \in X} \: \phi(x',y) \le \max_{y' \in Y} \: \phi(x,y'),
$$

and take the minimum over $x \in X$ on the right-hand side, then the maximum over $y \in Y$ on the left-hand side.

Weak duality is indeed a direct consequence of the above. To see this, start from the unconstrained formulation $p^\ast = \min_x \max_{\lambda \ge 0} \mathcal{L}(x,\lambda)$, and apply the above inequality with $\phi = \mathcal{L}$ the Lagrangian of the original problem, and $y = \lambda$ the vector of Lagrange multipliers.
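The minimax inequality can be checked by hand when both variables range over finite sets. The sketch below uses an arbitrary payoff table (the numbers are illustrative assumptions, not taken from the notes), where row $i$ plays the role of $x$ and column $j$ the role of $y$.

```python
# Payoff table phi[i][j]: row i is the choice of x, column j is the choice of y.
# The entries are arbitrary illustrative values.
phi = [
    [3, 1, 4],
    [2, 5, 0],
    [6, 2, 3],
]

n_rows, n_cols = len(phi), len(phi[0])

# Left-hand side: maximum over y of the minimum over x.
max_min = max(min(phi[i][j] for i in range(n_rows)) for j in range(n_cols))

# Right-hand side: minimum over x of the maximum over y.
min_max = min(max(phi[i][j] for j in range(n_cols)) for i in range(n_rows))

print("max-min:", max_min, " min-max:", min_max)
```

For this table the two sides differ, which shows that the inequality can be strict.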

Interpretation as a game

We can interpret the minimax inequality in the context of a one-shot, zero-sum game. Assume that you have two players A and B, where A controls the decision variable $x$, while B controls $y$. We assume that each player has full knowledge of the other player’s decision, once it is made. Player A seeks to minimize a payoff (to player B) $\phi(x,y)$, while B seeks to maximize that payoff. The right-hand side in the minimax inequality is the optimal payoff if player A is required to play first. Obviously, player A can do better by playing second, since then he or she knows the opponent’s choice and can adapt to it.

Examples

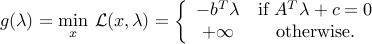

Linear optimization problem, inequality form

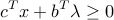

Consider the LP in standard inequality form

where  ,

,  , and the inequality in the constraint

, and the inequality in the constraint  is interpreted component-wise.

is interpreted component-wise.

The Lagrange function is (

{cal L}(x,lambda) = c^Tx + lambda^T(Ax-b)

)

and the corresponding dual function is

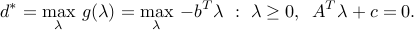

The dual problem reads

The dual problem is an LP in standard (sign-constrained) form, just as the primal problem was an LP in standard (inequality) form.

Weak duality implies that

for every  such that

such that  ,

,  . This property can be proven directly, by replacing

. This property can be proven directly, by replacing  by

by  in the left-hand side of the above inequality, and exploiting

in the left-hand side of the above inequality, and exploiting  and

and  .

.
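The inequality $c^Tx + b^T\lambda \ge 0$ can be checked numerically on a small instance. In the sketch below, the data $A$, $b$, $\lambda$ and the list of feasible points are illustrative assumptions; the cost vector is built as $c = -A^T\lambda$ so that $\lambda$ is dual feasible by construction. The helper names are hypothetical.

```python
def dot(u, v):
    """Inner product of two vectors given as lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


def matvec(M, v):
    """Matrix-vector product, with M given as a list of rows."""
    return [dot(row, v) for row in M]


def transpose(M):
    return [list(col) for col in zip(*M)]


# Constraints x1 <= 1, x2 <= 1, -x1 - x2 <= 0 (illustrative data).
A = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
b = [1.0, 1.0, 0.0]

lam = [1.0, 2.0, 0.5]                        # any vector lam >= 0
c = [-v for v in matvec(transpose(A), lam)]  # enforces A^T lam + c = 0

feasible_points = [[0.0, 0.0], [1.0, 1.0], [1.0, -1.0], [-0.5, 0.75]]

gaps = []
for x in feasible_points:
    # Check primal feasibility A x <= b before using the point.
    assert all(ax <= bi for ax, bi in zip(matvec(A, x), b))
    # c^T x + b^T lam equals lam^T (b - A x), which is nonnegative.
    gaps.append(dot(c, x) + dot(b, lam))

print(gaps)
```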

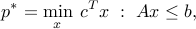

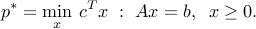

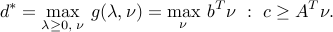

Linear optimization problem, standard form

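We can also consider an LP in standard form:

$$
p^\ast = \min_x \: c^Tx ~:~ Ax = b, \;\; x \ge 0.
$$

The equality constraints are associated with a dual variable $\nu$ that is not constrained in the dual problem.

Writing the equality constraint as $b - Ax = 0$, and using a multiplier $\lambda \ge 0$ for the constraint $-x \le 0$, the Lagrange function is

$$
\mathcal{L}(x,\lambda,\nu) = c^Tx - \lambda^Tx + \nu^T(b-Ax)
$$

and the corresponding dual function is

$$
g(\lambda,\nu) = \min_x \: \mathcal{L}(x,\lambda,\nu) = \left\{ \begin{array}{ll} b^T\nu & \mbox{if } c = A^T\nu + \lambda, \\ -\infty & \mbox{otherwise.} \end{array} \right.
$$

The dual problem reads

$$
d^\ast = \max_{\lambda,\nu} \: b^T\nu ~:~ c = A^T\nu + \lambda, \;\; \lambda \ge 0,
$$

or, after eliminating $\lambda$, $d^\ast = \max_\nu \: b^T\nu ~:~ A^T\nu \le c$.

This is an LP in inequality form.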
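For a standard-form LP, weak duality says that $b^T\nu \le c^Tx$ whenever $Ax = b$, $x \ge 0$ and $A^T\nu \le c$. The sketch below checks this on a tiny instance with one equality constraint; the data and the candidate points are illustrative assumptions, and the helper names are hypothetical.

```python
def dot(u, v):
    """Inner product of two vectors given as lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


# minimize x1 + 2*x2 subject to x1 + x2 = 1, x >= 0 (illustrative data).
A = [[1.0, 1.0]]
b = [1.0]
c = [1.0, 2.0]


def primal_feasible(x, tol=1e-12):
    """True if A x = b (up to tol) and x >= 0."""
    rows_ok = all(abs(dot(row, x) - bi) <= tol for row, bi in zip(A, b))
    return rows_ok and all(xi >= 0 for xi in x)


def dual_feasible(nu):
    """True if A^T nu <= c, checked column by column."""
    return all(dot(col, nu) <= cj for col, cj in zip(zip(*A), c))


xs = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
nus = [[1.0], [0.0], [-3.0]]

# Collect (primal value, dual value) for every feasible pair.
pairs = [
    (dot(c, x), dot(b, nu))
    for x in xs
    for nu in nus
    if primal_feasible(x) and dual_feasible(nu)
]

print(all(primal >= dual for primal, dual in pairs))
```

In this instance the pair $x = (1,0)$, $\nu = 1$ gives equal primal and dual values, so both points are optimal for their respective problems.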

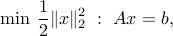

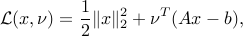

Minimum Euclidean distance problem

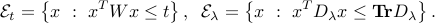

Consider the problem of minimizing the Euclidean distance to a given affine space:

where  ,

,  . We assume that

. We assume that  is full row rank, or equivalently,

is full row rank, or equivalently,  . The Lagrangian is

. The Lagrangian is

and the Lagrange dual function is

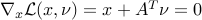

In this example, the dual function can be computed analytically, using the optimality condition  . We obtain

. We obtain  , and

, and

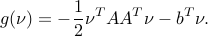

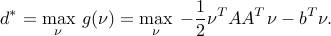

The dual problem expresses as

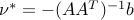

The dual problem can also be solved analytically, since it is unconstrained (the domain of  is the entire space

is the entire space  ). We obtain

). We obtain  , and

, and

We have thus obtained the bound  .

.
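The closed-form expressions for the minimum-distance problem can be checked on a small instance. The sketch below assumes a single equality constraint ($p = 1$), so that $AA^T$ is a positive scalar and no matrix inversion is needed; the data and variable names are illustrative assumptions.

```python
# Single-row instance: A = a^T with a in R^2, so A A^T = ||a||^2 is a scalar.
a = [3.0, 4.0]
b = 10.0

aa = sum(ai * ai for ai in a)  # A A^T


def dual_function(nu):
    """g(nu) = -(1/2) nu (A A^T) nu - b nu, specialized to p = 1."""
    return -0.5 * aa * nu**2 - b * nu


nu_star = -b / aa           # nu* = -(A A^T)^{-1} b
d_star = 0.5 * b * b / aa   # d* = (1/2) b^T (A A^T)^{-1} b

# Candidate primal point x = -A^T nu*, taken from the optimality condition.
x_star = [-ai * nu_star for ai in a]
constraint_value = sum(ai * xi for ai, xi in zip(a, x_star))
primal_value = 0.5 * sum(xi * xi for xi in x_star)

print("d* =", d_star, " primal value at x* =", primal_value)
```

Here the point $x = -A^T\nu^\ast$ is feasible and its objective value equals $d^\ast$; combined with the bound $p^\ast \ge d^\ast$, this shows that the bound is attained on this instance.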

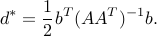

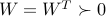

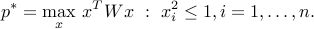

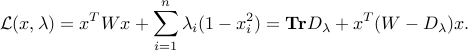

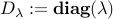

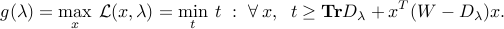

A non-convex boolean problem

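For a given matrix $W = W^T \succ 0$, we consider the problem

$$
p^\ast = \max_x \: x^TWx ~:~ x_i^2 = 1, \quad i=1,\ldots,n.
$$

In this maximization problem, Lagrange duality will provide an upper bound on the problem. This is called a "relaxation", as we go above the true maximum, as if we had relaxed (ignored) constraints.

The Lagrangian writes

$$
\mathcal{L}(x,\nu) = x^TWx + \sum_{i=1}^n \nu_i (1 - x_i^2) = \mathbf{Tr}\, D_\nu + x^T(W - D_\nu)x,
$$

where $D_\nu := \mathbf{diag}(\nu)$.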

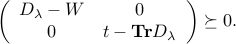

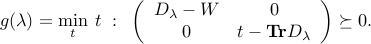

To find the dual function, we need to maximize the Lagrangian with respect to the primal variable  . We express this problem as

. We express this problem as

The last inequality holds if and only if

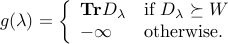

Hence the dual function is the optimal value of an SDP in one variable:

We can solve this problem explicitly:

The dual problem involves minimizing (that is, getting the best upper bound) the dual function over the variable  :

:

The above is an SDP, in variable  . Note that

. Note that  is automatically enforced by the PSD constraint.

is automatically enforced by the PSD constraint.
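The upper bound can be compared with a brute-force solution when $n$ is small. The sketch below assumes a $2 \times 2$ matrix $W$ chosen for illustration, enumerates the four boolean points, and evaluates $\sum_i \nu_i$ for a few hand-picked candidate vectors $\nu$, returning $+\infty$ when $\mathbf{diag}(\nu) - W$ is not PSD. The helper names are hypothetical, and the PSD test relies on the fact that a symmetric $2 \times 2$ matrix is PSD exactly when its trace and determinant are nonnegative.

```python
from itertools import product

W = [[2.0, 1.0], [1.0, 2.0]]  # illustrative symmetric positive definite matrix


def quad_form(M, x):
    """Return x^T M x for a square matrix M given as a list of rows."""
    n = len(x)
    return sum(M[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


# Brute-force primal value: maximize x^T W x over x in {-1, +1}^2.
p_star = max(quad_form(W, x) for x in product([-1.0, 1.0], repeat=2))


def is_psd_2x2(M, tol=1e-12):
    """Symmetric 2x2 matrix is PSD iff trace >= 0 and determinant >= 0."""
    trace = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return trace >= -tol and det >= -tol


def dual_bound(nu):
    """Return sum(nu) if diag(nu) - W is PSD, and +infinity otherwise."""
    diff = [[(nu[i] if i == j else 0.0) - W[i][j] for j in range(2)]
            for i in range(2)]
    return sum(nu) if is_psd_2x2(diff) else float("inf")


candidates = [(3.0, 3.0), (4.0, 2.5), (2.0, 2.0)]
bounds = [dual_bound(nu) for nu in candidates]

print("p* =", p_star, " bounds =", bounds)
```

Every finite value in `bounds` is an upper bound on `p_star`; for this particular $W$ the candidate $\nu = (3,3)$ happens to match the brute-force optimum.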

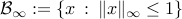

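The Lagrange relaxation of the primal problem can be interpreted geometrically, as follows. For $t > 0$ and $\nu > 0$, consider the ellipsoids

$$
\mathcal{E}_t := \left\{ x ~:~ x^TWx \le t \right\}, \quad \mathcal{E}_\nu := \left\{ x ~:~ x^TD_\nu x \le \mathbf{Tr}\, D_\nu \right\},
$$

with $D_\nu = \mathbf{diag}(\nu)$. The primal problem amounts to finding the smallest $t \ge 0$ for which the ellipsoid $\mathcal{E}_t$ contains the ball $\mathcal{B}_\infty := \{ x ~:~ \|x\|_\infty \le 1 \}$. Note that for every $\nu > 0$, $\mathcal{E}_\nu$ contains the ball $\mathcal{B}_\infty$. To find an upper bound on the problem, we can find the smallest $t$ for which there exists $\nu > 0$ such that $\mathcal{E}_t \supseteq \mathcal{E}_\nu$. The latter condition is precisely $\mathbf{Tr}\, D_\nu \le t$, $D_\nu \succeq W$ (after rescaling $\nu$ if needed, which leaves $\mathcal{E}_\nu$ unchanged).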