Project 1: Colorizing the Prokudin-Gorskii Photo Collection

Project Overview

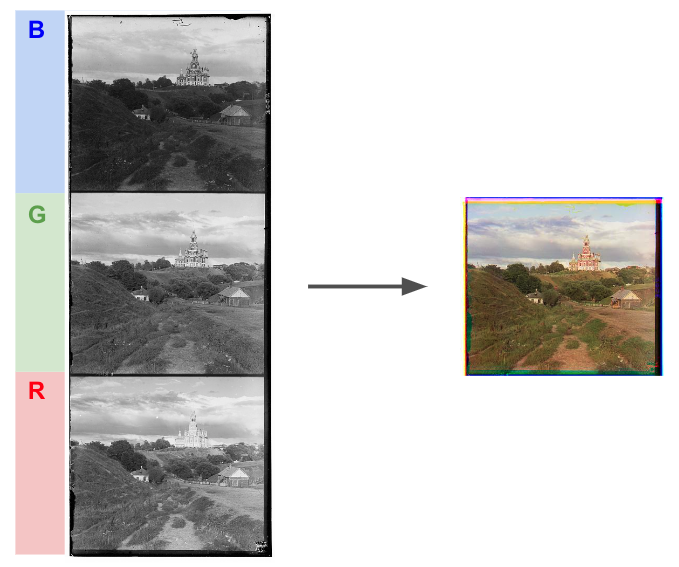

In the early 1900s, Sergey Prokudin-Gorskii traveled the Russian Empire in an attempt to capture color photographs for the Tsar. To achieve this, Prokudin-Gorskii recorded each scene on three glass negatives, each exposed through a different color filter (red, green, and blue). Aligning and overlaying these three negatives therefore produces a color image.

The aim of this project is to take the digitized glass plate images from the Prokudin-Gorskii collection and automatically align them into a single color image. This is achieved by aligning the red and green channels onto the blue channel and overlaying the three channels.
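As a rough sketch of the final overlay step, the snippet below splits a digitized plate into three channels, shifts red and green by their offsets, and stacks the result into an RGB array. It makes three assumptions that this writeup does not state: the plate holds the blue, green, and red exposures top to bottom in equal thirds; offsets are (x, y) pairs as in the results tables; and shifts wrap around the edges via `np.roll`. The helper names `split_plate`, `shift`, and `compose` are hypothetical.

```python
import numpy as np


def split_plate(plate: np.ndarray):
    """Split a grayscale plate into three equal-height channels.

    Assumption (hypothetical layout): exposures are stacked B, G, R from top to bottom.
    """
    h = plate.shape[0] // 3
    return plate[:h], plate[h:2 * h], plate[2 * h:3 * h]


def shift(channel: np.ndarray, offset):
    """Translate a channel by an (x, y) offset; pixels wrap around the edges."""
    dx, dy = offset
    return np.roll(channel, (dy, dx), axis=(0, 1))


def compose(plate: np.ndarray, r_offset, g_offset):
    """Shift R and G onto B and stack them as an H x W x 3 RGB image."""
    b, g, r = split_plate(plate)
    return np.dstack([shift(r, r_offset), shift(g, g_offset), b])


plate = np.arange(12 * 5).reshape(12, 5)  # toy 12 x 5 "plate" -> three 4 x 5 channels
rgb = compose(plate, r_offset=(1, 2), g_offset=(0, 0))
print(rgb.shape)  # (4, 5, 3)
```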


Task 1: Exhaustive Search

The initial approach was to implement a sliding-window algorithm that exhaustively tests every possible displacement of the red and green channels over a predefined window (here, -15px to 15px).

Two scoring metrics were considered for evaluating how closely the shifted red or green channel matched the blue channel:

Sum of Squared Differences (SSD)

![ssd(u,v) = \sum_{(x,y)\in N}{[I(u+x,v+y)-P(x,y)]^{2}}](img/SSD.png)

The SSD is the sum of squared differences between corresponding pixel values in two images (I and P). The lower the SSD between two images, the more similar they are, so the goal is to minimize the SSD.
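Here is a minimal NumPy sketch of the SSD score. `ssd` is a hypothetical helper name. The example also shows a property relevant to choosing between the two metrics: uniformly brightening one image raises the SSD even though the image content is unchanged.

```python
import numpy as np


def ssd(i_img: np.ndarray, p_img: np.ndarray) -> float:
    """Sum of squared per-pixel differences between two equally sized images."""
    diff = i_img.astype(np.float64) - p_img.astype(np.float64)
    return float(np.sum(diff ** 2))


a = np.array([[1.0, 2.0], [3.0, 4.0]])
print(ssd(a, a))        # 0.0: identical images
print(ssd(a, a + 0.5))  # 1.0: same content, uniformly brighter (4 pixels * 0.5**2)
```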


Normalized Cross-Correlation (NCC)

![ncc(u,v) = \frac{\sum_{(x,y)\in N}[I(u+x,v+y)-\bar{I}][P(x,y)-\bar{P}]}{\sqrt{\sum_{(x,y)\in N}[I(u+x,v+y)-\bar{I}]^{2}\sum_{(x,y)\in N}[P(x,y)-\bar{P}]^{2}}}](img/NCC.png)

The NCC measures the similarity of two images I and P using cross-correlation. Larger NCC values indicate higher similarity.

One can think of the NCC as an algorithm that executes the following steps:

1. Flatten each 2D image I and P into one-dimensional vectors I_flat and P_flat.

2. Normalize both I_flat and P_flat by subtracting each vector's mean and dividing by its L2 norm.

3. Take the dot product between I_flat and P_flat. This value is the NCC of I and P.
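The three steps above can be sketched in NumPy as follows. `ncc` is a hypothetical helper name. Returning 0.0 for a constant image is an assumption made here to avoid dividing by zero. The usage lines show why the metric tolerates intensity differences: scaling and offsetting an image leaves its NCC with the original at 1.

```python
import numpy as np


def ncc(i_img: np.ndarray, p_img: np.ndarray) -> float:
    """Normalized cross-correlation of two equally sized images, in [-1, 1]."""
    # Step 1: flatten both images into 1D float vectors (astype makes a copy).
    i_flat = i_img.astype(np.float64).ravel()
    p_flat = p_img.astype(np.float64).ravel()
    # Step 2: normalize by subtracting the mean, then scaling to unit L2 norm.
    i_flat -= i_flat.mean()
    p_flat -= p_flat.mean()
    i_norm, p_norm = np.linalg.norm(i_flat), np.linalg.norm(p_flat)
    if i_norm == 0 or p_norm == 0:
        return 0.0  # assumption: a constant image counts as "no match"
    # Step 3: the dot product of the normalized vectors is the NCC.
    return float(np.dot(i_flat / i_norm, p_flat / p_norm))


x = np.array([[1.0, 2.0], [3.0, 5.0]])
print(ncc(x, 2 * x + 10))  # approximately 1.0: brightness/contrast changes do not matter
print(ncc(x, -x))          # approximately -1.0: inverted intensities
```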

In the end, the NCC scoring metric was chosen because it compares the pattern of intensities rather than raw pixel differences. Because the mean is subtracted and the result is normalized, NCC is unaffected by uniform brightness and contrast differences between channels. Such differences are expected here, since each plate was exposed through a different color filter. The implemented algorithm therefore aligns the red and green channels to the blue channel by testing every displacement within a -15px to 15px window and keeping the one with the highest NCC. Before scoring, roughly 7.5% of each image is cropped from every side to remove noise from the plate borders, so that only the pixels that make up the main image are compared.
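A sketch of the Task 1 search as described above follows. It assumes NumPy, a wrap-around `np.roll` shift, and (x, y) offsets as in the results tables. The helper names (`ncc`, `crop`, `align_exhaustive`) are hypothetical and are not the project's actual code.

```python
import numpy as np


def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Zero-mean, unit-norm dot product of two equally sized images."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a -= a.mean()
    b -= b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return 0.0 if denom == 0 else float(a @ b / denom)


def crop(img: np.ndarray, frac: float = 0.075) -> np.ndarray:
    """Drop about `frac` of the height and width from every side."""
    h, w = img.shape
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]


def align_exhaustive(ref, mov, radius=15, frac=0.075):
    """Return the (x, y) shift of `mov` in [-radius, radius]^2 with the highest NCC."""
    ref_c = crop(ref, frac)
    best, best_score = (0, 0), -np.inf
    for dx in range(-radius, radius + 1):
        for dy in range(-radius, radius + 1):
            shifted = np.roll(mov, (dy, dx), axis=(0, 1))  # wrap-around shift
            score = ncc(ref_c, crop(shifted, frac))
            if score > best_score:
                best, best_score = (dx, dy), score
    return best


rng = np.random.default_rng(0)
blue = rng.random((120, 100))
green = np.roll(blue, (-5, 3), axis=(0, 1))  # synthetic misalignment
print(align_exhaustive(blue, green))  # (-3, 5)
```

Because the synthetic shift is an exact `np.roll`, the correct displacement scores an NCC of essentially 1. Real plates will score lower, but the maximum should still pick out the best alignment.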

The results for the low-resolution images are shown below:

cathedral.jpg

- R Offset: (3, 12)

- G Offset: (2, 5)

- B Offset: (0, 0)

- Time Elapsed: 1.2686s

monastery.jpg

- R Offset: (2, 3)

- G Offset: (2, -3)

- B Offset: (0, 0)

- Time Elapsed: 1.3150s

tobolsk.jpg

- R Offset: (3, 6)

- G Offset: (3, 3)

- B Offset: (0, 0)

- Time Elapsed: 1.2498s

Task 2: Image Pyramid

Although the exhaustive search works for the low-resolution images (~200KB), it fails on the high-resolution images (~70MB): testing every possible displacement on the full image takes far too long. In addition, the -15px to 15px window is too small to find the best alignment, since the required shift can exceed a hundred pixels.
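To put a number on the cost, note that an exhaustive search over [-r, r] in both axes scores (2r + 1)^2 candidate shifts. The sketch below uses r = 178 as an illustrative radius, taken from the largest offset in this project's results (melons.tif). `num_candidates` is a hypothetical helper.

```python
def num_candidates(radius: int) -> int:
    """Number of (x, y) shifts an exhaustive search over [-radius, radius]^2 tests."""
    return (2 * radius + 1) ** 2


print(num_candidates(15))   # 961 shifts for the Task 1 window
print(num_candidates(178))  # 127449 shifts to cover a 178 px offset
print(num_candidates(178) / num_candidates(15))  # about 133x more NCC evaluations
```

Each of those evaluations would also run on a much larger image than in Task 1, which compounds the slowdown.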

To mitigate this issue, we implement an image pyramid algorithm that recursively downscales each image by a factor of 2 until the image's width is less than 100px.
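The pyramid itself can be built as shown below. This writeup does not say how images were downscaled. Averaging each 2 x 2 block is an assumption made here; a library resize such as `skimage.transform.rescale` would serve the same purpose. `downscale2` and `build_pyramid` are hypothetical names.

```python
import numpy as np


def downscale2(img: np.ndarray) -> np.ndarray:
    """Halve height and width by averaging each 2 x 2 block (odd edges are trimmed)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def build_pyramid(img: np.ndarray, min_width: int = 100):
    """Return [full-res, 1/2, 1/4, ...], stopping once the width is below min_width."""
    levels = [img]
    while levels[-1].shape[1] >= min_width:
        levels.append(downscale2(levels[-1]))
    return levels


pyramid = build_pyramid(np.zeros((3000, 3200)))  # hypothetical 3200 px wide channel
print([level.shape[1] for level in pyramid])  # [3200, 1600, 800, 400, 200, 100, 50]
```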

We then apply the sliding-window algorithm from Task 1 to the smallest downscaled image to produce an initial alignment estimate. Once the estimate is found, we move to the next larger image (upscaled by a factor of 2) and run the sliding-window algorithm centered around the previous alignment estimate. This continues until the original, full-resolution image has been aligned.
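A compact sketch of this coarse-to-fine recursion follows. It assumes the window schedule used in this project (a radius of 3px at full resolution, grown by 3px per downsizing) and reuses the same hypothetical assumptions as the earlier sketches: NCC scoring on a 7.5% border crop, wrap-around `np.roll` shifts, and 2 x 2 block averaging for downscaling. All function names are illustrative.

```python
import numpy as np


def ncc(a, b):
    """Zero-mean, unit-norm dot product of two equally sized images."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a -= a.mean()
    b -= b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return 0.0 if denom == 0 else float(a @ b / denom)


def crop(img, frac=0.075):
    """Drop about `frac` of the height and width from every side before scoring."""
    h, w = img.shape
    dh, dw = int(h * frac), int(w * frac)
    return img[dh:h - dh, dw:w - dw]


def downscale2(img):
    """Halve height and width by averaging 2 x 2 blocks."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    return img[:h, :w].reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def search(ref, mov, center, radius):
    """Sliding-window NCC search over (x, y) shifts within `radius` of `center`."""
    ref_c = crop(ref)
    best, best_score = center, -np.inf
    cx, cy = center
    for dx in range(cx - radius, cx + radius + 1):
        for dy in range(cy - radius, cy + radius + 1):
            score = ncc(ref_c, crop(np.roll(mov, (dy, dx), axis=(0, 1))))
            if score > best_score:
                best, best_score = (dx, dy), score
    return best


def pyramid_align(ref, mov, level=0, min_width=100):
    """Coarse-to-fine alignment; radius is 3 px at full resolution, +3 px per level."""
    radius = 3 + 3 * level
    if ref.shape[1] < min_width:
        return search(ref, mov, (0, 0), radius)  # coarsest level: search around zero
    cx, cy = pyramid_align(downscale2(ref), downscale2(mov), level + 1, min_width)
    return search(ref, mov, (2 * cx, 2 * cy), radius)  # refine the doubled estimate


rng = np.random.default_rng(0)
blue = rng.random((400, 400))
green = np.roll(blue, (-40, 24), axis=(0, 1))  # synthetic misalignment
print(pyramid_align(blue, green))  # (-24, 40)
```

Here the 400px-wide test image passes through widths 400, 200, 100, and 50. The full-resolution search therefore only scores 7 x 7 = 49 shifts, even though the recovered offset is 40px.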

- The window was set to (-3px, 3px) for the original image and widened by 3px each time the image was downsized (i.e. the first downsized image ran the sliding-window algorithm on a (-6px, 6px) window, the second downsized image on a (-9px, 9px) window, etc.).

- The algorithm could be made more efficient by widening the window less aggressively at each level, but these parameters were sufficient to align most examples in a reasonable amount of time.

- The estimated alignment was multiplied by 2 before being passed to the next larger image (since the image is upscaled by a factor of 2, the displacement must be scaled by 2 as well).
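The window schedule and the doubling rule together bound how far the pyramid can move a channel. One pixel at the level downsized k times corresponds to 2^k pixels at full resolution. That level searches a radius of 3 + 3k px, so over L levels the largest recoverable offset is the sum for k = 0 to L-1 of (3 + 3k) * 2^k. A small sketch with a hypothetical helper:

```python
def max_reach(levels: int, base: int = 3, step: int = 3) -> int:
    """Largest full-resolution offset reachable with `levels` pyramid levels.

    Level k (downsized k times) searches a radius of base + step * k pixels,
    and each of its pixels spans 2**k full-resolution pixels.
    """
    return sum((base + step * k) * 2 ** k for k in range(levels))


for levels in range(1, 8):
    print(levels, max_reach(levels))  # 3, 15, 51, 147, 387, 963, 2307
```

Consider a hypothetical 3200px-wide channel, which has 7 levels under the width < 100px rule. It can be shifted by up to 2307px, well beyond the largest offsets in the results.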

The results for all images using the image-pyramid algorithm are shown below:

cathedral.jpg

- R Offset: (3, 12)

- G Offset: (2, 5)

- B Offset: (0, 0)

- Time Elapsed: 0.2945s

monastery.jpg

- R Offset: (2, 3)

- G Offset: (2, -3)

- B Offset: (0, 0)

- Time Elapsed: 0.2933s

tobolsk.jpg

- R Offset: (3, 6)

- G Offset: (3, 3)

- B Offset: (0, 0)

- Time Elapsed: 0.2981s

three_generations.tif

- R Offset: (11, 112)

- G Offset: (14, 53)

- B Offset: (0, 0)

- Time Elapsed: 32.0443s

melons.tif

- R Offset: (13, 178)

- G Offset: (10, 82)

- B Offset: (0, 0)

- Time Elapsed: 32.8161s

onion_church.tif

- R Offset: (36, 108)

- G Offset: (26, 51)

- B Offset: (0, 0)

- Time Elapsed: 32.1898s

train.tif

- R Offset: (32, 87)

- G Offset: (6, 43)

- B Offset: (0, 0)

- Time Elapsed: 31.9313s

church.tif

- R Offset: (-4, 58)

- G Offset: (4, 25)

- B Offset: (0, 0)

- Time Elapsed: 29.8983s

icon.tif

- R Offset: (23, 89)

- G Offset: (17, 41)

- B Offset: (0, 0)

- Time Elapsed: 32.1540s

sculpture.tif

- R Offset: (-27, 140)

- G Offset: (-11, 33)

- B Offset: (0, 0)

- Time Elapsed: 33.4713s

self_portrait.tif

- R Offset: (36, 176)

- G Offset: (29, 79)

- B Offset: (0, 0)

- Time Elapsed: 33.4049s

harvesters.tif

- R Offset: (13, 124)

- G Offset: (17, 60)

- B Offset: (0, 0)

- Time Elapsed: 31.7961s

lady.tif

- R Offset: (11, 117)

- G Offset: (9, 55)

- B Offset: (0, 0)

- Time Elapsed: 31.9350s

emir.tif (aligned to blue)

- R Offset: (-1041, 171)

- G Offset: (24, 49)

- B Offset: (0, 0)

- Time Elapsed: 30.1437s

emir.tif (aligned to green)

- R Offset: (17, 57)

- G Offset: (0, 0)

- B Offset: (-24, -49)

- Time Elapsed: 30.4310s
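The G and B offsets in the two emir.tif runs are consistent with each other. Offsets are pure translations, so switching the reference channel amounts to subtracting the new reference's offset from every channel. Under that rule, the blue-referenced G offset (24, 49) becomes a green-referenced B offset of (-24, -49). A small sketch with a hypothetical helper `rereference`:

```python
def rereference(offsets: dict, new_ref: str) -> dict:
    """Re-express (x, y) offsets relative to the channel `new_ref`.

    `offsets` maps channel name -> (dx, dy) that aligns it to the current reference.
    Translations add, so subtracting new_ref's own offset changes the reference.
    """
    rx, ry = offsets[new_ref]
    return {ch: (dx - rx, dy - ry) for ch, (dx, dy) in offsets.items()}


blue_ref = {"G": (24, 49), "B": (0, 0)}  # emir.tif G and B offsets, aligned to blue
print(rereference(blue_ref, "G"))  # {'G': (0, 0), 'B': (-24, -49)}
```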

Additional Examples

Below are some additional aligned examples from the Prokudin-Gorskii collection that were not included with the assignment.

piony.tif

- R Offset: (-6, 104)

- G Offset: (3, 51)

- B Offset: (0, 0)

- Time Elapsed: 33.3134s

sitting_boy.tif

- R Offset: (-11, 103)

- G Offset: (-13, 45)

- B Offset: (0, 0)

- Time Elapsed: 35.6745s

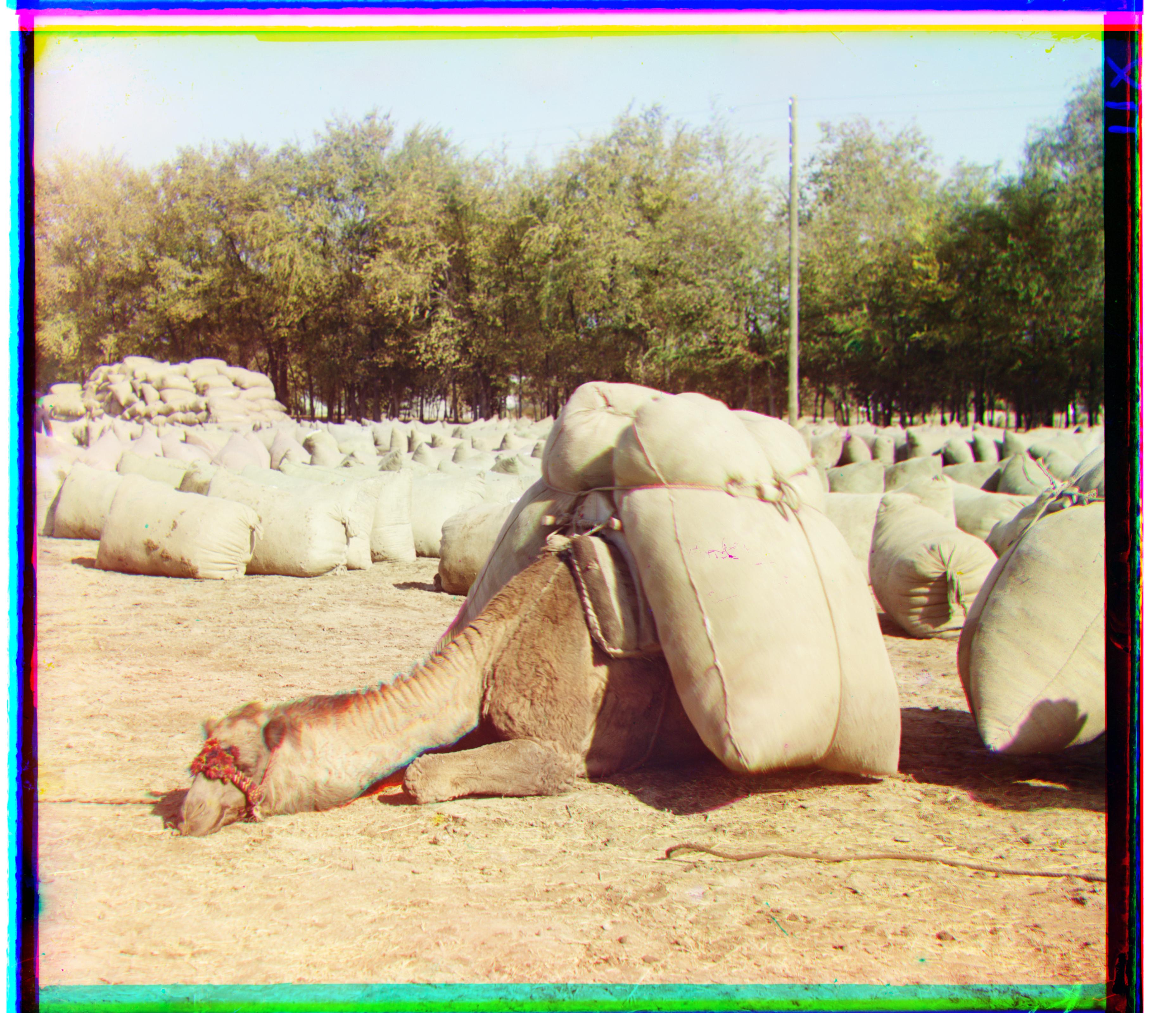

camel.tif

- R Offset: (-20, 98)

- G Offset: (-4, 46)

- B Offset: (0, 0)

- Time Elapsed: 32.5295s

lake.tif

- R Offset: (-14, 111)

- G Offset: (-7, 30)

- B Offset: (0, 0)

- Time Elapsed: 36.2856s

cathedral.tif

- R Offset: (-5, 108)

- G Offset: (2, 49)

- B Offset: (0, 0)

- Time Elapsed: 31.8459s

candlestick.tif

- R Offset: (-6, 106)

- G Offset: (2, 48)

- B Offset: (0, 0)

- Time Elapsed: 33.2487s