Matrices


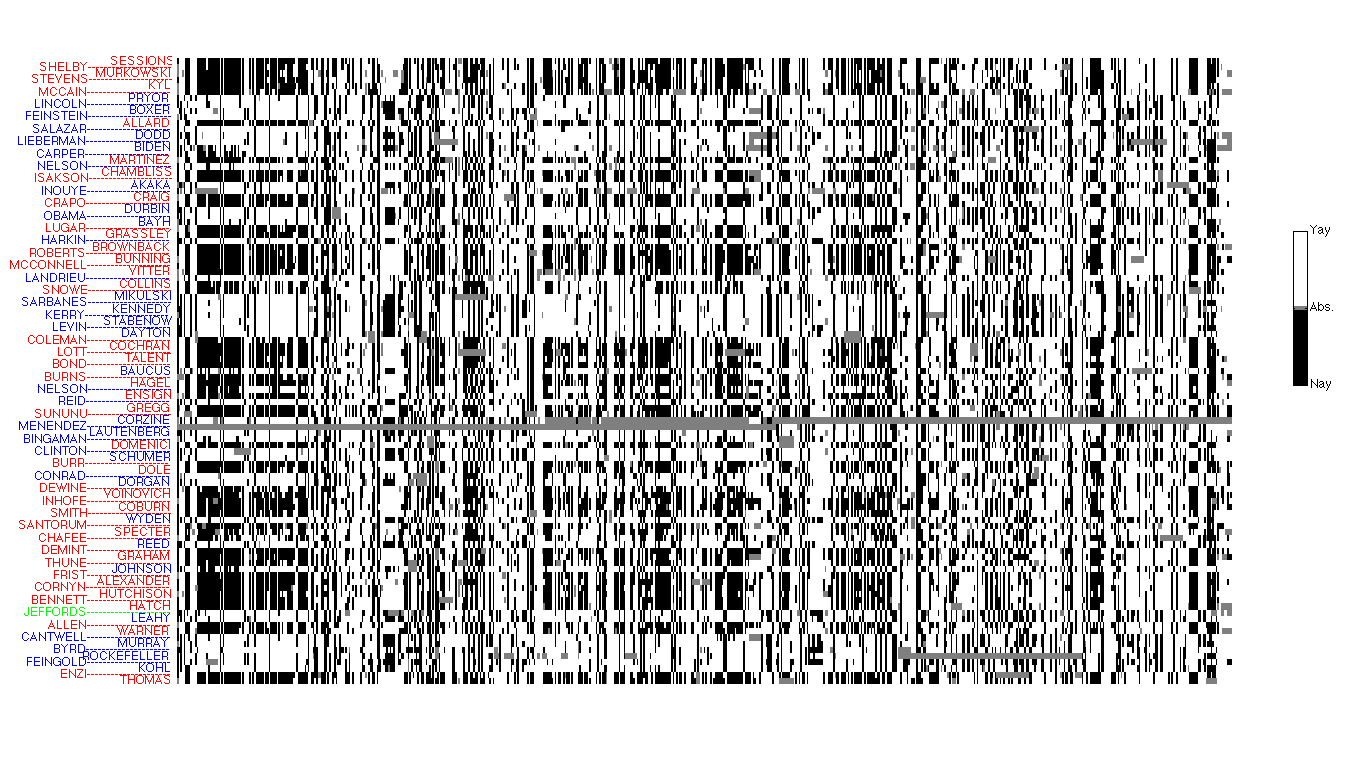

Matrices are collections of vectors of the same size, organized in a rectangular array. The image shows the matrix of votes of the 2004-2006 US Senate. (Source.) Via the matrix-vector product, we can interpret matrices as linear maps (vector-valued functions), which act from an "input" space to an "output" space and preserve addition and scaling of the inputs. Linear maps arise everywhere in engineering, mostly via a process known as linearization (of non-linear maps). Matrix norms are then useful to measure how much the map amplifies or attenuates the norm of specific inputs.
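To make the linear-map view concrete, here is a minimal sketch in plain Python. The helper names `matvec`, `norm`, and `gain`, and the example matrix `A`, are illustrative assumptions and not part of the original notes. It checks that the matrix-vector product preserves addition and scaling, and shows that the same map can amplify one input while attenuating another.

```python
from math import sqrt

def matvec(A, x):
    """Matrix-vector product; A is a list of rows, x a list of numbers."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]

def norm(x):
    """Euclidean norm of a vector."""
    return sqrt(sum(v * v for v in x))

def gain(A, x):
    """Ratio of output norm to input norm for one specific nonzero input x."""
    return norm(matvec(A, x)) / norm(x)

# Hypothetical 2x3 matrix: a linear map from a 3-dimensional input space
# to a 2-dimensional output space.
A = [[2.0, 0.0, 1.0],
     [0.0, 0.5, 0.0]]

x = [1.0, 2.0, 3.0]
y = [0.0, -1.0, 4.0]
alpha = 3.0

# Linearity: A(x + alpha*y) equals A x + alpha * (A y).
lhs = matvec(A, [xi + alpha * yi for xi, yi in zip(x, y)])
rhs = [u + alpha * v for u, v in zip(matvec(A, x), matvec(A, y))]
print(lhs, rhs)

# The same map amplifies some inputs and attenuates others.
print(gain(A, [1.0, 0.0, 0.0]))  # norm grows by a factor of 2
print(gain(A, [0.0, 1.0, 0.0]))  # norm shrinks by a factor of 2
```

A matrix norm summarizes such gains over all inputs; the largest possible value of `gain(A, x)` over nonzero `x` is one common choice.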

We review a number of prominent classes of matrices. Orthogonal matrices are an important special case, as they generalize the notion of rotation in ordinary two- or three-dimensional space: they preserve (Euclidean) norms and angles. The QR decomposition, which proves useful in solving linear equations and related problems, lets us decompose any matrix as a two-term product involving an orthogonal matrix and a triangular matrix.
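As an illustration of the QR decomposition, the sketch below uses the modified Gram-Schmidt procedure in plain Python. This is only one way to compute the factors, chosen here for brevity; numerical libraries typically rely on Householder reflections instead. The function names and the example matrix are assumptions, and the code assumes a square matrix with linearly independent columns.

```python
from math import sqrt, isclose

def transpose(X):
    return [list(row) for row in zip(*X)]

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))] for i in range(len(X))]

def qr_gram_schmidt(A):
    """Return (Q, R) with A = Q R, Q orthogonal, R upper triangular.

    Sketch only: assumes A is square with linearly independent columns.
    """
    n = len(A)
    cols = transpose(A)                # columns of A
    R = [[0.0] * n for _ in range(n)]
    q_cols = []                        # orthonormal columns built so far
    for j in range(n):
        v = cols[j][:]
        for k, q in enumerate(q_cols):
            # Remove the component of v along the earlier direction q.
            R[k][j] = sum(qi * vi for qi, vi in zip(q, v))
            v = [vi - R[k][j] * qi for vi, qi in zip(v, q)]
        R[j][j] = sqrt(sum(vi * vi for vi in v))
        q_cols.append([vi / R[j][j] for vi in v])
    return transpose(q_cols), R

# Hypothetical invertible 3x3 example.
A = [[2.0, 1.0, 0.0],
     [1.0, 3.0, 1.0],
     [0.0, 1.0, 4.0]]
Q, R = qr_gram_schmidt(A)

QtQ = matmul(transpose(Q), Q)          # close to the identity matrix
QR = matmul(Q, R)                      # close to A

# Orthogonal matrices preserve Euclidean norms.
x = [1.0, -2.0, 0.5]
Qx = [sum(q * xj for q, xj in zip(row, x)) for row in Q]
norm_x = sqrt(sum(v * v for v in x))
norm_Qx = sqrt(sum(v * v for v in Qx))
```

Up to rounding error, `QtQ` is the identity, `QR` reproduces `A`, and `norm_Qx` equals `norm_x`.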