Linear regression

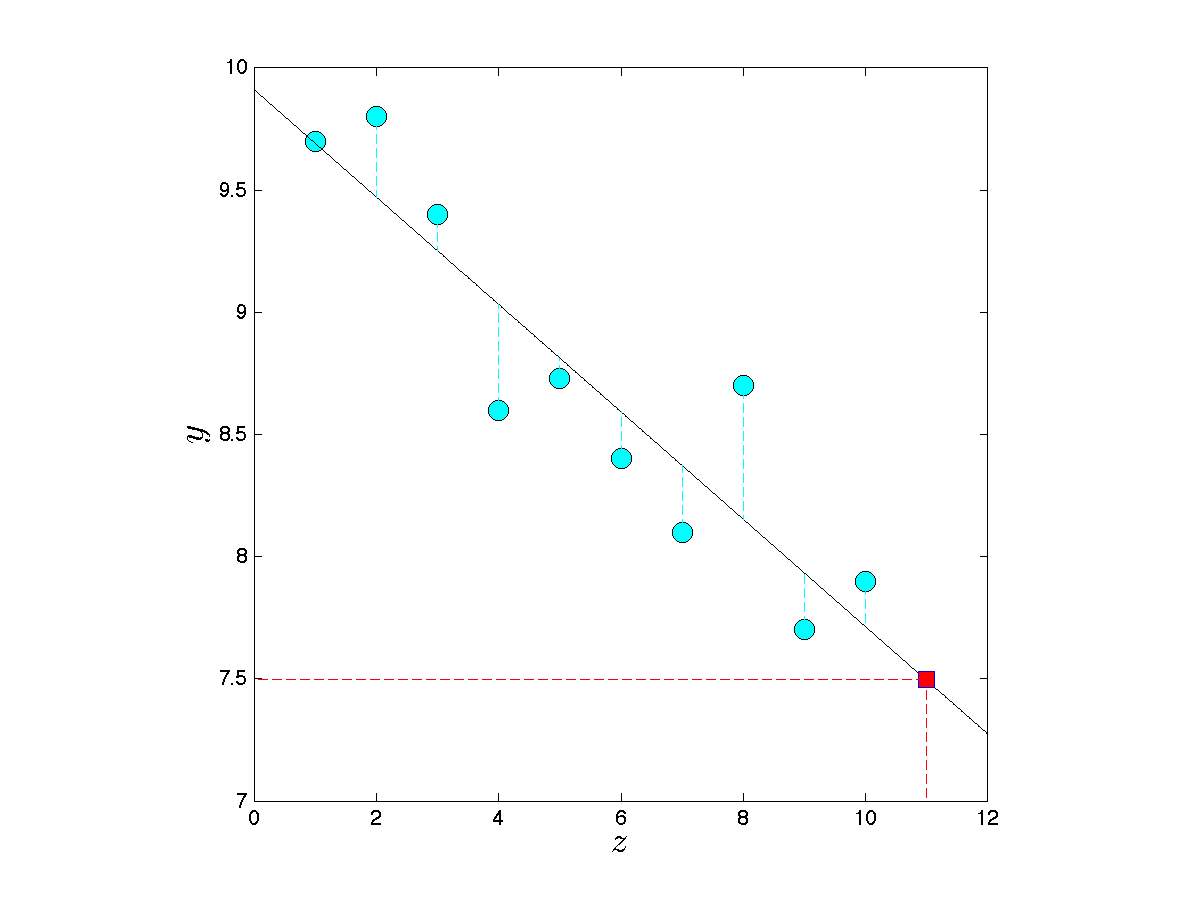

A popular example of least-squares problem arises in the context of fitting a line through points. This is illustrated below in two dimensions.

The linear regression approach can be extended to multiple dimensions, that is, to problems where the $x$-axis in the above problem contains more than one dimension. It can also be extended to the problem of fitting non-linear curves.

The data points are $(x_i, y_i)$, $i = 1, \ldots, m$. The $x_i$'s contain the prices of the item, and the $y_i$'s the average number of customers who buy the item. A generic line has equation $y = \theta_1 x + \theta_2$, where $\theta = (\theta_1, \theta_2)$ contains the decision variables. The quality of the fit of a generic line is measured via the sum of the squares of the error in the component $y$ (blue dotted lines). Thus, the best least-squares fit is obtained via the least-squares problem

$$
\min_{\theta} \; \sum_{i=1}^{m} \left( y_i - \theta_1 x_i - \theta_2 \right)^2 .
$$
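As a rough illustration, the line fit can be sketched in a few lines of Python. This is a minimal sketch, not the text's own method: it assumes the line is written as y = theta1 * x + theta2, uses the standard closed-form solution for a one-variable fit (slope equal to the covariance of x and y divided by the variance of x), and runs on made-up price and customer numbers. The names `fit_line` and `sum_squared_errors` are hypothetical.

```python
def sum_squared_errors(theta1, theta2, xs, ys):
    """Sum of squared vertical errors between the points and the line."""
    return sum((y - theta1 * x - theta2) ** 2 for x, y in zip(xs, ys))


def fit_line(xs, ys):
    """Return (theta1, theta2) minimizing the sum of squared errors.

    Uses the closed-form solution for a single input variable.
    """
    m = len(xs)
    x_mean = sum(xs) / m
    y_mean = sum(ys) / m
    # Spread of x around its mean, and co-variation of x with y.
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are equal; the best line is not unique")
    theta1 = sxy / sxx                  # slope
    theta2 = y_mean - theta1 * x_mean   # intercept
    return theta1, theta2


# Made-up data for illustration only: prices and average number of buyers.
prices = [1.0, 2.0, 3.0, 4.0]
buyers = [9.0, 7.5, 4.5, 3.0]

theta1, theta2 = fit_line(prices, buyers)
print("slope:", theta1, "intercept:", theta2)
print("sum of squared errors:", sum_squared_errors(theta1, theta2, prices, buyers))
```

Any other choice of slope or intercept gives a sum of squared errors at least as large as the fitted one, which is a quick way to sanity-check the result on small data sets.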